In the Ecosystm Predicts: Building an Agile & Resilient Organisation: Top 5 Trends in 2024, Principal Advisor Darian Bird said, “The emergence of Generative AI combined with the maturing of deepfake technology will make it possible for malicious agents to create personalised voice and video attacks.” Darian highlighted that this democratisation of phishing, facilitated by professional-sounding prose in various languages and tones, poses a significant threat to potential victims who rely on misspellings or oddly worded appeals to detect fraud. As we see more of these attacks and social engineering attempts, it is important to improve defence mechanisms and increase awareness.

Understanding Deepfake Technology

The term Deepfake is a combination of the words ‘deep learning’ and ‘fake’. Deepfakes are AI-generated media, typically in the form of images, videos, or audio recordings. These synthetic content pieces are designed to appear genuine, often manipulating faces and voices in a highly realistic manner. Deepfake technology has gained the spotlight due to its potential for creating convincing yet fraudulent content that blurs the line between real and fake.

Deepfake algorithms are powered by Generative Adversarial Networks (GANs) and continuously enhance synthetic content to closely resemble real data. Through iterative training on extensive datasets, these algorithms refine features such as facial expressions and voice inflections, ensuring a seamless emulation of authentic characteristics.
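For readers who want the adversarial idea in symbols, the standard GAN formulation pits a generator G, which turns random noise z into synthetic samples, against a discriminator D, which estimates the probability that a sample is real. The expression below is the general GAN training objective as usually stated in the literature; it is offered as background and is not a description of any specific deepfake tool.

```latex
\min_G \max_D \; V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}(x)}\bigl[\log D(x)\bigr]
  + \mathbb{E}_{z \sim p_z(z)}\bigl[\log\bigl(1 - D(G(z))\bigr)\bigr]
```

The discriminator is trained to increase V by telling real from generated samples, while the generator is trained to decrease it. That tug of war is the iterative refinement described above.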

Deepfakes Becoming Increasingly Convincing

Hyper-realistic deepfakes, undetectable to the human eye and ear, have become a huge threat to the financial and technology sectors. Deepfake technology has become highly convincing, blurring the line between real and fake content. One of the early examples of a successful deepfake fraud was when a UK-based energy company lost USD 243k through a deepfake audio scam in 2019, where scammers mimicked the voice of their CEO to authorise an illegal fund transfer.

Deepfakes have evolved from audio simulations to highly convincing video manipulations where faces and expressions are altered in real-time, making it hard to distinguish between real and fake content. In 2022, for instance, a deepfake video of Elon Musk was used in a crypto scam that resulted in a loss of about USD 2 million for US consumers. This year, a multinational company in Hong Kong lost over USD 25 million when an employee was tricked into sending money to fraudulent accounts after a deepfake video call by what appeared to be his colleagues.

Regulatory Responses to Deepfakes

Countries worldwide are responding to the challenges posed by deepfake technology through regulations and awareness campaigns.

- Singapore’s Online Criminal Harms Act, which will come into effect in 2024, will empower authorities to order individuals and Internet service providers to remove or block criminal content, including deepfakes used for malicious purposes.

- The UAE National Programme for Artificial Intelligence released a deepfake guide to educate the public about both harmful and beneficial applications of this technology. The guide categorises fake content into shallow and deep fakes, providing methods to detect deepfakes using AI-based tools, with a focus on promoting positive uses of advanced technologies.

- The proposed EU AI Act aims to regulate deepfakes by imposing transparency requirements on creators, requiring them to disclose when content has been artificially generated or manipulated.

- South Korea passed a law in 2020 banning the distribution of harmful deepfakes. Offenders could be sentenced to up to five years in prison or fined up to USD 43k.

- In the US, states like California and Virginia have passed laws against deepfake pornography, while federal bills like the DEEP FAKES Accountability Act aim to mandate disclosure and counter malicious use, highlighting the diverse global efforts to address the multifaceted challenges of deepfake regulation.

Detecting and Protecting Against Deepfakes

Detecting deepfakes becomes increasingly challenging as the technology advances. Several methods are needed – sometimes in conjunction – to detect a convincing deepfake. These include visual inspection that focuses on anomalies, metadata analysis to examine clues about authenticity, forensic analysis for pattern and audio examination, and machine learning that uses algorithms trained on real and fake video datasets to classify new videos.
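As a rough illustration of using several detection methods in conjunction, the sketch below combines per-method suspicion scores into a single verdict. Everything in it is an assumption made for illustration: the method names, the weights, the thresholds, and the idea that each method produces a score between 0 (looks real) and 1 (looks fake). It does not perform detection itself.

```python
from dataclasses import dataclass

# Hypothetical weights: how much each detection method contributes to the
# overall verdict. These values are illustrative, not calibrated.
WEIGHTS = {
    "visual": 0.2,
    "metadata": 0.2,
    "forensic": 0.3,
    "ml_classifier": 0.3,
}


@dataclass
class Verdict:
    score: float    # weighted suspicion score in [0, 1]
    flagged: bool   # True when the media should go to manual review
    reasons: list   # methods whose individual score was very high


def assess_media(signals, threshold=0.5, single_method_alarm=0.9):
    """Combine per-method suspicion scores (0 = looks real, 1 = looks fake)."""
    for name, value in signals.items():
        if name not in WEIGHTS:
            raise ValueError(f"unknown detection method: {name}")
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"score for {name} must be between 0 and 1")

    # Average only over the methods that were actually run.
    total_weight = sum(WEIGHTS[name] for name in signals)
    if total_weight == 0:
        raise ValueError("at least one detection signal is required")
    score = sum(WEIGHTS[n] * v for n, v in signals.items()) / total_weight

    # A single very confident method is enough to flag the media.
    reasons = sorted(n for n, v in signals.items() if v >= single_method_alarm)
    flagged = score >= threshold or bool(reasons)
    return Verdict(score=round(score, 3), flagged=flagged, reasons=reasons)
```

The design choice worth noting is the second rule: one very confident method can flag the media even when the weighted average is low, which reflects the point that no single method is reliable on its own.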

However, identifying deepfakes requires sophisticated technology that many organisations may not have access to. This heightens the need for robust cybersecurity measures. Deepfakes have driven an increase in convincing and successful phishing – and spear phishing – attacks, and cyber leaders need to double down on cyber practices.

Defences can no longer depend on spotting these attacks. They require a multi-pronged approach that combines cyber technologies, incident response, and user education.

Preventing access to users. By employing anti-spoofing measures organisations can safeguard their email addresses from exploitation by fraudulent actors. Simultaneously, minimising access to readily available information, particularly on websites and social media, reduces the chance of spear-phishing attempts. This includes educating employees about the implications of sharing personal information and clear digital footprint policies. Implementing email filtering mechanisms, whether at the server or device level, helps intercept suspicious emails; and the filtering rules need to be constantly evaluated using techniques such as IP filtering and attachment analysis.
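The filtering rules mentioned above (IP filtering and attachment analysis) can be sketched in a few lines. This is a minimal illustration, not a mail gateway: the blocked network is a reserved documentation range, the list of risky extensions is an assumption, and the function name `evaluate_email` is hypothetical.

```python
import ipaddress
from pathlib import PurePosixPath

# Hypothetical rule set; a real deployment would load these from the mail
# gateway's configuration and review them regularly.
BLOCKED_NETWORKS = [ipaddress.ip_network("203.0.113.0/24")]  # documentation range
RISKY_EXTENSIONS = {".exe", ".js", ".scr", ".vbs", ".docm", ".xlsm"}


def evaluate_email(sender_ip, attachment_names):
    """Return a list of reasons to quarantine the message (empty = deliver)."""
    reasons = []

    # IP filtering: is the sending address inside any blocked network?
    address = ipaddress.ip_address(sender_ip)
    if any(address in network for network in BLOCKED_NETWORKS):
        reasons.append(f"sender IP {sender_ip} is on the blocklist")

    # Attachment analysis: look at every suffix, so a double extension such
    # as "invoice.pdf.exe" is still caught.
    for name in attachment_names:
        suffixes = [s.lower() for s in PurePosixPath(name).suffixes]
        risky = [s for s in suffixes if s in RISKY_EXTENSIONS]
        if risky:
            reasons.append(f"attachment {name} has risky extension {risky[-1]}")
    return reasons
```

Returning the reasons rather than a simple yes or no makes it easier to evaluate the rules over time, since each quarantined message records which rule fired.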

Employee awareness and reporting. There are many ways that organisations can increase awareness in employees starting from regular training sessions to attack simulations. The usefulness of these sessions is often questioned as sometimes they are merely aimed at ticking off a compliance box. Security leaders should aim to make it easier for employees to recognise these attacks by familiarising them with standard processes and implementing verification measures for important email requests. This should be strengthened by a culture of reporting without any individual blame.

Securing against malware. Malware is often distributed through these attacks, making it crucial to ensure devices are well-configured and equipped with effective endpoint defences to prevent malware installation, even if users inadvertently click on suspicious links. Specific defences may include disabling macros and limiting administrator privileges to prevent accidental malware installation. Strengthening authentication and authorisation processes is also important, with measures such as multi-factor authentication, password managers, and alternative authentication methods like biometrics or smart cards. Zero trust and least privilege policies help protect organisation data and assets.
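To make multi-factor authentication a little more concrete, the sketch below shows how one common second factor, a time-based one-time password, is computed. It follows the published HOTP (RFC 4226) and TOTP (RFC 6238) algorithms using only the Python standard library. The `verify` helper and its tolerance of one previous time step are assumptions for illustration, and a production system should rely on a maintained authentication library rather than this sketch.

```python
import hashlib
import hmac
import struct
import time


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) using HMAC-SHA-1."""
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = digest[-1] & 0x0F  # dynamic truncation offset
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, at_time=None, step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238): HOTP over a time counter."""
    now = time.time() if at_time is None else at_time
    counter = max(0, int(now // step))  # clamp so the counter is never negative
    return hotp(secret, counter, digits)


def verify(secret: bytes, submitted: str, at_time=None, step: int = 30) -> bool:
    """Accept the current code or the one from the previous time step."""
    now = time.time() if at_time is None else at_time
    candidates = (totp(secret, now - drift * step, step) for drift in (0, 1))
    # compare_digest avoids leaking information through timing differences
    return any(hmac.compare_digest(c, submitted) for c in candidates)
```

Because the code depends on a shared secret and the current time, a stolen password alone is not enough to pass this check.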

Detection and Response. A robust security logging system is crucial, whether through off-the-shelf monitoring tools, managed services, or dedicated monitoring teams. What is more important is that the monitoring capabilities are regularly updated. Additionally, a well-defined incident response plan can swiftly mitigate harm after an incident. This requires clear procedures for various incident types and designated personnel for executing them, such as initiating password resets or removing malware. Organisations should ensure that users are informed about reporting procedures, considering potential communication challenges in the event of device compromise.
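One simple way to capture "clear procedures for various incident types and designated personnel" is a lookup table of playbooks. The sketch below is illustrative only: the incident types, owning roles, and steps are placeholders, and the names `Playbook`, `PLAYBOOKS`, and `respond` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Playbook:
    owner: str    # role responsible for executing the steps
    steps: tuple  # ordered actions to take


# Hypothetical playbooks; the roles and steps are placeholders.
PLAYBOOKS = {
    "credential_phishing": Playbook(
        owner="identity team",
        steps=(
            "reset the user's password",
            "revoke active sessions",
            "review recent sign-ins",
        ),
    ),
    "malware": Playbook(
        owner="endpoint team",
        steps=(
            "isolate the device",
            "remove the malware",
            "restore from a known-good backup",
        ),
    ),
}

# Fallback when the incident type is not recognised: escalate, do not guess.
DEFAULT = Playbook(
    owner="security operations",
    steps=("triage and classify the incident",),
)


def respond(incident_type):
    """Look up who acts and what they do for a reported incident type."""
    return PLAYBOOKS.get(incident_type, DEFAULT)
```

For example, `respond("malware").owner` returns `"endpoint team"`, while an unrecognised type falls back to the security operations triage step.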

Conclusion

The rise of deepfakes has brought forward the need for a collaborative approach. Policymakers, technology companies, and the public must work together to address the challenges posed by deepfakes. This collaboration is crucial for making better detection technologies, establishing stronger laws, and raising awareness on media literacy.

Ecosystm research reveals a stark reality: 75% of technology leaders in Financial Services anticipate data breaches.

Given the sector’s regulatory environment, data breaches carry substantial financial implications, emphasising the critical importance of giving precedence to cybersecurity. This is compelling a fresh cyber strategy focused on early threat detection and reduction of attack impact.

Read on to find out how tech leaders are building a culture of cyber-resilience, re-evaluating their cyber policies, and adopting technologies that keep them one step ahead of their adversaries.


As an industry, the tech sector tends to jump on keywords and terms – and sometimes reshapes their meaning and intention. “Sustainable” is one of those terms. Technology vendors are selling (allegedly!) “sustainable software/hardware/services/solutions” – in fact, the focus on “green” or “zero carbon” or “recycled” or “circular economy” is increasing exponentially at the moment. And that is good news – as I mentioned in my previous post, we need to significantly reduce greenhouse gas emissions if we want a future for our kids. But there is a significant disconnect between the way tech vendors use the word “sustainable” and the way it is used in boardrooms and senior management teams of their clients.

Defining Sustainability

For organisations, Sustainability is a broad business goal – in fact for many, it is the over-arching goal. A sustainable organisation operates in a way that balances economic, social, and environmental (ESG) considerations. Rather than focusing solely on profits, a sustainable organisation aims to meet the needs of the present without compromising the ability of future generations to meet their own needs.

This is what building a “Sustainable Organisation” typically involves:

Economic Sustainability. The organisation must be financially stable and operate in a manner that ensures long-term economic viability. It doesn’t just focus on short-term profits but invests in long-term growth and resilience.

Social Sustainability. This involves the organisation’s responsibility to its employees, stakeholders, and the wider community. A sustainable organisation will promote fair labour practices, invest in employee well-being, foster diversity and inclusion, and engage in ethical decision-making. It often involves community engagement and initiatives that support societal growth and well-being.

Environmental Sustainability. This facet includes the responsible use of natural resources and minimising negative impacts on the environment. A sustainable organisation seeks to reduce its carbon footprint, minimise waste, enhance energy efficiency, and often supports or initiates activities that promote environmental conservation.

Governance and Ethical Considerations. Sustainable organisations tend to have transparent and responsible governance. They follow ethical business practices, comply with laws and regulations, and foster a culture of integrity and accountability.

Security and Resilience. Sustainable organisations have the ability to thwart bad actors – and in the situation that they are breached, to recover from these breaches quickly and safely. Sustainable organisations can survive cybersecurity incidents and continue to operate when breaches occur, with the least impact.

Long-Term Focus. Sustainability often requires a long-term perspective. By looking beyond immediate gains and considering the long-term impact of decisions, a sustainable organisation can better align its strategies with broader societal goals.

Stakeholder Engagement. Understanding and addressing the needs and concerns of different stakeholders (including employees, customers, suppliers, communities, and shareholders) is key to sustainability. This includes open communication and collaboration with these groups to foster relationships based on trust and mutual benefit.

Adaptation and Innovation. The organisation is not static and recognises the need for continual improvement and adaptation. This might include innovation in products, services, or processes to meet evolving sustainability standards and societal expectations.

Alignment with the United Nations’ Sustainable Development Goals (UNSDGs). Many sustainable organisations align their strategies and operations with the UNSDGs which provide a global framework for addressing sustainability challenges.

Organisations Appreciate Precise Messaging

A sustainable organisation is one that integrates economic, social, and environmental considerations into all aspects of its operations. It goes beyond mere compliance with laws to actively pursue positive impacts on people and the planet, maintaining a balance that ensures long-term success and resilience.

These factors are all top of mind when business leaders, boards and government agencies use the word “sustainable”. Helping organisations meet their emission reduction targets is a good starting point – but it is a long way from everything businesses need to become sustainable organisations.

Tech providers need to reconsider their use of the term “sustainable” – unless their solution or service is helping organisations meet all of the features outlined above. Using specific language would be favoured by most customers – telling them how the solution will help them reduce greenhouse gas emissions, meet compliance requirements for CO2 and/or waste reduction, and save money on electricity and/or management costs – these are all likely to get the sale over the line faster than a broad “sustainability” messaging will.

The ongoing Ecosystm State of ESG Study throws up some interesting data about organisations in Asia Pacific.

We see ESG more firmly entrenched in organisational strategies; organisations leading with Social and Governance initiatives that are easily integrated within their CSR policies; and supply chain partners driving change.


It is not hyperbole to state that AI is on the cusp of having significant implications on society, business, economies, governments, individuals, cultures, politics, the arts, manufacturing, customer experience… I think you get the idea! We cannot overstate the impact that AI will have on society. In times gone by, businesses tested ideas, new products, or services with small customer segments before they went live. But with AI we are all part of this experiment on the impacts of AI on society – its benefits, use cases, weaknesses, and threats.

What seemed preposterous just six months ago is not only possible but EASY! Do you want a virtual version of yourself, a friend, your CEO, or your deceased family member? Sure – just feed the data. Will succession planning be more about recording all conversations and interactions with an executive so their avatar can make the decisions when they leave? Why not? How about you turn the thousands of hours of recorded customer conversations with your contact centre team into a virtual contact centre team? Your head of product can present in multiple countries in multiple languages, tailored to the customer segments, industries, geographies, or business needs at the same moment.

AI has the potential to create digital clones of your employees, it can spread fake news as easily as real news, it can be used for deception as easily as for benefit. Is your organisation prepared for the social, personal, cultural, and emotional impacts of AI? Do you know how AI will evolve in your organisation?

When we focus on the future of AI, we often interview AI leaders, business leaders, futurists, and analysts. I haven’t seen enough focus on psychologists, sociologists, historians, academics, counselors, or even regulators! The Internet and social media changed the world more than we ever imagined – at this stage, it looks like these two were just a rehearsal for the real show – Artificial Intelligence.

Lack of Government or Industry Regulation Means You Need to Self-Regulate

These rapid developments – and the notable silence from governments, lawmakers, and regulators – make the requirement for an AI Ethics Policy for your organisation urgent! Even if you have one, it probably needs updating, as the scenarios that AI can operate within are growing and changing literally every day.

- For example, your customer service team might want to create a virtual customer service agent from a real person. What is the policy on this? How will it impact the person?

- Your marketing team might be using ChatGPT or Bard for content creation. Do you have a policy specifically for the creation and use of content using assets your business does not own?

- What data is acceptable to be ingested by a public Large Language Model (LLM)? Are you governing data at creation and publishing to ensure these policies are met?

- With the impending public launch of Microsoft’s Co-Pilot AI service, what data can be ingested by Co-Pilot? How are you governing the distribution of the insights that come out of that capability?

If policies are not put in place, data tagged, and staff trained before using a tool such as Co-Pilot, your business is likely to break some privacy or employment laws – on the very first day!
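As a small illustration of what "data tagged" can mean in practice, the sketch below checks a document's classification tag before it is allowed to reach an AI tool. The tag names, the tool names, and the policy table are all assumptions made for this example, and the function `can_ingest` is hypothetical. The point is the deny-by-default behaviour.

```python
from dataclasses import dataclass

# Hypothetical classification labels and policy: which tags may be sent
# to which kind of AI tool.
ALLOWED_TAGS = {
    "public_llm": {"public"},
    "internal_assistant": {"public", "internal"},
}


@dataclass
class Document:
    name: str
    tag: str = ""  # classification applied when the document was created


def can_ingest(document, destination):
    """Allow ingestion only when the document's tag is approved for the tool."""
    allowed = ALLOWED_TAGS.get(destination)
    if allowed is None:
        return False  # unknown tool: deny by default
    return document.tag in allowed  # untagged documents are denied too
```

With this policy, a press release tagged `public` can go to a public LLM, while an untagged or `confidential` file is refused everywhere until someone classifies it.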

What do the LLMs Say About AI Ethics Policies?

So where do you go when looking for an AI Ethics policy? ChatGPT and Bard of course! I asked the two for a modern AI Ethics policy.

You can read what they generated in the graphic below.

I personally prefer the ChatGPT4 version as it is more prescriptive. At the same time, I would argue that MOST of the AI tools that your business has access to today don’t meet all of these principles. And while they are tools and the ethics should dictate the way the tools are used, with AI you cannot always separate the process and outcome from the tool.

For example, a tool that is inherently designed to learn an employee’s character, style, or mannerisms cannot be unbiased if it is based on a biased opinion (and humans have biases!).

LLMs take data, content, and insights created by others, and give it to their customers to reuse. Are you happy with your website being used as a tool to train a startup on the opportunities in the markets and customers you serve?

By making content public, you acknowledge the risk of others using it. But at least they visited your website or app to consume it. Not anymore…

A Policy is Useless if it Sits on a Shelf

Your AI ethics policy needs to be more than a published document. It should be the beginning of a conversation across the entire organisation about the use of AI. Your employees need to be trained in the policy. It needs to be part of the culture of the business – particularly as low and no-code capabilities push these AI tools, practices, and capabilities into the hands of many of your employees.

Nearly every business leader I interview mentions that their organisation is an “intelligent, data-led, business.” What is the role of AI in driving this intelligent business? If being data-driven and analytical is in the DNA of your organisation, soon AI will also be at the heart of your business. You might think you can delay your investments to get it right – but your competitors may be ahead of you.

So, as you jump head-first into the AI pool, start to create, improve and/or socialise your AI Ethics Policy. It should guide your investments, protect your brand, empower your employees, and keep your business resilient and compliant with legacy and new legislation and regulations.

With organisations facing an infrastructure, application, and end-point sprawl, the attack surface continues to grow, as does the number of malicious attacks. Cyber breaches are also becoming exceedingly real for consumers, as they see breaches and leaks in brands and services they interact with regularly. 2023 will see CISOs take charge of their cyber environment – going beyond a checklist.

Here are the top 5 trends for Cybersecurity & Compliance for 2023 according to Ecosystm analysts Alan Hesketh, Alea Fairchild, Andrew Milroy, and Sash Mukherjee.

- An Escalating Cybercrime Flood Will Drive Proactive Protection

- Incident Detection and Response Will Be the Main Focus

- Organisations Will Choose Visibility Over More Cyber Tools

- Regulations Will Increase the Risk of Collecting and Storing Data

- Cyber Risk Will Include a Focus on Enterprise Operational Resilience

Read on for more details.


Ecosystm and Bitstamp conducted an invitation-only Executive ThinkTank at the Point Zero Forum in Zurich. A select group of regulators and senior leaders from financial institutions from across the globe came together to share their insights and experiences on Decentralised Finance (DeFi), innovations in the industry, and the outlook for the future.

Here are the 5 key takeaways from the ThinkTank.

- Regulators: Perception vs. Reality. Regulators are generally perceived as having a bias against innovations in the Financial Services industry. In reality, they want to encourage innovation, and the industry players welcome these regulations as guardrails against unscrupulous practices.

- Institutional Players’ Interest in DeFi. Many institutional players are interested in DeFi to enable the smooth running of processes and products and to reduce costs. It is being evaluated in areas such as lending, borrowing, and insurance.

- Evolving Traditional Regulations. In a DeFi world, participants and actors are connected by technology. Hence, setting the framework and imposing good practices when building projects will be critical. Regulations need to find the right balance between flexibility and rigidity.

- The Importance of a Digital Asset Listing Framework. There has been a long debate on who should be the gatekeeper of digital asset listings. From a regulator’s perspective, the liability of projects needs to shift from the consumer to the project and the gatekeeper.

- A Simplified Disclosure Document. Major players are willing to work with regulators to develop a simple disclosure document that describes the project for end-users or investors.

Read below to find out more.


It’s been a while since I lived in Zurich. It was about this time of year when I first visited the city that I instantly fell in love with. Beautiful blue skies and if you’re lucky enough, you can see the snow-capped mountains from Lake Zurich. It’s hard not to be instantly drawn to this small city of approximately 1.4 million people, which punches well above its weight class. One out of every eleven jobs in Switzerland is in Zurich. The financial sector generates around a quarter of the city’s economic output and provides approximately 59,000 full time equivalent jobs – accounting for 16% of all employment in the city.

Between 21-23 June, Zurich will also be home to the Point Zero Forum – an exclusive invite-only, in-person gathering of select global leaders, founders and investors with the purpose of developing new ideas on emerging concepts such as decentralised finance (DeFi), Web 3.0, embedded finance and sustainable finance; driving investment activity; and bringing together public and private sector leaders to brainstorm on regulatory requirements.

The Future of DeFi

Zug is a little canton outside of Zurich and is famously known as “Crypto Valley”. When I lived in Zurich, Zug was the home of many of the country’s leading hedge funds as Zug’s low tax, business friendly environment and fantastic quality of life attracted many of the world’s leading fund managers and companies. Today the same can be said about crypto companies setting up shop in Zug. And crypto ecosystems are expanding exponentially.

However, with the increase in the global adoption of cryptocurrency, what role will the regulators play in aligning regulation without stifling innovation? How can Crypto Valley and Singapore play a role in defining the role regulation will play in a DeFi world?

DeFi is moving fast and we are seeing an explosion of new ideas and positive outcomes. So, what can we expect from all of this? Well, that is what I will discuss with a group of regulators and industry players in a round table discussion on How an Adaptive and Centralised Regulatory Approach can Shape a Protected Future of Finance at the Point Zero Forum. We will explore the role of regulators in a fast-moving industry that has recently seen some horror stories and how industry participants are willing to work with regulators to meet in the middle to build an exciting and sometimes unpredictable future. How do we regulate something in the future? I am personally looking forward to the knowledge sharing.

For the industry to thrive and innovate, we need both regulators and industry players to work together and agree to a working framework that helps deliver innovation and growth by creating new technology and jobs. But we also need to keep an eye on the increasing number of scams in the industry. It is true to say that we have seen our fair share of them in recent months. The total collapse of TerraUSD and Luna and the collapse of the wider crypto market that saw an estimated loss of USD 500 billion has really spooked global markets.

So is cryptocurrency here for good and will it be widely adopted globally? How will regulators see the recent collapse of Luna and view regulations moving forward? We have reached an interesting point with cryptocurrencies and digital assets in general. Is it time to reflect on the current market or should we push forward and try to find a workable middle ground?

Let’s find out. Watch this space for my follow-up post after the Point Zero Forum event!

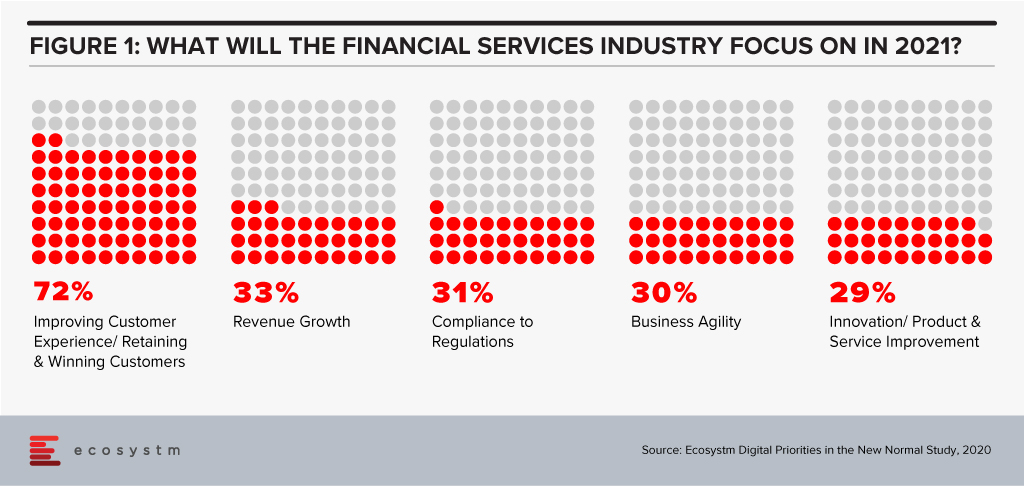

The disruption that we faced in 2020 has created a new appetite for adoption of technology and digital in a shorter period. Crises often present opportunities – and the FinTech and Financial Services industries benefitted from the high adoption of digital financial services and eCommerce. In 2021, there will be several drivers to the transformation of the Financial Services industry – the rise of the gig economy will give access to a larger talent pool; the challenges of government aid disbursement will be mitigated through tech adoption; compliance will come sharply back into focus after a year of ad-hoc technology deployments; and social and environmental awareness will create a greater appetite for green financing. However, the overarching driver will be the heightened focus on the individual consumer (Figure 1).

2021 will finally see consumers at the core of the digital financial ecosystem.

Ecosystm Advisors Dr. Alea Fairchild, Amit Gupta and Dheeraj Chowdhry present the top 5 Ecosystm predictions for FinTech in 2021 – written in collaboration with the Singapore FinTech Festival. This is a summary of the predictions; the full report (including the implications) is available to download for free on the Ecosystm platform.

The Top 5 FinTech Trends for 2021

#1 The New Decade of the ‘Empowered’ Consumer Will Propel Green Finance and Sustainability Considerations Beyond Regulators and Corporates

We have seen multiple countries set regulations and implement Emissions Trading Systems (ETS) and 2021 will see Environmental, Social and Governance (ESG) considerations growing in importance in the investment decisions for asset managers and hedge funds. Efforts for ESG standards for risk measurement will benefit and support that effort.

The primary driver will not only be regulatory frameworks – rather it will be further propelled by consumer preferences. The increased interest in climate change, sustainable business investments and ESG metrics will be an integral part of society’s response in assisting the global transition to a greener and more humane economy in the post-COVID era. Individuals and consumers will demand FinTech solutions that empower them to be more environmentally and socially responsible. The performance of companies on their ESG ratings will become a key consideration for consumers making investment decisions. We will see corporate focus on ESG become a mainstay as a result – driven by regulatory frameworks and the consumer’s desire to place significant importance on ESG as an investment criterion.

#2 Consumers Will Truly Be ‘Front and Centre’ in Reshaping the Financial Services Digital Ecosystems

Consumers will also shape the market because of the way they exercise their choices when it comes to transactional finance. They will opt for more discrete solutions – like microfinance, micro-insurances, multiple digital wallets and so on. Even long-standing customers will no longer be completely loyal to their main financial institutions. This will in effect take away traditional business from established financial institutions. Digital transformation will need to go beyond just a digital Customer Experience and will go hand-in-hand with digital offerings driven by consumer choice.

As a result, we will see the emergence of stronger digital ecosystems and partnerships between traditional financial institutions and like-minded FinTechs. As an example, platforms such as the API Exchange (APIX) will get a significant boost and play a crucial role in this emerging collaborative ecosystem. APIX was launched by AFIN, a non-profit organisation established in 2018 by the ASEAN Bankers Association (ABA), International Finance Corporation (IFC), a member of the World Bank Group, and the Monetary Authority of Singapore (MAS). Such platforms will create a level playing field across all tiers of the Financial Services innovation ecosystem by allowing industry participants to Discover, Design and rapidly Deploy innovative digital solutions and offerings.

#3 APIfication of Banking Will Become Mainstream

2020 was the year when banks accepted FinTechs into their product and services offerings – 2021 will see FinTechs more established and their technology offerings becoming more sophisticated and consumer-led. These cutting-edge apps will have financial institutions seeking to establish partnerships with them, licensing their technologies and leveraging them to benefit and expand their customer base. This is already being called the “APIfication” of banking. There will be more emphasis on the partnerships with regulated licensed banking entities in 2021, to gain access to the underlying financial products and services for a seamless customer experience.

This will see the growth of financial institutions’ dependence on third-party developers that have access to – and knowledge of – the financial institutions’ business models and data. But this also gives them an opportunity to leverage existing FinTech innovations, especially for enhanced customer engagement capabilities (Prediction #2).

#4 AI & Automation Will Proliferate in Back-Office Operations

From quicker loan origination to heightened surveillance against fraud and money laundering, financial institutions will push their focus on back-office automation using machine learning, AI and RPA tools (Figure 3). This is not only to improve efficiency and lower risks, but to further enhance the customer experience. AI is already being rolled out in customer-facing operations, but banks will actively be consolidating and automating their mid and back-office procedures for efficiency and automation transition in the post COVID-19 environment. This includes using AI for automating credit operations, policy making and data audits and using RPA for reducing the introduction of errors in datasets and processes.

There is enormous economic pressure to deliver cost savings and reduce risks through the adoption of technology. Financial Services leaders believe that insights gathered from compliance should help other areas of the business, and this requires a completely different mindset. Given the manual and semi-automated nature of current AML compliance, human-only efforts slow down processing timelines and impact business productivity. KYC will leverage AI and real-time environmental data (current accounts, mortgage payment status) and integration of third-party data to make the knowledge richer and timelier in this adaptive economic environment. This will make lending risk assessment more relevant.

#5 Driven by Post Pandemic Recovery, Collaboration Will Shape FinTech Regulation

Travel corridors across border controls have started to push the boundaries. Just as countries develop new processes and policies based on shared learning from other countries, FinTech regulators will collaborate to harmonise regulations that are similar in nature. These collaborative regulators will accelerate FinTech proliferation and osmosis i.e. proliferation of FinTechs into geographies with lower digital adoption.

Data corridors between countries will be the other outcome of this collaboration of FinTech regulators. Sharing of data in a regulated environment will advance data science and machine learning to new heights assisting credit models, AI, and innovations in general. The resulting ‘borderless nature’ of FinTech and the acceleration of policy convergence across several previously siloed regulators will result in new digital innovations. These Trusted Data Corridors between economies will be further driven by the desire for progressive governments to boost the Digital Economy in order to help the post-pandemic recovery.