The White House has directed federal agencies to conduct risk assessments on AI tools and to appoint officers, including Chief Artificial Intelligence Officers (CAIOs), for oversight. This directive, led by the Office of Management and Budget (OMB), aims to modernise government AI adoption and promote responsible use. Agencies must integrate AI oversight into their core functions, ensuring safety, security, and ethical use. CAIOs will be tasked with assessing AI’s impact on civil rights and market competition. Agencies have until December 1, 2024, to address non-compliant AI uses, a deadline that underlines the need for swift implementation.

How will this impact global AI adoption? Ecosystm analysts share their views.

The Larger Impact: Setting a Global Benchmark

This sets a potential global benchmark for AI governance, with the U.S. leading the way in responsible AI use, inspiring other nations to follow suit. The emphasis on transparency and accountability could boost public trust in AI applications worldwide.

The appointment of CAIOs across U.S. federal agencies marks a significant shift towards ethical AI development and application. Through mandated risk management practices, such as independent evaluations and real-world testing, the government recognises AI’s profound impact on rights, safety, and societal norms.

This isn’t merely a regulatory action; it’s a foundational shift towards embedding ethical and responsible AI at the heart of government operations. The balance struck between fostering innovation and ensuring public safety and rights protection is particularly noteworthy.

This initiative reflects a deep understanding of AI’s dual-edged nature – the potential to significantly benefit society, countered by its risks.

The Larger Impact: Blueprint for Risk Management

In what is likely a world first, AI is bringing together technology, legal, and policy leaders in a concerted effort to put guardrails around a new technology before a major disaster materialises. In the past few months alone, these efforts have ranged from technology firms providing a form of legal assurance for the use of their products (for example, Microsoft’s Customer Copyright Commitment), to parliaments ratifying AI regulatory laws (such as the EU AI Act), to the current directive installing AI accountability in US federal agencies.

It is widely accepted that AI needs risk management to be responsible and acceptable – installing an accountable C-suite role is another major step in AI risk mitigation.

This is an interesting move for three reasons:

- The balance of innovation versus governance and risk management.

- Accountability mandates for each agency’s use of AI in a public and transparent manner.

- Transparency mandates regarding AI use cases and technologies, including those that may impact safety or rights.

Impact on the Private Sector: Greater Accountability

AI governance is one of the rare occasions where government action moves faster than the private sector. While the immediate pressure is now on US federal agencies (and there are 438 of them) to identify and appoint CAIOs, the announcement sends a clear signal to the private sector.

Following hot on the heels of recent steps towards AI legislation, it puts AI governance straight into the boardroom. The air is getting very thin for enterprises still in denial that AI governance has become strategically important. And unlike the CFC ban in the Eighties (the Montreal Protocol likely set the record for concerted global action), this time the technology providers are fully on board.

There’s no excuse for delaying the acceleration of AI governance and establishing accountability for AI within organisations.

Impact on Tech Providers: More Engagement Opportunities

Technology vendors are poised to benefit from the medium to long-term acceleration of AI investment, especially those based in the U.S., given government agencies’ preferences for local sourcing.

In the short term, our advice to technology vendors and service partners is to actively engage with CAIOs in client agencies to identify existing AI usage in their tools and platforms, as well as algorithms implemented by consultants and service partners.

Once AI guardrails are established within agencies, tech providers and service partners can expedite investments by determining which of their platforms, tools, or capabilities comply with specific guardrails and which do not.

Impact on SE Asia: Promoting a Digital Innovation Hub

By 2030, Southeast Asia is poised to emerge as the world’s fourth-largest economy – much of that growth will be propelled by the adoption of AI and other emerging technologies.

The projected economic growth presents both challenges and opportunities, emphasising the urgency for regional nations to enhance their AI governance frameworks and stay competitive with international standards. This initiative highlights the critical role of AI integration for private sector businesses in Southeast Asia, urging organisations to proactively address AI’s regulatory and ethical complexities. Furthermore, it has the potential to stimulate cross-border collaborations in AI governance and innovation, bridging the U.S., Southeast Asian nations, and the private sector.

It underscores the global interconnectedness of AI policy and its impact on regional economies and business practices.

By leading with a strategic approach to AI, the U.S. sets an example for Southeast Asia and the global business community to reevaluate their AI strategies, fostering a more unified and responsible global AI ecosystem.

The Risks

U.S. government agencies face the challenge of sourcing experts in technology, legal frameworks, risk management, privacy regulations, civil rights, and security, while also identifying ongoing AI initiatives. Establishing a unified definition of AI and cataloguing processes involving ML, algorithms, or GenAI is essential, given AI’s integral role in organisational processes over the past two decades.

However, there’s a risk that focusing on AI governance may hinder adoption.

The CAIO role should prioritise establishing AI guardrails to expedite compliant initiatives while flagging those needing oversight. While these guardrails will facilitate “safe AI” investments, the documentation process could potentially delay progress.

The initiative also echoes a 20th-century mindset for a 21st-century dilemma. Hiring leaders and forming teams feels like a traditional approach. Today, organisations can increase productivity by considering AI and automation as initial solutions. Investing more time upfront to discover initiatives, set guardrails, and implement AI decision-making processes could significantly improve CAIO effectiveness from the outset.

As AI evolves rapidly, the emergence of GenAI technologies such as GPT models has sparked a novel and critical role: prompt engineering. This specialised function is becoming indispensable in optimising the interaction between humans and AI, serving as a bridge that translates human intentions into prompts that guide AI to produce desired outcomes. In this Ecosystm Insight, I will explore prompt engineering: its significance, its responsibilities, and its impact on harnessing AI’s full potential.

Understanding Prompt Engineering

Prompt engineering is an interdisciplinary role that combines elements of linguistics, psychology, computer science, and creative writing. It involves crafting inputs (prompts) that are specifically designed to elicit the most accurate, relevant, and contextually appropriate responses from AI models. This process requires a nuanced understanding of how different models process information, as well as creativity and strategic thinking to manipulate these inputs for optimal results.

As GenAI applications become more integrated across sectors – ranging from creative industries to technical fields – the ability to effectively communicate with AI systems has become a cornerstone of leveraging AI capabilities. Prompt engineers play a crucial role in this scenario, refining the way we interact with AI to enhance productivity, foster innovation, and create solutions that were previously unimaginable.

The Art and Science of Crafting Prompts

Prompt engineering is as much an art as it is a science. It demands a balance between technical understanding of AI models and the creative flair to engage these models in producing novel content. A well-crafted prompt can be the difference between an AI generating generic, irrelevant content and producing work that is insightful, innovative, and tailored to specific needs.

Key responsibilities in prompt engineering include:

- Prompt Optimisation. Fine-tuning prompts to achieve the highest quality output from AI models. This involves understanding the intricacies of model behaviour and leveraging this knowledge to guide the AI towards desired responses.

- Performance Testing and Iteration. Continuously evaluating the effectiveness of different prompts through systematic testing, analysing outcomes, and refining strategies based on empirical data.

- Cross-Functional Collaboration. Engaging with a diverse team of professionals, including data scientists, AI researchers, and domain experts, to ensure that prompts are aligned with project goals and leverage domain-specific knowledge effectively.

- Documentation and Knowledge Sharing. Developing comprehensive guidelines, best practices, and training materials to standardise prompt engineering methodologies within an organisation, facilitating knowledge transfer and consistency in AI interactions.
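
The performance testing and iteration responsibility above can be illustrated with a small harness that runs several prompt variants through a model and ranks them by a score. This is a minimal sketch rather than an established method: `fake_model` is a hypothetical stand-in for a real GenAI call, and scoring by keyword coverage is an assumed, deliberately simple metric.

```python
from typing import Callable, Dict, List, Tuple


def keyword_score(response: str, required_keywords: List[str]) -> float:
    """Fraction of required keywords found in the response (case-insensitive)."""
    if not required_keywords:
        return 0.0
    text = response.lower()
    hits = sum(1 for kw in required_keywords if kw.lower() in text)
    return hits / len(required_keywords)


def rank_prompts(
    prompts: Dict[str, str],
    model: Callable[[str], str],
    required_keywords: List[str],
) -> List[Tuple[str, float]]:
    """Run every prompt variant through the model and sort by score, best first."""
    results = []
    for name, prompt in prompts.items():
        response = model(prompt)
        results.append((name, keyword_score(response, required_keywords)))
    return sorted(results, key=lambda item: item[1], reverse=True)


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for a real model call; it simply echoes the prompt."""
    return f"Response to: {prompt}"


variants = {
    "vague": "Write about our product.",
    "specific": "Write a summary of our product covering pricing, security and support.",
}
ranking = rank_prompts(variants, fake_model, ["pricing", "security", "support"])
```

In practice the scoring function would be replaced by whatever quality measure the team agrees on, and the ranking would be recorded for each iteration so that changes to prompts can be compared over time.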

The Strategic Importance of Prompt Engineering

Effective prompt engineering can significantly enhance the efficiency and outcomes of AI projects. By reducing the need for extensive trial and error, prompt engineers help streamline the development process, saving time and resources. Moreover, their work is vital in mitigating biases and errors in AI-generated content, contributing to the development of responsible and ethical AI solutions.

As AI technologies continue to advance, the role of the prompt engineer will evolve, incorporating new insights from research and practice. The ability to dynamically interact with AI, guiding its creative and analytical processes through precisely engineered prompts, will be a key differentiator in the success of AI applications across industries.

Want to Hire a Prompt Engineer?

Here is a sample job description for a prompt engineer if you think that your organisation will benefit from the role.

Conclusion

Prompt engineering represents a crucial evolution in the field of AI, addressing the gap between human intention and machine-generated output. As we continue to explore the boundaries of what AI can achieve, the demand for skilled prompt engineers – who can navigate the complex interplay between technology and human language – will grow. Their work not only enhances the practical applications of AI but also pushes the frontier of human-machine collaboration, making them indispensable in the modern AI ecosystem.

“AI Guardrails” are often used not only to get AI programs on track, but also to accelerate AI investments. Projects and programs that fall within the guardrails should be easy to approve, govern, and manage – whereas those outside of the guardrails require further review by a governance team or approval body. The concept of guardrails is familiar to many tech businesses and is often applied in areas such as cybersecurity, digital initiatives, data analytics, governance, and management.

While guidance on implementing guardrails is common, organisations often leave the task of defining their specifics, including their components and functionalities, to their AI and data teams. To assist with this, Ecosystm has surveyed some leading AI users among our customers to get their insights on the guardrails that can provide added value.

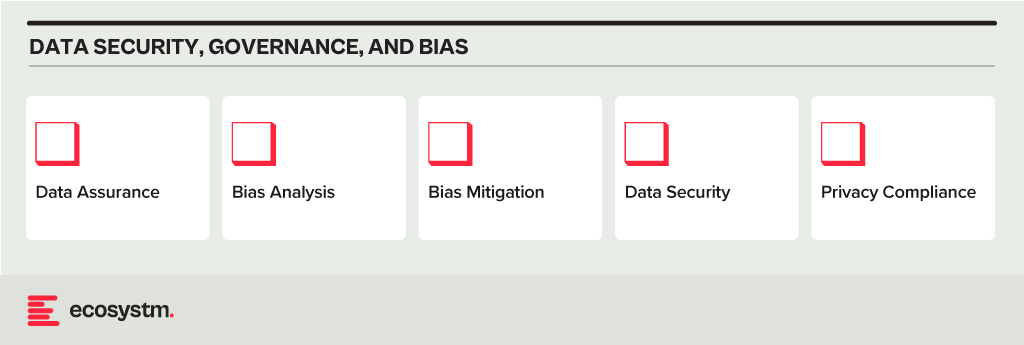

Data Security, Governance, and Bias

- Data Assurance. Has the organisation implemented robust data collection and processing procedures to ensure data accuracy, completeness, and relevance for the purpose of the AI model? This includes addressing issues like missing values, inconsistencies, and outliers.

- Bias Analysis. Does the organisation analyse training data for potential biases – demographic, cultural and so on – that could lead to unfair or discriminatory outputs?

- Bias Mitigation. Is the organisation implementing techniques like debiasing algorithms and diverse data augmentation to mitigate bias in model training?

- Data Security. Does the organisation use strong data security measures to protect sensitive information used in training and running AI models?

- Privacy Compliance. Is the AI opportunity compliant with relevant data privacy regulations (country and industry-specific as well as international standards) when collecting, storing, and utilising data?
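
As an illustration of the Data Assurance question above, the following minimal sketch reports the share of missing values and flags outliers in a single numeric column. The 1.5 × interquartile-range rule and the function name are assumptions chosen for the example, not a prescribed standard.

```python
from statistics import quantiles
from typing import Dict, List, Optional


def data_assurance_report(
    values: List[Optional[float]], outlier_factor: float = 1.5
) -> Dict[str, object]:
    """Summarise missing values and IQR-based outliers for one numeric column."""
    present = [v for v in values if v is not None]
    missing_ratio = 1 - len(present) / len(values) if values else 0.0

    outliers: List[float] = []
    if len(present) >= 2:
        # Quartiles of the observed values; the fences follow the common IQR rule.
        q1, _, q3 = quantiles(present, n=4)
        spread = q3 - q1
        low, high = q1 - outlier_factor * spread, q3 + outlier_factor * spread
        outliers = [v for v in present if v < low or v > high]

    return {"missing_ratio": missing_ratio, "outliers": outliers}


sample = [10, 12, None, 11, 13, 12, 11, None, 10, 13, 12, 95]
report = data_assurance_report(sample)
```

A report like this could feed a guardrail threshold, for example requiring review when the missing ratio or the number of outliers exceeds an agreed limit.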

Model Development and Explainability

- Explainable AI. Does the organisation use explainable AI (XAI) techniques to understand and explain how its AI models reach their decisions, fostering trust and transparency?

- Fair Algorithms. Are algorithms and models designed with fairness in mind, considering factors like equal opportunity and non-discrimination?

- Rigorous Testing. Does the organisation conduct thorough testing and validation of AI models before deployment, ensuring they perform as intended, are robust to unexpected inputs, and avoid generating harmful outputs?

AI Deployment and Monitoring

- Oversight Accountability. Has the organisation established clear roles and responsibilities for human oversight throughout the AI lifecycle, ensuring human control over critical decisions and mitigation of potential harm?

- Continuous Monitoring. Are there mechanisms to continuously monitor AI systems for performance, bias drift, and unintended consequences, addressing any issues promptly?

- Robust Safety. Can the organisation ensure AI systems are robust and safe, able to handle errors or unexpected situations without causing harm? This includes thorough testing and validation of AI models under diverse conditions before deployment.

- Transparency Disclosure. Is the organisation transparent with stakeholders about AI use, including its limitations, potential risks, and how decisions made by the system are reached?
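
The Continuous Monitoring question above mentions bias drift. One way to picture it, as a sketch under stated assumptions, is to compare the gap in positive-outcome rates between groups at deployment with the gap in a recent window. The metric, the tolerance of 0.1, and the function names are illustrative assumptions.

```python
from typing import Dict, List, Tuple

Decision = Tuple[str, bool]  # (group label, whether the outcome was positive)


def positive_rate_gap(decisions: List[Decision]) -> float:
    """Largest difference in positive-outcome rate between any two groups."""
    totals: Dict[str, int] = {}
    positives: Dict[str, int] = {}
    for group, positive in decisions:
        totals[group] = totals.get(group, 0) + 1
        positives[group] = positives.get(group, 0) + int(positive)
    rates = [positives[g] / totals[g] for g in totals]
    return max(rates) - min(rates) if rates else 0.0


def bias_drift_alert(
    baseline: List[Decision], current: List[Decision], tolerance: float = 0.1
) -> bool:
    """True when the gap has widened by more than the tolerance since baseline."""
    return positive_rate_gap(current) - positive_rate_gap(baseline) > tolerance


baseline = [("A", True), ("A", True), ("A", False), ("A", False),
            ("B", True), ("B", True), ("B", False), ("B", False)]
current = [("A", True), ("A", True), ("A", True), ("A", False),
           ("B", True), ("B", False), ("B", False), ("B", False)]
alert = bias_drift_alert(baseline, current)
```

An alert of this kind would not settle whether the system is unfair; it only signals that the people accountable for oversight should take a closer look.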

Other AI Considerations

- Ethical Guidelines. Has the organisation developed and adhered to ethical principles for AI development and use, considering areas like privacy, fairness, accountability, and transparency?

- Legal Compliance. Has the organisation created mechanisms to stay updated on and compliant with relevant legal and regulatory frameworks governing AI development and deployment?

- Public Engagement. What mechanisms are in place to encourage open discussion and engage with the public regarding the use of AI, addressing concerns and building trust?

- Social Responsibility. Has the organisation considered the environmental and social impact of AI systems, including energy consumption, ecological footprint, and potential societal consequences?

Implementing these guardrails requires a comprehensive approach that includes policy formulation, technical measures, and ongoing oversight. It might take a little longer to set up this capability, but in the mid to longer term, it will allow organisations to accelerate AI implementations and drive a culture of responsible AI use and deployment.
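
The idea that initiatives inside the guardrails are approved quickly, while those outside go to a governance body, can be sketched as a simple routing function. The guardrail names below are taken from the checklist above; the route labels and the all-or-nothing rule are assumptions made for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class GuardrailDecision:
    route: str                                   # "fast_track" or "governance_review"
    unmet: List[str] = field(default_factory=list)


def assess_initiative(answers: Dict[str, bool]) -> GuardrailDecision:
    """Fast-track an initiative only if every guardrail question is answered yes."""
    unmet = sorted(name for name, satisfied in answers.items() if not satisfied)
    if unmet:
        return GuardrailDecision("governance_review", unmet)
    return GuardrailDecision("fast_track")


chatbot_pilot = {
    "Data Assurance": True,
    "Bias Analysis": True,
    "Privacy Compliance": False,
    "Explainable AI": True,
    "Continuous Monitoring": False,
}
decision = assess_initiative(chatbot_pilot)
```

Returning the list of unmet guardrails, rather than a bare yes or no, gives the governance team a starting point for its review.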

As tech providers such as Microsoft enhance their capabilities and products, they will impact business processes and technology skills, and influence other tech providers to reshape their product and service offerings. Microsoft recently organised briefing sessions in Sydney and Singapore, to present their future roadmap, with a focus on their AI capabilities.

Ecosystm Advisors Achim Granzen, Peter Carr, and Tim Sheedy provide insights on Microsoft’s recent announcements and messaging.

Ecosystm Question: What are your thoughts on Microsoft Copilot?

Tim Sheedy. The future of GenAI will not be about single LLMs getting bigger and better – it will be about the use of multiple large and small language models working together to solve specific challenges. It is wasteful to use a large and complex LLM to solve a problem that is simpler. Getting these models to work together will be key to solving industry and use case specific business and customer challenges in the future. Microsoft is already doing this with Microsoft 365 Copilot.

Achim Granzen. Microsoft’s Copilot – a shrink-wrapped GenAI tool based on OpenAI – has become a mainstream product. Microsoft has made it available to their enterprise clients in multiple ways: for personal productivity in Microsoft 365, for enterprise applications in Dynamics 365, for developers in GitHub and Copilot Studio, and to partners to integrate Copilot into their application suites (e.g. Amdocs’ Customer Engagement Platform).

Ecosystm Question: How, in your opinion, is the Microsoft Copilot a game changer?

Achim Granzen. Microsoft’s Customer Copyright Commitment, initially launched as Copilot Copyright Commitment, is the true game changer. It safeguards Copilot users from potential copyright infringement lawsuits related to data used for algorithm training or output results. In November 2023, Microsoft expanded its scope to cover commercial usage of their OpenAI interface as well.

This move not only protects commercial clients using Microsoft’s GenAI products but also extends to any GenAI solutions built by their clients. The commitment, which is outlined in the product terms and conditions, significantly reduces a key risk associated with GenAI adoption.

However, compliance with a set of Required Mitigations and Codes of Conduct is necessary for clients to benefit from this commitment, aligning with responsible AI guidelines and best practices.

Ecosystm Question: Where will organisations need most help on their AI journeys?

Peter Carr. Unfortunately, there is no playbook for AI.

- The path to integrating AI into business strategies and operations lacks a one-size-fits-all guide. Organisations will have to navigate uncharted territories for the time being. This means experimenting with AI applications and learning from successes and failures. This exploratory approach is crucial for leveraging AI’s potential while adapting to unique organisational challenges and opportunities. So, companies that are better at agile innovation will do better in the short term.

- The effectiveness of AI is deeply tied to the availability and quality of connected data. AI systems require extensive datasets to learn and make informed decisions. Ensuring data is accessible, clean, and integrated is fundamental for AI to accurately analyse trends, predict outcomes, and drive intelligent automation across various applications.

Ecosystm Question: What advice would you give organisations adopting AI?

Tim Sheedy. It is all about opportunities and responsibility.

- There is a strong need for responsible AI – at a global level, at a country level, at an industry level and at an organisational level. Microsoft (and other AI leaders) are helping to create responsible AI systems that are fair, reliable, safe, private, secure, and inclusive. There is still a long way to go, and these capabilities do not completely indemnify users of AI. They still have a responsibility to set guardrails in their own businesses about the use and opportunities for AI.

- AI and hybrid work are often discussed as different trends in the market, with different solution sets. But in reality, they are deeply linked. AI can help enhance and improve hybrid work in businesses – and is a great opportunity to demonstrate the value of AI and tools such as Copilot.

Ecosystm Question: What should Microsoft focus on?

Tim Sheedy. Microsoft faces a challenge in educating the market about adopting AI, especially Copilot. They need to educate business, IT, and AI users on embracing AI effectively. Additionally, they must educate existing partners and find new AI partners to drive change in their client base. Success in the race for knowledge workers requires not only being first but also helping users maximise solutions. Customers have limited visibility of Copilot’s capabilities today. Improving customer upskilling and enhancing tools to prompt users to leverage capabilities will contribute to Microsoft’s (or their competitors’) success in dominating the AI tool market.

Peter Carr. Grassroots businesses form the economic foundation of the Asia Pacific economies. Typically, these businesses do not engage with global SIs (GSIs), which drive Microsoft’s new service offerings. This leads to an adoption gap in the sector that could benefit most from operational efficiencies. To bridge this gap, Microsoft must empower non-GSI partners and managed service providers (MSPs) at the local and regional levels. They won’t achieve their goal of democratising AI unless they do. Microsoft has the potential to advance AI technology while ensuring fair and widespread adoption.

2023 has been an eventful year. In May, the WHO announced that the pandemic was no longer a global public health emergency. However, other forces that shaped 2023 will continue to impact the market well into 2024 and beyond.

Global Conflicts. The Russian invasion of Ukraine persisted; the Israeli-Palestinian conflict escalated into war; African nations continued to see armed conflicts and political crises; there has been significant population displacement.

Banking Crisis. American regional banks collapsed, with the failures of Silicon Valley Bank and First Republic Bank ranking as the third- and second-largest banking collapses in US history, respectively; Credit Suisse was acquired by UBS in Switzerland.

Climate Emergency. The UN’s synthesis report found that there’s still a chance to limit global temperature increases to 1.5°C; Loss and Damage conversations continued without a significant impact.

Power of AI. The interest in generative AI models heated up; tech vendors incorporated foundation models in their enterprise offerings – Microsoft Copilot was launched; awareness of AI risks strengthened calls for Ethical/Responsible AI.

Ecosystm analysts Achim Granzen, Darian Bird, Peter Carr, Sash Mukherjee and Tim Sheedy present the top 5 tech market forces that they expect to impact organisations in 2024.

#1 State-sponsored Attacks Will Alter the Nature of Security Threats

It is becoming clearer that the post-Cold-War era is over, and we are transitioning to a multi-polar world. In this new age, malevolent governments will become increasingly emboldened to carry out cyber and physical attacks without the concern of sanction.

Unlike most malicious actors driven by profit today, state adversaries will be motivated to maximise disruption.

Rather than encrypting valuable data with ransomware, state adversaries will deploy wiper malware. State-sponsored attacks against critical infrastructure, such as transportation, energy, and undersea cables, will be designed to inflict irreversible damage. The recent 23andMe breach is an example of how ethnically directed attacks could be designed to sow fear and distrust. Additionally, even the threat of spyware and phishing will cause some activists, journalists, and politicians to self-censor.

#2 AI Legislation Breaches Will Occur, But Will Go Unpunished

With US President Biden’s recently published “Executive order on Safe, Secure and Trustworthy AI” and the European Union’s “AI Act” set for adoption by the European Parliament in mid-2024, codified and enforceable AI legislation is on the verge of becoming reality. However, oversight structures with powers to enforce the rules are currently not in place for either initiative and will take time to build out.

In 2024, the first instances of AI legislation violations will surface – potentially revealed by whistleblowers or significant public AI failures – but no legal action will be taken yet.

#3 AI Will Increase Net-New Carbon Emissions

In an age focused on reducing carbon and greenhouse gas emissions, AI is contributing to the opposite. These emissions fall under the broader “Scope 3” category, which organisations often fail to track. Researchers at the University of Massachusetts, Amherst, found that training a single AI model can emit over 283 tonnes of carbon dioxide, equal to the annual emissions of 62.6 gasoline-powered vehicles.

Organisations rely on cloud providers to reduce their carbon emissions (Amazon targets net-zero by 2040, while Microsoft and Google aim for 2030, and the trajectory they follow will influence global climate change); yet transparency on AI greenhouse gas emissions is limited. The different routes to net-zero will determine the level of greenhouse gas emissions along the way.

Some argue that AI can help in better mapping a path to net-zero, but there is concern about whether the damage caused in the process will outweigh the benefits.

#4 ESG Will Transform into GSE to Become the Future of GRC

Previously viewed as a standalone concept, ESG will be increasingly recognised as integral to Governance, Risk, and Compliance (GRC) practices. The ‘E’ in ESG, representing environmental concerns, is becoming synonymous with compliance due to growing environmental regulations. The ‘S’, or social aspect, is merging with risk management, addressing contemporary issues such as ethical supply chains, workplace equity, and modern slavery, which traditional GRC models often overlook. Governance continues to be a crucial component.

The key to organisational adoption and transformation will be understanding that ESG is not an isolated function but is intricately linked with existing GRC capabilities.

This will present opportunities for GRC and Risk Management providers to adapt their current solutions, already deployed within organisations, to enhance ESG effectiveness. This strategy promises mutual benefits, improving compliance and risk management while simultaneously advancing ESG initiatives.

#5 Productivity Will Dominate Workforce Conversations

The skills discussions have shifted significantly over 2023. At the start of the year, HR leaders were still dealing with the ‘productivity conundrum’ – balancing employee flexibility and productivity in a hybrid work setting. There were also concerns about skills shortage, particularly in IT, as organisations prioritised tech-driven transformation and innovation.

Now, the focus is on assessing the pros and cons (mainly ROI) of providing employees with advanced productivity tools. For example, early studies on Microsoft Copilot showed that 70% of users experienced increased productivity. Discussions, including Narayana Murthy’s remarks on 70-hour work weeks, have re-ignited conversations about employee well-being and the impact of technology in enabling employees to achieve more in less time.

Against the backdrop of skills shortages and the need for better employee experience to retain talent, organisations are increasingly adopting/upgrading their productivity tools – starting with their Sales & Marketing functions.

Generative AI has stolen the limelight in 2023 from nearly every other technology – and for good reason. The advances made by Generative AI providers have been incredible, with many human “thinking” processes now in line to be automated.

But before we had Generative AI, there was the run-of-the-mill “traditional AI”. However, despite the traditional tag, these capabilities have a long way to run within your organisation. In fact, they are often easier to implement, have less risk (and more predictability) and are easier to generate business cases for. Traditional AI systems are often already embedded in many applications, systems, and processes, and can easily be purchased as-a-service from many providers.

Unlocking the Potential of AI Across Industries

Many organisations around the world are exploring AI solutions today, and the opportunities for improvement are significant:

- Manufacturers are designing, developing and testing in digital environments, relying on AI to predict product responses to stress and environments. In the future, Generative AI will be called upon to suggest improvements.

- Retailers are using AI to monitor customer behaviours and predict next steps. Algorithms are being used to drive the best outcome for the customer and the retailer, based on previous behaviours and trained outcomes.

- Transport and logistics businesses are using AI to minimise fuel usage and driver expenses while maximising delivery loads. Smart route planning and scheduling are ensuring timely deliveries while reducing costs and saving on vehicle maintenance.

- Warehouses are enhancing the safety of their environments and efficiently moving goods with AI. Through a combination of video analytics, connected IoT devices, and logistical software, they are maximising the potential of their limited space.

- Public infrastructure providers (such as shopping centres and public transport providers) are using AI to monitor public safety. Video analytics and sensors are helping safety and security teams take public safety beyond traditional human monitoring.

AI Impacts Multiple Roles

Even within the organisation, different lines of business expect different outcomes for AI implementations.

- IT teams are monitoring infrastructure, applications, and transactions – to better understand root-cause analysis and predict upcoming failures – using AI. In fact, AIOps, one of the fastest-growing areas of AI, yields substantial productivity gains for tech teams and boosts reliability for both customers and employees.

- Finance teams are leveraging AI to understand customer payment patterns and automate the issuance of invoices and reminders, a capability increasingly being integrated into modern finance systems.

- Sales teams are using AI to discover the best prospects to target and what offers they are most likely to respond to.

- Contact centres are monitoring calls, automating suggestions, summarising records, and scheduling follow-up actions through conversational AI. This is getting agents up to speed in a shorter period, ensuring greater customer satisfaction and increased brand loyalty.

Transitioning from Low-Risk to AI-Infused Growth

These are just a tiny selection of the opportunities for AI. And few of these need testing or business cases – many of these capabilities are available out-of-the-box or out of the cloud. They don’t need deep analysis by risk, legal, or cybersecurity teams. They just need a champion to make the call and switch them on.

One potential downside of Generative AI is that it is drawing unwarranted scrutiny to well-established, low-risk AI applications. Many of these do not require much time from data scientists – and if they do, the challenge is often finding the data and creating the algorithm. Humans can typically understand the logic and rules that the models create – unlike Generative AI, where the outcome cannot be reverse-engineered.

The opportunity today is to take advantage of the attention that LLMs and other Generative AI engines are getting to incorporate AI into every conceivable aspect of a business. When organisations understand the opportunities for productivity improvements, speed enhancement, better customer outcomes and improved business performance, the spend on AI capabilities will skyrocket. Ecosystm estimates that for most organisations, AI spend will be less than 5% of their total tech spend in 2024 – but it is likely to grow to over 20% within the next 4-5 years.
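
To put the estimate above in perspective, the short calculation below works out the compound annual growth in AI’s share of tech spend that a move from 5% to 20% would imply. It is a rough sketch that assumes the two bounds are exact start and end points, which the estimate itself does not claim.

```python
def implied_annual_growth(start_share: float, end_share: float, years: int) -> float:
    """Compound annual growth rate needed to move from start_share to end_share."""
    return (end_share / start_share) ** (1 / years) - 1


# Share of total tech spend, treated as exact points for illustration only.
growth_over_4_years = implied_annual_growth(0.05, 0.20, 4)  # about 0.414 (41.4% a year)
growth_over_5_years = implied_annual_growth(0.05, 0.20, 5)  # about 0.320 (32.0% a year)
```

In other words, AI’s share of the tech budget would need to grow by roughly a third or more every year, before accounting for any growth in the overall tech budget itself.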

It’s been barely one year since we entered the Generative AI Age. On November 30, 2022, OpenAI launched ChatGPT, with no fanfare or promotion. Since then, Generative AI has become arguably the most talked-about tech topic, both in terms of opportunities it may bring and risks that it may carry.

The landslide success of ChatGPT and other Generative AI applications with consumers and businesses has put a renewed and strengthened focus on the potential risks associated with the technology – and how best to regulate and manage these. Government bodies and agencies have created voluntary guidelines for the use of AI for a number of years now (the Singapore Framework, for example, was launched in 2019).

There is no active legislation on the development and use of AI yet. Crucially, however, a number of such initiatives are currently on their way through legislative processes globally.

EU’s Landmark AI Act: A Step Towards Global AI Regulation

The European Union’s “Artificial Intelligence Act” is a leading example. The European Commission (EC) started examining AI legislation in 2020 with a focus on:

- Protecting consumers

- Safeguarding fundamental rights, and

- Avoiding unlawful discrimination or bias

The EC published an initial legislative proposal in 2021, and the European Parliament adopted a revised version as its official position on AI in June 2023, moving the legislative process to its final phase.

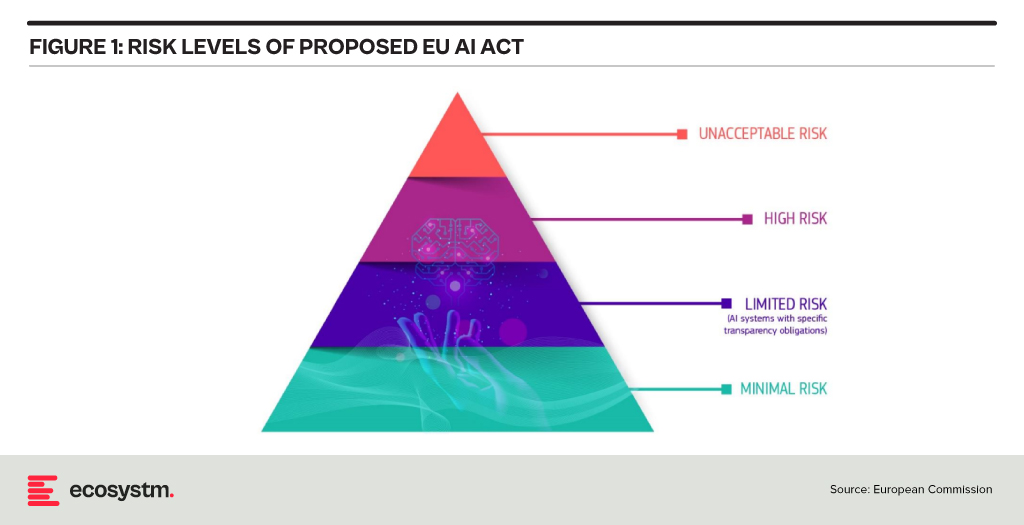

This proposed EU AI Act takes a risk management approach to regulating AI. Organisations looking to employ AI must take note: an internal risk management approach to deploying AI would essentially be mandated by the Act. It is likely that other legislative initiatives will follow a similar approach, making the AI Act a potential role model for legislation globally (following the trail blazed by the General Data Protection Regulation). The “G7 Hiroshima AI Process”, established at the G7 summit in Japan in May 2023, is a key example of international discussion and collaboration on the topic (with a focus on Generative AI).

Risk Classification and Regulations in the EU AI Act

At the heart of the AI Act is a system to assess the risk level of AI technology, classify the technology (or its use case), and prescribe appropriate regulations to each risk class.

The AI Act distinguishes four risk levels (unacceptable, high, limited, and minimal risk) and proposes a set of rules and regulations for each. The regulatory focus is clearly on High-Risk AI systems.
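For organisations starting an internal AI inventory, the tiered approach can be sketched as a simple lookup. The following is a minimal illustration only, not legal guidance: the tier names follow the commonly cited four-level summary of the Act (unacceptable, high, limited, minimal), while the obligation summaries, the example use cases, and the names `RiskLevel`, `OBLIGATIONS`, `USE_CASE_RISK`, `classify` and `required_action` are all assumptions made for this sketch.

```python
from enum import Enum


class RiskLevel(Enum):
    """Four risk tiers, as the EU AI Act is commonly summarised."""
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Assumption: heavily simplified paraphrases for illustration, not legal text.
OBLIGATIONS = {
    RiskLevel.UNACCEPTABLE: "prohibited",
    RiskLevel.HIGH: "risk management, documentation and assessment before deployment",
    RiskLevel.LIMITED: "transparency obligations",
    RiskLevel.MINIMAL: "no additional obligations",
}

# Hypothetical internal register mapping use cases to tiers.
# The assignments are illustrative examples, not an official classification.
USE_CASE_RISK = {
    "social scoring": RiskLevel.UNACCEPTABLE,
    "cv screening": RiskLevel.HIGH,
    "customer service chatbot": RiskLevel.LIMITED,
    "spam filter": RiskLevel.MINIMAL,
}


def classify(use_case: str) -> RiskLevel:
    """Return the registered tier for a use case.

    Design choice (assumption): unregistered use cases default to HIGH,
    so that they are reviewed rather than silently waved through.
    """
    return USE_CASE_RISK.get(use_case.strip().lower(), RiskLevel.HIGH)


def required_action(use_case: str) -> str:
    """Return the simplified obligation summary for a use case."""
    return OBLIGATIONS[classify(use_case)]


if __name__ == "__main__":
    for name in ["Spam filter", "CV screening", "New unreviewed tool"]:
        print(f"{name}: {classify(name).value} -> {required_action(name)}")
```

Defaulting unknown use cases to the high-risk tier is a conservative choice for this sketch; an organisation's own risk, legal, and compliance teams would need to define the actual register and review process.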

Contrasting Approaches: EU AI Act vs. UK’s Pro-Innovation Regulatory Approach

The AI Act has received its share of criticism, and somewhat different approaches are being considered, notably in the UK. One line of criticism concerns the vagueness of key concepts (particularly around person-related data and systems). Another concerns the strong focus on the protection of rights and individuals, highlighting the potential negative economic impact for EU organisations looking to leverage AI and for EU tech companies developing AI systems.

A white paper published by the UK government in March 2023, perhaps tellingly named “A pro-innovation approach to AI regulation”, emphasises a “pragmatic, proportionate regulatory approach … to provide a clear, pro-innovation regulatory environment”. The paper describes an approach that aims to balance the protection of individuals with economic advancement for the UK on its way to becoming an “AI superpower”.

Further aspects of the EU AI Act are currently being critically discussed. For example, the current text exempts from regulation all open-source AI components that are not part of a medium or higher risk system, but it lacks clear definitions and does not consider how such components may proliferate.

Adopting AI Risk Management in Organisations: The Singapore Approach

Regardless of how exactly AI regulations will turn out around the world, organisations must start today to adopt AI risk management practices. There is an added complexity: while the EU AI Act does clearly identify high-risk AI systems and example use cases, the realisation of regulatory practices must be tackled with an industry-focused approach.

The approach taken by the Monetary Authority of Singapore (MAS) is a prime example of an industry-focused approach to AI risk management. The Veritas Consortium, led by MAS, is a public-private-tech partnership aiming to guide the financial services sector on the responsible use of AI. As there is no AI legislation in Singapore to date, the consortium currently builds on Singapore’s aforementioned “Model Artificial Intelligence Governance Framework”. Additional initiatives are already underway to focus specifically on Generative AI for financial services, and to build a globally aligned framework.

To Comply with Upcoming AI Regulations, Risk Management is the Path Forward

As AI regulation initiatives move from voluntary recommendation to legislation globally, a risk management approach is at the core of all of them. Adding risk management capabilities for AI is the path forward for organisations looking to deploy AI-enhanced solutions and applications. As that task can be daunting, an industry consortium approach can help organisations navigate challenges and align on implementation strategies for AI risk management across the industry. Until AI legislation is in place, such industry consortia can chart the way for their industry. Organisations should seek to participate now to gain a head start with AI.

Zurich will be the centre of attention for the Financial and Regulatory industries from June 26th to 28th as it hosts the second edition of the Point Zero Forum. Organised by Elevandi and the Swiss State Secretariat for International Finance, this event serves as a platform to encourage dialogue on policy and technology in Financial Services, with a particular emphasis on adopting transformative technologies and establishing the necessary governance and risk frameworks.

As a knowledge partner, Ecosystm is deeply involved in the Point Zero Forum. Throughout the event, we will actively engage in discussions and closely monitor three key areas: ESG, digital assets, and Responsible AI.

Read on to find out what our leaders — Amit Gupta (CEO, Ecosystm Group), Ullrich Loeffler (CEO and Co-Founder, Ecosystm), and Anubhav Nayyar (Chief Growth Advisor, Ecosystm) — say about why this will be core to building a sustainable and innovative future.

Download ‘Building Synergy Between Policy & Technology’ as a PDF