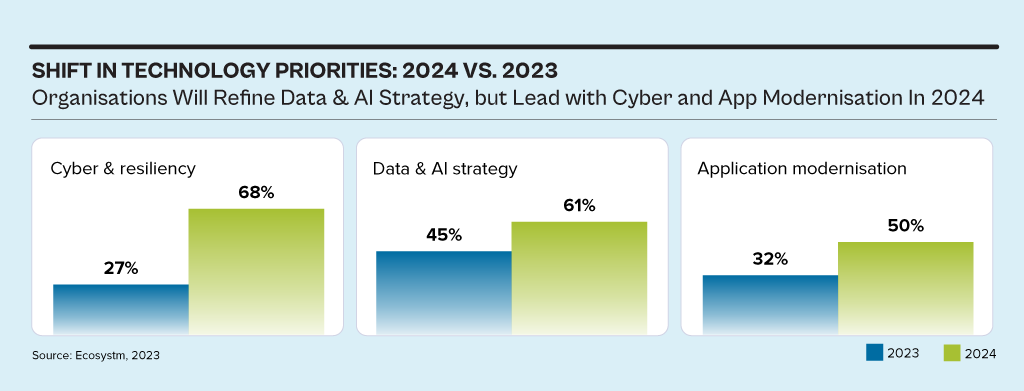

While the discussions have centred around AI, particularly Generative AI in 2023, the influence of AI innovations is extensive. Organisations will urgently need to re-examine their risk strategies, particularly in cyber and resilience practices. They will also reassess their infrastructure needs, optimise applications for AI, and re-evaluate their skills requirements.

This impacts the entire tech market, including tech skills, market opportunities, and innovations.

Ecosystm analysts Alea Fairchild, Darian Bird, Richard Wilkins, and Tim Sheedy present the top 5 trends in building an Agile & Resilient Organisation in 2024.

#1 Gen AI Will See Spike in Infrastructure Innovation

Enterprises considering the adoption of Generative AI are evaluating cloud-based solutions versus on-premises solutions. Cloud-based options present an advantage in terms of simplified integration, but raise concerns over the management of training data, potentially resulting in AI-generated hallucinations. On-premises alternatives offer enhanced control and data security but encounter obstacles due to the unexpectedly high demands of GPU computing needed for inferencing, impeding widespread implementation. To overcome this, there’s a need for hardware innovation to meet Generative AI demands, ensuring scalable on-premises deployments.

The collaboration between hardware development and AI innovation is crucial to unleash the full potential of Generative AI and drive enterprise adoption in the AI ecosystem.

Striking the right balance between cloud-based flexibility and on-premises control is pivotal, with considerations like data control, privacy, scalability, compliance, and operational requirements.

#2 Cloud Migrations Will Make Way for Cloud Transformations

The steady move to the public cloud has slowed down. Organisations – particularly those in mature economies – now prioritise cloud efficiencies, having completed most of their application migration. The “easy” workloads have moved to the cloud – either through lift-and-shift, SaaS, or simple replatforming.

New skills will be needed as organisations adopt public and hybrid cloud for their entire application and workload portfolio.

- Cloud-native development frameworks like Spring Boot and ASP.NET Core make it easier to develop cloud-native applications

- Cloud-native databases like MongoDB and Cassandra are designed for the cloud and offer scalability, performance, and reliability

- Cloud-native storage like Snowflake, Amazon S3 and Google Cloud Storage provides secure and scalable storage

- Cloud-native messaging like Amazon SNS and Google Cloud Pub/Sub provides reliable and scalable communication between different parts of the cloud-native application

#3 2024 Will be a Good Year for Technology Services Providers

Several changes are set to fuel the growth of tech services providers (systems integrators, consultants, and managed services providers).

There will be a return of “big apps” projects in 2024.

Companies are embarking on significant updates for their SAP, Oracle, and other large ERP, CRM, SCM, and HRM platforms. Whether moving to the cloud or staying on-premises, these upgrades will generate substantial activity for tech services providers.

The migration of complex apps to the cloud involves significant refactoring and rearchitecting, presenting substantial opportunities for managed services providers to transform and modernise these applications beyond traditional “lift-and-shift” activities.

The dynamic tech landscape, marked by AI growth, evolving security threats, and constant releases of new cloud services, has led to a shortage of modern tech skills. Despite a more relaxed job market, organisations will increasingly turn to their tech services partners, whether onshore or offshore, to fill crucial skill gaps.

#4 Gen AI and Maturing Deepfakes Will Democratise Phishing

As with any emerging technology, malicious actors will be among the fastest to exploit Generative AI for their own purposes. The most immediate application will be employing widely available LLMs to generate convincing text and images for their phishing schemes. For many potential victims, misspellings and strangely worded appeals are the only hints that an email from their bank, courier, or colleague is not what it seems. The ability to create professional-sounding prose in any language and a variety of tones will unfortunately democratise phishing.

The emergence of Generative AI combined with the maturing of deepfake technology will make it possible for malicious agents to create personalised voice and video attacks. Digital channels for communication and entertainment will be stretched to differentiate between real and fake.

Security training that underscores the threat of more polished and personalised phishing is a must.

#5 A Holistic Approach to Risk and Operational Resilience Will Drive Adoption of VMaaS

Vulnerability management is a continuous, proactive approach to managing system security. It not only involves vulnerability assessments but also includes developing and implementing strategies to address these vulnerabilities. This is where Vulnerability Management Platforms (VMPs) become table stakes for small and medium enterprises (SMEs) as they are often perceived as “easier targets” by cybercriminals due to potentially lesser investments in security measures.

Vulnerability Management as a Service (VMaaS) – a third-party service that manages and controls threats and automates vulnerability response for faster remediation – can improve cybersecurity asset management and let SMEs focus on their core activities.

In-house security teams will particularly value the flexibility and customisation of dashboards and reports that give them enhanced visibility over all assets and vulnerabilities.

While there has been much speculation about AI being a potential negative force on humanity, what we do know today is that the accelerated use of AI WILL mean an accelerated use of energy. And if that energy source is not renewable, AI will have a meaningful negative impact on CO2 emissions and will accelerate climate change. Even if the energy is renewable, GPUs and CPUs generate significant heat – and if that heat is not captured and used effectively then it too will have a negative impact on warming local environments near data centres.

Balancing Speed and Energy Efficiency

While GPUs draw significantly more power than CPUs, they run many AI algorithms faster than CPUs – so they can use less energy overall for a given task. But the process still needs to run – and these are additional processes. Data needs to be discovered, moved, stored, analysed, cleansed. In many cases, algorithms need to be recreated, tweaked and improved. And then that algorithm itself will kick off new digital processes that are often more processor and energy-intensive – as organisations might now have a unique process for every customer or many customer groups, requiring more decisioning and hence more digital processing.
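The trade-off described above comes down to simple arithmetic: energy is power multiplied by time. The sketch below works through it with purely hypothetical wattages, runtimes, and job counts (none of these figures come from Ecosystm research or any vendor). It shows how a GPU job can use less energy than the equivalent CPU job while total consumption still rises once AI triggers many additional processes.

```python
def energy_kwh(power_watts: float, runtime_hours: float) -> float:
    """Energy in kilowatt-hours: power (W) x time (h) / 1000."""
    return power_watts * runtime_hours / 1000.0


# Hypothetical figures, chosen only to illustrate the arithmetic.
CPU_POWER_W = 200.0   # assumed average draw of a CPU server
GPU_POWER_W = 400.0   # assumed average draw of a GPU server
CPU_RUNTIME_H = 10.0  # assumed time for the CPU to finish one job
GPU_RUNTIME_H = 1.0   # assumed time for the GPU to finish the same job

cpu_job_kwh = energy_kwh(CPU_POWER_W, CPU_RUNTIME_H)
gpu_job_kwh = energy_kwh(GPU_POWER_W, GPU_RUNTIME_H)

# Per job, the faster GPU uses less energy despite the higher power draw.
print(f"CPU job: {cpu_job_kwh:.1f} kWh, GPU job: {gpu_job_kwh:.1f} kWh")

# But AI adds processes: assume one job before, ten jobs after adoption.
JOBS_BEFORE = 1
JOBS_AFTER = 10
total_before_kwh = cpu_job_kwh * JOBS_BEFORE
total_after_kwh = gpu_job_kwh * JOBS_AFTER

print(f"Total before: {total_before_kwh:.1f} kWh, after: {total_after_kwh:.1f} kWh")
```

Under these assumptions, each GPU job uses a fifth of the energy of the CPU job, yet the total doubles because ten times as many jobs now run.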

The GPUs, servers, storage, cabling, cooling systems, racks, and buildings have to be constructed – often built from raw materials – and these raw materials need to be mined, transported and transformed. With the use of AI exploding at the moment, so is the demand for AI infrastructure – all of which has an impact on the resources of the planet and ultimately on climate change.

Sustainable Sourcing

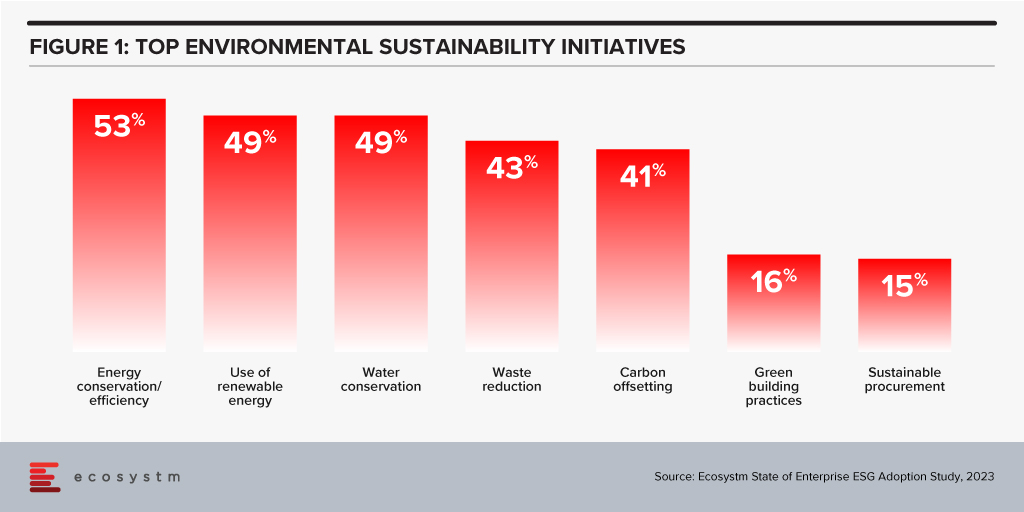

Some organisations understand this already and are beginning to use sustainable sourcing for their technology services. However, it is not a top priority, with Ecosystm research showing only 15% of organisations focus on sustainable procurement.

Technology Providers Can Help

Leading technology providers are introducing initiatives that make it easier for organisations to procure sustainable IT solutions. The recently announced HPE GreenLake for Large Language Models will be based in a data centre built and run by Qscale in Canada that is not only sustainably built and sourced, but sits on a grid supplying 99.5% renewable electricity – and waste (warm) air from the data centre and cooling systems is funneled to nearby greenhouses that grow berries. I find the concept remarkable and this is one of the most impressive sustainable data centre stories to date.

The focus on sustainability needs to be universal – across all cloud and AI providers. AI usage IS exploding – and we are just at the tip of the iceberg today. It will continue to grow as it becomes easier to use and deploy, more readily available, and more relevant across all industries and organisations. But we are at a stage of climate warming where we cannot increase our greenhouse gas emissions – and offsetting these emissions just passes the buck.

We need more companies like HPE and Qscale to build this Sustainable Future – and we need to be thinking the same way in our own data centres and putting pressure on our own AI and overall technology value chain to think more sustainably and act in the interests of the planet and future generations. Cloud providers – like AWS – are committed to the NetZero goal (by 2040 in their case) – but this is meaningless if our requirement for computing capacity increases a hundred-fold in that period. Our businesses and our tech partners need to act today. It is time for organisations to demand it from their tech providers to influence change in the industry.

It is not hyperbole to state that AI is on the cusp of having significant implications on society, business, economies, governments, individuals, cultures, politics, the arts, manufacturing, customer experience… I think you get the idea! We cannot overstate the impact that AI will have on society. In times gone by, businesses tested ideas, new products, or services with small customer segments before they went live. But with AI we are all part of this experiment on the impacts of AI on society – its benefits, use cases, weaknesses, and threats.

What seemed preposterous just six months ago is not only possible but EASY! Do you want a virtual version of yourself, a friend, your CEO, or your deceased family member? Sure – just feed the data. Will succession planning be more about recording all conversations and interactions with an executive so their avatar can make the decisions when they leave? Why not? How about you turn the thousands of hours of recorded customer conversations with your contact centre team into a virtual contact centre team? Your head of product can present in multiple countries in multiple languages, tailored to the customer segments, industries, geographies, or business needs at the same moment.

AI has the potential to create digital clones of your employees; it can spread fake news as easily as real news; it can be used for deception as easily as for benefit. Is your organisation prepared for the social, personal, cultural, and emotional impacts of AI? Do you know how AI will evolve in your organisation?

When we focus on the future of AI, we often interview AI leaders, business leaders, futurists, and analysts. I haven’t seen enough focus on psychologists, sociologists, historians, academics, counselors, or even regulators! The Internet and social media changed the world more than we ever imagined – at this stage, it looks like these two were just a rehearsal for the real show – Artificial Intelligence.

Lack of Government or Industry Regulation Means You Need to Self-Regulate

These rapid developments – and the notable silence from governments, lawmakers, and regulators – make the requirement for an AI Ethics Policy for your organisation urgent! Even if you have one, it probably needs updating, as the scenarios that AI can operate within are growing and changing literally every day.

- For example, your customer service team might want to create a virtual customer service agent from a real person. What is the policy on this? How will it impact the person?

- Your marketing team might be using ChatGPT or Bard for content creation. Do you have a policy specifically for the creation and use of content using assets your business does not own?

- What data is acceptable to be ingested by a public Large Language Model (LLM)? Are you governing data at creation and publishing to ensure these policies are met?

- With the impending public launch of Microsoft’s Copilot AI service, what data can be ingested by Copilot? How are you governing the distribution of the insights that come out of that capability?

If policies are not put in place, data is not tagged, and staff are not trained before using a tool such as Copilot, your business is likely to break some privacy or employment laws – on the very first day!
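To make the data-tagging point concrete, here is a minimal sketch of a policy gate that decides whether a tagged document may be sent to a public LLM. Everything in it is hypothetical: the `Sensitivity` levels, the `Document` fields, and the `allowed_for_public_llm` rule are illustrative assumptions, not part of any Microsoft, OpenAI, or Google product, and a real policy would be defined by your own legal and data governance teams.

```python
from dataclasses import dataclass
from enum import Enum


class Sensitivity(Enum):
    """Hypothetical classification tags applied when data is created."""
    PUBLIC = 1
    INTERNAL = 2
    CONFIDENTIAL = 3


@dataclass(frozen=True)
class Document:
    """Hypothetical record of a tagged piece of content."""
    name: str
    sensitivity: Sensitivity
    contains_personal_data: bool = False


def allowed_for_public_llm(
    doc: Document, max_sensitivity: Sensitivity = Sensitivity.PUBLIC
) -> bool:
    """Assumed rule: never send personal data, and never send anything
    tagged above the permitted sensitivity level."""
    if doc.contains_personal_data:
        return False
    return doc.sensitivity.value <= max_sensitivity.value


documents = [
    Document("press-release.docx", Sensitivity.PUBLIC),
    Document("pricing-strategy.xlsx", Sensitivity.CONFIDENTIAL),
    Document("customer-feedback.csv", Sensitivity.PUBLIC, contains_personal_data=True),
]

# Only documents that pass the policy check are eligible for ingestion.
approved = [doc.name for doc in documents if allowed_for_public_llm(doc)]
print(approved)
```

A check like this only works if the tags are applied reliably at creation and publishing, which is why the policy, the tagging, and the staff training need to be in place before the tool is switched on.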

What do the LLMs Say About AI Ethics Policies?

So where do you go when looking for an AI Ethics policy? ChatGPT and Bard of course! I asked the two for a modern AI Ethics policy.

You can read what they generated in the graphic below.

I personally prefer the ChatGPT4 version as it is more prescriptive. At the same time, I would argue that MOST of the AI tools that your business has access to today don’t meet all of these principles. And while they are tools and the ethics should dictate the way the tools are used, with AI you cannot always separate the process and outcome from the tool.

For example, a tool that is inherently designed to learn an employee’s character, style, or mannerisms cannot be unbiased if it is based on a biased opinion (and humans have biases!).

LLMs take data, content, and insights created by others, and give it to their customers to reuse. Are you happy with your website being used as a tool to train a startup on the opportunities in the markets and customers you serve?

By making content public, you acknowledge the risk of others using it. But at least they visited your website or app to consume it. Not anymore…

A Policy is Useless if it Sits on a Shelf

Your AI ethics policy needs to be more than a published document. It should be the beginning of a conversation across the entire organisation about the use of AI. Your employees need to be trained in the policy. It needs to be part of the culture of the business – particularly as low and no-code capabilities push these AI tools, practices, and capabilities into the hands of many of your employees.

Nearly every business leader I interview mentions that their organisation is an “intelligent, data-led, business.” What is the role of AI in driving this intelligent business? If being data-driven and analytical is in the DNA of your organisation, soon AI will also be at the heart of your business. You might think you can delay your investments to get it right – but your competitors may be ahead of you.

So, as you jump head-first into the AI pool, start to create, improve and/or socialise your AI Ethics Policy. It should guide your investments, protect your brand, empower your employees, and keep your business resilient and compliant with legacy and new legislation and regulations.

Google recently extended its Generative AI, Bard, to include coding in more than 20 programming languages, including C++, Go, Java, JavaScript, and Python. The search giant has been eager to respond to last year’s launch of ChatGPT but as the trusted incumbent, it has naturally been hesitant to move too quickly. The tendency for large language models (LLMs) to produce controversial and erroneous outputs has the potential to tarnish established brands. Google Bard was released in March in the US and the UK as an LLM but lacked the coding ability of OpenAI’s ChatGPT and Microsoft’s Bing Chat.

Bard’s new features include code generation, optimisation, debugging, and explanation. Using natural language processing (NLP), users can explain their requirements to the AI and ask it to generate code that can then be exported to an integrated development environment (IDE) or executed directly in the browser with Google Colab. Similarly, users can request Bard to debug already existing code, explain code snippets, or optimise code to improve performance.

Google continues to refer to Bard as an experiment and highlights that as is the case with generated text, code produced by the AI may not function as expected. Regardless, the new functionality will be useful for both beginner and experienced developers. Those learning to code can use Generative AI to debug and explain their mistakes or write simple programs. More experienced developers can use the tool to perform lower-value work, such as commenting on code, or scaffolding to identify potential problems.

GitHub Copilot X to Face Competition

While the ability for Bard, Bing, and ChatGPT to generate code is one of their most important use cases, developers are now demanding AI directly in their IDEs.

In March, Microsoft made one of its most significant announcements of the year when it demonstrated GitHub Copilot X, which embeds GPT-4 in the development environment. Earlier this year, Microsoft invested $10 billion into OpenAI to add to the $1 billion from 2019, cementing the partnership between the two AI heavyweights. Among other benefits, this agreement makes Azure the exclusive cloud provider to OpenAI and provides Microsoft with the opportunity to enhance its software with AI co-pilots.

Currently in technical preview, Copilot X will integrate into Visual Studio, Microsoft’s IDE, when it eventually launches. Presented as a sidebar or chat directly in the IDE, Copilot X will be able to generate, explain, and comment on code, debug, write unit tests, and identify vulnerabilities. The “Hey, GitHub” functionality will allow users to chat using voice, suitable for mobile users or more natural interaction on a desktop.

Not to be outdone by its cloud rivals, in April, AWS announced the general availability of what it describes as a real-time AI coding companion. Amazon CodeWhisperer integrates with a range of IDEs, namely Visual Studio Code, IntelliJ IDEA, CLion, GoLand, WebStorm, Rider, PhpStorm, PyCharm, RubyMine, and DataGrip, or natively in AWS Cloud9 and the AWS Lambda console. While the preview worked for Python, Java, JavaScript, TypeScript, and C#, the general release extends support for most languages. Amazon’s key differentiation is that it is available for free to individual users, while GitHub Copilot is currently subscription-based with exceptions only for teachers, students, and maintainers of open-source projects.

The Next Step: Generative AI in Security

The next battleground for Generative AI will be assisting overworked security analysts. Currently, some of the greatest challenges that Security Operations Centres (SOCs) face are being understaffed and overwhelmed with the number of alerts. Security vendors, such as IBM and Securonix, have already deployed automation to reduce alert noise and help analysts prioritise tasks to avoid responding to false threats.

Google recently introduced Sec-PaLM and Microsoft announced Security Copilot, bringing the power of Generative AI to the SOC. These tools will help analysts interact conversationally with their threat management systems and will explain alerts in natural language. How effective these tools will be is yet to be seen, considering hallucinations in security are far riskier than in writing an essay with ChatGPT.

The Future of AI Code Generators

Although GitHub Copilot and Amazon CodeWhisperer had already launched with limited feature sets, it was the release of ChatGPT last year that ushered in a new era in AI code generation. There is now a race between the cloud hyperscalers to win over developers and to provide AI that supports other functions, such as security.

Despite fears that AI will replace humans, in their current state these tools are more likely to be used to augment developers. Although AI and automated testing reduce the burden on the already stretched workforce, humans will continue to be in demand to ensure code is secure and satisfies requirements. A likely scenario is that with coding becoming simpler, rather than the number of developers shrinking, the volume and quality of code written will increase. AI will generate a new wave of citizen developers able to work on projects that would previously have been impossible to start. This may, in turn, increase demand for developers to build on these proofs-of-concept.

How the Generative AI landscape evolves over the next year will be interesting. In a recent interview, OpenAI’s founder, Sam Altman, explained that the non-profit model it initially pursued is not feasible, necessitating the launch of a capped-profit subsidiary. The company retains its values, however, focusing on advancing AI responsibly and transparently with public consultation. The appearance of Microsoft, Google, and AWS will undoubtedly change the market dynamics and may force OpenAI to at least reconsider its approach once again.

Microsoft’s intention to invest a further USD 10B in OpenAI – the owner of ChatGPT and DALL-E 2 – confirms what we said in the Ecosystm Predicts – Cloud will be replaced by AI as the right transformation goal. Microsoft has already invested an estimated USD 3B in the company since 2019. Let’s take a look at what this means to the tech industry.

Implications for OpenAI & Microsoft

OpenAI’s tools – such as ChatGPT and the image engine DALL-E 2 – require significant processing power to operate, particularly as they move beyond beta programs and offer services at scale. In a single week in December, the company moved past 1 million users for ChatGPT alone. The company must be burning through cash at a significant rate. This means it needs significant funding to keep the lights on, particularly as the capability of the product continues to improve and the amount of data, images and content it trawls continues to expand. ChatGPT is being talked about as one of the most revolutionary tech capabilities of the decade – but it will be all for nothing if the company doesn’t have the resources to continue to operate!

This is huge for Microsoft! Much has already been discussed about the opportunity for Microsoft to compete with Google more effectively for search-related advertising dollars. But every product and service that Microsoft develops can be enriched and improved by ChatGPT:

- A spreadsheet tool that automatically categorises data and extracts insights

- A word processing tool that creates content automatically

- A CRM that creates custom offers for every individual customer based on their current circumstances

- A collaboration tool that gets answers to questions before they are even asked and acts on the insights and analytics that it needs to drive the right customer and business outcomes

- A presentation tool that creates slides with compelling storylines based on the needs of specific audiences

- LinkedIn providing the insights users need to achieve their outcomes

- A cloud-based AI engine that can be embedded into any process or application through a simple API call (this already exists!)

How Microsoft chooses to monetise these opportunities is up to the company – but the investment certainly puts Microsoft in the box seat to monetise the AI services through their own products while also taking a cut from other ways that OpenAI monetises their services.

Impact on Microsoft’s competitors

Microsoft’s investment in OpenAI will accelerate the rate of AI development and adoption. As we move into the AI era, everything will change. New business opportunities will emerge, and traditional ones will disappear. Markets will be created and destroyed. Microsoft’s investment is an attempt for the company to end up on the right side of this equation. But the other existing (and yet to be created) AI businesses won’t just give up. The Microsoft investment will create a greater urgency for Google, Apple, and others to accelerate their AI capabilities and investments. And we will see investments in OpenAI’s competitors, such as Stability AI (which raised USD 101M in October 2022).

What will change for enterprises?

Too many businesses have put “the cloud” at the centre of their transformation strategies – as if being in the cloud is an achievement in itself. While cloud made applications and processes easier to transform (and sometimes cheaper to deploy and run), many businesses have just modernised their legacy end-to-end business processes on a better platform. True transformation happens when businesses realise that their processes only existed because of a lack of human or technology capacity to treat every customer and employee as an individual, to determine their specific needs and to deliver a custom solution for them. Not to mention the huge cost of creating unique processes for every customer! But AI does this.

AI engines have the ability to make businesses completely rethink their entire application stack. They have the ability to deliver unique outcomes for every customer. Businesses need to have AI as their transformation goal – where they put intelligence at the centre of every transformation, they will make different decisions and drive better customer and business outcomes. But once again, delivering this will take significant processing power and access to huge amounts of content and data.

The Burning Question: Who owns the outcome of AI?

In the end, ChatGPT only knows what it knows – and the content that it learns from is likely to have been created by someone (ideally – as we don’t want AI to learn from bad AI!). What we don’t really understand are the unintended consequences of commercialising AI. Will content creators be less willing to share their content? Will we see the emergence of many more walled content gardens? Will blockchain and even NFTs emerge as a way of protecting and proving origin? Will legislation protect content creators or AI engines? If everyone is using AI to create content, will all content start to look more similar (as this will be the stage at which the AI is learning from content created by AI)? And perhaps the biggest question of all – where does the human stop and the machine start?

These questions will need answers and they are not going to be answered in advance. Whatever the answers might be, we are definitely at the beginning of the next big shift in human-technology relations. Microsoft wants to accelerate this shift. For a technology analyst, 2023 just got a lot more interesting!