“AI Guardrails” are often used not only to get AI programs on track, but also to accelerate AI investments. Projects and programs that fall within the guardrails should be easy to approve, govern, and manage – whereas those outside of the guardrails require further review by a governance team or approval body. The concept of guardrails is familiar to many tech businesses and is often applied in areas such as cybersecurity, digital initiatives, data analytics, governance, and management.

While guidance on implementing guardrails is common, organisations often leave the task of defining their specifics, including their components and functionalities, to their AI and data teams. To assist with this, Ecosystm has surveyed some leading AI users among our customers to get their insights on the guardrails that can provide added value.

Data Security, Governance, and Bias

- Data Assurance. Has the organisation implemented robust data collection and processing procedures to ensure data accuracy, completeness, and relevance for the purpose of the AI model? This includes addressing issues like missing values, inconsistencies, and outliers.

- Bias Analysis. Does the organisation analyse training data for potential biases – demographic, cultural and so on – that could lead to unfair or discriminatory outputs?

- Bias Mitigation. Is the organisation implementing techniques like debiasing algorithms and diverse data augmentation to mitigate bias in model training?

- Data Security. Does the organisation use strong data security measures to protect sensitive information used in training and running AI models?

- Privacy Compliance. Is the AI opportunity compliant with relevant data privacy regulations (country and industry-specific as well as international standards) when collecting, storing, and utilising data?
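The Data Assurance and Bias Analysis questions above can be partly automated. What follows is a minimal sketch, not a prescribed method: the field names (`age`, `group`, `approved`), the z-score outlier rule, and the helper functions are all illustrative assumptions rather than anything specified by the survey.

```python
from collections import Counter
from statistics import mean, pstdev


def missing_rate(rows, field):
    """Share of rows where `field` is absent or None (data completeness)."""
    return sum(1 for r in rows if r.get(field) is None) / len(rows)


def outliers(rows, field, z=3.0):
    """Values more than `z` population standard deviations from the mean.

    The z-score rule is an assumed, simple outlier test; other rules
    (IQR, domain limits) may suit a given dataset better.
    """
    values = [r[field] for r in rows if r.get(field) is not None]
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        return []
    return [v for v in values if abs(v - mu) / sigma > z]


def positive_rate_by_group(rows, group_field, label_field):
    """Rate of positive labels per group, a first look at demographic skew."""
    totals, positives = Counter(), Counter()
    for r in rows:
        totals[r[group_field]] += 1
        positives[r[group_field]] += 1 if r[label_field] else 0
    return {g: positives[g] / totals[g] for g in totals}


# Hypothetical training records, for illustration only.
sample = [
    {"age": 34, "group": "A", "approved": 1},
    {"age": 29, "group": "A", "approved": 1},
    {"age": None, "group": "B", "approved": 0},
    {"age": 41, "group": "B", "approved": 1},
]
print(missing_rate(sample, "age"))
print(positive_rate_by_group(sample, "group", "approved"))
```

A large gap between groups in the last check does not prove bias on its own, but it is a reasonable trigger for the closer review that the Bias Analysis guardrail asks for.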

Model Development and Explainability

- Explainable AI. Does the organisation use explainable AI (XAI) techniques to understand and explain how its AI models reach their decisions, fostering trust and transparency?

- Fair Algorithms. Are algorithms and models designed with fairness in mind, considering factors like equal opportunity and non-discrimination?

- Rigorous Testing. Does the organisation conduct thorough testing and validation of AI models before deployment, ensuring they perform as intended, are robust to unexpected inputs, and avoid generating harmful outputs?

AI Deployment and Monitoring

- Oversight Accountability. Has the organisation established clear roles and responsibilities for human oversight throughout the AI lifecycle, ensuring human control over critical decisions and mitigation of potential harm?

- Continuous Monitoring. Are there mechanisms to continuously monitor AI systems for performance, bias drift, and unintended consequences, addressing any issues promptly?

- Robust Safety. Can the organisation ensure AI systems are robust and safe, able to handle errors or unexpected situations without causing harm? This includes thorough testing and validation of AI models under diverse conditions before deployment.

- Transparency Disclosure. Is the organisation transparent with stakeholders about AI use, including its limitations, potential risks, and how decisions made by the system are reached?
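One way to make the Continuous Monitoring question concrete is to compare the distribution of a model input or score in production against the distribution seen at training time. The sketch below uses the Population Stability Index (PSI), which is one possible drift measure and an assumption here, not something the survey prescribes. The bin count and the 0.2 alert threshold are commonly quoted rules of thumb and should be treated as tunable assumptions.

```python
import math


def psi(expected, actual, bins=10, eps=1e-6):
    """Population Stability Index between a baseline and a current sample.

    Bins are equal-width over the baseline's range; current values that
    fall outside that range are clamped into the first or last bin.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid zero width for constant data

    def shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # eps keeps empty bins from producing log(0) or division by zero
        return [max(c / len(values), eps) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Hypothetical model scores: training baseline vs. a shifted production week.
baseline = [i / 100 for i in range(100)]
this_week = [0.5 + i / 200 for i in range(100)]

DRIFT_THRESHOLD = 0.2  # assumed rule-of-thumb threshold
if psi(baseline, this_week) > DRIFT_THRESHOLD:
    print("Drift detected: route to the oversight owner for review")
```

Running the same check per demographic group is one way to watch for the bias drift mentioned above, since a shift that affects only one group can be hidden in the overall distribution.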

Other AI Considerations

- Ethical Guidelines. Has the organisation developed and adhered to ethical principles for AI development and use, considering areas like privacy, fairness, accountability, and transparency?

- Legal Compliance. Has the organisation created mechanisms to stay updated on and compliant with relevant legal and regulatory frameworks governing AI development and deployment?

- Public Engagement. What mechanisms are in place to encourage open discussion and engage with the public regarding the use of AI, addressing concerns and building trust?

- Social Responsibility. Has the organisation considered the environmental and social impact of AI systems, including energy consumption, ecological footprint, and potential societal consequences?

Implementing these guardrails requires a comprehensive approach that includes policy formulation, technical measures, and ongoing oversight. It might take a little longer to set up this capability, but in the mid to longer term, it will allow organisations to accelerate AI implementations and drive a culture of responsible AI use and deployment.
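As a rough illustration of how the checklist could speed up approvals, the sketch below routes a proposed AI project either to a fast track or to a governance review, depending on whether every guardrail question is answered "yes". The guardrail identifiers mirror the sixteen items listed above; the `triage` function, the route names, and the all-or-nothing rule are hypothetical choices, and a real governance team may well weight or tier the questions differently.

```python
# Identifiers derived from the guardrail names in this article.
GUARDRAILS = [
    "data_assurance", "bias_analysis", "bias_mitigation", "data_security",
    "privacy_compliance", "explainable_ai", "fair_algorithms",
    "rigorous_testing", "oversight_accountability", "continuous_monitoring",
    "robust_safety", "transparency_disclosure", "ethical_guidelines",
    "legal_compliance", "public_engagement", "social_responsibility",
]


def triage(answers):
    """Return (route, unmet) for a project's guardrail self-assessment.

    `answers` maps guardrail names to True/False; a missing answer is
    treated as unmet so that gaps default to review rather than approval.
    """
    unmet = [name for name in GUARDRAILS if not answers.get(name, False)]
    route = "fast_track" if not unmet else "governance_review"
    return route, unmet


# Hypothetical project that has not yet analysed its training data for bias.
project = {name: True for name in GUARDRAILS}
project["bias_analysis"] = False
print(triage(project))
```

Returning the list of unmet guardrails, rather than only a pass or fail, gives the project team a concrete to-do list and gives the review body a focused agenda.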

2024 has started cautiously for organisations, with many choosing to continue with tech projects that have already been initiated, while waiting for clearer market conditions before starting newer transformation projects. This means that tech providers must continue to refine their market messaging and enhance their service/product offerings to strengthen their market presence in the latter part of the year. Ecosystm analysts present five key considerations for tech providers as they navigate evolving market and customer trends this year.

Navigating Market Dynamics

Continuing Economic Uncertainties. Organisations will focus on ongoing projects and consider expanding initiatives in the latter part of the year. This means that tech providers should maintain visibility and trust with existing clients. They also need to help their customers meet multiple KPIs.

Popularity of Generative AI. For organisations, this will be the time to go beyond the novelty factor and assess practical business outcomes, allied costs, and change management. Tech providers need to include ROI discussions for short-term and mid-term perspectives as organisations move beyond pilots.

Infrastructure Market Disruption. Tech leaders will keep an eye out for advancements and disruptions in the market (likely to originate from the semiconductor sector). The disruptions might require tech vendors to re-assess the infrastructure partner ecosystem.

Need for New Tech Skills. Tech leaders will evaluate Generative AI’s impact on AIOps and IT Architecture, and invest in upskilling for talent retention. Tech providers must prioritise creating user-friendly experiences to make technology accessible to business users. Training and partner enablement will also need a higher focus.

Increased Focus on Governance. Tech leaders will consult tech vendors on how to implement safeguards for data usage, sharing, and cybersecurity. This opens up opportunities in offering governance-related services.

5 Key Considerations for Tech Vendors

#1 Get Ready for the Year of the AI Startup

While many AI companies have been around for years, this will be the year that many of them make a significant play into enterprises in Asia Pacific. This comes at a time when many organisations are attempting to reduce tech debt and simplify their tech architecture.

For these AI startups to succeed, they will need to create watertight business cases, and do a lot of the hard work in pre-integrating their solutions with the larger platforms to reduce the time to value and simplify the systems integration work.

To respond to these emerging threats, existing tech providers will need to not only accelerate their own use of AI in their platforms, but also ramp up the education and promotion of these capabilities.

#2 Lead With Data, Not AI Capabilities

Organisations recognise the need for AI to enhance their workforce, improve customer experience, and automate processes. However, the initial challenge lies in improving data quality, as trust in early AI models hinges on high-quality training data for long-term success.

Tech vendors that can help with data source discovery, metadata analysis, and seamless data pipeline creation will emerge as trusted AI partners. Transformation tools that automate deduplication and quality assurance tasks empower data scientists to focus on high-value work. Automation models like Segment Anything enhance unstructured data labeling, particularly for images. Finally, synthetic data will gain importance as quality sources become scarce.

Tech vendors will be tempted to capitalise on the Generative AI hype, but for the sake of positive early experiences, they should begin with data quality.

#3 Prepare Thoroughly for AI-driven Business Demand

Besides pureplay AI opportunities, AI will drive a renewed and increased interest in data and data management. Tech and service providers can capitalise on this by understanding the larger picture around their clients’ data maturity and governance. Initial conversations around AI can be door openers to bigger, transformational engagements.

Tech vendors should avoid the pitfall of downplaying AI risks. Instead, they should make all efforts to own and drive the conversation with their clients. They need to be forthcoming about their in-house responsible AI guidelines and understand what is happening in AI legislation worldwide (hint: a lot!).

Tech providers must establish strong client partnerships for AI initiatives to succeed. They must address risk and benefit equally to reap the benefits of larger AI-driven transformation engagements.

#4 Converge Network & Security Capabilities

Networking and security vendors will need to develop converged offerings as these two technologies increasingly overlap in the hybrid working era. Organisations are now entering a new phase of maturity as they evolve their remote working policies and invest in tools to regain control. They will require simplified management, increased visibility, and a consistent user experience, wherever employees are located.

There has already been widespread adoption of SD-WAN and now organisations are starting to explore next generation SSE technologies. Procuring these capabilities from a single provider will help to remove complexity from networks as the number of endpoints continues to grow.

Tech providers should take a land and expand approach, getting a foothold with SASE modules that offer rapid ROI. They should focus on SWG and ZTNA deals with an eye to expanding in CASB and FWaaS, as customers gain experience.

#5 Double Down on Your Partner Ecosystem

The IT services market, particularly in Asia Pacific, is poised for significant growth. Factors, including the imperative to cut IT operational costs, the growing complexity of cloud migrations and transformations, change management for Generative AI capabilities, and rising security and data governance needs, will drive increased spending on IT services.

Tech services providers – consultants, SIs, managed services providers, and VARs – will help drive organisations’ tech spend and strategy. This is a good time to review partners, evaluating whether they can take the business forward, or whether there is a need to expand or change the partner mix.

Partner reviews should start with an evaluation of processes and incentives to ensure they foster desired behaviour from customers and partners. Tech vendors should develop a 21st century partner program to improve chances of success.

2024 will be another crucial year for tech leaders – through the continuing economic uncertainties, they will have to embrace transformative technologies and keep an eye on market disruptors such as infrastructure providers and AI startups. Ecosystm analysts outline the key considerations for leaders shaping their organisations’ tech landscape in 2024.

Navigating Market Dynamics

Continuing Economic Uncertainties. Organisations will focus on ongoing projects and consider expanding initiatives in the latter part of the year.

Popularity of Generative AI. This will be the time to go beyond the novelty factor and assess practical business outcomes, allied costs, and change management.

Infrastructure Market Disruption. Keeping an eye out for advancements and disruptions in the market (likely to originate from the semiconductor sector) will define vendor conversations.

Need for New Tech Skills. Generative AI will influence multiple tech roles, including AIOps and IT Architecture. Retaining talent will depend on upskilling and reskilling.

Increased Focus on Governance. Tech vendors will guide tech leaders on how to implement safeguards for data usage, sharing, and cybersecurity.

5 Key Considerations for Tech Leaders

#1 Accelerate and Adapt: Streamline IT with a DevOps Culture

Over the next 12-18 months, advancements in AI, machine learning, automation, and cloud-native technologies will be vital in leveraging scalability and efficiency. Modernisation is imperative to boost responsiveness, efficiency, and competitiveness in today’s dynamic business landscape.

The continued pace of disruption demands that organisations modernise their applications portfolios with agility and purpose. Legacy systems constrained by technical debt drag down velocity, impairing the ability to deliver new innovative offerings and experiences customers have grown to expect.

Prioritising modernisation initiatives that align with key value drivers is critical. Technology leaders should empower development teams to move beyond outdated constraints and swiftly deploy enhanced applications, microservices, and platforms.

#2 Empowering Tomorrow: Spring Clean Your Tech Legacy for New Leaders

Modernising legacy systems is a strategic and inter-generational shift that goes beyond simple technical upgrades. It requires transformation through the process of decomposing and replatforming systems – developed by previous generations – into contemporary services and signifies a fundamental realignment of your business with the evolving digital landscape of the 21st century.

The essence of this modernisation effort is multifaceted. It not only facilitates the integration of advanced technologies but also significantly enhances business agility and drives innovation. It is an approach that prepares your organisation for impending skill gaps, particularly as the older workforce begins to retire over the next decade. Additionally, it provides a valuable opportunity to thoroughly document, reevaluate, and improve business processes. This ensures that operations are not only efficient but also aligned with current market demands, contemporary regulatory standards, and the changing expectations of customers.

#3 Employee Retention: Consider the Strategic Role of Skills Acquisition

The agile, resilient organisation needs to be able to respond at pace to any threat or opportunity it faces. Some of this ability to respond will be related to technology platforms and architectures, but it will be the skills of employees that will dictate the pace of reform. Employee attrition rates will continue to decline in 2024 – but the decline will be driven by skills acquisition, not location of work.

Organisations who offer ongoing staff training – recognising that their business needs new skills to become a 21st century organisation – are the ones who will see increasing rates of employee retention and happier employees. They will also be the ones who offer better customer experiences, driven by motivated employees who are committed to their personal success, knowing that the organisation values their performance and achievements.

#4 Next-Gen IT Operations: Explore Gen AI for Incident Avoidance and Predictive Analysis

The integration of Generative AI in IT Operations signifies a transformative shift from the automation of basic tasks to advanced functions like incident avoidance and predictive analysis. Having initially automated routine tasks, Generative AI has evolved to proactively avoid incidents by analysing historical data and current metrics. This shift from reactive to proactive management will be crucial for maintaining uninterrupted business operations and enhancing application reliability.

Predictive analysis provides insight into system performance and user interaction patterns, empowering IT teams to optimise applications pre-emptively, enhancing efficiency and user experience. It also helps organisations meet sustainability goals through accurate capacity planning and resource allocation, while ensuring that business applications scale effectively to meet demand.
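To give a flavour of what pre-emptive capacity planning means in practice, the sketch below fits a straight-line trend to a daily utilisation history and estimates how many days remain before a limit is crossed. This is a deliberately simple least-squares stand-in, not a Generative AI technique; the function name, the sample figures, and the 70% limit are all assumptions made for illustration.

```python
def days_until_limit(history, limit):
    """Estimate days until a metric crosses `limit`, using a linear trend.

    `history` is a list of equally spaced (e.g. daily) readings, oldest
    first. Returns None when the trend is flat or falling, and 0.0 when
    the fitted trend is already at or above the limit.
    """
    n = len(history)
    if n < 2:
        return None
    mean_x = (n - 1) / 2
    mean_y = sum(history) / n
    # Ordinary least-squares slope and intercept over x = 0 .. n-1
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(history))
    sxx = sum((x - mean_x) ** 2 for x in range(n))
    slope = sxy / sxx
    if slope <= 0:
        return None
    intercept = mean_y - slope * mean_x
    crossing_x = (limit - intercept) / slope
    return max(crossing_x - (n - 1), 0.0)


# Hypothetical storage utilisation (%) over four days, with a 70% limit.
print(days_until_limit([50, 52, 54, 56], limit=70))
```

An estimate like this lets an operations team schedule extra capacity ahead of time instead of reacting to an outage; production tooling would typically also account for seasonality and uncertainty in the forecast.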

#5 Expanding Possibilities: Incorporate AI Startups into Your Portfolio

While many of the AI startups have been around for over five years, this will be the year they come into your consciousness and emerge as legitimate solutions providers to your organisation. And it comes at a difficult time for you!

Most tech leaders are looking to reduce technical debt – looking to consolidate their suppliers and simplify their tech architecture. Considering AI startups will mean a shift back to more rather than fewer tech suppliers; a different sourcing strategy; more focus on integration and ongoing management of the solutions; and a more complex tech architecture.

Meeting business requirements will mean that business cases need to be watertight – often the value will need to be delivered before a contract has been signed.

In our previous Ecosystm Insights, Ecosystm Principal Advisor, Gerald Mackenzie, highlighted the key drivers for boosting ESG maturity and the need to transition from standalone ESG projects to integrating ESG goals into organisational strategy and operations.

This shift can be difficult, requiring an alignment of ESG objectives with broader strategic aims and using organisational capabilities effectively. The solution involves prioritising essential goals, knitting them into overall business strategy, quantifying success metrics, and establishing incentives and governance for effective execution.

The benefits are proven and significant: stronger Customer and Employee Value Propositions, a better bottom line, an improved risk profile, and more attractive enterprise valuations for investors and lenders.

According to Gerald, here are 5 things to keep in mind when starting on an ESG journey.

In an era marked by heightened global awareness of environmental, social, and governance (ESG) issues, organisations find themselves at a crossroads where profitability converges with responsibility. The imperative to take resolute action on ESG fronts is underscored by a compelling array of statistics and evidence that highlight the profound impact of these considerations on long-term success.

A 2020 McKinsey report revealed that executives and investors value companies with robust ESG performance around 10% higher in valuations than laggards. Equally pivotal, workplace diversity is now recognised as a strategic advantage; a study in the Harvard Business Review finds that companies with above-average total diversity had both 19% higher innovation revenues and 9% higher EBIT margins, on average. Against this backdrop, organisations must recognise that embracing ESG principles is not merely an ethical gesture but a strategic imperative that safeguards resilience, reputation, and enduring financial prosperity.

The data from the ongoing Ecosystm State of ESG Adoption study was used to evaluate the status and maturity of organisations’ ESG strategy and implementation progress. A diverse representation across industries such as Financial Services, Manufacturing, and Retail & eCommerce, as well as from roles across the organisation has helped us with insights and an understanding of where organisations stand in terms of the maturity of their ESG strategy and implementation efforts.

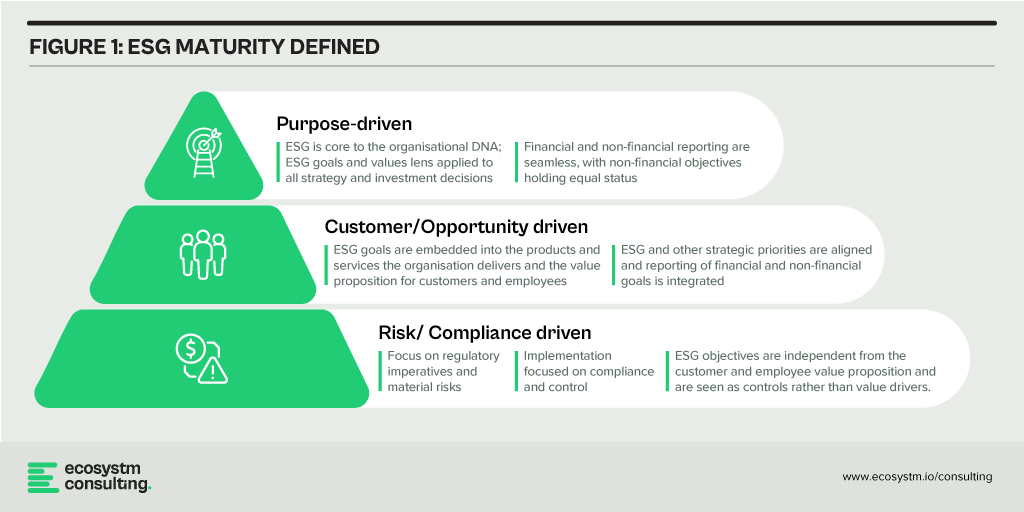

A Tailored Approach to Improve ESG Maturity

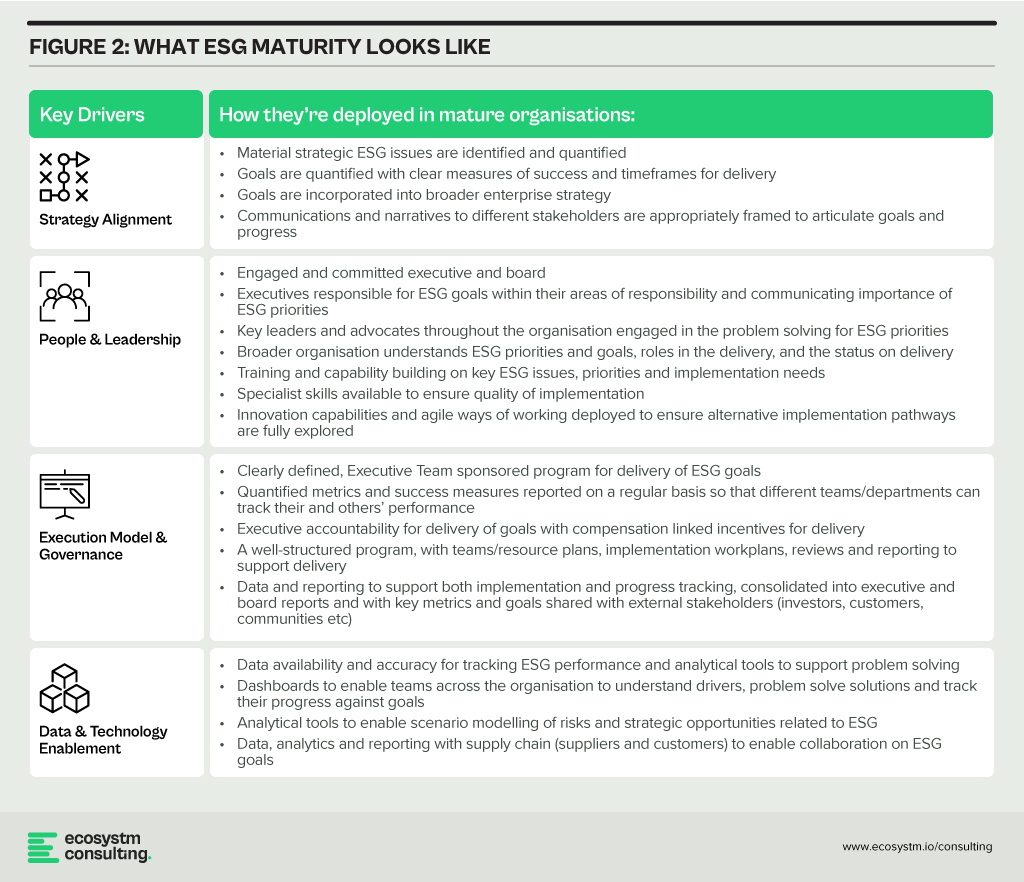

Ecosystm assists clients in driving greater impact through their ESG adoption. Our tools evaluate an organisation’s aspirations and roadmaps using a maturity model, along with a series of practical drivers that enhance ESG response maturity. The maturity of an organisation’s approach to ESG tends to progress from a reactive, or risk/compliance-based focus, to a customer or opportunity-driven approach, to a purpose-led approach that is focused on embedding ESG into the core culture of the organisation. Our advisory, research and consulting offerings are customised to the transitions organisations are seeking to make in their maturity levels over time.

Within the maturity framework outlined above, Ecosystm has identified the key organisational drivers to improve maturity and adoption. The Ecosystm ESG Consulting offerings are configured to both support the development of ESG strategy and the delivery and ‘story telling’ around ESG programs based on the goals of the customer (maturity aspiration) and the gaps they need to close to deliver the aspiration.

Key Findings of the Ecosystm State of ESG Study

89% of respondents self-reported that their organisation had an ESG strategy; however, a notable 60% also identified that a lack of alignment of sustainability goals to enterprise strategy was a key issue in implementation. This reflects many of the client discussions we’ve had, where customers share that ESG goals that have not been fully tested against other organisational priorities can create tensions and make it difficult to solve trade-offs across the organisation during implementation.

People & Leadership/Execution & Governance

Capabilities are still emerging. 40% of respondents mentioned that a lack of a governance framework for ESG was a barrier to adoption, and 56% mentioned that immature metrics and reporting slowed adoption. 64% of respondents also mentioned a lack of specialised resources as a key barrier to ESG adoption.

In our discussions with customers, we understand that there is good support for ESG across organisations, but there needs to be a simple narrative compelling them to action on a few clearly articulated priorities, a clear mandate from senior leadership and credible resourcing and governance to ensure follow through.

Data and Technology Enablement

There is a strong opportunity for improvement. “We can’t manage what we cannot measure” has been the common refrain from the clients we have spoken to and the survey reflected this. Only 47% of respondents say that preparing data, analytics, reporting, and metrics for internal consumption is a priority for their tech teams.

ESG is rapidly emerging as a key priority for customers, investors, talent, and other stakeholders who seek a comprehensive and genuine commitment from the organisations they interact with. Successfully determining the right priorities and effectively mobilising your organisation and external collaborators for implementation are pivotal. It’s crucial to acknowledge the intricacy and extent of effort needed for this endeavour.

With our timely research findings complementing our ESG maturity and implementation frameworks, analyst insights and consulting support, Ecosystm is well-positioned to help you to navigate your journey to ESG maturity.

As an industry, the tech sector tends to jump on keywords and terms – and sometimes reshapes their meaning and intention. “Sustainable” is one of those terms. Technology vendors are selling (allegedly!) “sustainable software/hardware/services/solutions” – in fact, the focus on “green” or “zero carbon” or “recycled” or “circular economy” is increasing exponentially at the moment. And that is good news – as I mentioned in my previous post, we need to significantly reduce greenhouse gas emissions if we want a future for our kids. But there is a significant disconnect between the way tech vendors use the word “sustainable” and the way it is used in boardrooms and senior management teams of their clients.

Defining Sustainability

For organisations, Sustainability is a broad business goal – in fact for many, it is the over-arching goal. A sustainable organisation operates in a way that balances economic, social, and environmental (ESG) considerations. Rather than focusing solely on profits, a sustainable organisation aims to meet the needs of the present without compromising the ability of future generations to meet their own needs.

This is what building a “Sustainable Organisation” typically involves:

Economic Sustainability. The organisation must be financially stable and operate in a manner that ensures long-term economic viability. It doesn’t just focus on short-term profits but invests in long-term growth and resilience.

Social Sustainability. This involves the organisation’s responsibility to its employees, stakeholders, and the wider community. A sustainable organisation will promote fair labour practices, invest in employee well-being, foster diversity and inclusion, and engage in ethical decision-making. It often involves community engagement and initiatives that support societal growth and well-being.

Environmental Sustainability. This facet includes the responsible use of natural resources and minimising negative impacts on the environment. A sustainable organisation seeks to reduce its carbon footprint, minimise waste, enhance energy efficiency, and often supports or initiates activities that promote environmental conservation.

Governance and Ethical Considerations. Sustainable organisations tend to have transparent and responsible governance. They follow ethical business practices, comply with laws and regulations, and foster a culture of integrity and accountability.

Security and Resilience. Sustainable organisations have the ability to thwart bad actors – and in the situation that they are breached, to recover from these breaches quickly and safely. Sustainable organisations can survive cybersecurity incidents and continue to operate when breaches occur, with the least impact.

Long-Term Focus. Sustainability often requires a long-term perspective. By looking beyond immediate gains and considering the long-term impact of decisions, a sustainable organisation can better align its strategies with broader societal goals.

Stakeholder Engagement. Understanding and addressing the needs and concerns of different stakeholders (including employees, customers, suppliers, communities, and shareholders) is key to sustainability. This includes open communication and collaboration with these groups to foster relationships based on trust and mutual benefit.

Adaptation and Innovation. The organisation is not static and recognises the need for continual improvement and adaptation. This might include innovation in products, services, or processes to meet evolving sustainability standards and societal expectations.

Alignment with the United Nations’ Sustainable Development Goals (UNSDGs). Many sustainable organisations align their strategies and operations with the UNSDGs which provide a global framework for addressing sustainability challenges.

Organisations Appreciate Precise Messaging

A sustainable organisation is one that integrates economic, social, and environmental considerations into all aspects of its operations. It goes beyond mere compliance with laws to actively pursue positive impacts on people and the planet, maintaining a balance that ensures long-term success and resilience.

These factors are all top of mind when business leaders, boards and government agencies use the word “sustainable”. Helping organisations meet their emission reduction targets is a good starting point – but it is a long way from all that businesses need to become sustainable organisations.

Tech providers need to reconsider their use of the term “sustainable” – unless their solution or service is helping organisations meet all of the features outlined above. Using specific language would be favoured by most customers – telling them how the solution will help them reduce greenhouse gas emissions, meet compliance requirements for CO2 and/or waste reduction, and save money on electricity and/or management costs – these are all likely to get the sale over the line faster than a broad “sustainability” messaging will.

Technology is reshaping the Public Sector worldwide, optimising operations, improving citizen services, and fostering data-driven decision-making. Government agencies are also embracing innovation for effective governance in this digital era.

Public sector organisations worldwide recognise the need for swift and agile interventions. With citizen expectations resembling those of commercial customers, public sector organisations face mounting pressure to break down the barriers to provide seamless service experiences.

Read on to find out how public sector organisations in countries such as Australia, Vietnam, the Philippines, South Korea, and Singapore are innovating to stay ahead of the curve; and what Ecosystm VP Consulting, Peter Carr sees as the Future of Public Sector.

It is not hyperbole to state that AI is on the cusp of having significant implications for society, business, economies, governments, individuals, cultures, politics, the arts, manufacturing, customer experience… I think you get the idea! We cannot overstate the impact that AI will have on society. In times gone by, businesses tested ideas, new products, or services with small customer segments before they went live. But with AI we are all part of this experiment on the impacts of AI on society – its benefits, use cases, weaknesses, and threats.

What seemed preposterous just six months ago is not only possible but EASY! Do you want a virtual version of yourself, a friend, your CEO, or your deceased family member? Sure – just feed the data. Will succession planning be more about recording all conversations and interactions with an executive so their avatar can make the decisions when they leave? Why not? How about you turn the thousands of hours of recorded customer conversations with your contact centre team into a virtual contact centre team? Your head of product can present in multiple countries in multiple languages, tailored to the customer segments, industries, geographies, or business needs at the same moment.

AI has the potential to create digital clones of your employees, it can spread fake news as easily as real news, it can be used for deception as easily as for benefit. Is your organisation prepared for the social, personal, cultural, and emotional impacts of AI? Do you know how AI will evolve in your organisation?

When we focus on the future of AI, we often interview AI leaders, business leaders, futurists, and analysts. I haven’t seen enough focus on psychologists, sociologists, historians, academics, counselors, or even regulators! The Internet and social media changed the world more than we ever imagined – at this stage, it looks like these two were just a rehearsal for the real show – Artificial Intelligence.

Lack of Government or Industry Regulation Means You Need to Self-Regulate

These rapid developments – and the notable silence from governments, lawmakers, and regulators – make the requirement for an AI Ethics Policy for your organisation urgent! Even if you have one, it probably needs updating, as the scenarios that AI can operate within are growing and changing literally every day.

- For example, your customer service team might want to create a virtual customer service agent from a real person. What is the policy on this? How will it impact the person?

- Your marketing team might be using ChatGPT or Bard for content creation. Do you have a policy specifically for the creation and use of content using assets your business does not own?

- What data is acceptable to be ingested by a public Large Language Model (LLM)? Are you governing data at creation and publishing to ensure these policies are met?

- With the impending public launch of Microsoft’s Co-Pilot AI service, what data can be ingested by Co-Pilot? How are you governing the distribution of the insights that come out of that capability?

If policies are not put in place, data is not tagged, and staff are not trained before using a tool such as Co-Pilot, your business is likely to break some privacy or employment laws – on the very first day!

What do the LLMs Say About AI Ethics Policies?

So where do you go when looking for an AI Ethics policy? ChatGPT and Bard of course! I asked the two for a modern AI Ethics policy.

You can read what they generated in the graphic below.

I personally prefer the ChatGPT4 version as it is more prescriptive. At the same time, I would argue that MOST of the AI tools that your business has access to today don’t meet all of these principles. And while they are tools and the ethics should dictate the way the tools are used, with AI you cannot always separate the process and outcome from the tool.

For example, a tool that is inherently designed to learn an employee’s character, style, or mannerisms cannot be unbiased if it is based on a biased opinion (and humans have biases!).

LLMs take data, content, and insights created by others, and give it to their customers to reuse. Are you happy with your website being used as a tool to train a startup on the opportunities in the markets and customers you serve?

By making content public, you acknowledge the risk of others using it. But at least they visited your website or app to consume it. Not anymore…

A Policy is Useless if it Sits on a Shelf

Your AI ethics policy needs to be more than a published document. It should be the beginning of a conversation across the entire organisation about the use of AI. Your employees need to be trained in the policy. It needs to be part of the culture of the business – particularly as low and no-code capabilities push these AI tools, practices, and capabilities into the hands of many of your employees.

Nearly every business leader I interview mentions that their organisation is an “intelligent, data-led, business.” What is the role of AI in driving this intelligent business? If being data-driven and analytical is in the DNA of your organisation, soon AI will also be at the heart of your business. You might think you can delay your investments to get it right – but your competitors may be ahead of you.

So, as you jump head-first into the AI pool, start to create, improve and/or socialise your AI Ethics Policy. It should guide your investments, protect your brand, empower your employees, and keep your business resilient and compliant with legacy and new legislation and regulations.

Data & AI initiatives are firmly at the core of any organisation’s tech-led transformation efforts. Businesses today realise the value of real-time data insights to deliver the agility that is required to succeed in today’s competitive, and often volatile, market.

But organisations continue to struggle with their data & AI initiatives for a variety of reasons. Organisations in ASEAN report some common challenges in implementing successful data & AI initiatives.

Here are 5 insights to build scalable AI.

- Data Access a Key Stumbling Block. Many organisations find that they no longer need to rely on centralised data repositories.

- Organisations Need Data Creativity. A true data-first organisation derives value from their data & AI investments across the entire organisation, cross-leveraging data.

- Governance Not Built into Organisational Psyche. A data-first organisation needs all employees to have a data-driven mindset. This can only be driven by clear guidelines that are laid out early on and adhered to by data generators, managers, and consumers.

- Lack of End-to-End Data Lifecycle Management. It is critical to have observability, intelligence, and automation built into the entire data lifecycle.

- Democratisation of Data & AI Should Be the Goal. The true value of data & AI solutions will be fully realised when the people who benefit from the solutions are the ones managing the solutions and running the queries that will help them deliver better value to the business.
