Despite financial institutions’ unwavering efforts to safeguard their customers, scammers continually evolve to exploit advancements in technology. For example, the number of scams and cybercrimes reported to the police in Singapore increased by a staggering 49.6% to 50,376 at an estimated cost of USD 482M in 2023. GenAI represents the latest challenge to the industry, providing fraudsters with new avenues for deception.

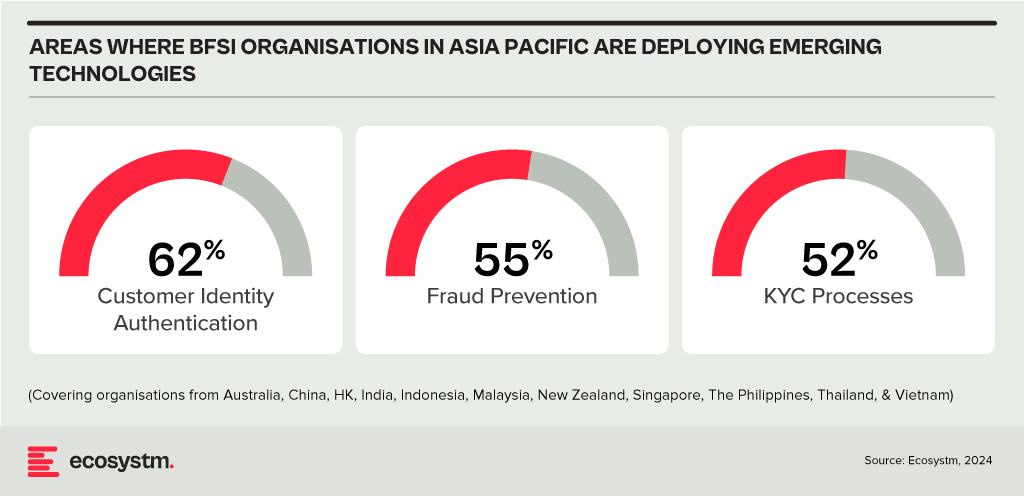

Ecosystm research shows that BFSI organisations in Asia Pacific are spending more on technologies to authenticate customer identity and prevent fraud than they are on their Know Your Customer (KYC) processes.

The Evolution of the Threat Landscape in BFSI

Synthetic Identity Fraud. This involves the creation of fictitious identities by combining real and fake information, as distinct from traditional identity theft, where personal data is stolen. These synthetic identities are then exploited to open fraudulent accounts, obtain credit, or engage in financial crimes, often evading detection because they are not associated with real individuals. The Deloitte Centre for Financial Services predicts that synthetic identity fraud will result in USD 23B in losses by 2030. Synthetic fraud poses significant challenges for financial institutions and law enforcement agencies, especially now that GenAI can be used to produce realistic documents that blend genuine and false information, undermining Know Your Customer (KYC) protocols.

AI-Enhanced Phishing. Ecosystm research reveals that in Asia Pacific, 71% of customer interactions in BFSI occur across multiple digital channels, including mobile apps, emails, messaging, web chats, and conversational AI. In fact, 57% of organisations plan to further improve customer self-service capabilities to meet the demand for flexible and convenient service delivery. The proliferation of digital channels brings with it an increased risk of phishing attacks.

While these organisations continue to educate their customers on how to secure their accounts in a digital world, GenAI poses an escalating threat here as well. Phishing schemes will employ widely available LLMs to generate convincing text and even images. For many potential victims, misspellings and strangely worded appeals are the only hint that an email from their bank is not what it seems. The maturing of deepfake technology will also make it possible for malicious agents to create personalised voice and video attacks.

Identity Fraud Detection and Prevention

Although fraudsters are exploiting every new vulnerability, financial organisations also have new tools to protect their customers. Organisations should build a layered defence to prevent increasingly sophisticated attempts at fraud.

- Behavioural analytics. Using machine learning, financial organisations can differentiate between standard activities and suspicious behaviour at the account level. Data that can be analysed includes purchase patterns, unusual transaction values, VPN use, browser choice, log-in times, and impossible travel. Anomalies can be flagged, and additional security measures initiated to stem the attack.

- Passive authentication. Accounts can be protected even before password or biometric authentication by analysing additional data, such as phone number and IP address. This approach can be enhanced by comparing the data against databases populated with the details of suspicious actors.

- SIM swap detection. SMS-based MFA is vulnerable to SIM swap attacks where a customer’s phone number is transferred to the fraudster’s own device. This can be prevented by using an authenticator app rather than SMS. Alternatively, SIM swap history can be detected before sending one-time passwords (OTPs).

- Breached password detection. Although customers are strongly discouraged from reusing passwords across sites, some inevitably will. By employing a service that maintains a database of credentials leaked during third-party breaches, it is possible to compare these with active customer passwords and initiate a reset.

- Stronger biometrics. Phone-based fingerprint recognition has helped financial organisations safeguard against fraud and simplify the authentication experience. Advances in biometrics continue, with recognition for faces, retina, iris, palm print, and voice making multimodal biometric protection possible. Liveness detection will grow in importance to combat AI-generated content.

- Step-up validation. Authentication requirements can be differentiated according to risk level. Lower-risk activities, such as a balance check or an internal transfer, may only require minimal authentication, while higher-risk ones, like international or cryptocurrency transactions, may require a step up in validation. When anomalous behaviour is detected, even greater levels of security can be initiated.
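The behavioural, SIM swap, and step-up checks in the list above can be combined into a single risk decision. The following is a minimal Python sketch of that idea, not a production design. Every name, threshold, and authentication level in it (`Login`, `required_auth`, the 900 km/h speed limit, the 72-hour SIM swap cooling-off period, the set of high-risk actions) is a hypothetical assumption chosen for illustration, and the impossible-travel check stands in for the much richer machine learning models a real behavioural analytics system would use.

```python
from dataclasses import dataclass
from math import asin, cos, radians, sin, sqrt
from typing import Optional

# Hypothetical thresholds, chosen only for illustration.
MAX_PLAUSIBLE_SPEED_KMH = 900.0      # roughly airliner cruising speed
SIM_SWAP_COOLING_OFF_HOURS = 72.0    # distrust SMS OTPs shortly after a SIM swap
HIGH_RISK_ACTIONS = {"international_transfer", "crypto_purchase"}


@dataclass
class Login:
    lat: float     # latitude in degrees
    lon: float     # longitude in degrees
    hours: float   # timestamp expressed in hours


def distance_km(a: Login, b: Login) -> float:
    """Great-circle distance between two logins (haversine formula)."""
    dlat = radians(b.lat - a.lat)
    dlon = radians(b.lon - a.lon)
    h = sin(dlat / 2) ** 2 + cos(radians(a.lat)) * cos(radians(b.lat)) * sin(dlon / 2) ** 2
    return 2 * 6371.0 * asin(min(1.0, sqrt(h)))


def impossible_travel(prev: Login, curr: Login) -> bool:
    """True if covering the distance between logins would need an implausible speed."""
    elapsed = curr.hours - prev.hours
    dist = distance_km(prev, curr)
    if elapsed <= 0:
        return dist > 0
    return dist / elapsed > MAX_PLAUSIBLE_SPEED_KMH


def required_auth(action: str, prev: Login, curr: Login,
                  hours_since_sim_swap: Optional[float] = None) -> str:
    """Map simple risk signals to an authentication level (step-up validation)."""
    score = int(action in HIGH_RISK_ACTIONS) + int(impossible_travel(prev, curr))
    if score == 0:
        return "password_only"
    if score >= 2:
        return "manual_review"
    # One risk signal: step up, but avoid SMS if the SIM was swapped recently.
    recent_swap = (hours_since_sim_swap is not None
                   and hours_since_sim_swap < SIM_SWAP_COOLING_OFF_HOURS)
    return "authenticator_app" if recent_swap else "sms_otp"
```

For example, a balance check from the customer's usual location would need only a password under these assumptions, while an international transfer requested an hour after a login on another continent would be routed to manual review. Keeping the low-risk path free of extra prompts is what lets the layered defence stay in the background.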

Recommendations

- Reduce friction. While it may be tempting to implement heavy-handed approaches to prevent fraud, it is also important to minimise friction in the authentication system. Frustrated users may abandon services or find risky ways to circumvent security. An effective layered defence should act in the background to prevent attackers from getting close.

- AI Phishing Awareness. Even the savviest of customers could fall prey to advanced phishing attacks that use GenAI. Social engineering at scale becomes more feasible with each advance in AI. Monitor emerging global phishing activities and remind customers to be ever vigilant of more polished and personalised phishing attempts.

- Deploy an authenticator app. Consider shifting away from SMS OTPs as an MFA method and implementing either a standalone authenticator app or an authenticator embedded in the financial app instead.

- Integrate authentication with fraud analytics. Select an authentication provider that can integrate its offering with analytics to identify fraud or unusual behaviour during account creation, log in, and transactions. The two systems should work in tandem.

- Take a zero-trust approach. Protecting both customers and employees is critical, particularly in the hybrid work era. Implement zero-trust tools to prevent employees from falling victim to malicious attacks and to minimise the damage if they do.
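To illustrate why an authenticator app is not exposed to SIM swaps in the way SMS OTPs are, the sketch below shows how such apps commonly derive codes: a time-based one-time password (TOTP, RFC 6238) built on HOTP (RFC 4226). The code is computed locally from a shared secret and the current time, so nothing is sent over the phone network. This is a minimal Python sketch using only the standard library. The function names and the one-step drift window are illustrative assumptions, and a real deployment would also need secure secret provisioning, rate limiting, and replay protection.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) using HMAC-SHA-1."""
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # dynamic truncation: low 4 bits pick the offset
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, at: Optional[float] = None,
         step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238): HOTP over the time-step counter."""
    now = time.time() if at is None else at
    return hotp(secret, int(now // step), digits)


def verify(secret: bytes, submitted: str, at: Optional[float] = None,
           step: int = 30, window: int = 1) -> bool:
    """Accept codes from the current step and `window` steps either side (clock drift)."""
    now = time.time() if at is None else at
    return any(
        hmac.compare_digest(totp(secret, now + drift * step, step), submitted)
        for drift in range(-window, window + 1)
    )
```

With the RFC 6238 test secret `b"12345678901234567890"`, `totp(secret, at=59, digits=8)` yields `94287082`, which matches the published test vector.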

In the Ecosystm Predicts: Building an Agile & Resilient Organisation: Top 5 Trends in 2024, Principal Advisor Darian Bird said, “The emergence of Generative AI combined with the maturing of deepfake technology will make it possible for malicious agents to create personalised voice and video attacks.” Darian highlighted that this democratisation of phishing, facilitated by professional-sounding prose in various languages and tones, poses a significant threat to potential victims who rely on misspellings or oddly worded appeals to detect fraud. As we see more of these attacks and social engineering attempts, it is important to improve defence mechanisms and increase awareness.

Understanding Deepfake Technology

The term Deepfake is a combination of the words ‘deep learning’ and ‘fake’. Deepfakes are AI-generated media, typically in the form of images, videos, or audio recordings. This synthetic content is designed to appear genuine, often by manipulating faces and voices in a highly realistic manner. Deepfake technology has come into the spotlight because of its potential for creating convincing yet fraudulent content that blurs the line between real and fake.

Deepfake algorithms are powered by Generative Adversarial Networks (GANs) and continuously enhance synthetic content to closely resemble real data. Through iterative training on extensive datasets, these algorithms refine features such as facial expressions and voice inflections, ensuring a seamless emulation of authentic characteristics.

Deepfakes Becoming Increasingly Convincing

Hyper-realistic deepfakes, undetectable to the human eye and ear, have become a huge threat to the financial and technology sectors. Deepfake technology has become highly convincing, blurring the line between real and fake content. One of the early examples of a successful deepfake fraud was when a UK-based energy company lost USD 243k through a deepfake audio scam in 2019, where scammers mimicked the voice of their CEO to authorise an illegal fund transfer.

Deepfakes have evolved from audio simulations to highly convincing video manipulations where faces and expressions are altered in real-time, making it hard to distinguish between real and fake content. In 2022, for instance, a deepfake video of Elon Musk was used in a crypto scam that resulted in a loss of about USD 2 million for US consumers. This year, a multinational company in Hong Kong lost over USD 25 million when an employee was tricked into sending money to fraudulent accounts after a deepfake video call by what appeared to be his colleagues.

Regulatory Responses to Deepfakes

Countries worldwide are responding to the challenges posed by deepfake technology through regulations and awareness campaigns.

- Singapore’s Online Criminal Harms Act, which will come into effect in 2024, will empower authorities to order individuals and Internet service providers to remove or block criminal content, including deepfakes used for malicious purposes.

- The UAE National Programme for Artificial Intelligence released a deepfake guide to educate the public about both harmful and beneficial applications of this technology. The guide categorises fake content into shallow and deep fakes, providing methods to detect deepfakes using AI-based tools, with a focus on promoting positive uses of advanced technologies.

- The proposed EU AI Act aims to regulate deepfakes by imposing transparency requirements on creators, mandating them to disclose when content has been artificially generated or manipulated.

- South Korea passed a law in 2020 banning the distribution of harmful deepfakes. Offenders could be sentenced to up to five years in prison or fined up to USD 43k.

- In the US, states like California and Virginia have passed laws against deepfake pornography, while federal bills like the DEEP FAKES Accountability Act aim to mandate disclosure and counter malicious use, highlighting the diverse global efforts to address the multifaceted challenges of deepfake regulation.

Detecting and Protecting Against Deepfakes

Detecting deepfakes becomes increasingly challenging as technology advances. Several methods, sometimes used in conjunction, are needed to detect a convincing deepfake. These include visual inspection that focuses on anomalies, metadata analysis to examine clues about authenticity, forensic analysis for pattern and audio examination, and machine learning that uses algorithms trained on real and fake video datasets to classify new videos.

However, identifying deepfakes requires sophisticated technology that many organisations may not have access to. This heightens the need for robust cybersecurity measures. Deepfakes have driven an increase in convincing and successful phishing and spear-phishing attacks, and cyber leaders need to double down on cyber practices.

Defences can no longer depend on spotting these attacks. They require a multi-pronged approach that combines cyber technologies, incident response, and user education.

Preventing access to users. By employing anti-spoofing measures, organisations can safeguard their email addresses from exploitation by fraudulent actors. Simultaneously, minimising access to readily available information, particularly on websites and social media, reduces the chance of spear-phishing attempts. This includes educating employees about the implications of sharing personal information and establishing clear digital footprint policies. Implementing email filtering mechanisms, whether at the server or device level, helps intercept suspicious emails; the filtering rules need to be constantly evaluated using techniques such as IP filtering and attachment analysis.
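The filtering described above can be sketched as a simple rule chain. The Python example below is a minimal illustration rather than a real mail gateway. It assumes the receiving mail server has already evaluated SPF, DKIM, and DMARC and recorded the verdicts in an `Authentication-Results` header (the header defined in RFC 8601). The blocklist, the risky attachment extensions, and the function names are hypothetical placeholders that an organisation would replace with its own, regularly reviewed rules.

```python
import re
from email.message import EmailMessage
from typing import Optional

# Hypothetical rule data for illustration only.
BLOCKED_IPS = {"203.0.113.7"}  # placeholder from a documentation address range
RISKY_EXTENSIONS = (".exe", ".js", ".scr", ".docm", ".xlsm")


def auth_result(msg: EmailMessage, mechanism: str) -> Optional[str]:
    """Return the recorded verdict (e.g. 'pass', 'fail') for spf, dkim, or dmarc."""
    pattern = rf"\b{re.escape(mechanism)}=(\w+)"
    for header in msg.get_all("Authentication-Results", []):
        match = re.search(pattern, str(header), re.IGNORECASE)
        if match:
            return match.group(1).lower()
    return None


def classify(msg: EmailMessage, sender_ip: str) -> str:
    """Apply IP filtering, anti-spoofing, and attachment checks in order."""
    if sender_ip in BLOCKED_IPS:
        return "reject"            # IP filtering
    if auth_result(msg, "dmarc") == "fail":
        return "quarantine"        # likely spoofed sender domain
    for part in msg.iter_attachments():
        filename = (part.get_filename() or "").lower()
        if filename.endswith(RISKY_EXTENSIONS):
            return "quarantine"    # attachment analysis (by extension only)
    return "deliver"
```

Checking attachments by file extension alone is easy to evade, so in practice this step would be backed by content inspection or sandboxing. The point of the sketch is that each rule is explicit and can be re-evaluated as attack patterns change.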

Employee awareness and reporting. There are many ways that organisations can increase awareness in employees starting from regular training sessions to attack simulations. The usefulness of these sessions is often questioned as sometimes they are merely aimed at ticking off a compliance box. Security leaders should aim to make it easier for employees to recognise these attacks by familiarising them with standard processes and implementing verification measures for important email requests. This should be strengthened by a culture of reporting without any individual blame.

Securing against malware. Malware is often distributed through these attacks, making it crucial to ensure devices are well-configured and equipped with effective endpoint defences to prevent malware installation, even if users inadvertently click on suspicious links. Specific defences may include disabling macros and limiting administrator privileges to prevent accidental malware installation. Strengthening authentication and authorisation processes is also important, with measures such as multi-factor authentication, password managers, and alternative authentication methods like biometrics or smart cards. Zero trust and least privilege policies help protect organisation data and assets.

Detection and Response. A robust security logging system is crucial, whether through off-the-shelf monitoring tools, managed services, or dedicated monitoring teams. What is more important is that the monitoring capabilities are regularly updated. Additionally, a well-defined incident response plan can swiftly mitigate post-incident harm. This requires clear procedures for various incident types and designated personnel for executing them, such as initiating password resets or removing malware. Organisations should ensure that users are informed about reporting procedures, considering potential communication challenges in the event of device compromise.

Conclusion

The rise of deepfakes has brought forward the need for a collaborative approach. Policymakers, technology companies, and the public must work together to address the challenges posed by deepfakes. This collaboration is crucial for building better detection technologies, establishing stronger laws, and promoting media literacy.

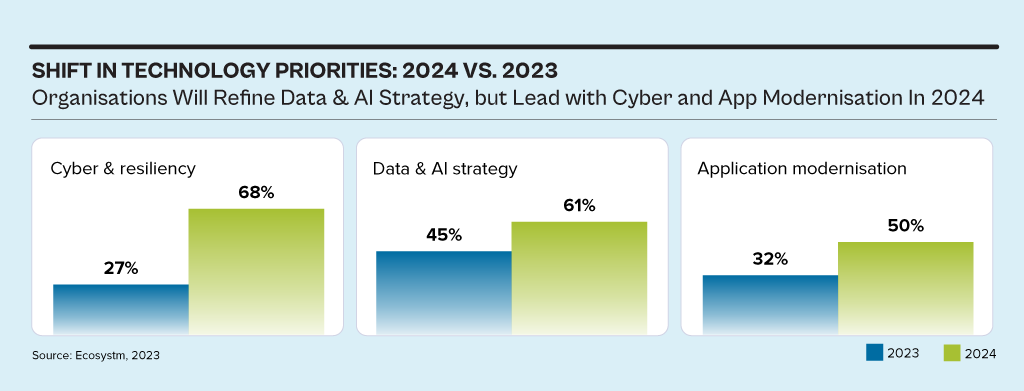

While the discussions have centred around AI, particularly Generative AI in 2023, the influence of AI innovations is extensive. Organisations will urgently need to re-examine their risk strategies, particularly in cyber and resilience practices. They will also reassess their infrastructure needs, optimise applications for AI, and re-evaluate their skills requirements.

This impacts the entire tech market, including tech skills, market opportunities, and innovations.

Ecosystm analysts Alea Fairchild, Darian Bird, Richard Wilkins, and Tim Sheedy present the top 5 trends in building an Agile & Resilient Organisation in 2024.


#1 Gen AI Will See Spike in Infrastructure Innovation

Enterprises considering the adoption of Generative AI are evaluating cloud-based solutions versus on-premises solutions. Cloud-based options present an advantage in terms of simplified integration, but raise concerns over the management of training data, potentially resulting in AI-generated hallucinations. On-premises alternatives offer enhanced control and data security but encounter obstacles due to the unexpectedly high demands of GPU computing needed for inferencing, impeding widespread implementation. To overcome this, there’s a need for hardware innovation to meet Generative AI demands, ensuring scalable on-premises deployments.

The collaboration between hardware development and AI innovation is crucial to unleash the full potential of Generative AI and drive enterprise adoption in the AI ecosystem.

Striking the right balance between cloud-based flexibility and on-premises control is pivotal, with considerations like data control, privacy, scalability, compliance, and operational requirements.

#2 Cloud Migrations Will Make Way for Cloud Transformations

The steady move to the public cloud has slowed down. Organisations – particularly those in mature economies – now prioritise cloud efficiencies, having largely completed most of their application migration. The “easy” workloads have moved to the cloud – either through lift-and-shift, SaaS, or simple replatforming.

New skills will be needed as organisations adopt public and hybrid cloud for their entire application and workload portfolio.

- Cloud-native development frameworks like Spring Boot and ASP.NET Core make it easier to develop cloud-native applications

- Cloud-native databases like MongoDB and Cassandra are designed for the cloud and offer scalability, performance, and reliability

- Cloud-native storage like Snowflake, Amazon S3 and Google Cloud Storage provides secure and scalable storage

- Cloud-native messaging like Amazon SNS and Google Cloud Pub/Sub provides reliable and scalable communication between different parts of the cloud-native application

#3 2024 Will be a Good Year for Technology Services Providers

Several changes are set to fuel the growth of tech services providers (systems integrators, consultants, and managed services providers).

There will be a return of “big apps” projects in 2024.

Companies are embarking on significant updates for their SAP, Oracle, and other large ERP, CRM, SCM, and HRM platforms. Whether moving to the cloud or staying on-premises, these upgrades will generate substantial activity for tech services providers.

The migration of complex apps to the cloud involves significant refactoring and rearchitecting, presenting substantial opportunities for managed services providers to transform and modernise these applications beyond traditional “lift-and-shift” activities.

The dynamic tech landscape, marked by AI growth, evolving security threats, and constant releases of new cloud services, has led to a shortage of modern tech skills. Despite a more relaxed job market, organisations will increasingly turn to their tech services partners, whether onshore or offshore, to fill crucial skill gaps.

#4 Gen AI and Maturing Deepfakes Will Democratise Phishing

As with any emerging technology, malicious actors will be among the fastest to exploit Generative AI for their own purposes. The most immediate application will be employing widely available LLMs to generate convincing text and images for their phishing schemes. For many potential victims, misspellings and strangely worded appeals are the only hints that an email from their bank, courier, or colleague is not what it seems. The ability to create professional-sounding prose in any language and a variety of tones will unfortunately democratise phishing.

The emergence of Generative AI combined with the maturing of deepfake technology will make it possible for malicious agents to create personalised voice and video attacks. Digital channels for communication and entertainment will be stretched to differentiate between real and fake.

Security training that underscores the threat of more polished and personalised phishing is a must.

#5 A Holistic Approach to Risk and Operational Resilience Will Drive Adoption of VMaaS

Vulnerability management is a continuous, proactive approach to managing system security. It involves not only vulnerability assessments but also developing and implementing strategies to address the vulnerabilities found. Vulnerability Management Platforms (VMPs) are becoming table stakes for small and medium enterprises (SMEs), which cybercriminals often perceive as “easier targets” because of their potentially lower investment in security measures.

Vulnerability Management as a Service (VMaaS), a third-party service that manages threats and automates vulnerability response for faster remediation, can improve cybersecurity asset management and let SMEs focus on their core activities.

In-house security teams will particularly value the flexibility and customisation of dashboards and reports that give them enhanced visibility over all assets and vulnerabilities.

Ecosystm research finds that 47% of organisations re-evaluated cybersecurity risks and management, making it the biggest measure undertaken by IT teams when COVID-19 hit. There is no longer any denying that cybersecurity is a key business enabler. This year witnessed cybercrime escalating in all parts of the world, and several governments issued advisories warning enterprises and citizens of the growing threat landscape, during and post COVID-19. Against this backdrop, Ecosystm Advisors Alex Woerndle, Andrew Milroy, Carl Woerndle, and Claus Mortensen present the top 5 Ecosystm predictions for Cybersecurity & Compliance in 2021.

This is a summary of the predictions; the full report (including the implications) is available to download for free on the Ecosystm platform.

The Top 5 Cybersecurity & Compliance Trends for 2021

- There will be Further Expansion of M&A Activities Through 2021 and Beyond

As predicted last year, the market is set to witness mergers and acquisitions (M&As) to consolidate the market. The pandemic has slowed down M&A activities in 2020. However, the market remains fragmented and there is a demand for consolidation. As the cyber market continues to mature, we expect M&A activities to ramp up over the next couple of years especially once we emerge from COVID-19. Some organisations that understand the full impact of the shift to remote working and the threats it creates have embraced the opportunity to acquire, based on perceived value due to COVID-19. The recent acquisition of Asavie by Akamai Technologies is a case in point. Asavie’s platform is expected to strengthen Akamai’s IoT and mobile device security and management services.

- After a Year of Pandemic Leniency, Regulators will Get Stricter in 2021

The regulators in the EU appear to have gone through a period of relative leniency or less activity during the first few months of the pandemic and have started to increase their efforts after the summer break. Expect regulators – even outside the EU – to step up their enforcement activities in 2021 and seek larger penalties for breaches.

Governments continue to evolve their compliance policies across broader sectors, which will impact all industries. As an example, in Australia, the Federal Government has made changes to its definition of critical infrastructure, which brings mandates to many more organisations. Governments have shown an acute awareness of the rise in cyber-attacks, highlighted by several high-profile breaches reported in mainstream media. Insider threats, highlighted by the Tesla case in which an employee reported being offered a bribe by unknown third parties in exchange for exfiltrating corporate information, will also lead regulators to double down on their enforcement activities.

- The Zero Trust Model Will Gain Momentum

Remote working has challenged the traditional network security perimeter model. The use of personal and corporate devices to access the network via public networks and third-party clouds is creating more opportunity for attackers. Organisations have started turning to a Zero Trust security model to mitigate the risk, applying advanced authentication and continuous monitoring. We expect the adoption of the Zero Trust model to gain momentum through 2021. This will also see an increase in managed services around active security monitoring such as Threat Detection & Response and the increased adoption of authentication technologies. With an eye on the future, especially around quantum computing, authentication technologies will need to continually evolve.

- The Endpoint Will be the Weakest Link

The attack surface continues to grow exponentially, with the increase in remote working, IoT devices, and multicloud environments. Remote endpoints require the same, if not higher, levels of security than assets that sit within corporate firewalls, and it will become very clear to organisations that endpoints are the most vulnerable. Remote workers are often using unsecured home Wi-Fi connections and unpatched VPNs, and are increasingly vulnerable to phishing attacks. IoT device passwords are often so weak that brute-force attackers can enter networks in milliseconds.

Although endpoint security can be dealt with through strict policies together with hardware or software authentication, the difficult part is to adopt an approach that retains a relatively high level of security without having too negative an impact on the employee experience. Experience shows that if the security measures are too cumbersome, employees will find ways to circumvent them.

- Hackers Will Turn the Table on AI Security

Cybersecurity vendors are increasingly offering solutions that leverage AI to identify and stop cyber-attacks with less human intervention than is typically expected or needed with traditional security approaches. AI can enhance cybersecurity by better predicting attacks enabling more proactive countermeasures, shortening response times, and potentially saving cybersecurity investment costs. The problem is that the exact same thing applies to the hackers. By leveraging AI, the costs and efforts needed to launch and coordinate large hacker attacks will also go down. Hackers can automate their attacks well beyond the use of botnets, target and customise their attacks with more granularity than before and can effectively target the biggest weakness of any IT security system – people.

Already, phishing attacks account for many of the breaches we see today, typically through employees being tricked into sharing their IT credentials via email or over the phone. As we move forward, these types of attacks will become much more sophisticated. Many of the deepfake videos we see have been made using cheap or free AI-enabled apps that are easy enough for even a child to use. As we move into 2021, this ability to manipulate both video and audio will increasingly enable attackers to accurately impersonate individuals.