Advanced technology and the growing interconnectedness of devices are no longer futuristic concepts in health and life sciences – they’re driving a powerful transformation. Technology, combined with societal demands, is reshaping drug discovery, clinical trials, patient care, and even our understanding of the human body.

The potential to create more efficient, personalised, and effective healthcare solutions has never been greater.


Modernising HR for Enhanced Efficiency & Employee Experience

The National Healthcare Group (NHG), a leading public healthcare provider in Singapore, recognised the need to modernise their HR system to better support 20,000+ healthcare professionals and improve patient services.

The iConnect@NHG initiative was launched to centralise HR functions, providing mobile access and self-service capabilities, and streamlining workflows across NHG’s integrated network of hospitals, polyclinics, and specialty centres.

The solution streamlined HR processes, giving employees easy access to essential data, career tools, and claims. The cloud-based platform improved data accuracy, reduced admin work, and integrated analytics for better decision-making and engagement. With 95% adoption, productivity and job satisfaction surged, enabling staff to focus on care delivery.

Automating Workflows for Better Patient Outcomes

Gold Coast Health handles a high volume of patient interactions across a wide range of medical services. The challenge was to streamline operations and reduce administrative burdens to improve patient care.

The solution involved automating the patient intake process by replacing paper forms with electronic versions, freeing up significant staff time.

A new clinical imaging solution also automates the uploading of wound images and descriptions into patient records, further saving time. Additionally, Gold Coast Health implemented a Discharge to Reassess system to automate follow-ups for long-term outpatient care. They are also exploring AI to simplify tasks and improve access to information, allowing clinical teams to focus more on patient care.

Streamlining Operations, Improving Care

IHH Healthcare, a global provider with over 80 hospitals across 10 countries, faced a fragmented IT landscape that hindered data management and patient care.

To resolve this, IHH migrated their core application workloads, including EMRs, to a next-gen cloud platform, unifying data across their network and enhancing analytics.

Additionally, they adopted an on-prem cloud solution to comply with local data residency requirements. This transformation reduced report generation time from days to hours, boosting operational efficiency and improving patient and clinician experiences. By leveraging advanced cloud technologies, IHH is strengthening their commitment to delivering world-class healthcare.

Creating Seamless & Compassionate Patient Journeys

The Narayana Health group in India is committed to providing accessible, high-quality care. However, they faced challenges with fragmented patient data, which hindered personalised care and efficient interactions.

To address this, Narayana Health centralised patient data, providing agents with a 360-degree view to offer more informed and compassionate service.

By automating tasks like call routing and form-filling, the organisation reduced average handling times and increased appointment conversions. Additionally, automated communication tools delivered timely, sensitive updates, strengthening patient relationships. The initiative has improved operational efficiency and deepened the organisation’s patient-centric focus.

Reimagining Location Services for Digital Healthcare

Halodoc, a leading digital health platform in Indonesia, connects millions of users with healthcare professionals and pharmacies.

To improve key services like home lab appointments and medicine delivery, Halodoc sought a more cost-effective and secure location service.

The transition resulted in an 88% reduction in costs for geocoding and places functionalities while enhancing data security. With better performance monitoring, Halodoc processed millions of geocoding and place requests with no major issues. This migration not only optimised costs but also resolved long-standing technical challenges, positioning Halodoc for future innovation, including machine learning and AI. The move strengthened their data security and provided a solid foundation for continued growth and high-quality healthcare delivery across Indonesia.

Driving Efficiency & Accessibility through Integrated Systems

Lupin, a global pharma leader, aimed to boost patient care, streamline operations, and enhance accessibility. By integrating systems and centralising data, Lupin wanted seamless interactions between patients, doctors, and the salesforce.

The company implemented a scalable infrastructure optimised for critical business applications, backed by high-performance server and storage technologies.

This integration improved data-driven decision-making, leading to optimised operations, reduced costs, and improved medicine quality and affordability. The robust infrastructure also ensured near-zero downtime, enhancing reliability and efficiency. Through this transformation, Lupin reinforced its commitment to providing patient-centred, affordable healthcare with faster, more efficient outcomes.

Leveraging AI for Cloud Security

Mitsubishi Tanabe Pharma’s “VISION 30” seeks to deliver personalised healthcare by 2030, focusing on precision medicine and digital solutions. The company is investing in advanced digital technologies and secure data infrastructure to achieve these goals.

To secure their expanding cloud platform, the company adopted a zero-trust model and enhanced identity management.

A security assessment identified gaps in cloud configuration, prompting tailored security improvements. GenAI was introduced to translate and summarise security alerts, reducing processing time from 10 minutes to just one minute, improving efficiency and security awareness across the team. The company is actively exploring further AI-driven solutions to strengthen security and drive their digital transformation, advancing the vision for personalised healthcare.

Automation and AI hold immense promise for accelerating productivity, reducing errors, and streamlining tasks across virtually every industry. From manufacturing plants that operate robotic arms to software-driven solutions that analyse millions of data points in seconds, these technological advancements are revolutionising how we work. However, AI has already led to, and will continue to bring about, many unintended consequences.

One that has been discussed for nearly a decade but is starting to impact employees and brand experiences is the “automation paradox”. As AI and automation take on more routine tasks, employees find themselves tackling the complex exceptions and making high-stakes decisions.

What is the Automation Paradox?

1. The Shifting Burden from Low to High Value Tasks

When AI systems handle mundane or repetitive tasks, ‘human’ employees can direct their efforts toward higher-value activities. At first glance, this shift seems purely beneficial. AI helps filter out extraneous work, enabling humans to focus on the tasks that require creativity, empathy, or nuanced judgment. However, by design, these remaining tasks often carry greater responsibility. For instance, in a retail environment with automated checkout systems, a human staff member is more likely to deal with complex refund disputes or tense customer interactions. Or in a warehouse, as many processes are automated by AI and robots, humans are left with the oversight of, and responsibility for, entire processes. Over time, handling primarily high-pressure situations can become mentally exhausting, contributing to job stress and potential burnout.

2. Increased Reliance on Human Judgment in Edge Cases

AI excels at pattern recognition and data processing at scale, but unusual or unprecedented scenarios can stump even the best-trained models. The human workforce is left to solve these complex, context-dependent challenges. Take self-driving cars as an example. While most day-to-day driving can be safely automated, human oversight is essential for unpredictable events – like sudden weather changes or unexpected road hazards.

Human intervention can be a critical, life-or-death matter, amplifying the pressure and stakes for those still in the loop.

3. The Fallibility Factor of AI

Ironically, as AI becomes more capable, humans may trust it too much. When systems make mistakes, it is the human operator who must detect and rectify them. But the further removed people are from the routine checks and balances – since “the system” seems to handle things so competently – the greater the chance that an error goes unnoticed until it has grown into a major problem. For instance, in the aviation industry, pilots who rely heavily on autopilot systems must remain vigilant for rare but critical emergency scenarios, which can be more taxing due to limited practice in handling manual controls.

Add to These the Known Challenges of AI!

Bias in Data and Algorithms. AI systems learn from historical data, which can carry societal and organisational biases. If left unchecked, these algorithms can perpetuate or even amplify unfairness. For instance, an AI-driven hiring platform trained on past decisions might favour candidates from certain backgrounds, unintentionally excluding qualified applicants from underrepresented groups.

Privacy and Data Security Concerns. The power of AI often comes from massive data collection, whether for predicting consumer trends or personalising user experiences. This accumulation of personal and sensitive information raises complex legal and ethical questions. Leaks, hacks, or improper data sharing can cause reputational damage and legal repercussions.

Skills Gap and Workforce Displacement. While AI can eliminate the need for certain manual tasks, it creates a demand for specialised skills, such as data science, machine learning operations, and AI ethics oversight. If an organisation fails to provide employees with retraining opportunities, it risks exacerbating skill gaps and losing valuable institutional knowledge.

Ethical and Social Implications. AI-driven decision-making can have profound impacts on communities. For example, a predictive policing system might inadvertently target specific neighbourhoods based on historical arrest data. When these systems lack transparency or accountability, public trust erodes, and social unrest can follow.

How Can We Mitigate the Known and Unknown Consequences of AI?

While some of the unintended consequences of AI and automation won’t be known until systems are deployed and processes are in practice, there are some basic hygiene approaches that technology leaders and their organisational peers can take to minimise these impacts.

- Human-Centric Design. Incorporate user feedback into AI system development. Tools should be designed to complement human skills, not overshadow them.

- Comprehensive Training. Provide ongoing education for employees expected to handle advanced AI or edge-case scenarios, ensuring they remain engaged and confident when high-stakes decisions arise.

- Robust Governance. Develop clear policies and frameworks that address bias, privacy, and security. Assign accountability to leaders who understand both technology and organisational ethics.

- Transparent Communication. Maintain clear channels of communication regarding what AI can and cannot do. Openness fosters trust, both internally and externally.

- Increase your organisational AIQ (AI Quotient). Most employees are not fully aware of the potential of AI and its opportunity to improve – or change – their roles. Conduct regular upskilling and knowledge sharing activities to improve the AIQ of your employees so they start to understand how people, plus data and technology, will drive their organisation forward.

Let me know your thoughts on the Automation Paradox, and stay tuned for my next blog on redefining employee skill pathways to tackle its challenges.

In my previous blogs, I outlined strategies for public sector organisations to incorporate technology into citizen services and internal processes. Building on those perspectives, let’s talk about the critical role of data in powering digital transformation across the public sector.

Effectively leveraging data is integral to delivering enhanced digital services and streamlining operations. Organisations must adopt a forward-looking roadmap that accounts for different data maturity levels – from core data foundations and emerging catalysts to future-state capabilities.

Data Essentials: Establishing the Bedrock

Data model. At the core of developing government e-services portals, strategic data modelling establishes the initial groundwork for scalable data infrastructures that can support future analytics, AI, and reporting needs. Effective data models define how information will be structured and analysed as data volumes grow. Beginning with an Entity-Relationship model, these blueprints guide the implementation of database schemas within database management systems (DBMS). This foundational approach ensures that the data infrastructure can accommodate the vast amounts of data generated by public services, crucial for maintaining public trust in government systems.
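To make the step from an Entity-Relationship model to a database schema more concrete, here is a minimal sketch using Python's built-in `sqlite3` module. The entities (`citizen`, `permit_application`), their columns, and the sample rows are hypothetical and chosen only for illustration; a real e-services portal would have its own model and DBMS.

```python
import sqlite3

# Hypothetical ER model: one citizen submits many permit applications.
SCHEMA = """
CREATE TABLE citizen (
    citizen_id INTEGER PRIMARY KEY,
    full_name  TEXT NOT NULL
);
CREATE TABLE permit_application (
    application_id INTEGER PRIMARY KEY,
    citizen_id     INTEGER NOT NULL REFERENCES citizen(citizen_id),
    permit_type    TEXT NOT NULL,
    submitted_on   TEXT NOT NULL  -- ISO 8601 date
);
"""

def build_database() -> sqlite3.Connection:
    """Create an in-memory database from the schema above."""
    conn = sqlite3.connect(":memory:")
    conn.execute("PRAGMA foreign_keys = ON")  # enforce the relationship
    conn.executescript(SCHEMA)
    return conn

def applications_per_citizen(conn: sqlite3.Connection):
    """Reporting-style query that the modelled relationship makes possible."""
    return conn.execute(
        """
        SELECT c.full_name, COUNT(a.application_id)
        FROM citizen AS c
        LEFT JOIN permit_application AS a ON a.citizen_id = c.citizen_id
        GROUP BY c.citizen_id
        ORDER BY c.full_name
        """
    ).fetchall()

conn = build_database()
conn.execute("INSERT INTO citizen VALUES (1, 'A. Tan')")
conn.execute("INSERT INTO citizen VALUES (2, 'B. Lim')")
conn.execute(
    "INSERT INTO permit_application VALUES (10, 1, 'renovation', '2024-01-15')"
)
conn.execute(
    "INSERT INTO permit_application VALUES (11, 1, 'signage', '2024-02-01')"
)
print(applications_per_citizen(conn))
```

Declaring the relationship in the schema, rather than leaving it to application code, means the database itself rejects an application that points at a non-existent citizen.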

Cloud Databases. Cloud databases provide flexible, scalable, and cost-effective storage solutions, allowing public sector organisations to handle vast amounts of data generated by public services. Data warehouses, on the other hand, are centralised repositories designed to store structured data, enabling advanced querying and reporting capabilities. This combination allows for robust data analytics and AI-driven insights, ensuring that the data infrastructure can support future growth and evolving analytical needs.

Document management. Incorporating a document or records management system (DMS/RMS) early in the data portfolio of a government e-services portal is crucial for efficient operations. This system organises extensive paperwork and records like applications, permits, and legal documents systematically. It ensures easy storage, retrieval, and management, preventing issues with misplaced documents.

Emerging Catalysts: Unleashing Data’s Potential

Digital Twins. A digital twin is a sophisticated virtual model of a physical object or system. It surpasses traditional reporting methods through advanced analytics, including predictive insights and data mining. By creating detailed virtual replicas of infrastructure, utilities, and public services, digital twins allow for real-time monitoring, efficient resource management, and proactive maintenance. This holistic approach contributes to more efficient, sustainable, and livable cities, aligning with broader goals of urban development and environmental sustainability.

Data Fabric. Data Fabric, including Data Lakes and Data Lakehouses, represents a significant leap in managing complex data environments. It ensures data is accessible for various analyses and processing needs across platforms. Data Lakes store raw data in its original format, crucial for initial data collection when future data uses are uncertain. In Cloud DB or Data Fabric setups, Data Lakes play a foundational role by storing unprocessed or semi-structured data. Data Lakehouses combine Data Lakes’ storage with data warehouses’ querying capabilities, offering flexibility and efficiency for handling different types of data in sophisticated environments.

Data Exchange and MoUs. Even with advanced data management technologies like data fabrics, Data Lakes, and Data Lakehouses, achieving higher maturity in digital government ecosystems often depends on establishing data-sharing agreements. Memorandums of Understanding (MoUs) exemplify these agreements, crucial for maximising efficiency and collaboration. MoUs outline terms, conditions, and protocols for sharing data beyond regulatory requirements, defining its scope, permitted uses, governance standards, and responsibilities of each party. This alignment ensures data integrity, privacy, and security while facilitating collaboration that enhances innovation and service delivery. Such agreements also pave the way for potential commercialisation of shared data resources, opening new market opportunities.

Future-Forward Capabilities: Pioneering New Frontiers

Data Mesh. Data Mesh is a decentralised approach to data architecture and organisational design, ideal for complex stakeholder ecosystems like digital conveyancing solutions. Unlike centralised models, Data Mesh allows each domain to manage its data independently. This fosters collaboration while ensuring secure and governed data sharing, essential for efficient conveyancing processes. Data Mesh enhances data quality and relevance by holding stakeholders directly accountable for their data, promoting integrity and adaptability to market changes. Its focus on interoperability and self-service data access enhances user satisfaction and operational efficiency, catering flexibly to diverse user needs within the conveyancing ecosystem.

Data Embassies. A Data Embassy stores and processes data in a foreign country under the legal jurisdiction of its origin country, beneficial for digital conveyancing solutions serving international markets. This approach ensures data security and sovereignty, governed by the originating nation’s laws to uphold privacy and legal integrity in conveyancing transactions. Data Embassies enhance resilience against physical and cyber threats by distributing data across international locations, ensuring continuous operation despite disruptions. They also foster international collaboration and trust, potentially attracting more investment and participation in global real estate markets. Technologically, Data Embassies rely on advanced data centres, encryption, cybersecurity, cloud, and robust disaster recovery solutions to maintain uninterrupted conveyancing services and compliance with global standards.

Conclusion

By developing a cohesive roadmap that progressively integrates cutting-edge architectures, cross-stakeholder partnerships, and avant-garde juridical models, agencies can construct a solid data ecosystem. One where information doesn’t just endure disruption, but actively facilitates organisational resilience and accelerates mission impact. Investing in an evolutionary data strategy today lays the crucial groundwork for delivering intelligent, insight-driven public services for decades to come. The time to fortify data’s transformative potential is now.

The data architecture outlines how data is managed in an organisation and is crucial for defining the data flow, data management systems required, the data processing operations, and AI applications. Data architects and engineers define data models and structures based on these requirements, supporting initiatives like data science. Before we delve into the right data architecture for your AI journey, let’s talk about the data management options. Technology leaders have the challenge of deciding on a data management system that takes into consideration factors such as current and future data needs, available skills, costs, and scalability. As data strategies become vital to business success, selecting the right data management system is crucial for enabling data-driven decisions and innovation.

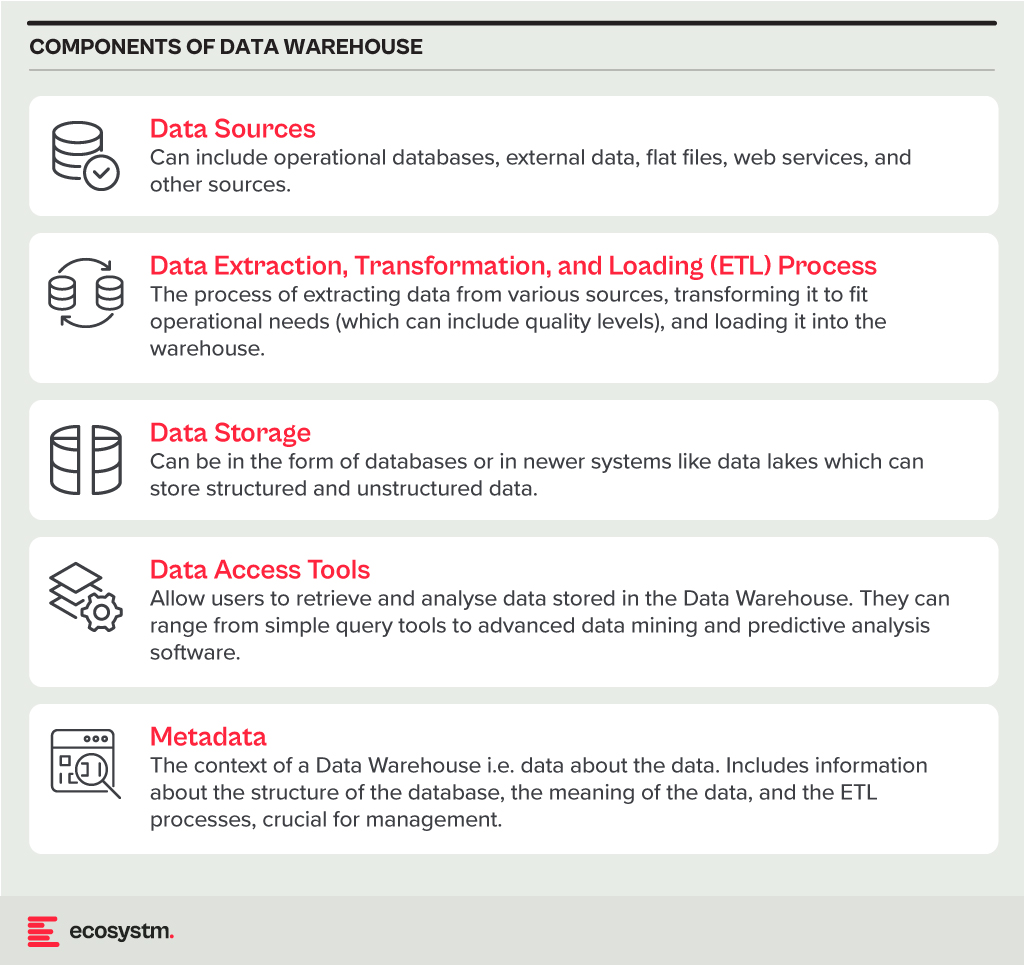

Data Warehouse

A Data Warehouse is a centralised repository that stores vast amounts of data from diverse sources within an organisation. Its main function is to support reporting and data analysis, aiding businesses in making informed decisions. This concept encompasses both data storage and the consolidation and management of data from various sources to offer valuable business insights. Data Warehousing evolves alongside technological advancements, with trends like cloud-based solutions, real-time capabilities, and the integration of AI and machine learning for predictive analytics shaping its future.

Core Characteristics

- Integrated. It integrates data from multiple sources, ensuring consistent definitions and formats. This often includes data cleansing and transformation for analysis suitability.

- Subject-Oriented. Unlike operational databases, which prioritise transaction processing, it is structured around key business subjects like customers, products, and sales. This organisation facilitates complex queries and analysis.

- Non-Volatile. Data in a Data Warehouse is stable; once entered, it is not deleted. Historical data is retained for analysis, allowing for trend identification over time.

- Time-Variant. It retains historical data for trend analysis across various time periods. Each entry is time-stamped, enabling change tracking and trend analysis.
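The non-volatile and time-variant characteristics can be illustrated with a small sketch. The `PriceFact` and `PriceHistory` names and the sample prices are hypothetical; the point is only that rows are appended with a timestamp and never overwritten, so a question can be answered "as of" any past date.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional

@dataclass(frozen=True)
class PriceFact:
    """One time-stamped row about the 'product' subject (hypothetical)."""
    product: str
    price: float
    valid_from: date

class PriceHistory:
    """Append-only store: rows are added, never updated or deleted."""

    def __init__(self) -> None:
        self._rows = []

    def load(self, fact: PriceFact) -> None:
        self._rows.append(fact)  # non-volatile: earlier rows are retained

    def price_as_of(self, product: str, day: date) -> Optional[float]:
        """Time-variant lookup: the latest price on or before `day`."""
        candidates = [
            row for row in self._rows
            if row.product == product and row.valid_from <= day
        ]
        if not candidates:
            return None
        return max(candidates, key=lambda row: row.valid_from).price

history = PriceHistory()
history.load(PriceFact("widget", 10.0, date(2024, 1, 1)))
history.load(PriceFact("widget", 12.0, date(2024, 6, 1)))
print(history.price_as_of("widget", date(2024, 3, 15)))
print(history.price_as_of("widget", date(2024, 7, 1)))
```

An operational system would typically overwrite the price in place; keeping both rows is what makes trend analysis possible later.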

Benefits

- Better Decision Making. Data Warehouses consolidate data from multiple sources, offering a comprehensive business view for improved decision-making.

- Enhanced Data Quality. The ETL process ensures clean and consistent data entry, crucial for accurate analysis.

- Historical Analysis. Storing historical data enables trend analysis over time, informing future strategies.

- Improved Efficiency. Data Warehouses enable swift access and analysis of relevant data, enhancing efficiency and productivity.
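As a rough illustration of how an ETL (extract, transform, load) step contributes to data quality, the sketch below consolidates two hypothetical source extracts with inconsistent field names and formatting. The sample rows, field names, and cleansing rules are assumptions made for the example, not a prescribed pipeline.

```python
# Hypothetical extracts from two source systems with inconsistent formats.
CRM_ROWS = [{"Email": " Ana@Example.com ", "Country": "sg"}]
BILLING_ROWS = [
    {"email": "ana@example.com", "country": "SG"},  # duplicate of the CRM row
    {"email": "raj@example.com", "country": "in"},
    {"email": "", "country": "ID"},                 # unusable: no key
]

def transform(row):
    """Normalise keys and values; return None for rows that fail cleansing."""
    clean = {key.lower(): str(value).strip() for key, value in row.items()}
    email = clean.get("email", "").lower()
    if not email:
        return None
    return {"email": email, "country": clean.get("country", "").upper()}

def run_etl(*sources):
    """Extract from each source, transform, and load de-duplicated rows."""
    warehouse = {}
    for source in sources:
        for row in source:
            clean = transform(row)
            if clean is not None:
                # First occurrence wins; later duplicates are ignored.
                warehouse.setdefault(clean["email"], clean)
    return list(warehouse.values())

print(run_etl(CRM_ROWS, BILLING_ROWS))
```

Four source rows become two consistent records here: one duplicate is merged and one row without a usable key is rejected before it reaches the warehouse.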

Challenges

- Complexity. Designing and implementing a Data Warehouse can be complex and time-consuming.

- Cost. The cost of hardware, software, and specialised personnel can be significant.

- Data Security. Storing large amounts of sensitive data in one place poses security risks, requiring robust security measures.

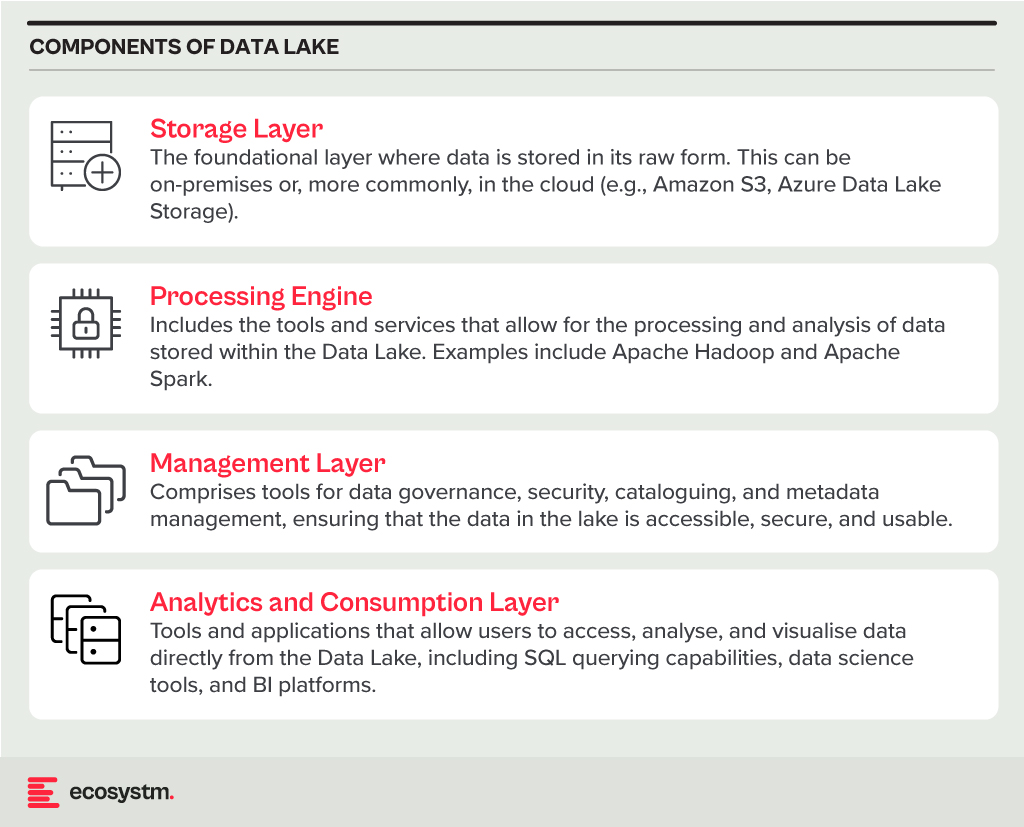

Data Lake

A Data Lake is a centralised repository for storing, processing, and securing large volumes of structured and unstructured data. Unlike traditional Data Warehouses, which are structured and optimised for analytics with predefined schemas, Data Lakes retain raw data in its native format. This flexibility in data usage and analysis makes them crucial in modern data architecture, particularly in the age of big data and cloud.

Core Characteristics

- Schema-on-Read Approach. This means the data structure is not defined until the data is read for analysis. This offers more flexible data storage compared to the schema-on-write approach of Data Warehouses.

- Support for Multiple Data Types. Data Lakes accommodate diverse data types, including structured (like databases), semi-structured (like JSON, XML files), unstructured (like text and multimedia files), and binary data.

- Scalability. Designed to handle vast amounts of data, Data Lakes can easily scale up or down based on storage needs and computational demands, making them ideal for big data applications.

- Versatility. Data Lakes support various data operations, including batch processing, real-time analytics, machine learning, and data visualisation, providing a versatile platform for data science and analytics.
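The schema-on-read approach described above can be sketched in a few lines. The raw events and the two schemas are hypothetical; the idea is that the stored data stays untouched and each consumer applies its own structure only when reading.

```python
import json

# Raw events kept exactly as received (hypothetical sample records).
RAW_EVENTS = [
    '{"user": "u1", "amount": "19.90", "channel": "web"}',
    '{"user": "u2", "amount": 5, "note": "no channel recorded"}',
    '{"user": "u3"}',
]

def read_with_schema(raw_lines, schema):
    """Apply a schema at read time: pick fields, cast types, fill defaults.

    `schema` maps a field name to a (cast function, default value) pair.
    """
    rows = []
    for line in raw_lines:
        record = json.loads(line)
        row = {}
        for field, (cast, default) in schema.items():
            row[field] = cast(record[field]) if field in record else default
        rows.append(row)
    return rows

# Two consumers read the same raw data through different schemas.
billing_schema = {"user": (str, ""), "amount": (float, 0.0)}
marketing_schema = {"user": (str, ""), "channel": (str, "unknown")}

print(read_with_schema(RAW_EVENTS, billing_schema))
print(read_with_schema(RAW_EVENTS, marketing_schema))
```

Under schema-on-write, the second and third records might have been rejected or reshaped at ingestion; here they are kept, and each reader decides how to treat the missing fields.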

Benefits

- Flexibility. Data Lakes offer diverse storage formats and a schema-on-read approach for flexible analysis.

- Cost-Effectiveness. Cloud-hosted Data Lakes are cost-effective with scalable storage solutions.

- Advanced Analytics Capabilities. The raw, granular data in Data Lakes is ideal for advanced analytics, machine learning, and AI applications, providing deeper insights than traditional data warehouses.

Challenges

- Complexity and Management. Without proper management, a Data Lake can quickly become a “Data Swamp” where data is disorganised and unusable.

- Data Quality and Governance. Ensuring the quality and governance of data within a Data Lake can be challenging, requiring robust processes and tools.

- Security. Protecting sensitive data within a Data Lake is crucial, requiring comprehensive security measures.

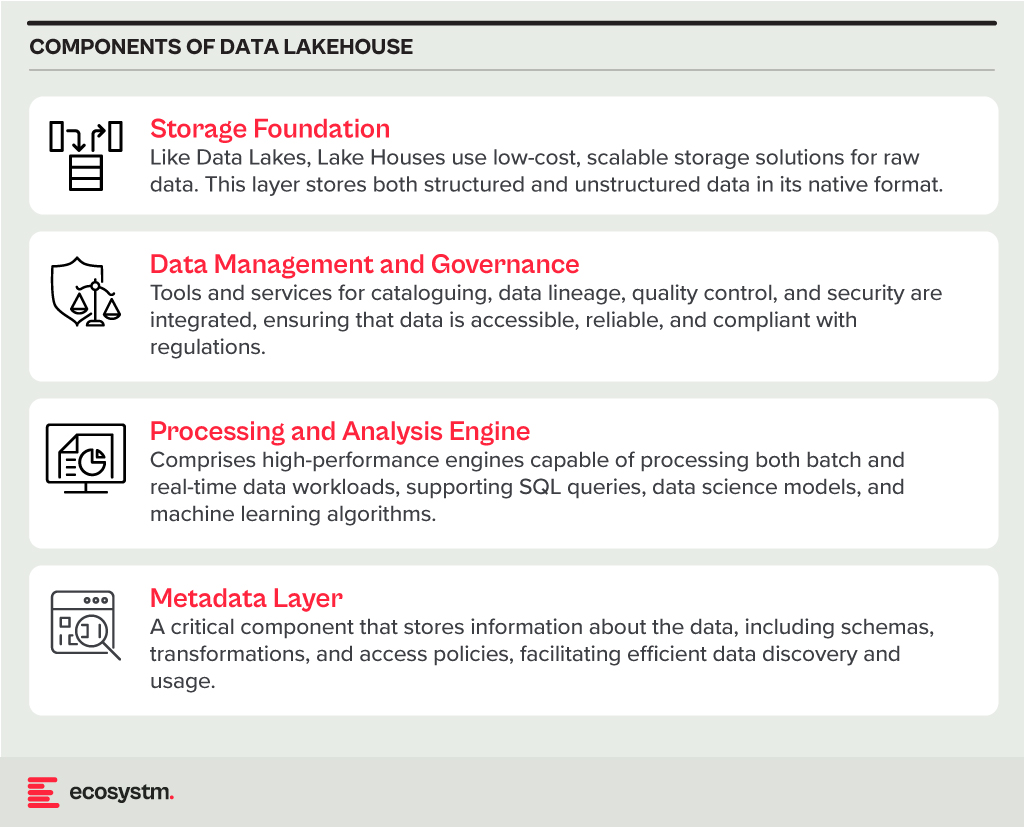

Data Lakehouse

A Data Lakehouse is an innovative data management system that merges the strengths of Data Lakes and Data Warehouses. This hybrid approach strives to offer the adaptability and expansiveness of a Data Lake for housing extensive volumes of raw, unstructured data, while also providing the structured, refined data functionalities typical of a Data Warehouse. By bridging the gap between these two traditional data storage paradigms, Lakehouses enable more efficient data analytics, machine learning, and business intelligence operations across diverse data types and use cases.

Core Characteristics

- Unified Data Management. A Lakehouse streamlines data governance and security by managing both structured and unstructured data on one platform, reducing organisational data silos.

- Schema Flexibility. It supports schema-on-read and schema-on-write, allowing data to be stored and analysed flexibly. Data can be ingested in raw form and structured later or structured at ingestion.

- Scalability and Performance. Lakehouses scale storage and compute resources independently, handling large data volumes and complex analytics without performance compromise.

- Advanced Analytics and Machine Learning Integration. By providing direct access to both raw and processed data on a unified platform, Lakehouses facilitate advanced analytics, real-time analytics, and machine learning.
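The schema flexibility described in this list, where data is either structured at ingestion or ingested raw and structured later, could look roughly like the toy model below. `MiniLakehouse`, its column declaration, and the sensor readings are all hypothetical; real Lakehouse platforms implement this with table formats and query engines rather than Python lists.

```python
import json

class MiniLakehouse:
    """Toy store with a raw zone and a curated, typed table (hypothetical)."""

    def __init__(self, columns):
        self.columns = columns  # column name -> required Python type
        self.raw_zone = []      # untouched payloads, structured later
        self.curated = []       # rows validated when written

    def ingest_raw(self, payload: str) -> None:
        self.raw_zone.append(payload)  # no validation at write time

    def ingest_structured(self, row: dict) -> None:
        """Schema-on-write: validate against the declared columns first."""
        for name, expected in self.columns.items():
            if not isinstance(row.get(name), expected):
                raise ValueError(f"column {name!r} must be {expected.__name__}")
        self.curated.append({name: row[name] for name in self.columns})

    def promote_raw(self) -> int:
        """Structure raw payloads later; failures stay in the raw zone."""
        promoted, remaining = 0, []
        for payload in self.raw_zone:
            try:
                self.ingest_structured(json.loads(payload))
                promoted += 1
            except (ValueError, AttributeError):
                remaining.append(payload)
        self.raw_zone = remaining
        return promoted

store = MiniLakehouse({"sensor": str, "reading": float})
store.ingest_structured({"sensor": "s1", "reading": 21.5})
store.ingest_raw('{"sensor": "s2", "reading": 19.0}')
store.ingest_raw('{"sensor": "s3", "reading": "n/a"}')
print(store.promote_raw(), len(store.curated), len(store.raw_zone))
```

Both paths end in the same curated table, while the payload that cannot be structured yet is retained in raw form instead of being lost.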

Benefits

- Versatility in Data Analysis. Lakehouses support diverse data analytics, spanning from traditional BI to advanced machine learning, all within one platform.

- Cost-Effective Scalability. The ability to scale storage and compute independently, often in a cloud environment, makes Lakehouses cost-effective for growing data needs.

- Improved Data Governance. Centralising data management enhances governance, security, and quality across all types of data.

Challenges

- Complexity in Implementation. Designing and implementing a Lakehouse architecture can be complex, requiring expertise in both Data Lakes and Data Warehouses.

- Data Consistency and Quality. Though crucial for reliable analytics, ensuring data consistency and quality across diverse data types and sources can be challenging.

- Governance and Security. Comprehensive data governance and security strategies are required to protect sensitive information and comply with regulations.

The choice between Data Warehouse, Data Lake, or Lakehouse systems is pivotal for businesses in harnessing the power of their data. Each option offers distinct advantages and challenges, requiring careful consideration of organisational needs and goals. By embracing the right data management system, organisations can pave the way for informed decision-making, operational efficiency, and innovation in the digital age.

Over the past year, many organisations have explored Generative AI and LLMs, with some successfully identifying, piloting, and integrating suitable use cases. As business leaders push tech teams to implement additional use cases, the repercussions on their roles will become more pronounced. Embracing GenAI will require a mindset reorientation, and tech leaders will see substantial impact across various ‘traditional’ domains.

AIOps and GenAI Synergy: Shaping the Future of IT Operations

When discussing AIOps adoption, there are commonly two responses: “Show me what you’ve got” or “We already have a team of Data Scientists building models”. The former usually demonstrates executive sponsorship without a specific business case, resulting in a lukewarm response to many pre-built AIOps solutions due to their lack of a defined business problem. On the other hand, organisations with dedicated Data Scientist teams face a different challenge. While these teams can create impressive models, they often face pushback from the business as the solutions often do not address operational or business needs. The challenge arises from Data Scientists’ limited understanding of the data, hindering the development of use cases that effectively align with business needs.

The most effective approach lies in adopting an AIOps Framework. Incorporating GenAI into AIOps frameworks can enhance their effectiveness, enabling improved automation, intelligent decision-making, and streamlined operational processes within IT operations.

This allows active business involvement in defining and validating use-cases, while enabling Data Scientists to focus on model building. It bridges the gap between technical expertise and business requirements, ensuring AIOps initiatives are influenced by the capabilities of GenAI, address specific operational challenges and resonate with the organisation’s goals.

The Next Frontier of IT Infrastructure

Many companies adopting GenAI are openly evaluating public cloud-based solutions like ChatGPT or Microsoft Copilot against on-premises alternatives, grappling with the trade-offs between scalability and convenience versus control and data security.

Cloud-based GenAI offers easy access to computing resources without substantial upfront investments. However, companies face challenges in relinquishing control over training data, potentially leading to inaccurate results or “AI hallucinations,” and concerns about exposing confidential data. On-premises GenAI solutions provide greater control, customisation, and enhanced data security, ensuring data privacy, but require significant hardware investments due to unexpectedly high GPU demands during both the training and inferencing stages of AI models.

Hardware companies are focusing on innovating and enhancing their offerings to meet the increasing demands of GenAI. The evolution and availability of powerful and scalable GPU-centric hardware solutions are essential for organisations to effectively adopt on-premises deployments, enabling them to access the necessary computational resources to fully unleash the potential of GenAI. Collaboration between hardware development and AI innovation is crucial for maximising the benefits of GenAI and ensuring that the hardware infrastructure can adequately support the computational demands required for widespread adoption across diverse industries. Innovations in hardware architecture, such as neuromorphic computing and quantum computing, hold promise in addressing the complex computing requirements of advanced AI models.

The synchronisation between hardware innovation and GenAI demands will require technology leaders to re-skill themselves on what they have done for years – infrastructure management.

The Rise of Event-Driven Designs in IT Architecture

IT leaders traditionally relied on three-tier architectures – presentation for user interface, application for logic and processing, and data for storage. Despite their structured approach, these architectures often lacked scalability and real-time responsiveness. The advent of microservices, containerisation, and serverless computing facilitated event-driven designs, enabling dynamic responses to real-time events, and enhancing agility and scalability. Event-driven designs are a paradigm shift away from traditional approaches, decoupling components and using events as a central communication mechanism. User actions, system notifications, or data updates trigger actions across distributed services, adding flexibility to the system.
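The decoupling that events provide can be shown with a minimal in-process sketch. The `EventBus` class, the `order_placed` event, and the two subscribers are hypothetical; a production system would normally use a message broker or a managed eventing service, and delivery would be asynchronous rather than a direct function call.

```python
from collections import defaultdict

class EventBus:
    """Minimal synchronous publish/subscribe hub (illustrative only)."""

    def __init__(self) -> None:
        self._handlers = defaultdict(list)

    def subscribe(self, event_type: str, handler) -> None:
        self._handlers[event_type].append(handler)

    def publish(self, event_type: str, payload: dict) -> int:
        """Deliver the event to every subscriber; return how many ran."""
        handlers = self._handlers.get(event_type, [])
        for handler in handlers:
            handler(payload)
        return len(handlers)

log = []
bus = EventBus()

# Two independent services react to the same event without knowing
# about each other or about the publisher.
bus.subscribe(
    "order_placed",
    lambda event: log.append(f"reserve stock for {event['order_id']}"),
)
bus.subscribe(
    "order_placed",
    lambda event: log.append(f"email receipt for {event['order_id']}"),
)

bus.publish("order_placed", {"order_id": "A42"})
print(log)
```

Adding a third reaction, such as fraud screening, only requires another `subscribe` call; the publisher's code does not change, which is the flexibility the paragraph describes.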

However, adopting event-driven designs presents challenges, particularly in higher transaction-driven workloads where the speed of serverless function calls can significantly impact architectural design. While serverless computing offers scalability and flexibility, the latency introduced by initiating and executing serverless functions may pose challenges for systems that demand rapid, real-time responses. Increasing reliance on event-driven architectures underscores the need for advancements in hardware and compute power. Transitioning from legacy architectures can also be complex and may require a phased approach, with cultural shifts demanding adjustments and comprehensive training initiatives.

The shift to event-driven designs challenges IT Architects, whose traditional roles involved designing, planning, and overseeing complex systems. With GenAI and automation enhancing design tasks, Architects will need to transition to more strategic and visionary roles. GenAI showcases capabilities in pattern recognition, predictive analytics, and automated decision-making, promoting a symbiotic relationship with human expertise. This evolution doesn’t replace Architects but signifies a shift toward collaboration with AI-driven insights.

IT Architects need to evolve their skill set, blending technical expertise with strategic thinking and collaboration. This changing role will drive innovation, creating resilient, scalable, and responsive systems to meet the dynamic demands of the digital age.

Whether your organisation is evaluating or implementing GenAI, the need to upskill your tech team remains imperative. The evolution of AI technologies has disrupted the tech industry, impacting people in tech. Now is the opportune moment to acquire new skills and adapt tech roles to leverage the potential of GenAI rather than being disrupted by it.

“AI Guardrails” are often used not only to get AI programs on track, but also to accelerate AI investments. Projects and programs that fall within the guardrails should be easy to approve, govern, and manage – whereas those outside of the guardrails require further review by a governance team or approval body. The concept of guardrails is familiar to many tech businesses and is often applied in areas such as cybersecurity, digital initiatives, data analytics, governance, and management.

While guidance on implementing guardrails is common, organisations often leave the task of defining their specifics, including their components and functionalities, to their AI and data teams. To assist with this, Ecosystm has surveyed some leading AI users among our customers to get their insights on the guardrails that can provide added value.

Data Security, Governance, and Bias

- Data Assurance. Has the organisation implemented robust data collection and processing procedures to ensure data accuracy, completeness, and relevance for the purpose of the AI model? This includes addressing issues like missing values, inconsistencies, and outliers.

- Bias Analysis. Does the organisation analyse training data for potential biases – demographic, cultural and so on – that could lead to unfair or discriminatory outputs?

- Bias Mitigation. Is the organisation implementing techniques like debiasing algorithms and diverse data augmentation to mitigate bias in model training?

- Data Security. Does the organisation use strong data security measures to protect sensitive information used in training and running AI models?

- Privacy Compliance. Is the AI opportunity compliant with relevant data privacy regulations (country and industry-specific as well as international standards) when collecting, storing, and utilising data?

Model Development and Explainability

- Explainable AI. Does the model use explainable AI (XAI) techniques to understand and explain how AI models reach their decisions, fostering trust and transparency?

- Fair Algorithms. Are algorithms and models designed with fairness in mind, considering factors like equal opportunity and non-discrimination?

- Rigorous Testing. Does the organisation conduct thorough testing and validation of AI models before deployment, ensuring they perform as intended, are robust to unexpected inputs, and avoid generating harmful outputs?

AI Deployment and Monitoring

- Oversight Accountability. Has the organisation established clear roles and responsibilities for human oversight throughout the AI lifecycle, ensuring human control over critical decisions and mitigation of potential harm?

- Continuous Monitoring. Are there mechanisms to continuously monitor AI systems for performance, bias drift, and unintended consequences, addressing any issues promptly?

- Robust Safety. Can the organisation ensure AI systems are robust and safe, able to handle errors or unexpected situations without causing harm? This includes thorough testing and validation of AI models under diverse conditions before deployment.

- Transparency Disclosure. Is the organisation transparent with stakeholders about AI use, including its limitations, potential risks, and how decisions made by the system are reached?

Other AI Considerations

- Ethical Guidelines. Has the organisation developed and adhered to ethical principles for AI development and use, considering areas like privacy, fairness, accountability, and transparency?

- Legal Compliance. Has the organisation created mechanisms to stay updated on and compliant with relevant legal and regulatory frameworks governing AI development and deployment?

- Public Engagement. What mechanisms are in place to encourage open discussion and engage with the public regarding the use of AI, addressing concerns and building trust?

- Social Responsibility. Has the organisation considered the environmental and social impact of AI systems, including energy consumption, ecological footprint, and potential societal consequences?

Implementing these guardrails requires a comprehensive approach that includes policy formulation, technical measures, and ongoing oversight. It might take a little longer to set up this capability, but in the mid to longer term, it will allow organisations to accelerate AI implementations and drive a culture of responsible AI use and deployment.
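One way to picture how guardrails speed up approvals is a simple checklist evaluation: a proposal that satisfies every guardrail is fast-tracked, and anything else is routed to the governance team with the unmet items listed. The sketch below is illustrative only. The guardrail keys, the `assess_proposal` function, and the two routing labels are assumptions, not part of any published Ecosystm framework.

```python
from typing import Dict, List, Tuple

# Hypothetical guardrail identifiers, loosely following the checklist headings above.
GUARDRAILS: List[str] = [
    "data_assurance",
    "bias_analysis",
    "privacy_compliance",
    "explainable_ai",
    "rigorous_testing",
    "oversight_accountability",
    "continuous_monitoring",
]


def assess_proposal(answers: Dict[str, bool]) -> Tuple[str, List[str]]:
    """Return a routing decision and the guardrails the proposal does not yet meet.

    A guardrail that is missing from `answers` is treated as unmet.
    """
    unmet = [name for name in GUARDRAILS if not answers.get(name, False)]
    decision = "fast_track" if not unmet else "governance_review"
    return decision, unmet


# A fully compliant proposal, and one with a failed and an unanswered guardrail.
compliant = {name: True for name in GUARDRAILS}
partial = dict(compliant, bias_analysis=False)
del partial["continuous_monitoring"]

print(assess_proposal(compliant))
print(assess_proposal(partial))
```

Treating unanswered guardrails as unmet is a deliberately conservative choice: a proposal cannot be fast-tracked simply by leaving a question blank.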

COVID-19 has been a major disruption for people-intensive industries and the BPO sector is no exception. However, some of the forward-looking BPO organisations are using this disruption as an opportunity to re-evaluate how they do business and how they can make themselves resilient and future-proof. In many of these conversations, technology and process reengineering are emerging as the two common themes in their journey to transform into a New Age BPO provider.

In 2022, BPO providers will focus on mitigating their key challenges around handling client expectations, better people management, and investing in the right technologies for their own transformation journeys.

Read on to find out what Ecosystm Advisors Audrey William and Venu Reddy think will be the key trends for the New Age BPO in 2022.


Last week, the Australian Government announced that it has been monitoring persistent and increasing volumes of cyber-attacks by a foreign state-based actor on both government and private sector businesses. The Australian Cyber Security Centre (ACSC) reported that most of the attacks make use of existing open-source tools and packages, which the ACSC has dubbed “copy-paste compromises”. The attackers are also using other methods to exploit systems, such as spear phishing, sending malicious files, and using various websites to harvest passwords.

Cybercrime has been escalating in other parts of the world as well. The World Health Organisation (WHO) witnessed a dramatic increase in cyber-attacks, with scammers impersonating WHO personnel’s official emails to target the public. The National Cyber Security Centre (NCSC) in the UK alerted the country’s educational institutions and scientific facilities to increased cyber-attacks attempting to steal research associated with the coronavirus. Earlier this month, the Singapore Computer Emergency Response Team (SingCERT) issued an advisory on potential phishing campaigns targeting six countries, including Singapore, that exploit government support initiatives for businesses and individuals in the wake of the COVID-19 crisis.

Such announcements are a timely reminder to government agencies and private organisations to implement the right cybersecurity measures against the backdrop of an increased attack surface. These cyber-attacks can have business impacts such as theft of business data and destruction or impairment of financial data, creating extended business interruptions. The ramifications can be far-reaching, including financial and reputational loss, compliance breaches, and potentially even legal action.

A Rise in Spear-Phishing

In Australia, we’re seeing attackers targeting internet-facing infrastructure through vulnerabilities in Citrix, Windows IIS web server, Microsoft SharePoint, and Telerik UI.

Where these attacks fail, they are moving to spear-phishing attacks. Spear phishing is most commonly an email or SMS scam targeted towards a specific individual or organisation but can be delivered to a target via any number of electronic communication mediums. In the spear-phishing emails, the attacker attaches files or includes links to a variety of destinations that include:

- Credential harvesting sites. These genuine-looking but fake websites prompt targets to enter their username and password. Once the target provides the credentials, they are stored in the attackers’ database and used to launch credential-based attacks against the organisation’s IT infrastructure and applications.

- Malicious files. These email attachments look legitimate but, once downloaded, they execute malware on the target device. Common file types are .doc, .docx, .xls, .xlsx, .ppt, .pptx, .jpg, .jpeg, .gif, .mpg, .mp4, and .wav.

- OAuth Token Theft. OAuth is commonly used on the internet to authenticate a user to a wide variety of other platforms. This attack technique abuses OAuth tokens that are generated by one platform and shared with other platforms. An example is a website that asks users to authenticate using their Facebook or Google accounts in order to use its own services. Faulty implementation of OAuth leaves such integrations vulnerable to cyber-attacks.

- Link Shimming. This technique uses email tracking services to launch an attack. The attackers send fake emails containing valid-looking links and images, routed through email tracking services. Once the user receives the email, the service tracks actions such as opening the email and clicking on the links. Such tracking services can reveal when the email was opened, location data, device used, links clicked, and IP addresses used. The links, once clicked, can in turn lead to malicious software being stealthily downloaded onto the target system and/or lure the user into credential harvesting.
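Several of these techniques rely on a link that displays one destination while pointing to another. As a rough illustration, a mail filter could flag anchors whose visible text names a different host from the actual `href`. The sketch below uses only the Python standard library. The `LinkAuditor` class, the `find_mismatched_links` function, and the sample domains are hypothetical, and a real filter would also need to handle redirects, URL shorteners, and lookalike domains.

```python
from html.parser import HTMLParser
from typing import List, Optional, Tuple
from urllib.parse import urlparse


def _host(url: str) -> Optional[str]:
    """Return the lowercase hostname of a URL, or None if it has none."""
    text = url.strip()
    if "://" not in text:
        text = "http://" + text  # allow visible text such as "www.example.com/login"
    return urlparse(text).hostname


class LinkAuditor(HTMLParser):
    """Collects (visible_text, href) pairs for every <a> element that has an href."""

    def __init__(self) -> None:
        super().__init__()
        self.links: List[Tuple[str, str]] = []
        self._href: Optional[str] = None
        self._text: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append(("".join(self._text).strip(), self._href))
            self._href = None


def find_mismatched_links(html: str) -> List[Tuple[str, str]]:
    """Return links whose visible text looks like a URL on a different host than the href."""
    auditor = LinkAuditor()
    auditor.feed(html)
    auditor.close()
    flagged = []
    for text, href in auditor.links:
        looks_like_url = "." in text and " " not in text
        if looks_like_url and _host(text) != _host(href):
            flagged.append((text, href))
    return flagged


# Hypothetical email body: only the first link shows one host and points to another.
sample = (
    '<p><a href="http://login.attacker.example/reset">www.mybank.example</a> '
    '<a href="https://www.mybank.example/help">www.mybank.example/help</a> '
    '<a href="https://news.example/story">Read more</a></p>'
)
suspicious = find_mismatched_links(sample)
```

A check like this catches only the crudest mismatches, so it complements rather than replaces user awareness training.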

How do you safeguard against Cyber-Attacks?

The most common vectors for such cyber-attacks are lack of user awareness and/or exploitable internet-facing systems and applications. Unpatched or out-of-support internet-facing systems, application or system misconfiguration, inadequate or poorly maintained device security controls, and weak threat detection and response programs compound the threat to your organisation.

Governments across the world are coming up with advisories and guidelines to spread cybersecurity awareness and prevent threats and attacks. The ‘Essential 8’ from the ACSC, which is part of the Australian Signals Directorate, are effective mitigations for a large majority of present-day attacks. There were also guidelines published earlier this year, specifically with the COVID-19 crisis in mind. The Cyber Security Agency of Singapore (CSA) promotes the ‘Go Safe Online’ campaign that provides regular guidance and best practices on cybersecurity measures.

Ecosystm’s ongoing “Digital Priorities in the New Normal” study evaluates the impact of the COVID-19 pandemic on organisations, and how digital priorities are being initiated or aligned to adapt to the New Normal that has emerged. 41% of organisations in Asia Pacific re-evaluated cybersecurity risks and measures, in the wake of the pandemic. Identity & Access Management (IDAM), Data Security and Threat Analytics & Intelligence saw increased investments in many organisations in the region (Figure 1).

However, technology implementation has to be backed by a rigorous process that constantly evaluates the organisation’s risk positions. The following preventive measures will help you address the risks to your organisation:

- Conduct regular user awareness training on common cyber threats

- Conduct regular phishing tests to check user awareness level

- Patch the internet-facing products as recommended by their vendors

- Establish baseline security standards for applications and systems

- Apply multi-factor authentication to access critical applications and systems – especially internet-facing and SaaS products widely used in the organisation like O365

- Follow regular vulnerability scanning and remediation regimes

- Conduct regular penetration testing on internet-facing applications and systems

- Apply security settings on endpoints and internet gateways that disallow download and execution of files from unfamiliar sources

- Maintain an active threat detection and response program that provides for intrusion detection, integrity checks, user and system behaviour monitoring, and tools to maintain visibility of potential attacks and incidents – e.g. Security Information & Event Management (SIEM) tools

- Consider managed services such as Managed Threat Detection and Response delivered via a security operations centre (SOC)

- Maintain a robust incident management program that is reviewed and tested at least annually

- Maintain a comprehensive backup regime – especially for critical data – including offsite/offline backups, and regular testing of backups for data integrity

- Restrict and monitor the usage of administrative credentials
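The backup-testing measure in the list above can be partly automated by recording a checksum when a backup is taken and comparing it when the backup is tested. This is a minimal sketch using SHA-256 from the Python standard library. The function names and the throwaway file are hypothetical, and a checksum comparison does not replace a full restore test.

```python
import hashlib
import os
import tempfile


def sha256_of_file(path: str, chunk_size: int = 65536) -> str:
    """Hash a file in chunks so large backups need not fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def backup_is_intact(backup_path: str, recorded_checksum: str) -> bool:
    """Compare the backup's current checksum with the one recorded at backup time."""
    return sha256_of_file(backup_path) == recorded_checksum


# Demonstration with a throwaway file standing in for a backup archive.
with tempfile.TemporaryDirectory() as workdir:
    backup = os.path.join(workdir, "critical-data.bak")
    with open(backup, "wb") as handle:
        handle.write(b"payroll records")
    recorded = sha256_of_file(backup)

    ok_before = backup_is_intact(backup, recorded)

    with open(backup, "ab") as handle:  # simulate corruption or tampering
        handle.write(b"!")
    ok_after = backup_is_intact(backup, recorded)
```

The recorded checksum should be stored separately from the backup itself, for example alongside the offline copy, so that an attacker who alters the backup cannot also alter the reference value.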

A group of some of the world’s largest technology companies, including Alibaba, ARM, Baidu, Google Cloud, IBM, Intel, Red Hat, Swisscom, and Tencent, have come together to form the “Confidential Computing Consortium” (CCC), an association established by the Linux Foundation to improve the security of data while in use, and hosted at ConfidentialComputing.io.

The Confidential Computing Consortium aims to define and accelerate the adoption of Confidential Computing by bringing together a community of open-source technologies and experts.

New Cross-Industry Effort

There are many government agencies, consortiums, and software and hardware vendors working on data security, so a key question here is “who needs it?”

Commenting on CCC, Claus Mortensen, Principal Advisor at Ecosystm said, “whether this is really ‘needed’ is a matter of perspective though. Many would argue, that a project driven by technology giants is contrary to the grassroots approach of earlier open source projects. However, Confidential Computing is a complex area that involves both hardware and software. Also, the stakes have become high for businesses and consumers alike, as data privacy has become the focal point. Arguably, the big tech companies need this Open Source initiative more than anyone at the moment.”

How would CCC benefit enterprises and business users?

With the increasing adoption of cloud environments, on-premises servers, the edge, IoT, and other technologies, it’s crucial for enterprises to increase the security of their data. While there have been approaches to encrypt data at rest (storage) and in transit (network), it’s quite challenging to encrypt data across its full life cycle, including while it is in use, and that’s what Confidential Computing aims to solve.

Mortensen said, “when it comes to data security, the actual computing part of the data life-cycle is arguably the weakest link and with the recent increased focus on privacy among the public, this ‘weakness’ could possibly be a stumbling block in the further development and uptake of cloud computing.” Mortensen added that “doing it as part of an open-source initiative makes sense, not only because the open-source approach is a good development and collaboration environment – but, crucially, it also gives the initiative an air of openness and trustworthiness.”

To drive the initiative, members have planned to make a series of open source project contributions to the Confidential Computing Consortium.

- Intel will contribute Software Guard Extensions (SGX) SDK to the project. This is hardware-based memory level data protection, where data and operations are encrypted in the memory and isolated from the rest of the system resources and software components.

- Microsoft will be sharing the Open Enclave SDK to develop broader industry collaboration and ensure a truly open development approach. The open-source framework allows developers to build Trusted Execution Environment (TEE) applications.

- Red Hat will provide Enarx to the consortium, an open-source project aimed at reducing the number of layers (application, kernel, container engine, bootloader) in a running workload environment.

Mortensen said, “like other open-source initiatives, this would allow businesses to contribute and further develop Confidential Computing. This, in turn, can ensure further uptake and the development of new use cases for the technology.”

How Data Security Efforts will shape up

Confidential Computing is a part of a bigger approach to privacy and data and we may see other possible developments around AI, in distributed computing, and with Big Data analysis.

“Initiatives that can be seen as part of the same bucket include Google’s ‘Federated Learning’ where AI learning on data is distributed privately. This allows Google to apply AI to data on the users’ devices without Google actually seeing the data. The data remains on the user’s device and all that is sent back to Google is the input or learning that the data has provided,” said Mortensen.
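The idea Mortensen describes can be sketched as a toy round of federated averaging: each device fits a model on its own data and sends back only the resulting parameter, which the server averages. This is a simplified illustration in plain Python, not Google's implementation. The one-parameter model, the function names, and the sample data are all assumptions.

```python
from typing import List, Sequence, Tuple

Sample = Tuple[float, float]  # (x, y) pair held privately on a device


def local_update(w: float, data: Sequence[Sample], lr: float = 0.05, steps: int = 20) -> float:
    """Run gradient descent on the model y = w * x using only this device's data."""
    for _ in range(steps):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def federated_round(w_global: float, devices: List[Sequence[Sample]]) -> float:
    """Average the locally trained weights, weighted by each device's sample count.

    Only weights and counts reach the server; the raw samples are never combined.
    """
    updates = [(local_update(w_global, data), len(data)) for data in devices]
    total = sum(count for _, count in updates)
    return sum(w * count for w, count in updates) / total


# Hypothetical on-device datasets, all generated from y = 3x.
devices = [
    [(1.0, 3.0), (2.0, 6.0)],
    [(0.5, 1.5), (1.5, 4.5), (2.5, 7.5)],
]

w = 0.0
for _ in range(10):
    w = federated_round(w, devices)
```

After a few rounds the shared weight `w` settles close to 3, even though the server-side function only ever handles per-device weights and sample counts.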

Confidential Computing therefore appears to ease matters for data security at this point, and the consortium expects its work to lead to greater control and transparency of data for users.
