When OpenAI released ChatGPT, it quickly became obvious that we were entering a new era of AI. Every tech company scrambled to release a comparable service or to infuse their products with some form of GenAI. Microsoft, piggybacking on its investment in OpenAI, was the fastest to market with impressive text and image generation for the mainstream. Copilot is now embedded across its software, including Microsoft 365, Teams, GitHub, and Dynamics, to supercharge the productivity of developers and knowledge workers. However, the race is on – AWS and Google are actively developing their own GenAI capabilities.

AWS Catches Up as Enterprise Gains Importance

Without a consumer-facing AI assistant, AWS was less visible during the early stages of the GenAI boom. They have since rectified this with a USD 4B investment into Anthropic, the makers of Claude. This partnership will benefit both Amazon and Anthropic, bringing the Claude 3 family of models to enterprise customers, hosted on AWS infrastructure.

As GenAI quickly emerges from shadow IT to an enterprise-grade tool, AWS is catching up by capitalising on their position as cloud leader. Many organisations view AWS as a strategic partner, already housing their data, powering critical applications, and providing an environment that developers are accustomed to. The ability to augment models with private data already residing in AWS data repositories will make it an attractive GenAI partner.

AWS has announced the general availability of Amazon Q, their suite of GenAI tools aimed at developers and businesses. Amazon Q Developer expands on what was launched as CodeWhisperer last year. It helps developers accelerate the process of building, testing, and troubleshooting code, allowing them to focus on higher-value work. The tool, which can directly integrate with a developer’s chosen IDE, uses NLP to develop new functions, modernise legacy code, write security tests, and explain code.

Amazon Q Business is an AI assistant that can safely ingest an organisation’s internal data and connect with popular applications, such as Amazon S3, Salesforce, Microsoft Exchange, Slack, ServiceNow, and Jira. Access controls can be implemented to ensure data is only shared with authorised users. It leverages AWS’s visualisation tool, QuickSight, to summarise findings. It also integrates with applications like Slack, allowing users to query it directly.

Going a step further, Amazon Q Apps (in preview) allows employees to build their own lightweight GenAI apps using natural language. These employee-created apps can then be published to an enterprise’s app library for broader use. This no-code approach to development and deployment is part of a drive to use AI to increase productivity across lines of business.

AWS continues to expand on Bedrock, their managed service providing access to foundation models from companies like Mistral AI, Stability AI, Meta, and Anthropic. The service also allows customers to bring their own model in cases where they have already pre-trained their own LLM. Once a model is selected, organisations can extend its knowledge base using Retrieval-Augmented Generation (RAG) to privately access proprietary data. Models can also be refined over time to improve results and offer personalised experiences for users. Another feature, Agents for Amazon Bedrock, allows multi-step tasks to be performed by invoking APIs or searching knowledge bases.
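
To make the RAG step more concrete, here is a minimal sketch of the retrieve-then-prompt pattern. It does not call Bedrock or any AWS API. The sample documents, the `retrieve` and `build_prompt` helpers, and the simple word-overlap scoring are all illustrative assumptions; a production setup would more likely rely on vector embeddings and a managed knowledge base.

```python
import math
import re
from collections import Counter

# Hypothetical private documents; in practice these would sit in a data store.
DOCUMENTS = [
    "Refunds are processed within 14 days of a return request.",
    "Support is available on weekdays from 9am to 6pm.",
    "Enterprise plans include single sign-on and audit logs.",
]


def tokenize(text):
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine_similarity(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(question, documents, top_k=1):
    """Return the top_k documents most similar to the question."""
    q = Counter(tokenize(question))
    ranked = sorted(
        documents,
        key=lambda d: cosine_similarity(q, Counter(tokenize(d))),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(question, documents):
    """Prepend the retrieved context to the question before it goes to a model."""
    context = "\n".join(retrieve(question, documents))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"
```

Calling `build_prompt("How long do refunds take?", DOCUMENTS)` would place the refund policy sentence ahead of the question, so the model answers from private data without being retrained on it.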

To address AI safety concerns, Guardrails for Amazon Bedrock is now available to minimise harmful content generation and avoid negative outcomes for users and brands. Contentious topics can be filtered by varying thresholds, and Personally Identifiable Information (PII) can be masked. Enterprise-wide policies can be defined centrally and enforced across multiple Bedrock models.
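
The two controls described here, threshold-based topic filtering and PII masking, can be sketched in a few lines. This is an illustration of the idea only, not the Guardrails for Amazon Bedrock API: the topic scores are assumed to come from some upstream classifier, and `mask_pii`, `blocked_topics`, and `apply_guardrail` are hypothetical names. Only email addresses are masked, to keep the example short.

```python
import re

# Simple email matcher; real PII detection covers many more entity types.
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def mask_pii(text):
    """Replace email addresses with a placeholder token."""
    return EMAIL_PATTERN.sub("[EMAIL]", text)


def blocked_topics(scores, thresholds):
    """Return topics whose score meets or exceeds the configured threshold.

    Topics without a configured threshold are never blocked.
    """
    return sorted(
        topic
        for topic, score in scores.items()
        if score >= thresholds.get(topic, float("inf"))
    )


def apply_guardrail(text, scores, thresholds):
    """Block the text if any topic crosses its threshold; otherwise mask PII."""
    violations = blocked_topics(scores, thresholds)
    if violations:
        return {"allowed": False, "text": "", "violations": violations}
    return {"allowed": True, "text": mask_pii(text), "violations": []}
```

Keeping the thresholds in one dictionary mirrors the idea of a centrally defined policy: the same `thresholds` value can be passed to every model's output check.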

Google Targeting Creators

Due to the potential impact on their core search business, Google took a measured approach to entering the GenAI field, compared to newer players like OpenAI and Perplexity. The usability of Google’s chatbot, Gemini, has improved significantly since its initial launch under the moniker Bard. Its image generator, however, was pulled earlier this year while Google works out how to carefully tread the line between creativity and sensitivity. Based on recent demos though, Google plans to target content creators with images (Imagen 3), video generation (Veo), and music (Lyria).

Like Microsoft, Google has seen that GenAI is a natural fit for collaboration and office productivity. Gemini can now assist from the sidebar of Workspace apps, like Docs, Sheets, Slides, Drive, Gmail, and Meet. With Google Search already a critical productivity tool for most knowledge workers, Google is determined to remain a leader in the GenAI era.

At their recent Cloud Next event, Google announced Gemini Code Assist, a GenAI-powered development tool that is more robust than its previous offering. Using RAG, it can customise suggestions for developers by accessing an organisation’s private codebase. With a one-million-token context window, it also has full codebase awareness, making it possible to make extensive changes at once.

The Hardware Problem of AI

The demands that GenAI places on compute and memory have created a shortage of AI chips, causing the valuation of GPU giant, NVIDIA, to skyrocket into the trillions of dollars. Though the initial training is most hardware-intensive, its importance will only rise as organisations leverage proprietary data for custom model development. Inferencing is less compute-heavy for early use cases, such as text generation and coding, but will be dwarfed by the needs of image, video, and audio creation.

Realising compute and memory will be a bottleneck, the hyperscalers are looking to solve this constraint by innovating with new chip designs of their own. AWS has custom-built specialised chips – Trainium2 and Inferentia2 – to bring down costs compared to traditional compute instances. Similarly, Microsoft announced the Maia 100, which it developed in conjunction with OpenAI. Google also revealed its 6th-generation tensor processing unit (TPU), Trillium, with significant increases in power efficiency, high bandwidth memory capacity, and peak compute performance.

The Future of the GenAI Landscape

As enterprises gain experience with GenAI, they will look to partner with providers that they can trust. Challenges around data security, governance, lineage, model transparency, and hallucination management will all need to be resolved. Additionally, controlling compute costs will begin to matter as GenAI initiatives start to scale. Enterprises should explore a multi-provider approach and leverage specialised data management vendors to ensure a successful GenAI journey.

ASEAN, poised to become the world’s 4th largest economy by 2030, is experiencing a digital boom. With an estimated 125,000 new internet users joining daily, it is the fastest-growing digital market globally. These users are not just browsing, but are actively engaged in data-intensive activities like gaming, eCommerce, and mobile business. As a result, monthly data usage is projected to soar from 9.2 GB per user in 2020 to 28.9 GB per user by 2025, according to the World Economic Forum. Businesses and governments are further fuelling this transformation by embracing Cloud, AI, and digitisation.
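
The projected jump in monthly data usage can be restated as a yearly growth rate, which is easier to compare against capacity plans. The short calculation below uses only the two figures quoted above; the function name is an illustrative choice, and the result assumes steady compounding over the five years, which the source does not state.

```python
def compound_annual_growth_rate(start, end, years):
    """Return the constant yearly growth rate that turns start into end."""
    return (end / start) ** (1 / years) - 1


# Figures quoted above: 9.2 GB per user in 2020, 28.9 GB per user by 2025.
rate = compound_annual_growth_rate(9.2, 28.9, 2025 - 2020)
print(f"Implied annual growth: {rate:.1%}")
```

Under that assumption, each user consumes roughly a quarter more data every year, which compounds into the threefold increase over the period.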

Investments in data centre capacity across Southeast Asia are estimated to grow at a staggering pace to meet this growing demand for data. While large hyperscale facilities are currently handling much of the data needs, edge computing – a distributed model placing data centres closer to users – is fast becoming crucial in supporting tomorrow’s low-latency applications and services.

The Big & the Small: The Evolving Data Centre Landscape

As technology pushes boundaries with applications like augmented reality, telesurgery, and autonomous vehicles, the demand for ultra-low latency response times is skyrocketing. Consider driverless cars, which generate a staggering 5 TB of data per hour and rely heavily on real-time processing for split-second decisions. This is where edge data centres come in. Unlike hyperscale data centres, edge data centres are strategically positioned closer to users and devices, minimising data travel distances and enabling near-instantaneous responses. They are also typically smaller, with a capacity ranging from 500 kW to 2 MW. In comparison, large data centres have a capacity of more than 80 MW.

While edge data centres are gaining traction, cloud-based hyperscalers such as AWS, Microsoft Azure, and Google Cloud remain a dominant force in the Southeast Asian data centre landscape. These facilities require substantial capital investment – for instance, it took almost USD 1 billion to build Meta’s 150 MW hyperscale facility in Singapore – but offer immense processing power and scalability. While hyperscalers have the resources to build their own data centres in edge locations or emerging markets, they often opt for colocation facilities to familiarise themselves with local markets, build out operations, and take a “wait and see” approach before committing significant investments in the new market.

The growth of data centres in Southeast Asia – whether edge, cloud, hyperscale, or colocation – can be attributed to a range of factors. The region’s rapidly expanding digital economy and increasing internet penetration are the prime reasons behind the demand for data storage and processing capabilities. Additionally, stringent data sovereignty regulations in many Southeast Asian countries require the presence of local data centres to ensure compliance with data protection laws. Indonesia’s Personal Data Protection Law, for instance, allows personal data to be transferred outside of the country only where certain stringent security measures are fulfilled. Finally, the rising adoption of cloud services is also fuelling the need for onshore data centres to support cloud infrastructure and services.

Notable Regional Data Centre Hubs

Singapore. Singapore imposed a moratorium on new data centre developments between 2019 and 2022 due to concerns over energy consumption and sustainability. However, the city-state has recently relaxed this ban and announced a pilot scheme allowing companies to bid for permission to develop new facilities.

In 2023, the Singapore Economic Development Board (EDB) and the Infocomm Media Development Authority (IMDA) provisionally awarded around 80 MW of new capacity to four data centre operators: Equinix, GDS, Microsoft, and a consortium of AirTrunk and ByteDance (TikTok’s parent company). Singapore boasts a formidable digital infrastructure with 100 data centres, 1,195 cloud service providers, and 22 network fabrics. Its robust network, supported by 24 submarine cables, has made it a global cloud connectivity leader, hosting major players like AWS, Azure, IBM Softlayer, and Google Cloud.

Aware of the high energy consumption of data centres, Singapore has taken a proactive stance towards green data centre practices. A collaborative effort between the IMDA, government agencies, and industries led to the development of a “Green Data Centre Standard”. This framework guides organisations in improving data centre energy efficiency, leveraging the established ISO 50001 standard with customisations for Singapore’s context. The standard defines key performance metrics for tracking progress and includes best practices for design and operation. By prioritising green data centres, Singapore strives to reconcile its digital ambitions with environmental responsibility, solidifying its position as a leading Asian data centre hub.

Malaysia. Initiatives like MyGovCloud and the Digital Economy Blueprint are driving Malaysia’s public sector towards cloud-based solutions, aiming for 80% use of cloud storage. Tenaga Nasional Berhad also established a “green lane” for data centres, solidifying Malaysia’s commitment to environmentally responsible solutions and streamlined operations. Some of the big companies already operating include NTT Data Centers, Bridge Data Centers and Equinix.

The district of Kulai in Johor has emerged as a hotspot for data centre activity, attracting major players like Nvidia and AirTrunk. Conditional approvals have been granted to industry giants like AWS, Microsoft, Google, and Telekom Malaysia to build hyperscale data centres, aimed at making the country a leading hub for cloud services in the region. AWS also announced a new AWS Region in the country that will meet the high demand for cloud services in Malaysia.

Indonesia. With over 200 million internet users, Indonesia boasts one of the world’s largest online populations. This expanding internet economy is leading to a spike in the demand for data centre services. The Indonesian government has also implemented policies, including tax incentives and a national data centre roadmap, to stimulate growth in this sector.

Microsoft, for instance, is set to open its first regional data centre in Thailand and has also announced plans to invest USD 1.7 billion in cloud and AI infrastructure in Indonesia. The government also plans to operate 40 MW of national data centres across West Java, Batam, East Kalimantan, and East Nusa Tenggara by 2026.

Thailand. Remote work and increasing online services have led to a data centre boom, with major industry players racing to meet Thailand’s soaring data demands.

In 2021, Singapore’s ST Telemedia Global Data Centres launched its first 20 MW hyperscale facility in Bangkok. Soon after, AWS announced a USD 5 billion investment plan to bolster its cloud capacity in Thailand and the region over the next 15 years. Heavyweights like TCC Technology Group, CAT Telecom, and True Internet Data Centre are also fortifying their data centre footprints to capitalise on this explosive growth. Microsoft is also set to open its first regional data centre in the country.

Conclusion

Southeast Asia’s booming data centre market presents a goldmine of opportunity for tech investment and innovation. However, navigating this lucrative landscape requires careful consideration of legal hurdles. Data protection regulations, cross-border data transfer restrictions, and local policies all pose challenges for investors. Beyond legal complexities, infrastructure development needs and investment considerations must also be addressed. Despite these challenges, the potential rewards for companies that can navigate them are substantial.

For many organisations migrating to cloud, the opportunity to run workloads from energy-efficient cloud data centres is a significant advantage. However, carbon emissions can vary from one country to another and if left unmonitored, will gradually increase over time as cloud use grows. This issue will become increasingly important as we move into the era of compute-intensive AI and the burden of cloud on natural resources will shift further into the spotlight.

The International Energy Agency (IEA) estimates that data centres are responsible for up to 1.5% of global electricity use and 1% of GHG emissions. Cloud providers have recognised this and are committed to change. Between 2025 and 2030, all hyperscalers – AWS, Azure, Google, and Oracle included – expect to power their global cloud operations entirely with renewable sources.

Chasing the Sun

Cloud providers are shifting their sights from simply matching electricity use with renewable power purchase agreements (PPA) to the more ambitious goal of operating 24/7 on carbon-free sources. A defining characteristic of renewables though is intermittency, with production levels fluctuating based on the availability of sunlight and wind. Leading cloud providers are using AI to dynamically distribute compute workloads throughout the day to regions with lower carbon intensity. Workloads that are processed with solar power during daylight can be shifted to nearby regions with abundant wind energy at night.
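
The workload-shifting idea can be illustrated with a toy scheduler that, for each hour, picks the region with the lowest forecast carbon intensity. The region names and intensity figures below are invented for illustration, and `greenest_region` and `plan_schedule` are hypothetical helpers; real schedulers also weigh latency, data residency, and capacity.

```python
# Hypothetical carbon intensity forecasts in gCO2e per kWh, keyed by hour.
FORECAST = {
    "region-solar": {"12:00": 120, "00:00": 480},
    "region-wind": {"12:00": 310, "00:00": 150},
}


def greenest_region(forecast, hour):
    """Return the region with the lowest forecast carbon intensity at that hour."""
    return min(forecast, key=lambda region: forecast[region][hour])


def plan_schedule(forecast, hours):
    """Map each hour to the region where a movable workload should run."""
    return {hour: greenest_region(forecast, hour) for hour in hours}
```

With this sample forecast, `plan_schedule(FORECAST, ["12:00", "00:00"])` sends the midday run to the solar-heavy region and the midnight run to the wind-heavy one.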

Addressing Water Scarcity

Many of the largest cloud data centres are situated in sunny locations to take advantage of solar power and proximity to population centres. Unfortunately, this often means that they are also in areas where water is scarce. While liquid-cooled facilities are energy efficient, local communities are concerned about the strain on water sources. Data centre operators are now committing to reduce consumption and restore water supplies. Simple measures, such as expanding humidity (below 20% RH) and temperature tolerances (above 30°C) in server rooms, have helped companies like Meta to cut wastage. Similarly, Google has increased its reliance on non-potable sources, such as grey water and sea water.

From Waste to Worth

Data centre operators have identified innovative ways to reuse the excess heat generated by their computing equipment. Some have used it to heat adjacent swimming pools while others have warmed rooms that house vertical farms. Although these initiatives currently have little effect on the environmental impact of cloud, they suggest a future where waste is significantly reduced.

Greening the Grid

The giant facilities that cloud providers use to house their computing infrastructure are also set to change. Building materials and construction account for an astonishing 11% of global carbon emissions. The use of recycled materials in concrete and investment in greener methods of manufacturing steel are approaches the construction industry is taking to lessen its impact. Smaller data centres have been 3D printed to accelerate construction and use recyclable printing concrete. While this approach may not be suitable for hyperscale facilities, it holds potential for smaller edge locations.

Rethinking Hardware Management

Cloud providers rely on their scale to provide fast, resilient, and cost-effective computing. In many cases, simply replacing malfunctioning or obsolete equipment would achieve these goals better than performing maintenance. However, the relentless growth of e-waste is putting pressure on cloud providers to participate in the circular economy. Microsoft, for example, has launched three Circular Centres to repurpose cloud equipment. During the pilot of its Amsterdam centre, the company achieved 83% reuse and 17% recycling of critical parts. The lifecycle of equipment in the cloud is largely hidden, but environmentally conscious users will start demanding greater transparency.

Recommendations

Organisations should be aware of their cloud-derived scope 3 emissions and consider broader environmental issues around water use and recycling. Here are the steps that can be taken immediately:

- Monitor GreenOps. Cloud providers are adding GreenOps tools, such as the AWS Customer Carbon Footprint Tool, to help organisations measure the environmental impact of their cloud operations. Understanding the relationship between cloud use and emissions is the first step towards sustainable cloud operations.

- Adopt Cloud FinOps for Quick ROI. Eliminating wasted cloud resources not only cuts costs but also reduces electricity-related emissions. Tools such as CloudVerse provide visibility into cloud spend, identify unused instances, and help to optimise cloud operations.

- Take a Holistic View. Cloud providers are being forced to improve transparency and reduce their environmental impact by their biggest customers. Getting educated on the actions that cloud partners are taking to minimise emissions, water use, and waste to landfill is crucial. In most cases, dedicated cloud providers should reduce waste rather than offset it.

- Enable Remote Workforce. Cloud-enabled security and networking solutions, such as SASE, allow employees to work securely from remote locations and reduce their transportation emissions. With a SASE deployed in the cloud, routine management tasks can be performed by IT remotely rather than at the branch, further reducing transportation emissions.
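
The FinOps recommendation above comes down to finding resources that cost money but do little work. The sketch below shows that check on hypothetical usage records. The `Instance` fields, the 5% CPU cut-off, and the 730-hour month are assumptions chosen for illustration, not values taken from any particular tool.

```python
from dataclasses import dataclass


@dataclass
class Instance:
    """Hypothetical usage record for one cloud instance."""

    name: str
    avg_cpu_percent: float  # average CPU utilisation over the review period
    hourly_cost_usd: float


def find_idle(instances, cpu_threshold=5.0):
    """Return instances whose average CPU utilisation is below the threshold."""
    return [i for i in instances if i.avg_cpu_percent < cpu_threshold]


def monthly_savings(instances, hours_per_month=730):
    """Estimate the monthly cost avoided by shutting the given instances down."""
    return sum(i.hourly_cost_usd for i in instances) * hours_per_month
```

Running `find_idle` over a fleet export and passing the result to `monthly_savings` gives a first estimate of avoidable spend, and the same list doubles as a shortlist of avoidable electricity use.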

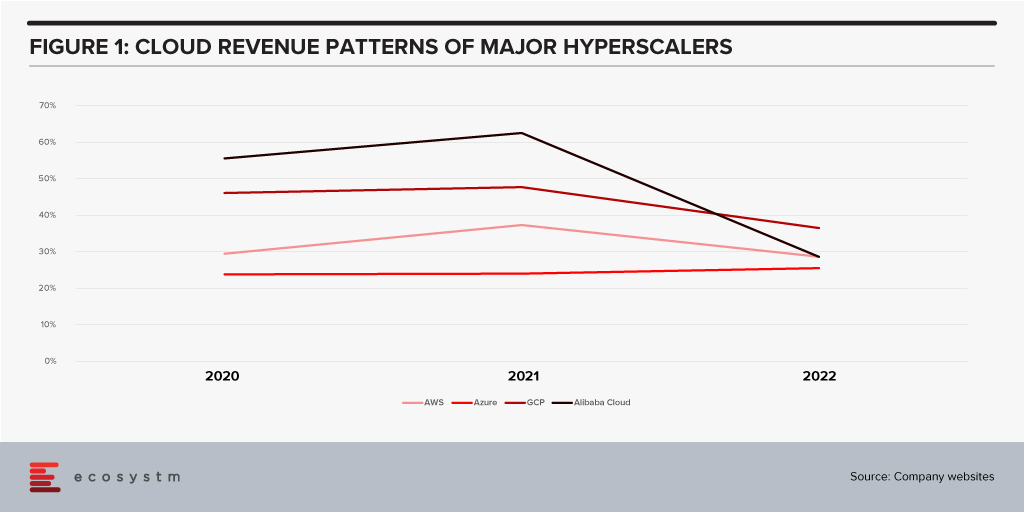

All growth must end eventually. But it is a brave person who will predict the end of growth for the public cloud hyperscalers. The hyperscaler cloud revenues have been growing at between 25% and 60% over the past few years (off very different bases – and often including and counting different revenue streams). Even the current softening of economic spend we are seeing across many economies is only causing a slight slowdown.

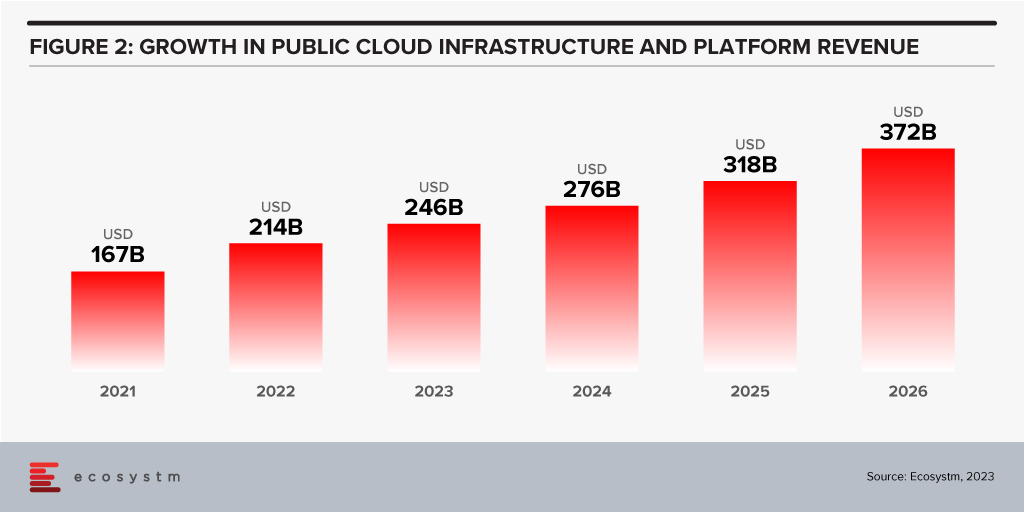

Looking forward, we expect growth in public cloud infrastructure and platform spend to continue to decline in 2024, but to accelerate in 2025 and 2026 as businesses take advantage of new cloud services and capabilities. The sheer size of the market means that percentage growth will be slower going forward – but in absolute terms, we forecast 2026 to see the highest revenue growth of any year since public cloud services were founded.

The factors driving this growth include:

- Acceleration of digital intensity. As countries come out of their economic slowdowns and economic activity increases, so too will digital activity. And greater volumes of digital activity will require an increase in the capacity of cloud environments on which the applications and processes are hosted.

- Increased use of AI services. Businesses and AI service providers will need access to GPUs – and eventually, specialised AI chipsets – which will see cloud bills increase significantly. The extra data storage to drive the algorithms – and the increase in CPU required to deliver the customised or personalised experiences that these algorithms will direct – will also drive increased cloud usage.

- Further movement of applications from on-premises to cloud. Many organisations – particularly those in the Asia Pacific region – still have the majority of their applications and tech systems sitting in data centre environments. Over the next few years, more of these applications will move to hyperscalers.

- Edge applications moving to the cloud. As the public cloud giants improve their edge computing capabilities – in partnership with hardware providers, telcos, and a broader expansion of their own networks – there will be greater opportunity to move edge applications to public cloud environments.

- Increasing number of ISVs hosting on these platforms. The move from on-premises to cloud will drive some growth in hyperscaler revenues and activities – but the ISVs born in the cloud will also drive significant growth. SaaS and PaaS are typically seeing growth above the rates of IaaS – but are also drivers of the growth of cloud infrastructure services.

- Improving cloud marketplaces. Continuing on the topic of ISV partners, as the cloud hyperscalers make it easier and faster to find, buy, and integrate new services from their cloud marketplace, the adoption of cloud infrastructure services will continue to grow.

- New cloud services. No one has a crystal ball, and few people know what is being developed by Microsoft, AWS, Google, and the other cloud providers. New services will exist in the next few years that aren’t even being considered today. Perhaps Quantum Computing will start to see real business adoption? But these new services will help to drive growth – even if “legacy” cloud service adoption slows down or services are retired.

Hybrid Cloud Will Play an Important Role for Many Businesses

Growth in hyperscalers doesn’t mean that the hybrid cloud will disappear. Many organisations will hit a natural “ceiling” for their public cloud services. Regulations, proximity, cost, volumes of data, and “gravity” will see some applications remain in data centres. However, businesses will want to manage, secure, transform, and modernise these applications at the same rate and use the same tools as their public cloud environments. Therefore, hybrid and private cloud will remain important elements of the overall cloud market. Their success will depend on their ability to integrate with and support public cloud environments.

The future of cloud is big – but like all infrastructure and platforms, cloud is not a goal in itself. What is exciting is what cloud enables, and will further enable, for businesses and customers. As the rates of digitisation and digital intensity increase, the opportunities for the cloud infrastructure and platform providers will blossom. Sometimes they will be the driver of the growth, and other times they will just be supporting actors. But either way, in 2026 – 20 years after the birth of AWS – the growth in cloud services will be bigger than ever.

Google recently extended its Generative AI, Bard, to include coding in more than 20 programming languages, including C++, Go, Java, JavaScript, and Python. The search giant has been eager to respond to last year’s launch of ChatGPT but as the trusted incumbent, it has naturally been hesitant to move too quickly. The tendency for large language models (LLMs) to produce controversial and erroneous outputs has the potential to tarnish established brands. Google Bard was released in March in the US and the UK as an LLM but lacked the coding ability of OpenAI’s ChatGPT and Microsoft’s Bing Chat.

Bard’s new features include code generation, optimisation, debugging, and explanation. Using natural language processing (NLP), users can explain their requirements to the AI and ask it to generate code that can then be exported to an integrated development environment (IDE) or executed directly in the browser with Google Colab. Similarly, users can request Bard to debug already existing code, explain code snippets, or optimise code to improve performance.

Google continues to refer to Bard as an experiment and highlights that, as is the case with generated text, code produced by the AI may not function as expected. Regardless, the new functionality will be useful for both beginner and experienced developers. Those learning to code can use Generative AI to debug and explain their mistakes or write simple programs. More experienced developers can use the tool to perform lower-value work, such as commenting on code, or scaffolding to identify potential problems.

GitHub Copilot X to Face Competition

While the ability for Bard, Bing, and ChatGPT to generate code is one of their most important use cases, developers are now demanding AI directly in their IDEs.

In March, Microsoft made one of its most significant announcements of the year when it demonstrated GitHub Copilot X, which embeds GPT-4 in the development environment. Earlier this year, Microsoft invested $10 billion into OpenAI to add to the $1 billion from 2019, cementing the partnership between the two AI heavyweights. Among other benefits, this agreement makes Azure the exclusive cloud provider to OpenAI and provides Microsoft with the opportunity to enhance its software with AI co-pilots.

Copilot X is currently in technical preview; when it eventually launches, it will integrate into Visual Studio – Microsoft’s IDE. Presented as a sidebar or chat directly in the IDE, Copilot X will be able to generate, explain, and comment on code, debug, write unit tests, and identify vulnerabilities. The “Hey, GitHub” functionality will allow users to chat using voice, suitable for mobile users or more natural interaction on a desktop.

Not to be outdone by its cloud rivals, in April, AWS announced the general availability of what it describes as a real-time AI coding companion. Amazon CodeWhisperer integrates with a range of IDEs, namely Visual Studio Code, IntelliJ IDEA, CLion, GoLand, WebStorm, Rider, PhpStorm, PyCharm, RubyMine, and DataGrip, or natively in AWS Cloud9 and the AWS Lambda console. While the preview worked for Python, Java, JavaScript, TypeScript, and C#, the general release extends support for most languages. Amazon’s key differentiation is that it is available for free to individual users, while GitHub Copilot is currently subscription-based with exceptions only for teachers, students, and maintainers of open-source projects.

The Next Step: Generative AI in Security

The next battleground for Generative AI will be assisting overworked security analysts. Currently, some of the greatest challenges that Security Operations Centres (SOCs) face are being understaffed and overwhelmed with the number of alerts. Security vendors, such as IBM and Securonix, have already deployed automation to reduce alert noise and help analysts prioritise tasks to avoid responding to false threats.

Google recently introduced Sec-PaLM and Microsoft announced Security Copilot, bringing the power of Generative AI to the SOC. These tools will help analysts interact conversationally with their threat management systems and will explain alerts in natural language. How effective these tools will be is yet to be seen, considering that hallucinations in security are far riskier than in an essay written with ChatGPT.

The Future of AI Code Generators

Although GitHub Copilot and Amazon CodeWhisperer had already launched with limited feature sets, it was the release of ChatGPT last year that ushered in a new era in AI code generation. There is now a race between the cloud hyperscalers to win over developers and to provide AI that supports other functions, such as security.

Despite fears that AI will replace humans, in its current state AI is more likely to be used as a tool to augment developers. Although AI and automated testing reduce the burden on the already stretched workforce, humans will continue to be in demand to ensure code is secure and satisfies requirements. A likely scenario is that with coding becoming simpler, rather than the number of developers shrinking, the volume and quality of code written will increase. AI will generate a new wave of citizen developers able to work on projects that would previously have been impossible to start. This may, in turn, increase demand for developers to build on these proofs-of-concept.

How the Generative AI landscape evolves over the next year will be interesting. In a recent interview, OpenAI’s co-founder, Sam Altman, explained that the non-profit model it initially pursued is not feasible, necessitating the launch of a capped-profit subsidiary. The company retains its values, however, focusing on advancing AI responsibly and transparently with public consultation. The appearance of Microsoft, Google, and AWS will undoubtedly change the market dynamics and may force OpenAI to at least reconsider its approach once again.

Last week, Kyndryl became a Premier Global Alliance Partner for AWS. This follows other recent similar partnerships for Kyndryl with Google and Microsoft. This now gives Kyndryl premier or similar partner status at the big three hyperscalers.

The Partnership

This new partnership was essential for Kyndryl to provide legitimacy to their independent reputation and their global presence. And in many respects, it is a partnership that AWS needs as much as Kyndryl does. As one of the largest global managed services providers, Kyndryl manages a huge amount of infrastructure and thousands of applications. Today, most of these applications sit outside public cloud environments, but at some stage in the future, many of these applications will move to the public cloud. AWS has positioned itself to benefit from this transition – as Kyndryl will be advising clients on which cloud environment best suits their needs, and in many cases Kyndryl will also be running the application migration and managing the application when it resides in the cloud. To that end, the further investment in developing an accelerator for VMware Cloud on AWS will also help to differentiate Kyndryl on AWS. With a high proportion of Kyndryl customers running VMware, this capability will help VMware users to migrate these workloads to the cloud and run core business services on AWS.

The Future

Beyond the typical partnership activities, Kyndryl will build out its own internal infrastructure in the cloud, leveraging AWS as its preferred cloud provider. This experience will mean that Kyndryl “drinks its own champagne” – many other managed services providers have not yet taken the majority of their infrastructure to the cloud, so this experience will help to set Kyndryl apart from their competitors, along with providing deep learning and best practices.

By the end of 2022, Kyndryl expects to have trained more than 10,000 professionals on AWS. Assuming the company hits these targets, they will be one of AWS’s largest partners. However, experience trumps training, and their relatively recent entry into the broader cloud ecosystem space (after coming out from under IBM’s wing at the end of 2021) means they have some way to go to have the depth and breadth of experience that other Premier Alliance Partners have today.

Ecosystm Opinion

In my recent interactions with Kyndryl, what sets them apart is the fact that they are completely customer-focused. They start with a client problem and find the best solution for that problem. Yes – some of the “best solutions” will be partner specific (such as SAP on Azure, VMware on AWS), but they aren’t pushing every customer down a specific path. They are not just an AWS partner – where every solution to every problem starts and ends with AWS. The importance of this new partnership is it expands the capabilities of Kyndryl and hence expands the possibilities and opportunities for Kyndryl clients to benefit from the best solutions in the market – regardless of whether they are on-premises or in one of the big three hyperscalers.