Over a century ago, the advent of commercial flights marked a pivotal moment in globalisation, shrinking the time-distance between cities and nations. Less than a century later, the first video call foreshadowed a future where conversations could span continents in real time, compressing the space-distance between people.

The world feels smaller, not literally, but in how we experience space and time. Messages that once took days to deliver arrive instantly. Distances between cities are now measured in hours, not miles. A product designed in New York is manufactured in Shenzhen and reaches London shelves within weeks. In essence, things traverse the world with far less friction than they once did.

Welcome to The Immediate Economy!

The gap between desire and fulfilment has narrowed, driven by technology’s speed and convenience. This time-space annihilation has ushered in what we now call The Immediate Economy.

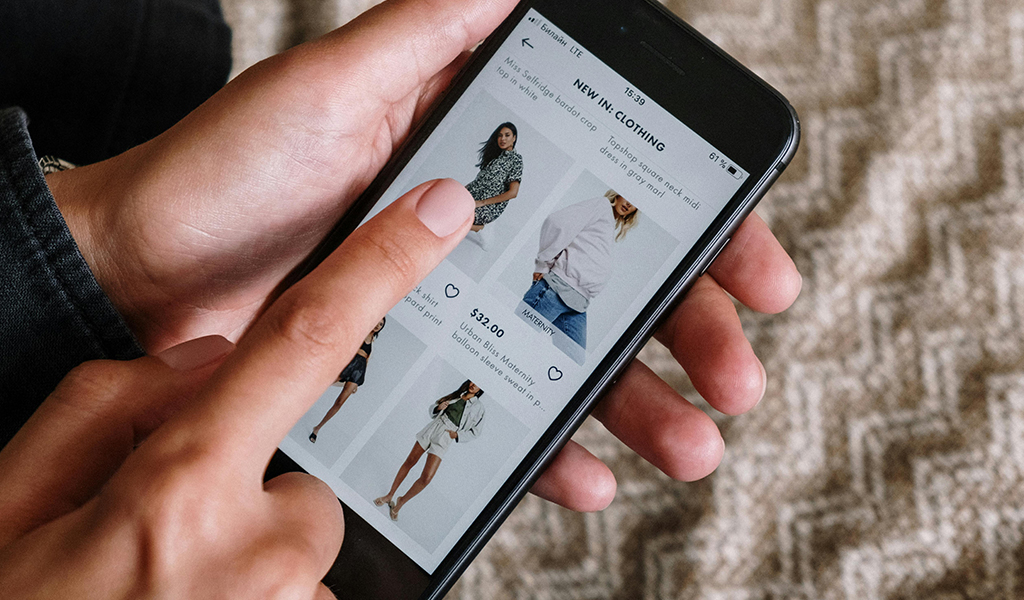

Such transformations haven’t gone unnoticed: “at the click of a button” has become a native (if somewhat clichéd) expression. Amidst all this innovation, a new type of consumer has emerged – one whose attention is fleeting and easy to lose. Modern consumers have compelled industries, especially retail and ecommerce, to evolve, creating experiences that not only capture but also hold their interest.

Beyond Usability: Crafting a Memorable User Experience

Selling a product is no longer about just the product itself; it’s about the lifestyle, the experience, and the rush of dopamine with every interaction. And it’s all because of technology.

In a podcast interview with the American Psychological Association, Professor Gloria Mark of the University of California, Irvine, described a significant decline in attention spans on screens, from 150 seconds in 2004 to 40 seconds in the last five years. Social media platforms have spoiled the modern consumer by curating content that caters instantly to their desires. That influence spills into the retail sector, compelling retailers to create experiences that match the immediacy and personalisation people now expect.

Modern consumers require modern retail experiences. Take Whole Foods and its recent rollout of Amazon’s Dash Cart, which transforms the mundane act of grocery shopping into a seamless dance of efficiency. Shoppers can now glide through the aisles with carts that tally their selections and charge the total directly to their accounts, rendering checkout lines obsolete. It’s more than convenience; it reimagines retail as a choreography of consumerism where every step is both effortless and calculated.

Whole Foods can also analyse data from its Dash Carts to gain valuable insights into shopping patterns. The Immediate Economy revolutionises retail, transforming it into a hyper-efficient, personalised experience.

Retail’s New Reality: The Rise of Experiential Shopping

Just as Netflix queues up a binge-worthy series, retailers are creating shopping experiences as engaging and addictive as your favourite shows.

It’s been a financially rough year for Nike, but that hasn’t stopped them from expanding their immersive retail experience. Nike’s “House of Innovation” leverages 3D holographic tech. Customers can inspect intricate details of sneakers, including the texture of the fabric, the design of the laces, and the construction of the sole. The holographic display can also adjust to different lighting conditions and present the sneaker in various colours, providing a truly immersive and personalised shopping experience.

Fashion commerce platforms like Farfetch are among the many integrating Virtual Try-On (VTO) technology. Using the camera and sensors on customers’ devices, Farfetch’s AR technology overlays a digital image of a handbag onto a live view of the customer, letting shoppers see how different styles and sizes would look on them. This approach makes online shopping more interactive and engaging.

The 3D holographic display and the AR technology are unique, visually appealing ways to showcase products, allowing customers to interact with them in ways that traditional displays cannot. Each shopping trip feels like the next episode of retail therapy.

The Evolution of Shoppertainment

The bar for quick content consumption is higher than ever thanks to platforms like TikTok and Instagram.

A prime example of this trend is Styl, a tech startup founded by two Duke students, with its “Tinder for shopping” app. Styl offers a swipeable interface for discovering and purchasing fashion items, seamlessly integrating the convenience and engagement of social media into the retail experience.

Styl goes beyond a simple swipe. Using AI algorithms, it learns your preferences and curates a personalised feed of clothing that matches your taste. By streamlining the shopping process, it delivers a tailored experience that caters to the modern consumer’s desire for convenience and personalisation.

Interestingly, Styl isn’t even a retailer; it aggregates items from other websites and redirects users to them when they show interest. By combining ecommerce with AI, it creates the ultimate shopping experience for today’s customer. It’s fast, customised, and changing the way we shop.

Styl is not the first to do this; Instagram and TikTok already provide individualised suggestions within their marketplaces. What sets Styl apart is that it sells an experience, a vibe.

Tech-Powered Retail: The Heart of the Immediate Economy

History is filled with examples of societal innovation, but the Immediate Economy is transforming retail in exciting ways. In the 21st century, technology is both the catalyst and the consequence of the retail industry transformation. It began by capturing and fragmenting the average consumer’s attention, and now it’s reshaping consumer-brand relationships.

Today’s consumers crave personalised shopping. Whole Foods, with its AI-driven Dash Carts, is redefining convenience. Nike and Farfetch, through immersive AR and 3D tech, are making shopping an interactive adventure. Meanwhile, startups like Styl are using AI to bring personalised fashion choices directly to consumers’ smartphones. The world is shrinking, not just in miles, but in the milliseconds it takes to satisfy a desire. From the aisles of Whole Foods to the virtual showrooms of Farfetch, The Immediate Economy offers an immersive world where time and space bend to technology’s will, and instant gratification is no longer a perk; it’s an expectation. The Immediate Economy is here, and it’s changing how we interact with the world around us. Welcome to the future of retail, and everything else.

The increasing alignment between IT and business functions, while crucial for organisational success, complicates the management of enterprise systems. Tech leaders must balance rapidly evolving business needs with maintaining system stability and efficiency. This dynamic adds pressure to deliver agility while ensuring long-term ERP health, making management increasingly complex.

As tech providers such as SAP enhance their capabilities and products, they will impact business processes, technology skills, and the tech landscape.

At SAP NOW Southeast Asia in Singapore, SAP presented their future roadmap, with a focus on empowering their customers to transform with agility. Ecosystm Advisors Sash Mukherjee and Tim Sheedy provide insights on SAP’s recent announcements and messaging.

What was your key takeaway from the event?

TIM SHEEDY. SAP is making a strong comeback in Asia Pacific, ramping up their RISE with SAP program after years of incremental progress. The focus is on transitioning customers from complex, highly customised legacy systems to cloud ERP, aligning with the region’s appetite for simplifying core processes, reducing customisations, and leveraging cloud benefits. Many on-prem SAP users have fallen behind on updates due to over-customisation, turning even minor upgrades into major projects – and SAP’s offerings aim to solve for these challenges.

SASH MUKHERJEE. A standout feature of the session was the compelling customer case studies. Unlike many industry events where customer stories can be generic, the stories shared were tangible examples of SAP’s impact. From Mitr Phol’s use of RISE with SAP to enhance farm-to-table transparency, to CP Group’s ambitious sustainability goals aligned with the SBTi, and Standard Chartered Bank’s focus on empowering data analytics teams, these testimonials offered concrete illustrations of SAP’s value proposition.

How is SAP integrating AI into their offerings?

TIM SHEEDY. SAP, like other tech platforms, is ramping up their AI capabilities – but with a twist.

They are not only highlighting GenAI but also emphasising their predictive AI features. SAP’s approach focuses on embedded AI, integrating it directly into systems and processes to offer low-risk, user-friendly solutions.

Joule, their AI copilot, is enterprise-ready, providing seamless integration with SAP backend systems and meeting strict compliance standards like GDPR and SOC-II. By also integrating with Microsoft 365, Joule extends its reach to daily tools like Outlook, Teams, Word, and Excel.

While SAP AI may lack the flash of other platforms, it is designed for SAP users – managers and board members – who prioritise consistency, reliability, and auditability alongside business value.

What is the value proposition of SAP’s Clean Core?

SASH MUKHERJEE. SAP’s Clean Core marks a strategic shift in ERP management.

Traditionally, businesses heavily customised SAP to meet specific needs, resulting in complex and costly IT landscapes. Clean Core advocates a standardised system with minimal customisations, offering benefits like increased agility, lower costs, and reduced risk during upgrades. However, necessary customisations can still be built on SAP Business Technology Platform (BTP).

The move to the Clean Core is often driven by CEO mandates, as legacy SAP solutions have become too complex to fully leverage data. For example, an Australian mining company reduced customisations from 27,000 to 200, and Standard Chartered Bank used Clean Core data to launch a carbon program within four months.

However, the transition can be challenging and will require enhanced developer productivity, expansion of tooling, and clear migration paths.

How is SAP shifting their partner strategy?

As SAP customers face significant transformations, tech partners – cloud hyperscalers, systems integrators, consulting firms and managed services providers – will play a crucial role in executing these changes before SAP ECC loses support in 2027.

TIM SHEEDY. SAP has always relied on partners for implementations, but with fewer large-scale upgrade projects in recent years, many partner teams have shrunk. Recognising this, SAP is working to upskill partners on RISE with SAP. This effort aims to ensure they can effectively manage and optimise the modern Cloud ERP platform, utilise assets, templates, accelerators, and tools for rapid migration, and foster continuous innovation post-migration. The availability of these skills in the market will be essential for SAP customers to ensure successful transitions to the Cloud ERP platform.

SASH MUKHERJEE. SAP’s partner strategy emphasises business transformation over technology migration. This shift requires partners to focus on delivering measurable business outcomes rather than solely selling technology. Given the prevalence of partner-led sales in Southeast Asia, there is a need to empower partners with tools and resources to effectively communicate the value proposition to business decision-makers. While RISE certifications will be beneficial for larger partners, a significant portion of the market comprises SMEs that rely on smaller, local partners – and they will need support mechanisms too.

What strategies should SAP prioritise to maintain market leadership?

TIM SHEEDY. Any major platform change gives customers an opportunity to explore alternatives.

Established players like Oracle, Microsoft, and Salesforce are aggressively pursuing the ERP market. Meanwhile, industry-specific solutions, third-party support providers, and even emerging technologies like those offered by ServiceNow are challenging the traditional ERP landscape.

However, SAP has made significant strides in easing the transition from legacy platforms and is expected to continue innovating around RISE with SAP. By offering incentives and simplifying migration, SAP aims to retain its customer base. While SAP’s focus on renewal and migration could pose challenges for growth, the company’s commitment to execution suggests it will retain most of its customers. GROW with SAP is likely to be a key driver of new business, particularly among mid-sized organisations, and especially if SAP can tailor offerings for the cost-sensitive markets in the region.

The global data protection landscape is growing increasingly complex. With the proliferation of privacy laws across jurisdictions, organisations face a daunting challenge in ensuring compliance.

From the foundational GDPR and evolving US state-level regulations to new laws in emerging markets, businesses with a cross-border presence must navigate a maze of requirements to protect consumer data. This complexity, coupled with the rapid pace of regulatory change, requires proactive and strategic approaches to data management and protection.

GDPR: The Catalyst for Global Data Privacy

At the forefront of this global push for data privacy stands the General Data Protection Regulation (GDPR), a landmark piece of legislation that has reshaped data governance both within the EU and beyond. It has become a de facto standard for data management, influencing the creation of similar laws in countries like India and China, and in regions such as Southeast Asia and the US.

The GDPR itself is evolving to tackle new challenges and incorporate lessons from past data breaches. The 2024 amendments focus on enforcement efficiency: stronger enforcement in cross-border cases, faster complaint handling, and tougher breach penalties. The One-Stop-Shop mechanism will be strengthened for better handling of cross-border data processing, with clearer guidelines for identifying the lead supervisory authority and faster information sharing. Deadlines for cross-border decisions will be shortened, and Data Protection Authorities (DPAs) must cooperate more closely. Rules for data transfers to third countries will be clarified, and DPAs will have stronger enforcement powers, including higher fines for non-compliance.

For organisations, these changes mean increased scrutiny and potential penalties due to faster investigations. Improved DPA cooperation can lead to more consistent enforcement across the EU, making it crucial to stay updated and adjust data protection practices. While aiming for more efficient GDPR enforcement, these changes may also increase compliance costs.

GDPR’s Global Impact: Shaping Data Privacy Laws Worldwide

Despite being drafted by the EU, the GDPR has global implications, influencing data privacy laws worldwide, including in Canada and the US.

Canada’s Personal Information Protection and Electronic Documents Act (PIPEDA) governs how the private sector handles personal data, emphasising data minimisation and imposing fines of up to USD 75,000 for non-compliance.

The US data protection landscape is a patchwork of state laws influenced by the GDPR and PIPEDA. The California Privacy Rights Act (CPRA) and other state laws like Virginia’s CDPA and Colorado’s CPA reflect GDPR principles, requiring transparency and limiting data use. Proposed federal legislation, such as the American Data Privacy and Protection Act (ADPPA), aims to establish a national standard similar to PIPEDA.

The GDPR’s impact extends beyond EU borders, significantly influencing data protection laws in non-EU European countries. Countries like Switzerland, Norway, and Iceland have closely aligned their regulations with the GDPR to maintain data flows with the EU. Switzerland, for instance, revised its Federal Data Protection Act to ensure compatibility with GDPR standards. The UK, post-Brexit, retained a modified version of the GDPR in its domestic law through the UK GDPR and the Data Protection Act 2018. Even countries like Serbia and North Macedonia, aspiring to EU membership, have modelled their data protection laws on GDPR principles.

Data Privacy: A Local Flavour in Emerging Markets

Emerging markets are recognising the critical need for robust data protection frameworks. These countries are not just following in the footsteps of established regulations but are creating laws that address their unique economic and cultural contexts while aligning with global standards.

Brazil has over 140 million internet users, the fourth-largest online population in the world. Any data collection or processing within the country is governed by the Lei Geral de Proteção de Dados (LGPD), even when the data processor is located outside Brazil. The LGPD also requires organisations to appoint a Data Protection Officer (DPO) and establishes the National Data Protection Authority (ANPD) to oversee compliance and enforcement.

Saudi Arabia’s Personal Data Protection Law (PDPL) requires explicit consent for data collection and use, aligning with global norms. However, it is tailored to support Saudi Arabia’s digital transformation goals. The PDPL is overseen by the Saudi Data and Artificial Intelligence Authority (SDAIA), linking data protection with the country’s broader AI and digital innovation initiatives.

Closer to Home: Changes in Asia Pacific Regulations

The Asia Pacific region is experiencing a surge in data privacy regulations as countries strive to protect consumer rights and align with global standards.

Japan. Japan’s Act on the Protection of Personal Information (APPI) is set for a major overhaul in 2025. Certified organisations will have more time to report data breaches, while personal data might be used for AI training without consent. Enhanced data rights are also being considered, giving individuals greater control over biometric and children’s data. The government is still contemplating the introduction of administrative fines and collective action rights, though businesses have expressed concerns about potential negative impacts.

South Korea. South Korea has strengthened its data protection laws with significant amendments to the Personal Information Protection Act (PIPA), aiming to provide stronger safeguards for individual personal data. Key changes include stricter consent requirements, mandatory breach notifications within 72 hours, expanded data subject rights, refined data processing guidelines, and robust safeguards for emerging technologies like AI and IoT. There are also increased penalties for non-compliance.

China. China’s Personal Information Protection Law (PIPL) imposes stringent data privacy controls, emphasising user consent, data minimisation, and restricted cross-border data transfers. Severe penalties underscore the nation’s determination to safeguard personal information.

Southeast Asia. Southeast Asian countries are actively enhancing their data privacy landscapes. Singapore’s PDPA mandates breach notifications and increased fines. Malaysia is overhauling its data protection law, while Thailand’s PDPA has also recently come into effect.

Spotlight: India’s DPDP Act

The Digital Personal Data Protection Act, 2023 (DPDP Act), officially notified about a year ago, is anticipated to come into effect soon. This principles-based legislation shares similarities with the GDPR and applies to personal data that identifies individuals, whether collected digitally or digitised later. It excludes data used for personal or domestic purposes, aggregated research data, and publicly available information. The Act adopts GDPR-like territorial rules but does not extend to entities outside India that monitor behaviour within the country.

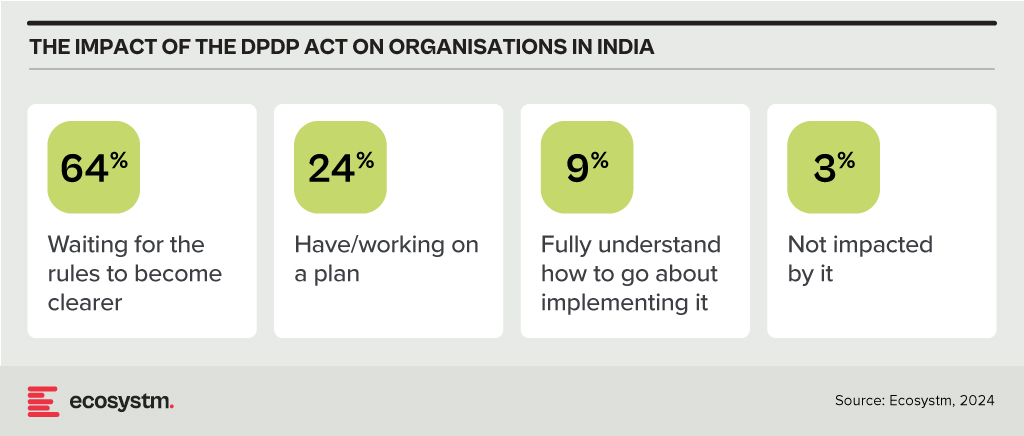

Consent under the DPDP Act must be free, informed, and specific, with companies required to provide a clear and itemised notice. Unlike the GDPR, the Act permits processing without consent for certain legitimate uses, such as legal obligations or emergencies. It also categorises data fiduciaries based on the volume and sensitivity of the data they handle, imposing additional obligations on significant data fiduciaries while offering exemptions for smaller entities. The Act simplifies cross-border data transfers compared to the GDPR, allowing transfers to all countries unless restricted by the Indian Government. It also provides broad exemptions to the State for data processing under specific conditions. Penalties for breaches are turnover-agnostic, with consideration given to breach severity and mitigating actions. The full impact of the DPDP Act will be clearer once the rules are finalised and the Board becomes operational, but 97% of Indian organisations acknowledge that it will affect them.

Conclusion

Data breaches pose significant risks to organisations, requiring a strong data protection strategy that combines technology and best practices. Key technological safeguards include encryption, identity and access management (IAM), firewalls, data loss prevention (DLP) tools, tokenisation, and endpoint protection platforms (EPP). Along with technology, organisations should adopt best practices such as inventorying and classifying data, minimising data collection, maintaining transparency with customers, providing choices, and developing comprehensive privacy policies. Training employees and designing privacy-focused processes are also essential. By integrating robust technology with informed human practices, organisations can strengthen their overall data protection strategy.

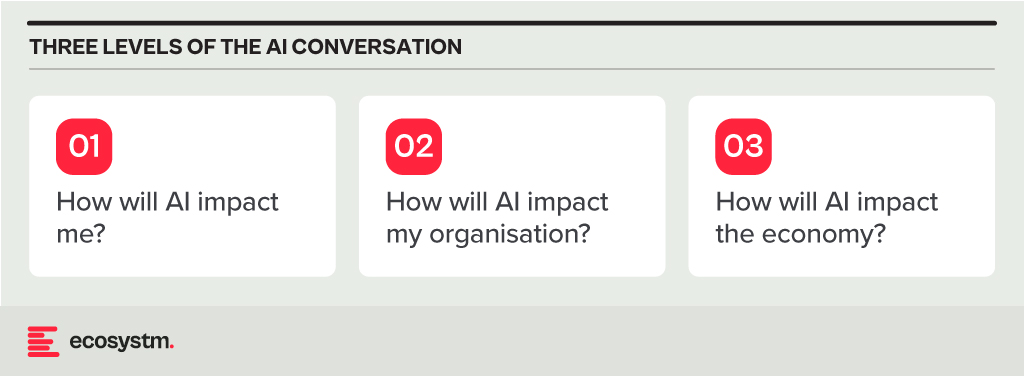

In my earlier post this week, I referred to the need for a grown-up conversation on AI. Here, I will focus on what conversations we need to have and what the solutions to AI disruption might be.

The Impact of AI on Individuals

AI is likely to impact people a lot! You might lose your job to AI. Even if it is not that extreme, it’s likely AI will do a lot of your job. And it might not be the “boring bits” – and sometimes the boring bits make a job manageable! IT helpdesk professionals, for instance, are already reporting that AIOps means they only deal with the difficult challenges. While that might be fun to start with, some personality types find this draining, knowing that every problem that ends up in the queue might take hours or days to resolve.

Your job will change. You will need new skills. Many organisations don’t invest in their employees, so you’ll need to upskill yourself in your own time and at your own cost. Look for employers who put new skill acquisition at the core of their employee offering. They are likely to be more successful in the medium-to-long term and will also be better employers with a happier workforce.

The Impact of AI on Organisations

Again – the impact on organisations will be huge. It will change the shape and size of organisations. We have already seen the impact in many industries. The legal sector is a major example, where AI can do much of the job of a paralegal. The same is true of the IT helpdesk example shared earlier: organisations with a mature tech environment will employ higher-skilled professionals in most roles. These sectors need to think about where their next generation of senior employees will come from if junior roles go to AI. Software developers and coders are seeing greater demand for their skills now, even as AI tools increasingly augment their work. However, these skills are at an inflection point, as solutions like TuringBots have already started performing developer roles and are likely to take over the jobs of many developers and even designers in the near future.

Some industries will find that AI helps junior roles act more like senior employees, while others will use AI to perform the junior roles. AI will also create new roles (such as “prompt engineers”), but even those jobs will be done by AI in the future (and we are starting to see that).

HR teams, senior leadership, and investors need to work together to understand what the future might look like for their organisations. They need to start planning today for that future. Hint: invest in skills development and acquisition – that’s what will help you to succeed in the future.

The Impact of AI on the Economy

Assuming the individual and organisational impacts play out as described, the economic impacts of widespread AI adoption will be significant, comparable to the Great Depression. If organisations lay off 30% of their employees, that means 30% of the economy is impacted, potentially leading to a drop in government revenue and an increase in government spending on welfare, etc. – basically leading to major societal disruption.

The “AI won’t displace workers” narrative strikes me as the technological equivalent of climate change denial. Just like ignoring environmental warnings, dismissing the potential for AI to significantly impact the workforce is a recipe for disaster. Let’s not fall into the same trap and become “AI deniers”.

What is the Solution?

The solutions revolve around two ideas, and these need to be adopted at an industry level and driven by governments, unions, and businesses:

- Pay a living salary (for all citizens). Some countries already do this, with the Nordic nations leading the charge. And it is no surprise that some of these countries have had the most consistent long-term economic growth. The challenge today is that many governments cannot afford this – and it will become even less affordable as unemployment grows. The solution? Changing tax structures, taxing organisational earnings in-country (to stop them recognising local earnings in low-tax locations), and taxing wealth (not incomes). Paying better salaries to essential workers who will not be replaced by AI (nurses, police, teachers, etc.) will also help keep economies afloat. Easier said than done, of course!

- Move to a shorter work week (but pay full salaries). It is in the economic interest of every organisation that people stay gainfully employed. We have already discussed the ripple effect of job cuts. But if employees are given more flexibility and work 3-day weeks, this not only spreads the work across more workers but also gives those workers more time to spend money, ensuring continued economic growth. Can every company do this? Probably not. But many can, and they might have to. The concept of a 5-day work week isn’t that old (less than 100 years, in fact – a 40-hour work week was only legislated in the US in the 1930s, and many companies had working days as short as 6 hours even in the 1950s). Just because we have worked this way for 80 years doesn’t mean that we will always have to. There is already a move towards 4-day work weeks. Tech.co surveyed over 1,000 US business leaders and found that 29% of companies with 4-day workweeks use AI extensively. In contrast, only 8% of organisations with a 5-day workweek use AI to the same degree.

AI Changes Everything

We are only at the beginning of the AI era. We have had a glimpse into the future, and it is both frightening and exciting. The opportunities for organisations to benefit from AI are already significant and will grow as the technology improves and businesses learn to adopt AI in areas where it can make an impact. But there will be consequences to this adoption. We already know what many of those consequences will be, so let’s start having those grown-up conversations today.

If you have seen me present recently – or even spoken to me for more than a few minutes, you’ve probably heard me go on about how the AI discussions need to change! At the moment, most senior executives, board rooms, governments, think tanks and tech evangelists are running around screaming with their hands on their ears when it comes to the impact of AI on jobs and society.

We are constantly being bombarded with the message that AI will help make knowledge workers more productive. AI won’t take people’s jobs – in fact it will help to create new jobs – you get the drift; you’ve been part of these conversations!

I was at an event recently where a leading cloud provider had a huge slide with the words: “Humans + AI Together” in large font across the screen. They then went on to demonstrate an opportunity for AI. In a live demo, they had the customer of a retailer call a store to check for stock of a dress. The call was handled by an AI solution, which engaged in a natural conversation with the customer. It verified their identity, checked dress stock at the store, processed the order, and even confirmed the customer’s intent to use their stored credit card.

So, in effect, on one slide, the tech provider emphasised that AI was not going to take our jobs, and two minutes later they showed how current AI capabilities could replace humans – today!

At an analyst event last week, representatives from three different tech providers told analysts how Microsoft Copilot is freeing up 10-15 hours a week. For a 40-hour work week, that’s a 25-38% time saving. In France (where the work week is 35 hours), that’s up to 43% of working time saved. So, by using a single AI platform, we can save 25-43% of our time – giving us the ability to work on other things.
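The percentage ranges above come from dividing the hours saved by the length of the working week. As a minimal sketch of that arithmetic, the snippet below uses only the figures quoted in the text (10 to 15 hours saved, against 40-hour and 35-hour weeks); the function name `share_of_week_saved` is illustrative, not part of any tool.

```python
def share_of_week_saved(hours_saved: float, week_hours: float) -> float:
    """Return the percentage of a working week freed up by the hours saved."""
    return 100 * hours_saved / week_hours


# Hours saved per week as reported at the event, applied to a 40-hour
# week and to France's 35-hour week.
for week in (40, 35):
    low = share_of_week_saved(10, week)
    high = share_of_week_saved(15, week)
    print(f"{week}-hour week: {low:.1f}% to {high:.1f}% saved")

# 40-hour week: 25.0% to 37.5% saved
# 35-hour week: 28.6% to 42.9% saved
```

Rounded, this gives the 25-38% range for a 40-hour week and the "up to 43%" figure for a 35-hour week.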

What are the Real Benefits of AI?

The critical question is: What will we do with this saved time? Will it improve revenue or profit for businesses? AI might make us more agile, faster, more innovative but unless that translates to benefits on the bottom line, it is pointless. For example, adopting AI might mean we can create three times as many products. However, if we don’t make any more revenue and/or profit by having three times as many products, then any productivity benefit is worthless. UNLESS it is delivered through decreased costs.

We won’t need as many humans in our contact centres if AI is taking calls. Ideally, AI will lead to more personalised customer experiences, which will drive fewer calls to the contact centre in the first place! Even sales-related calls may disappear as personal AI bots find deals and automatically sign us up. Of course, AI also costs money, particularly in terms of computing power. Some of the productivity uplift will be offset by the extra cost of the AI tools and platforms.

Many benefits that AI delivers will become table stakes. For example, if your competitor is updating their product four times a year and you are updating yours annually, you might lose market share – so the benefits of AI might just be “keeping up with the competition”. But there are many areas where additional activity won’t deliver benefits. Organisations are unlikely to benefit from three times as many promotional SMSs or EDMs, or from three times as much design work or as many brand redesigns.

I also believe that AI will create new roles. But you know what? AI will eventually do those jobs too. When automation came to agriculture, workers moved to factories. When automation came to factories, workers moved to offices. The (literally) trillion-dollar question is where workers go when automation comes to the office.

The Wider Impact of AI

The issue is that very few senior people in businesses or governments are planning for a future where maybe 30% of jobs done by knowledge workers go to AI. This could lead to the failure of economies. Government income will fall off a cliff. It will be unemployment on levels not seen since the Great Depression – or worse. And if we have not acknowledged these possible outcomes, how can we plan for them?

This is what I call the “grown-up conversation about AI”. It means acknowledging the opportunity for AI and its impacts on companies, industries, governments and societies. Once we acknowledge these likely outcomes, we can plan for them.

And that’s what I’ll discuss shortly – look out for my next Ecosystm Insight: The Three Possible Solutions for AI-driven Mass Unemployment.

With over 70% of the world’s population predicted to live in cities by 2050, smart cities that use data, technology, and AI to streamline services are key to ensuring a healthy and safe environment for all who live, work, or visit them.

Fuelled by rapid urbanisation, Southeast Asia is experiencing a smart city boom, with around 100 million people expected to move from rural areas to cities by 2030.

Despite their diverse populations and varying stages of economic development, ASEAN member countries are increasingly aligned: all share the belief that smart cities can help solve the complex urban and socio-economic challenges they face.

Read on to discover how Southeast Asian countries are using new tools to manage growth and deliver a better quality of life to hundreds of millions of people.

ASCN: A Network for Smarter Cities

The ASEAN Smart Cities Network (ASCN) is a collaborative platform where cities in the region exchange insights on adopting smart technology, finding solutions, and involving industry and global partners. They work towards the shared objective of fostering sustainable urban development and enhancing livability in their cities.

As of 2024, the ASCN includes 30 members, with new additions from Thailand and Indonesia.

“The ASEAN Smart Cities Network provides the sort of open platform needed to drive the smart city agenda. Different cities are at different levels of developments and ‘smartness’ and ASEAN’s diversity is well suited for such a network that allows for cities to learn from one another.”

– Taimur Khilji, United Nations Development Programme (UNDP)

Singapore’s Tech-Driven Future

The Smart Nation Initiative harnesses technology and data to improve citizens’ lives, boost economic competitiveness, and tackle urban challenges.

Smart mobility solutions, including sensor networks, real-time traffic management, and integrated public transportation with smart cards and mobile apps, have reduced congestion and travel times.

Ranked 5th globally and Asia’s smartest city, Singapore is developing a national digital twin to support better urban management. The 3D maps and subsurface model, created by the Singapore Land Authority, will help manage infrastructure and assets.

The Smart City Initiative promotes sustainability with innovative systems like automated pneumatic waste collection and investments in water management and energy-efficient solutions.

Malaysia’s Holistic Smart City Approach

With aspirations to become a Smart Nation by 2040 (outlined in their Fourth National Physical Plan – NPP4), Malaysia is making strides.

Five pilot cities, including Kuala Lumpur and Johor Bahru, are testing the waters by integrating advanced technologies to modernise infrastructure.

Pilots embrace sustainability, with projects like Gamuda Cove showcasing smart technologies for intelligent traffic management and centralised security within eco-friendly developments.

Malaysia’s Smart Cities go beyond infrastructure, adopting international standards like the WELL Building Standard to enhance resident health, well-being, and productivity. The Ministry of Housing and Local Government, collaborating with PLANMalaysia and the Department of Standards Malaysia, has established clear indicators for Smart City development.

Indonesia’s Green Smart City Ambitions

Eyeing carbon neutrality by 2060, Indonesia is pushing its Smart City initiatives.

Their National Long-Term Development Plan prioritises economic growth and improved quality of life through digital infrastructure and innovative public services.

The goal is 100 smart cities that integrate green technology and sustainable infrastructure, reflecting their climate commitment.

Leaving behind congested Jakarta, Indonesia is building Nusantara, the world’s first “smart forest city”. Spanning 250,000 hectares, Nusantara will boast high-capacity infrastructure, high-speed internet, and cutting-edge technology to support the archipelago’s activities.

Thailand’s Smart City Boom

Thailand’s national agenda goes big on smart cities.

They aim for 105 smart cities by 2027, with a focus on transportation, environment, and safety.

Key projects include:

- A USD 37 billion smart city in Huai Yai with business centres and housing for 350,000 people.

- A 5G-powered smart city in Ban Chang for enhanced environmental and traffic management.

- A USD 40 billion investment to create a smart regional financial centre across Chonburi, Rayong, and Chachoengsao.

Philippines Fights Urban Challenges with Smart Solutions

By 2050, the urban population of the Philippines is expected to soar to nearly 102 million, twice the current figure.

A glimmer of optimism emerges with the rise of smart city solutions championed by local government units (LGUs).

Rapid urbanisation burdens the Philippines with escalating waste. By 2025, daily waste production could reach a staggering 28,000 tonnes. Smart waste management solutions are being implemented to optimise collection and reduce fuel consumption.

Smart city developer Iveda is injecting innovation. Their ambitious USD 5 million project brings AI-powered technology to cities like Cebu, Bacolod, Iloilo, and Davao. The focus: leverage technology to modernise airports, roads, and sidewalks, paving the way for a more sustainable and efficient urban future.

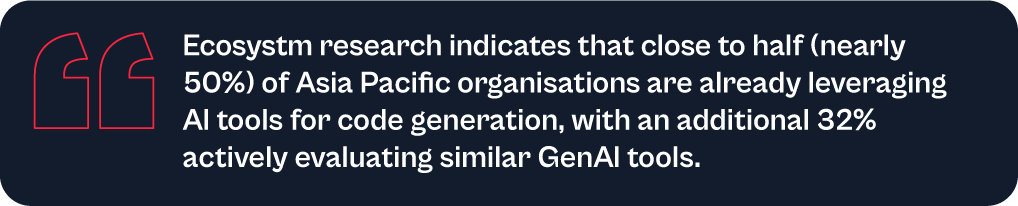

AI tools have become a game-changer for the technology industry, enhancing developer productivity and software quality. Leveraging advanced machine learning models and natural language processing, these tools offer capabilities ranging from code completion to generating entire blocks of code, significantly reducing developers’ cognitive load. AI-powered tools not only accelerate coding but can also improve code quality and consistency, fitting well with modern development practices. Organisations are reaping the benefits of these tools, which are transforming the software development lifecycle.

Impact on Developer Productivity

AI tools are becoming an indispensable part of software development owing to their:

- Speed and Efficiency. AI-powered tools provide real-time code suggestions, dramatically reducing the time developers spend writing boilerplate code and debugging. For example, Tabnine can suggest complete blocks of code based on comments or partial code snippets, accelerating development.

- Quality and Accuracy. Because they are trained on vast datasets of code, AI tools can offer suggestions that are not only syntactically correct but also contextually appropriate. This can reduce bugs and improve the overall quality of the software.

- Learning and Collaboration. AI tools also serve as learning aids for developers by exposing them to new or better coding practices and patterns. Novice developers, in particular, can benefit from real-time feedback and examples, accelerating their professional growth. These tools can also help maintain consistency in coding standards across teams, fostering better collaboration.

Advantages of Using AI Tools in Development

- Reduced Time to Market. Faster coding and debugging directly contribute to shorter development cycles, enabling organisations to launch products faster. This reduction in time to market is crucial in today’s competitive business environment where speed often translates to a significant market advantage.

- Cost Efficiency. While there is an upfront cost to integrating these AI tools, the return on investment (ROI) improves through a reduced need for extensive manual code reviews, less dependency on large development teams, and lower maintenance costs thanks to improved code quality.

- Scalability and Adaptability. AI tools learn and adapt over time, becoming more efficient and aligned with specific team or project needs. This adaptability ensures that the tools remain effective as the complexity of projects increases or as new technologies emerge.
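To illustrate the cost-efficiency point, here is a minimal Python sketch of how the upfront integration cost weighs against ongoing savings. It uses the standard ROI formula, (gain − cost) / cost, and a simple payback period. The function names and all figures (integration cost, monthly savings, licence fees) are hypothetical placeholders. They are not benchmarks for any particular tool.

```python
def roi(total_gain: float, total_cost: float) -> float:
    """Return on investment as a fraction: (gain - cost) / cost."""
    if total_cost <= 0:
        raise ValueError("total_cost must be positive")
    return (total_gain - total_cost) / total_cost


def payback_months(upfront_cost: float, monthly_saving: float, monthly_fee: float) -> float:
    """Months until cumulative net savings cover the upfront integration cost."""
    net_monthly = monthly_saving - monthly_fee
    if net_monthly <= 0:
        return float("inf")  # the tool never pays for itself
    return upfront_cost / net_monthly


# Hypothetical figures: USD 60,000 to integrate, USD 15,000/month saved on
# code reviews and maintenance, USD 5,000/month in licence fees.
upfront, saving, fee = 60_000, 15_000, 5_000
print(payback_months(upfront, saving, fee))  # 6.0

# ROI over the first 12 months.
gain = saving * 12           # 180,000 in savings
cost = upfront + fee * 12    # 120,000 in integration and licence costs
print(roi(gain, cost))       # 0.5
```

With these placeholder numbers, the upfront cost is recovered in six months. If licence fees ever exceed the savings, the payback period becomes infinite.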

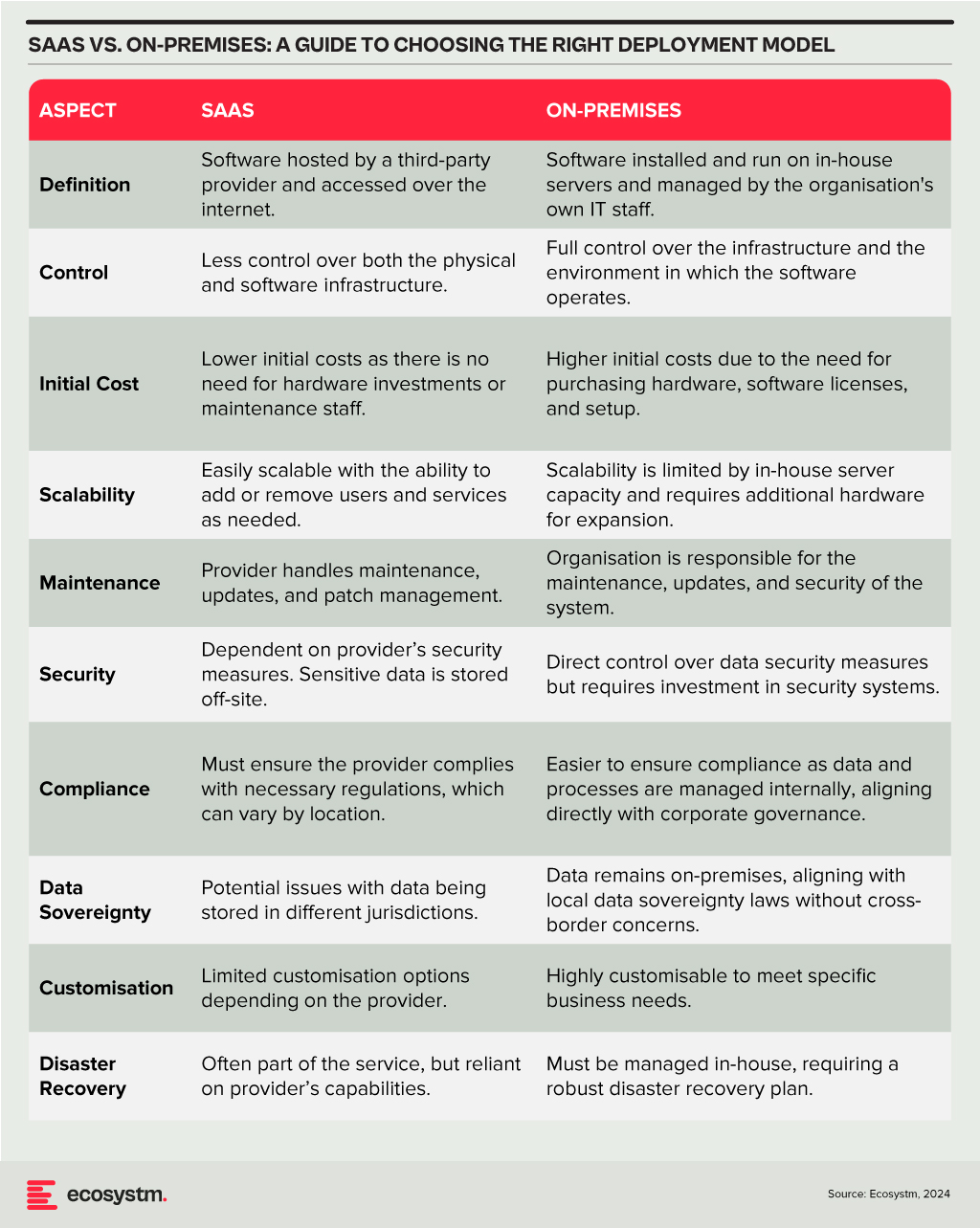

Deployment Models

The choice between SaaS and on-premises deployment models involves a trade-off between control, cost, and flexibility. Organisations need to consider their specific requirements, including the level of control desired over the infrastructure, sensitivity of the data, compliance needs, and available IT resources. A thorough assessment will guide the decision, ensuring that the deployment model chosen aligns with the organisation’s operational objectives and strategic goals.
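One common way to structure such an assessment is a weighted decision matrix. The Python sketch below applies that technique to the factors named above: control, cost, flexibility, data sensitivity, compliance, and IT resources. The weights, the 1–5 scores, and the `weighted_score` helper are hypothetical. Each organisation would substitute its own.

```python
import math

# Hypothetical criteria weights for one organisation; they must sum to 1.0.
WEIGHTS = {
    "control": 0.25,
    "cost": 0.20,
    "flexibility": 0.15,
    "data_sensitivity": 0.20,
    "compliance": 0.10,
    "it_resources": 0.10,
}

# Hypothetical 1-5 fit scores (5 = best fit for this organisation).
SCORES = {
    "SaaS": {"control": 2, "cost": 4, "flexibility": 4,
             "data_sensitivity": 2, "compliance": 3, "it_resources": 5},
    "On-premises": {"control": 5, "cost": 2, "flexibility": 3,
                    "data_sensitivity": 5, "compliance": 4, "it_resources": 2},
}


def weighted_score(scores: dict[str, int], weights: dict[str, float]) -> float:
    """Sum of weight * score across all criteria."""
    if not math.isclose(sum(weights.values()), 1.0):
        raise ValueError("weights must sum to 1.0")
    return sum(weights[criterion] * scores[criterion] for criterion in weights)


# Rank the deployment models from best to worst fit.
ranking = sorted(SCORES, key=lambda m: weighted_score(SCORES[m], WEIGHTS), reverse=True)
for model in ranking:
    print(f"{model}: {weighted_score(SCORES[model], WEIGHTS):.2f}")
```

With these placeholder numbers, on-premises ranks first because control and data sensitivity carry the most weight. An organisation with limited IT resources would weight that factor higher, and SaaS could come out ahead.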

Technology teams must consider challenges such as the reliability of generated code, the potential for biased or insecure code, and dependency on external APIs or services. Human oversight, regular evaluations, and a balanced integration of AI tools into existing workflows are recommended to mitigate these risks.

A Roadmap for AI Integration

The strategic integration of AI tools in software development offers a significant opportunity for companies to achieve a competitive edge. By starting with pilot projects, organisations can assess the impact and utility of AI within specific teams. Encouraging continuous training in AI advancements empowers developers to leverage these tools effectively. Regular audits ensure that AI-generated code adheres to security standards and company policies, while feedback mechanisms facilitate the refinement of tool usage and address any emerging issues.
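The Python sketch below shows one possible shape for an automated audit step. It uses the standard-library `ast` module to flag two commonly discouraged patterns in generated code before it reaches human review: calls to `eval`/`exec`, and any call that passes `shell=True`. The `BANNED_CALLS` policy and the `audit_source` function are hypothetical. In practice, teams would more likely rely on an established static-analysis tool configured to their own security policy.

```python
import ast

# Hypothetical policy: built-in calls this organisation forbids in merged code.
BANNED_CALLS = {"eval", "exec"}


def audit_source(source: str) -> list[str]:
    """Return policy findings for a snippet of (possibly AI-generated) Python."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        if not isinstance(node, ast.Call):
            continue
        # Direct calls such as eval(...) or exec(...)
        if isinstance(node.func, ast.Name) and node.func.id in BANNED_CALLS:
            findings.append(f"line {node.lineno}: call to {node.func.id}()")
        # Any call passing shell=True, e.g. subprocess.run(cmd, shell=True)
        for kw in node.keywords:
            if kw.arg == "shell" and isinstance(kw.value, ast.Constant) and kw.value.value is True:
                findings.append(f"line {node.lineno}: shell=True")
    return findings


generated = """
import subprocess
user_input = input()
result = eval(user_input)
subprocess.run(user_input, shell=True)
"""

for finding in audit_source(generated):
    print(finding)
```

A check like this only catches known patterns. It supplements the human review and regular audits described above rather than replacing them.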

Technology teams have the opportunity to not only boost operational efficiency but also cultivate a culture of innovation and continuous improvement in their software development practices. As AI technology matures, even more sophisticated tools are expected to emerge, further propelling developer capabilities and software development to new heights.

Southeast Asia’s massive workforce – 3rd largest globally – faces a critical upskilling gap, especially with the rise of AI. While AI adoption promises a USD 1 trillion GDP boost by 2030, unlocking this potential requires a future-proof workforce equipped with AI expertise.

Governments and technology providers are joining forces to build strong AI ecosystems, accelerating R&D and nurturing homegrown talent. It’s a tight race, but with focused investments, Southeast Asia can bridge the digital gap and turn its AI aspirations into reality.

Read on to find out how countries such as Singapore, Thailand, Vietnam, and the Philippines are implementing comprehensive strategies to build AI literacy and expertise among their populations.


Big Tech Invests in AI Workforce

Southeast Asia’s tech scene heats up as Big Tech giants scramble for dominance in emerging tech adoption.

Microsoft is partnering with governments, nonprofits, and corporations across Indonesia, Malaysia, the Philippines, Thailand, and Vietnam to equip 2.5M people with AI skills by 2025. It will also train 100,000 Filipino women in AI and cybersecurity.

Singapore has set an ambitious goal to triple its AI workforce by 2028. To help achieve this, AWS will train 5,000 individuals annually in AI skills over the next three years.

NVIDIA has partnered with FPT Software to build an AI factory, while also championing AI education through Vietnamese schools and universities. In Malaysia, NVIDIA has launched an AI sandbox to nurture 100 AI companies, targeting USD 209M by 2030.

Singapore Aims to be a Global AI Hub

Singapore is doubling down on upskilling, global leadership, and building an AI-ready nation.

Singapore has launched its second National AI Strategy (NAIS 2.0) to solidify its global AI leadership. The aim is to triple the AI talent pool to 15,000, establish AI Centres of Excellence, and accelerate public sector AI adoption. The strategy focuses on developing AI “peaks of excellence” and empowering people and businesses to use AI confidently.

In keeping with this vision, the country’s 2024 budget sets out to train workers over 40 in in-demand skills to prepare the workforce for AI. The country will also invest USD 27M to build AI expertise by offering 100 AI scholarships for students and attracting experts from around the globe to collaborate with the country.

Thailand Aims for AI Independence

Thailand’s ‘Ignite Thailand’ 2030 vision focuses on boosting innovation, R&D, and the tech workforce.

Thailand is launching the second phase of its National AI Strategy, with a USD 42M budget to develop an AI workforce and create a Thai Large Language Model (ThaiLLM). The plan aims to train 30,000 workers in sectors like tourism and finance, reducing reliance on foreign AI.

The Thai government is partnering with Microsoft to build a new data centre in Thailand, offering AI training for over 100,000 individuals and supporting the growing developer community.

Building a Digital Vietnam

Vietnam focuses on AI education, policy, and empowering women in tech.

Vietnam’s National Digital Transformation Programme aims to create a digital society by 2030, with a focus on integrating AI into education and workforce training. It supports AI research through universities and seeks to close skill gaps, build digital infrastructure, and establish comprehensive policies.

The Vietnamese government and UNDP launched Empower Her Tech, a digital skills initiative for female entrepreneurs, offering 10 online sessions on GenAI and no-code website creation tools.

The Philippines Gears Up for AI

The country focuses on investment, public-private partnerships, and building a tech-ready workforce.

With its strong STEM education and programming skills, the Philippines is well-positioned for an AI-driven market, allocating USD 30M for AI research and development.

The Philippine government is partnering with entities like IBPAP, Google, AWS, and Microsoft to train thousands in AI skills by 2025, offering both training and hands-on experience with cutting-edge technologies.

The strategy also funds AI research projects and partners with universities to expand AI education. Companies like KMC Teams will help establish and manage offshore AI teams, providing infrastructure and support.