As AI adoption continues to surge, the tech infrastructure market is undergoing a significant transformation. Traditional IT infrastructure providers are facing increasing pressure to innovate and adapt to the evolving demands of AI-powered applications. This shift is driving the development of new technologies and solutions that can support the intensive computational requirements and data-intensive nature of AI workloads.

At their recent Asia Pacific summit in Shanghai, Lenovo detailed their ‘AI for All’ strategy as they prepare for the next computing era. Building on their history as a major force in the hardware market, new AI-ready offerings will be prominent in their enhanced portfolio.

At the same time, Lenovo is adding software and services, both homegrown and with partners, to leverage their already well-established relationships with client IT teams. Sustainability is also a crucial message as they seek to address the need for power efficiency and zero waste lifecycle management in their products.

Ecosystm Advisor Darian Bird comments on Lenovo’s recent announcements and messaging.

Click here to download Lenovo’s Innovation Roadmap: Takeaways from the APAC Analyst Summit as a PDF

1. Lenovo’s AI Strategy

Lenovo’s AI strategy focuses on launching AI PCs that leverage their computing legacy.

As the adoption of GenAI increases, there’s a growing need for edge processing to enhance privacy and performance. Lenovo, along with Microsoft, is introducing AI PCs with specialised components like CPUs, GPUs, and AI accelerators (NPUs) optimised for AI workloads.

Energy efficiency is vital for AI applications, opening doors for mobile-chip makers like Qualcomm. Lenovo’s latest ThinkPads, featuring Qualcomm’s Snapdragon X Elite processors, support Microsoft’s Copilot+ features while maximising battery life during AI tasks.

Lenovo is also investing in small language models (SLMs) that run directly on laptops, offering GenAI capabilities with lower resource demands. This allows users to interact with PCs using natural language for tasks like file searches, tech support, and personal management.

2. Lenovo’s Computer Vision Solutions

Lenovo stands out as one of the few computing hardware vendors that manufactures its own systems.

Leveraging precision engineering, Lenovo has developed solutions to automate production lines. By embedding computer vision in processes like quality inspection, equipment monitoring, and safety supervision, Lenovo customises ML algorithms using customer-specific data. Clients like McLaren Automotive use this technology to detect flaws beyond human capability, enhancing product quality and speeding up production.

Lenovo extends their computer vision expertise to retail, partnering with Sensormatic and Everseen to digitise branch operations. By analysing camera feeds, Lenovo’s solutions optimise merchandising, staffing, and design, while their checkout monitoring system detects theft and scanning errors in real time. Australian customers have seen significant reductions in retail shrinkage after implementation.

3. AI in Action: Autonomous Robots

Like other hardware companies, Lenovo is experimenting with new devices to futureproof their portfolio.

Earlier this year, Lenovo unveiled the Daystar Bot GS, a six-legged robotic dog and an upgrade from their previous wheeled model. Resembling Boston Dynamics’ Spot but with added legs inspired by insects for enhanced stability, the bot is designed for challenging environments. Lenovo is positioning it as an automated monitoring assistant for equipment inspection and surveillance, reducing the need for additional staff. Power stations in China are already using the robot to read meters, detect temperature anomalies, and identify defective equipment.

Although it is likely to remain a niche product in the short term, the robot is an avenue for Lenovo to showcase their AI wares on a physical device, incorporating computer vision and self-guided movement.

Considerations for Lenovo’s Future Growth

Lenovo outlined an AI vision leveraging their expertise in end user computing, manufacturing, and retail. While the strategy aligns with Lenovo’s background, they should consider the following:

Hybrid AI. Initially, AI on PCs will address performance and privacy issues, but hybrid AI – integrating data across devices, clouds, and APIs – will eventually dominate.

Data Transparency & Control. The balance between convenience and privacy in AI is still unclear. Evolving transparency and control will be crucial as users adapt to new AI tools.

AI Ecosystem. AI’s value lies in data, applications, and integration, not just hardware. Hardware vendors must form deeper partnerships in these areas, as Lenovo’s focus on industry-specific solutions demonstrates.

Enhanced Experience. AI enhances operational efficiency and customer experience. Offloading level one support to AI not only cuts costs but also resolves issues faster than live agents.

If you have seen me present recently – or even spoken to me for more than a few minutes – you’ve probably heard me go on about how the AI discussions need to change! At the moment, most senior executives, board rooms, governments, think tanks and tech evangelists are running around screaming with their hands over their ears when it comes to the impact of AI on jobs and society.

We are constantly being bombarded with the message that AI will help make knowledge workers more productive. AI won’t take people’s jobs – in fact it will help to create new jobs – you get the drift; you’ve been part of these conversations!

I was at an event recently where a leading cloud provider had a huge slide with the words: “Humans + AI Together” in large font across the screen. They then went on to demonstrate an opportunity for AI. In a live demo, they had the customer of a retailer call a store to check for stock of a dress. The call was handled by an AI solution, which engaged in a natural conversation with the customer. It verified their identity, checked dress stock at the store, processed the order, and even confirmed the customer’s intent to use their stored credit card.

So, in effect, on one slide, the tech provider emphasised that AI was not going to take our jobs, and two minutes later they showed how current AI capabilities could replace humans – today!

At an analyst event last week, representatives from three different tech providers told analysts how Microsoft Copilot is freeing up 10-15 hours a week. For a 40-hour work week, that’s a 25-38% time saving. In France (where the work week is 35 hours), that’s up to 43% of their time saved. So, by using a single AI platform, we can save 25-43% of our time – giving us the ability to work on other things.
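The percentages above are simple ratios of hours saved to hours worked. As a quick check, here is a minimal sketch of the arithmetic; the function name `share_of_week_saved` is purely illustrative, and the 10-15 hour figures are the vendors' claims as quoted, not measured data.

```python
def share_of_week_saved(hours_saved: float, work_week_hours: float) -> float:
    """Return hours saved as a percentage of the working week."""
    return 100 * hours_saved / work_week_hours


# Claimed saving of 10-15 hours, against a 40-hour and a 35-hour week.
for week in (40, 35):
    low = share_of_week_saved(10, week)
    high = share_of_week_saved(15, week)
    print(f"{week}-hour week: {low:.1f}% to {high:.1f}% of time saved")
```

For the 40-hour week this gives 25.0% to 37.5%, and for the 35-hour week 28.6% to 42.9%, which is where the rounded 25-38% and "up to 43%" figures come from.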

What are the Real Benefits of AI?

The critical question is: What will we do with this saved time? Will it improve revenue or profit for businesses? AI might make us more agile, faster, more innovative but unless that translates to benefits on the bottom line, it is pointless. For example, adopting AI might mean we can create three times as many products. However, if we don’t make any more revenue and/or profit by having three times as many products, then any productivity benefit is worthless. UNLESS it is delivered through decreased costs.

We won’t need as many humans in our contact centres if AI is taking calls. Ideally, AI will lead to more personalised customer experiences – which will drive fewer calls to the contact centre in the first place! Even sales-related calls may disappear as personal AI bots will find deals and automatically sign us up. Of course, AI also costs money, particularly in terms of computing power. Some of the productivity uplift will be offset by the extra cost of the AI tools and platforms.

Many benefits that AI delivers will become table stakes. For example, if your competitor is updating their product four times a year and you are updating it annually, you might lose market share – so the benefits of AI might be just “keeping up with the competition”. But there are many areas where additional activity won’t deliver benefits. Organisations are unlikely to benefit from three times as many promotional SMSs or EDMs, or from more design work or brand redesigns.

I also believe that AI will create new roles. But you know what? AI will eventually do those jobs too. When automation came to agriculture, workers moved to factories. When automation came to factories, workers moved to offices. The (literally) trillion-dollar question is where workers go when automation comes to the office.

The Wider Impact of AI

The issue is that very few senior people in businesses or governments are planning for a future where maybe 30% of jobs done by knowledge workers go to AI. This could lead to the failure of economies. Government income will fall off a cliff. It will be unemployment on levels not seen since the Great Depression – or worse. And if we have not acknowledged these possible outcomes, how can we plan for them?

This is what I call the “grown up conversation about AI”. This is acknowledging the opportunity for AI and its impacts on companies, industries, governments and societies. Once we acknowledge these likely outcomes we can plan for them.

And that’s what I’ll discuss shortly – look out for my next Ecosystm Insight: The Three Possible Solutions for AI-driven Mass Unemployment.

Over the past year, many organisations have explored Generative AI and LLMs, with some successfully identifying, piloting, and integrating suitable use cases. As business leaders push tech teams to implement additional use cases, the repercussions on their roles will become more pronounced. Embracing GenAI will require a mindset reorientation, and tech leaders will see substantial impact across various ‘traditional’ domains.

AIOps and GenAI Synergy: Shaping the Future of IT Operations

When discussing AIOps adoption, there are commonly two responses: “Show me what you’ve got” or “We already have a team of Data Scientists building models”. The former usually demonstrates executive sponsorship without a specific business case, resulting in a lukewarm response to many pre-built AIOps solutions due to their lack of a defined business problem. On the other hand, organisations with dedicated Data Scientist teams face a different challenge. While these teams can create impressive models, they often face pushback from the business as the solutions often do not address operational or business needs. The challenge arises from Data Scientists’ limited understanding of the data, hindering the development of use cases that effectively align with business needs.

The most effective approach lies in adopting an AIOps Framework. Incorporating GenAI into AIOps frameworks can enhance their effectiveness, enabling improved automation, intelligent decision-making, and streamlined operational processes within IT operations.

This allows active business involvement in defining and validating use-cases, while enabling Data Scientists to focus on model building. It bridges the gap between technical expertise and business requirements, ensuring AIOps initiatives are influenced by the capabilities of GenAI, address specific operational challenges and resonate with the organisation’s goals.

The Next Frontier of IT Infrastructure

Many companies adopting GenAI are openly evaluating public cloud-based solutions like ChatGPT or Microsoft Copilot against on-premises alternatives, grappling with the trade-offs between scalability and convenience versus control and data security.

Cloud-based GenAI offers easy access to computing resources without substantial upfront investments. However, companies face challenges in relinquishing control over training data, potentially leading to inaccurate results or “AI hallucinations,” and concerns about exposing confidential data. On-premises GenAI solutions provide greater control, customisation, and enhanced data security, ensuring data privacy, but require significant hardware investments due to unexpectedly high GPU demands during both the training and inferencing stages of AI models.

Hardware companies are focusing on innovating and enhancing their offerings to meet the increasing demands of GenAI. The evolution and availability of powerful and scalable GPU-centric hardware solutions are essential for organisations to effectively adopt on-premises deployments, enabling them to access the necessary computational resources to fully unleash the potential of GenAI. Collaboration between hardware development and AI innovation is crucial for maximising the benefits of GenAI and ensuring that the hardware infrastructure can adequately support the computational demands required for widespread adoption across diverse industries. Innovations in hardware architecture, such as neuromorphic computing and quantum computing, hold promise in addressing the complex computing requirements of advanced AI models.

The synchronisation between hardware innovation and GenAI demands will require technology leaders to re-skill themselves on what they have done for years – infrastructure management.

The Rise of Event-Driven Designs in IT Architecture

IT leaders traditionally relied on three-tier architectures – presentation for user interface, application for logic and processing, and data for storage. Despite their structured approach, these architectures often lacked scalability and real-time responsiveness. The advent of microservices, containerisation, and serverless computing facilitated event-driven designs, enabling dynamic responses to real-time events, and enhancing agility and scalability. Event-driven designs are a paradigm shift away from traditional approaches, decoupling components and using events as a central communication mechanism. User actions, system notifications, or data updates trigger actions across distributed services, adding flexibility to the system.
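To make the decoupling idea concrete, here is a minimal in-process sketch of the publish/subscribe pattern that underlies event-driven designs. Everything in it is an assumption for illustration: the `EventBus` class, the `order_placed` event name, and the two handlers are hypothetical, and a production system would typically place a message broker or serverless triggers between publisher and subscribers rather than call handlers synchronously.

```python
from collections import defaultdict
from typing import Any, Callable

Handler = Callable[[dict[str, Any]], None]


class EventBus:
    """Toy synchronous event bus: publishers and subscribers share only event names."""

    def __init__(self) -> None:
        self._handlers: dict[str, list[Handler]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Handler) -> None:
        # Register a handler to be called whenever event_type is published.
        self._handlers[event_type].append(handler)

    def publish(self, event_type: str, payload: dict[str, Any]) -> int:
        # Call every handler registered for event_type; return how many ran.
        handlers = self._handlers.get(event_type, [])
        for handler in handlers:
            handler(payload)
        return len(handlers)


bus = EventBus()
log: list[str] = []

# Two independent "services" react to the same event without knowing about each other.
bus.subscribe("order_placed", lambda e: log.append(f"reserve stock for {e['order_id']}"))
bus.subscribe("order_placed", lambda e: log.append(f"email receipt for {e['order_id']}"))

# A user action becomes an event; the publisher does not know who consumes it.
delivered = bus.publish("order_placed", {"order_id": "A-100"})
print(delivered, log)
```

The publisher only knows the event name, so a new consumer can be added with another `subscribe` call and no change to the code that raises the event.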

However, adopting event-driven designs presents challenges, particularly in higher transaction-driven workloads where the speed of serverless function calls can significantly impact architectural design. While serverless computing offers scalability and flexibility, the latency introduced by initiating and executing serverless functions may pose challenges for systems that demand rapid, real-time responses. Increasing reliance on event-driven architectures underscores the need for advancements in hardware and compute power. Transitioning from legacy architectures can also be complex and may require a phased approach, with cultural shifts demanding adjustments and comprehensive training initiatives.

The shift to event-driven designs challenges IT Architects, whose traditional roles involved designing, planning, and overseeing complex systems. With GenAI and automation enhancing design tasks, Architects will need to transition to more strategic and visionary roles. GenAI showcases capabilities in pattern recognition, predictive analytics, and automated decision-making, promoting a symbiotic relationship with human expertise. This evolution doesn’t replace Architects but signifies a shift toward collaboration with AI-driven insights.

IT Architects need to evolve their skill set, blending technical expertise with strategic thinking and collaboration. This changing role will drive innovation, creating resilient, scalable, and responsive systems to meet the dynamic demands of the digital age.

Whether your organisation is evaluating or implementing GenAI, the need to upskill your tech team remains imperative. The evolution of AI technologies has disrupted the tech industry, impacting people in tech. Now is the opportune moment to acquire new skills and adapt tech roles to leverage the potential of GenAI rather than being disrupted by it.

As tech providers such as Microsoft enhance their capabilities and products, they will impact business processes and technology skills, and influence other tech providers to reshape their product and service offerings. Microsoft recently organised briefing sessions in Sydney and Singapore to present their future roadmap, with a focus on their AI capabilities.

Ecosystm Advisors Achim Granzen, Peter Carr, and Tim Sheedy provide insights on Microsoft’s recent announcements and messaging.

Click here to download Ecosystm VendorSphere: Microsoft’s AI Vision – Initiatives & Impact

Ecosystm Question: What are your thoughts on Microsoft Copilot?

Tim Sheedy. The future of GenAI will not be about single LLMs getting bigger and better – it will be about the use of multiple large and small language models working together to solve specific challenges. It is wasteful to use a large and complex LLM to solve a problem that is simpler. Getting these models to work together will be key to solving industry and use case specific business and customer challenges in the future. Microsoft is already doing this with Microsoft 365 Copilot.

Achim Granzen. Microsoft’s Copilot – a shrink-wrapped GenAI tool based on OpenAI – has become a mainstream product. Microsoft has made it available to their enterprise clients in multiple ways: for personal productivity in Microsoft 365, for enterprise applications in Dynamics 365, for developers in GitHub and Copilot Studio, and to partners to integrate Copilot into their application suites (e.g. Amdocs’ Customer Engagement Platform).

Ecosystm Question: How, in your opinion, is the Microsoft Copilot a game changer?

Achim Granzen. Microsoft’s Customer Copyright Commitment, initially launched as Copilot Copyright Commitment, is the true game changer.

It safeguards Copilot users from potential copyright infringement lawsuits related to data used for algorithm training or output results. In November 2023, Microsoft expanded its scope to cover commercial usage of their OpenAI interface as well.

This move not only protects commercial clients using Microsoft’s GenAI products but also extends to any GenAI solutions built by their clients. This initiative significantly reduces a key risk associated with GenAI adoption, outlined in the product terms and conditions.

However, compliance with a set of Required Mitigations and Codes of Conduct is necessary for clients to benefit from this commitment, aligning with responsible AI guidelines and best practices.

Ecosystm Question: Where will organisations need most help on their AI journeys?

Peter Carr. Unfortunately, there is no playbook for AI.

- The path to integrating AI into business strategies and operations lacks a one-size-fits-all guide. Organisations will have to navigate uncharted territories for the time being. This means experimenting with AI applications and learning from successes and failures. This exploratory approach is crucial for leveraging AI’s potential while adapting to unique organisational challenges and opportunities. So, companies that are better at agile innovation will do better in the short term.

- The effectiveness of AI is deeply tied to the availability and quality of connected data. AI systems require extensive datasets to learn and make informed decisions. Ensuring data is accessible, clean, and integrated is fundamental for AI to accurately analyse trends, predict outcomes, and drive intelligent automation across various applications.

Ecosystm Question: What advice would you give organisations adopting AI?

Tim Sheedy. It is all about opportunities and responsibility.

- There is a strong need for responsible AI – at a global level, at a country level, at an industry level and at an organisational level. Microsoft (and other AI leaders) are helping to create responsible AI systems that are fair, reliable, safe, private, secure, and inclusive. There is still a long way to go, but these capabilities do not completely indemnify users of AI. They still have a responsibility to set guardrails in their own businesses about the use and opportunities for AI.

- AI and hybrid work are often discussed as different trends in the market, with different solution sets. But in reality, they are deeply linked. AI can help enhance and improve hybrid work in businesses – and is a great opportunity to demonstrate the value of AI and tools such as Copilot.

Ecosystm Question: What should Microsoft focus on?

Tim Sheedy. Microsoft faces a challenge in educating the market about adopting AI, especially Copilot. They need to educate business, IT, and AI users on embracing AI effectively. Additionally, they must educate existing partners and find new AI partners to drive change in their client base. Success in the race for knowledge workers requires not only being first but also helping users maximise solutions. Customers have limited visibility of Copilot’s capabilities today. Improving customer upskilling and enhancing tools to prompt users to leverage capabilities will contribute to Microsoft’s (or their competitors’) success in dominating the AI tool market.

Peter Carr. Grassroots businesses form the economic foundation of the Asia Pacific economies. Typically, these businesses do not engage with global SIs (GSIs), which drive Microsoft’s new service offerings. This leads to an adoption gap in the sector that could benefit most from operational efficiencies. To bridge this gap, Microsoft must empower non-GSI partners and managed service providers (MSPs) at the local and regional levels. They won’t achieve their goal of democratising AI unless they do. Microsoft has the potential to advance AI technology while ensuring fair and widespread adoption.

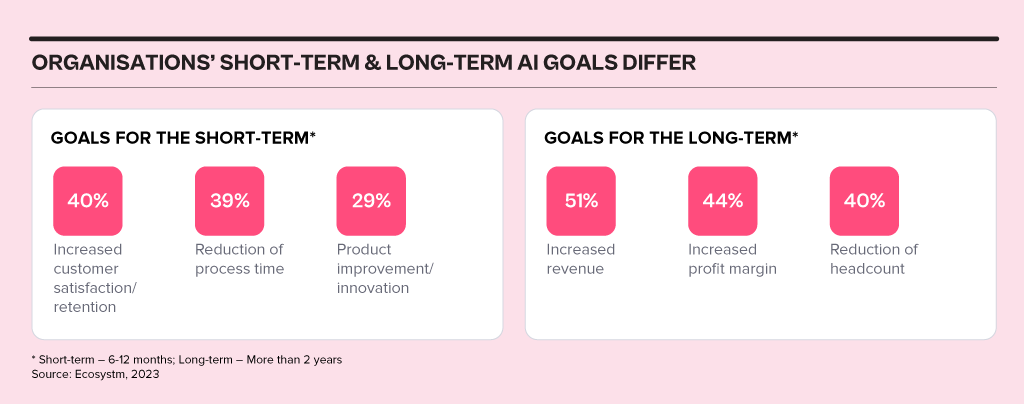

In 2024, business and technology leaders will leverage the opportunity presented by the attention being received by Generative AI engines to test and integrate AI comprehensively across the business. Many organisations will prioritise the alignment of their initial Generative AI initiatives with broader AI strategies, establishing distinct short-term and long-term goals for their AI investments.

AI adoption will influence business processes, technology skills, and, in turn, reshape the product/service offerings of AI providers.

Ecosystm analysts Achim Granzen, Peter Carr, Richard Wilkins, Tim Sheedy, and Ullrich Loeffler present the top 5 AI trends in 2024.

Click here to download ‘Ecosystm Predicts: Top 5 AI Trends in 2024’.

#1 By the End of 2024, Gen AI Will Become a ‘Hygiene Factor’ for Tech Providers

AI has widely been commended as the ‘game changer’ that will create and extend the divide between adopters and laggards and be the deciding factor for success and failure.

Cutting through the hype, strategic adoption of AI is still at a nascent stage and 2024 will be another year where companies identify use cases, experiment with POCs, and commit renewed efforts to get their data assets in order.

The biggest impact of AI will be derived from integrated AI capability in standard packaged software and products – and this will include Generative AI. We will see a plethora of product releases that seamlessly weave Generative AI into everyday tools generating new value through increased efficiency and user-friendliness.

Technology will be the first industry where AI becomes the deciding factor between success and failure; tech providers will be forced to deliver on their AI promises or be left behind.

#2 Gen AI Will Disrupt the Role of IT Architects

Traditionally, IT has relied on three-tier architectures for applications, which faced limitations in scalability and real-time responsiveness. The emergence of microservices, containerisation, and serverless computing has paved the way for event-driven designs, a paradigm shift that decouples components and uses events like user actions or data updates as triggers for actions across distributed services. This approach enhances agility, scalability, and flexibility in the system.

The shift towards event-driven designs and advanced architectural patterns presents a compelling challenge for IT Architects, as traditionally their role revolved around designing, planning and overseeing complex systems.

Generative AI is progressively demonstrating capabilities in architectural design through pattern recognition, predictive analytics, and automated decision-making.

With the adoption of Generative AI, the role of an IT Architect will change into a symbiotic relationship where human expertise collaborates with AI insights.

#3 Gen AI Adoption Will be Confined to Specific Use Cases

A little over a year ago, a new era in AI began with the initial release of OpenAI’s ChatGPT. Since then, many organisations have launched Generative AI pilots.

In its second year, enterprises will start adoption – but in strictly defined and limited use cases. Examples such as Microsoft Copilot demonstrate an early adopter route. While productivity increases for individuals can be significant, its enterprise impact is unclear (at this time).

But there are impactful use cases in enterprise knowledge and document management. Organisations across industries have decades (or even a century) of information, including digitised documents and staff expertise. That treasure trove of information can be made accessible through cognitive search and semantic answering, driven by Generative AI.

Generative AI will provide organisations with a way to access, distill, and create value out of that data – a task that may well be impossible to achieve in any other way.

#4 Gen AI Will Get Press Inches; ‘Traditional’ AI Will Do the Hard Work

While the use cases for Generative AI will continue to expand, the deployment models and architectures for enterprise Generative AI do not add up – yet.

Running Generative AI in organisations’ data centres is costly and using public models for all but the most obvious use cases is too risky. Most organisations opt for a “small target” strategy, implementing Generative AI in isolated use cases within specific processes, teams, or functions. Justifying investment in hardware, software, and services for an internal AI platform is challenging when the payback for each AI initiative is not substantial.

“Traditional AI/ML” will remain the workhorse, with a significant rise in use cases and deployments. Organisations are used to investing in AI by individual use case. Managing process change and training is also more straightforward with traditional AI, as the changes are implemented in a system or platform, eliminating the need to retrain multiple knowledge workers.

#5 AI Will Pioneer a 21st Century BPM Renaissance

As we near the 25-year milestone of the 21st century, it becomes clear that many businesses are still operating with 20th-century practices and philosophies.

AI, however, represents more than a technological breakthrough; it offers a new perspective on how businesses operate and is akin to a modern interpretation of Business Process Management (BPM). This development carries substantial consequences for digital transformation strategies. To fully exploit the potential of AI, organisations need to commit to an extensive and ongoing process spanning everything from the collection, organisation, and expansion of data to integrating these insights at an application and workflow level.

The role of AI will transcend technological innovation, becoming a driving force for substantial business transformation. Sectors that specialise in workflow, data management, and organisational transformation are poised to see the most growth in 2024 because of this shift.

In recent years, organisations have had to swiftly transition to providing digital experiences due to limitations on physical interactions; competed fiercely based on the customer experiences offered; and invested significantly in the latest CX technologies. However, in 2024, organisations will pivot their competitive efforts towards product innovation rather than solely focusing on enhancing the CX.

This does not mean that organisations will not focus on CX – they will just be smarter about it!

Ecosystm analysts Audrey William, Melanie Disse, and Tim Sheedy present the top 5 Customer Experience trends in 2024.

Click here to download ‘Ecosystm Predicts: Top 5 CX Trends in 2024’ as a PDF.

#1 Customer Experience is Due for a Reset

Organisations aiming to improve customer experience are seeing diminishing returns, moving away from the significant gains before and during the pandemic to incremental improvements. Many organisations experience stagnant or declining CX and NPS scores as they prioritise profit over customer growth and face a convergence of undifferentiated digital experiences. The evolving digital landscape has also heightened baseline customer expectations.

In 2024, CX programs will be focused and measurable – with greater involvement of Sales, Marketing, Brand, and Customer Service to ensure CX initiatives are unified across the entire customer journey.

Organisations will reassess CX strategies, choosing impactful initiatives and aligning with brand values. This recalibration, unique to each organisation, may include reinvesting in human channels, improving digital experiences, or reimagining customer ecosystems.

#2 Sentiment Analysis Will Fuel CX Improvement

Organisations strive to design seamless customer journeys – yet they often miss the mark in crafting truly memorable experiences that forge emotional connections and turn customers into brand advocates.

Customers want on-demand information and service; failure to meet these expectations often leads to discontent and frustration. This is further heightened when organisations fail to recognise and respond to these emotions.

Sentiment analysis will shape CX improvements – and technological advancements, such as in neural networks, promise higher accuracy in sentiment analysis by detecting intricate relationships between emotions, phrases, and words.

These models explore multiple permutations, delving deeper to interpret the meaning behind different sentiment clusters.

#3 AI Will Elevate VoC from Surveys to Experience Improvement

In 2024, AI technologies will transform Voice of Customer (VoC) programs from measurement practices into the engine room of the experience improvement function.

The focus will move from measurement to action – backed by AI. AI is already playing a pivotal role in analysing vast volumes of data, including unstructured and unsolicited feedback. In 2024, VoC programs will shift gear to focus on driving a customer-centric culture and business change. AI will augment insight interpretation, recommend actions, and predict customer behaviour, sentiment, and churn to elevate customer experiences (CX).

Organisations that don’t embrace an AI-driven paradigm will get left behind as they fail to showcase and deliver ROI to the business.

#4 Generative AI Platforms Will Replace Knowledge Management Tools

Most organisations have more customer knowledge management tools and platforms than they should. They exist in the contact centre, on the website, the mobile app, in-store, at branches, and within customer service. There are two challenges that this creates:

- Inconsistent knowledge. The information in the different knowledge bases is different and sometimes conflicting.

- Difficult to extract answers. The knowledge contained in these platforms is often in PDFs and long form documents.

Generative AI tools will consolidate organisational knowledge, enhancing searchability.

Customers and contact centre agents will be able to get actual answers to questions and they will be consistent across touchpoints (assuming they are comprehensive, customer-journey and organisation-wide initiatives).

#5 Experience Orchestration Will Accelerate

Despite the ongoing effort to streamline and simplify the CX, organisations often implement new technologies, such as conversational AI, digital and social channels, as independent projects. This fragmented approach, driven by the desire for quick wins using best-in-class point solutions, results in a complex CX technology architecture.

With the proliferation of point solution vendors, it is becoming critical to eliminate the silos. The fragmentation hampers CX teams from achieving their goals, leading to increased costs, limited insights, a weak understanding of customer journeys, and inconsistent services.

Embracing CX unification through an orchestration platform enables organisations to enhance the CX rapidly, with reduced concerns about tech debt and legacy issues.

2023 has been an eventful year. In May, the WHO announced that the pandemic was no longer a global public health emergency. However, other influencers in 2023 will continue to impact the market, well into 2024 and beyond.

Global Conflicts. The Russian invasion of Ukraine persisted; the Israeli-Palestinian conflict escalated into war; African nations continued to see armed conflicts and political crises; there has been significant population displacement.

Banking Crisis. American regional banks collapsed, with the failures of Silicon Valley Bank and First Republic Bank ranking as the third- and second-largest bank failures in US history respectively; Credit Suisse was acquired by UBS in Switzerland.

Climate Emergency. The UN’s synthesis report found that there’s still a chance to limit global temperature increases to 1.5°C; Loss and Damage conversations continued without a significant impact.

Power of AI. The interest in generative AI models heated up; tech vendors incorporated foundational models in their enterprise offerings – Microsoft Copilot was launched; awareness of AI risks strengthened calls for Ethical/Responsible AI.

Click below to find out what Ecosystm analysts Achim Granzen, Darian Bird, Peter Carr, Sash Mukherjee and Tim Sheedy consider the top 5 tech market forces that will impact organisations in 2024.

Click here to download ‘Ecosystm Predicts: Tech Market Dynamics 2024’ as a PDF

#1 State-sponsored Attacks Will Alter the Nature Of Security Threats

It is becoming clearer that the post-Cold-War era is over, and we are transitioning to a multi-polar world. In this new age, malevolent governments will become increasingly emboldened to carry out cyber and physical attacks without the concern of sanction.

Unlike most malicious actors driven by profit today, state adversaries will be motivated to maximise disruption.

Rather than encrypting valuable data with ransomware, wiper malware will be deployed. State-sponsored attacks against critical infrastructure, such as transportation, energy, and undersea cables, will be designed to inflict irreversible damage. The recent 23andMe breach is an example of how ethnically directed attacks could be designed to sow fear and distrust. Additionally, even the threat of spyware and phishing will cause some activists, journalists, and politicians to self-censor.

#2 AI Legislation Breaches Will Occur, But Will Go Unpunished

With US President Biden’s recently published “Executive Order on Safe, Secure and Trustworthy AI” and the European Union’s “AI Act” set for adoption by the European Parliament in mid-2024, codified and enforceable AI legislation is on the verge of becoming reality. However, oversight structures with powers to enforce the rules are currently not in place for either initiative and will take time to build out.

In 2024, the first instances of AI legislation violations will surface – potentially revealed by whistleblowers or significant public AI failures – but no legal action will be taken yet.

#3 AI Will Increase Net-New Carbon Emissions

In an age focused on reducing carbon and greenhouse gas emissions, AI is contributing to the opposite. Organisations often fail to track these emissions under the broader “Scope 3” category. Researchers at the University of Massachusetts Amherst found that training a single AI model can emit over 283 tonnes of carbon dioxide, equal to the emissions from 62.6 gasoline-powered vehicles in a year.

Organisations rely on cloud providers to reduce their carbon emissions (Amazon targets net-zero by 2040, while Microsoft and Google aim for 2030, and their trajectories will influence global climate change), yet transparency on AI greenhouse gas emissions is limited. The routes these providers take to net-zero will determine the level of greenhouse gas emissions along the way.

Some argue that AI can help in better mapping a path to net-zero, but there is concern about whether the damage caused in the process will outweigh the benefits.

#4 ESG Will Transform into GSE to Become the Future of GRC

Previously viewed as a standalone concept, ESG will be increasingly recognised as integral to Governance, Risk, and Compliance (GRC) practices. The ‘E’ in ESG, representing environmental concerns, is becoming synonymous with compliance due to growing environmental regulations. The ‘S’, or social aspect, is merging with risk management, addressing contemporary issues such as ethical supply chains, workplace equity, and modern slavery, which traditional GRC models often overlook. Governance continues to be a crucial component.

The key to organisational adoption and transformation will be understanding that ESG is not an isolated function but is intricately linked with existing GRC capabilities.

This will present opportunities for GRC and Risk Management providers to adapt their current solutions, already deployed within organisations, to enhance ESG effectiveness. This strategy promises mutual benefits, improving compliance and risk management while simultaneously advancing ESG initiatives.

#5 Productivity Will Dominate Workforce Conversations

The skills discussions have shifted significantly over 2023. At the start of the year, HR leaders were still dealing with the ‘productivity conundrum’ – balancing employee flexibility and productivity in a hybrid work setting. There were also concerns about skills shortages, particularly in IT, as organisations prioritised tech-driven transformation and innovation.

Now, the focus is on assessing the pros and cons (mainly ROI) of providing employees with advanced productivity tools. For example, early studies on Microsoft Copilot showed that 70% of users experienced increased productivity. Discussions, including Narayana Murthy’s remarks on 70-hour work weeks, have re-ignited conversations about employee well-being and the impact of technology in enabling employees to achieve more in less time.

Against the backdrop of skills shortages and the need for better employee experience to retain talent, organisations are increasingly adopting/upgrading their productivity tools – starting with their Sales & Marketing functions.

Earlier in the year, Microsoft unveiled its vision for Copilot, a digital companion that aims to provide a unified, consistent user experience across Bing, Edge, Microsoft 365, and Windows. The rollout began with Windows in September and expanded to Microsoft 365 Copilot for enterprise customers this month.

Many organisations across Asia Pacific will soon face the question of whether to invest in Microsoft 365 Copilot, despite its current limitations in supporting all regional languages. Copilot is currently supported in English (US, GB, AU, CA, IN), Japanese, and Chinese Simplified. Microsoft plans to support more languages, such as Arabic, Chinese Traditional, Korean, and Thai, over the first half of 2024. Several other languages used across Asia Pacific will not be supported until at least the second half of 2024.

Access to Microsoft 365 Copilot comes with certain prerequisites. Organisations need to have either a Microsoft 365 E3 or E5 license and an Azure Active Directory account. F3 licenses do not currently have access to 365 Copilot. For E3 license holders, adding Copilot would nearly double the cost per user. This is a significant extra spend that will need a strong business case built on measurable, tangible benefits. It is doubtful whether most organisations will be able to justify this extra spend.

However, Copilot has the potential to significantly enhance the productivity of knowledge workers, saving them many hours each week, with hundreds of use cases already emerging for different industries and user profiles. Microsoft is offering a plethora of information on how to best adopt, deploy, and use Copilot. The key focus when building a business case should revolve around how knowledge workers will use this extra time.

Maximising Copilot Integration: Steps to Drive Adoption and Enhance Productivity

Identifying use cases, building the business proposal, and securing funding for Copilot is only half the battle. Driving the change and ensuring all relevant employees use the new processes will be significantly harder. Consider how employees currently use their productivity tools compared to 15 years ago: many still rely on the same features and capabilities in their Office suites as they did in earlier versions. Where new features were embraced, it was typically because knowledge workers didn’t have to make any additional effort to incorporate them, as with type-ahead suggestions in email or the seamless integration of Teams calls.

The ability of your organisation to seamlessly integrate Copilot into daily workflows, optimising productivity and efficiency while harnessing AI-generated data and insights for decision-making, will be of paramount importance. It will be equally important to stay watchful and mitigate the potential risks of over-reliance on AI without sufficient oversight.

Implementing Copilot will require some essential steps:

- Training and onboarding. Provide comprehensive training to employees on how to use Copilot’s features within Microsoft 365 applications.

- Integration into daily tasks. Encourage employees to use Copilot for drafting emails, documents, and generating meeting notes to familiarise them with its capabilities.

- Customisation. Tailor Copilot’s settings and suggestions to align with company-specific needs and workflows.

- Automation. Create bots, templates, integrations, and other automation functions for multiple use cases. For example, when users first log onto their PC, they could get a summary of missed emails, chats – without the need to request it.

- Feedback loop. Implement a feedback mechanism to monitor how Copilot is used and to make adjustments based on user experiences.

- Evaluating effectiveness. Regularly gauge how Copilot’s features are enhancing productivity and adjust usage strategies accordingly. Focus on the increased productivity – what knowledge workers now achieve with the time made available by Copilot.

Changing the behaviours of knowledge workers can be challenging – particularly for basic processes that they have been using for years or even decades. Knowledge of use cases and opportunities for Copilot will not filter across the organisation on its own. Implementing formal training and educational programs, and backing them up with refresher courses, is important to ensure compliance and productivity gains.