AI has become a business necessity today, catalysing innovation, efficiency, and growth by transforming extensive data into actionable insights, automating tasks, improving decision-making, boosting productivity, and enabling the creation of new products and services.

Generative AI stole the limelight in 2023 given its remarkable advancements and potential to automate various cognitive processes. However, now the real opportunity lies in leveraging this increased focus and attention to shine the AI lens on all business processes and capabilities. As organisations grasp the potential for productivity enhancements, accelerated operations, improved customer outcomes, and enhanced business performance, investment in AI capabilities is expected to surge.

In this eBook, Ecosystm VP Research Tim Sheedy, and Vinod Bijlani and Aman Deep from HPE APAC, share their insights on why it is crucial to establish tailored AI capabilities within the organisation.

At the end of last year, I had the privilege of attending a session organised by Red Hat where they shared their Asia Pacific roadmap with the tech analyst community. The company’s approach of providing a hybrid cloud application platform centred around OpenShift has worked well with clients who favour a hybrid cloud approach. Going forward, Red Hat is looking to build and expand their business around three product innovation focus areas. At the core is their platform engineering, flanked by focus areas on AI/ML and the Edge.

The Opportunities

Besides the product innovation focus, Red Hat is also looking into several emerging areas, where they’ve seen initial client success in 2023. While use cases such as operational resilience or edge lifecycle management are long-existing trends, carbon-aware workload scheduling may just have appeared over the horizon. But two others stood out for me with a potentially huge demand in 2024.

GPU-as-a-Service. GPUaaS addresses a massive demand driven by the meteoric rise of Generative AI over the past 12 months. Any innovation that would allow customers a more flexible use of scarce and expensive resources such as GPUs can create an immediate opportunity, and Red Hat might have a first-mover and established-base advantage. GPUaaS is a particular opportunity in fast-growing markets, where cost and availability are strong inhibitors.

Digital Sovereignty. Digital sovereignty has been a strong driver in some markets. In Indonesia, for example, it has led most cloud hyperscalers to open onshore data centres over the past few years. Yet, not least because of Indonesia’s geography, hybrid cloud remains an important consideration, as digital sovereignty needs to be managed across a diverse infrastructure. Other fast-growing markets have similar challenges and a strong drive for digital sovereignty. Crucially, Red Hat may well have an advantage where onshore hyperscalers are not yet available (for example in Malaysia).

Strategic Focus Areas for Red Hat

Red Hat’s product innovation strategy is robust at its core, particularly in platform engineering, but needs more clarity at the periphery. They have already been addressing Edge use cases as an extension of their core platform, especially in the Automotive sector, establishing a solid foundation in this area. Their focus on AI/ML may be a bit more aspirational, as they are looking to not only AI-enable their core platform but also expand it into a platform to run AI workloads. AI may drive interest in hybrid cloud, but it will be in very specific use cases.

For Red Hat to be successful in the AI space, it must steer away from competing straight out with the cloud-native AI platforms. They must identify the use cases where AI on hybrid cloud has a true advantage. Such use cases will mainly exist in industries with a strong Edge component, potentially also with a still heavy reliance on on-site data centres. Manufacturing is the prime example.

Red Hat’s success in AI/ML use cases is tightly connected to their (continuing) success in Edge use cases, all built on the solid platform engineering foundation.

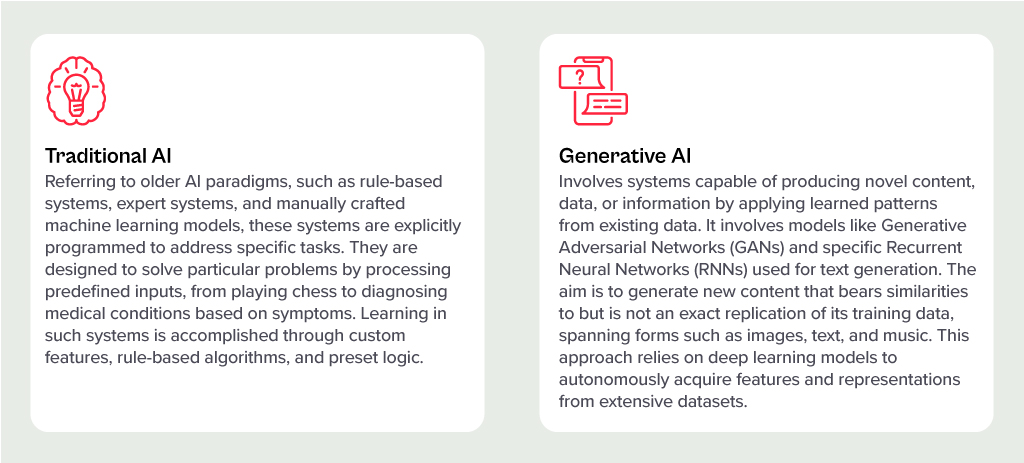

Generative AI has stolen the limelight in 2023 from nearly every other technology – and for good reason. The advances made by Generative AI providers have been incredible, with many human “thinking” processes now in line to be automated.

But before we had Generative AI, there was the run-of-the-mill “traditional AI”. However, despite the traditional tag, these capabilities have a long way to run within your organisation. In fact, they are often easier to implement, have less risk (and more predictability) and are easier to generate business cases for. Traditional AI systems are often already embedded in many applications, systems, and processes, and can easily be purchased as-a-service from many providers.

Unlocking the Potential of AI Across Industries

Many organisations around the world are exploring AI solutions today, and the opportunities for improvement are significant:

- Manufacturers are designing, developing and testing in digital environments, relying on AI to predict product responses to stress and environments. In the future, Generative AI will be called upon to suggest improvements.

- Retailers are using AI to monitor customer behaviours and predict next steps. Algorithms are being used to drive the best outcome for the customer and the retailer, based on previous behaviours and trained outcomes.

- Transport and logistics businesses are using AI to minimise fuel usage and driver expenses while maximising delivery loads. Smart route planning and scheduling is ensuring timely deliveries while reducing costs and saving on vehicle maintenance.

- Warehouses are enhancing the safety of their environments and efficiently moving goods with AI. Through a combination of video analytics, connected IoT devices, and logistical software, they are maximising the potential of their limited space.

- Public infrastructure providers (such as shopping centres, public transport providers etc) are using AI to monitor public safety. Video analytics and sensors are helping safety and security teams take public safety beyond traditional human monitoring.

AI Impacts Multiple Roles

Even within the organisation, different lines of business expect different outcomes for AI implementations.

- IT teams are monitoring infrastructure, applications, and transactions – to better understand root-cause analysis and predict upcoming failures – using AI. In fact, AIOps, one of the fastest-growing areas of AI, yields substantial productivity gains for tech teams and boosts reliability for both customers and employees.

- Finance teams are leveraging AI to understand customer payment patterns and automate the issuance of invoices and reminders, a capability increasingly being integrated into modern finance systems.

- Sales teams are using AI to discover the best prospects to target and what offers they are most likely to respond to.

- Contact centres are monitoring calls, automating suggestions, summarising records, and scheduling follow-up actions through conversational AI. This is getting agents up to speed in a shorter period, ensuring greater customer satisfaction and increased brand loyalty.

Transitioning from Low-Risk to AI-Infused Growth

These are just a tiny selection of the opportunities for AI. And few of these need testing or business cases – many of these capabilities are available out-of-the-box or out of the cloud. They don’t need deep analysis by risk, legal, or cybersecurity teams. They just need a champion to make the call and switch them on.

One potential downside of Generative AI is that it is drawing unwarranted attention to well-established, low-risk AI applications. Many of these do not require much time from data scientists – and if they do, the challenge is often finding the data and creating the algorithm. Humans can typically understand the logic and rules that the models create – unlike Generative AI, where the outcome cannot be reverse-engineered.

The opportunity today is to take advantage of the attention that LLMs and other Generative AI engines are getting to incorporate AI into every conceivable aspect of a business. When organisations understand the opportunities for productivity improvements, speed enhancement, better customer outcomes and improved business performance, the spend on AI capabilities will skyrocket. Ecosystm estimates that for most organisations, AI spend will be less than 5% of their total tech spend in 2024 – but it is likely to grow to over 20% within the next 4-5 years.
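To put that estimate in perspective, the arithmetic below works out how fast AI's share of tech spend would need to grow each year to move from 5% to 20%. This is a rough sketch only: it treats the "less than 5%" and "over 20%" figures as exact boundary values, and the function name is a hypothetical helper, not something from the Ecosystm estimate.

```python
def implied_annual_growth(start_share: float, end_share: float, years: int) -> float:
    """Compound annual growth rate needed to move from start_share to end_share."""
    return (end_share / start_share) ** (1 / years) - 1


# Assumed boundary values: 5% share in 2024, 20% share after 4 or 5 years.
for years in (4, 5):
    rate = implied_annual_growth(0.05, 0.20, years)
    print(f"{years} years: AI share of tech spend grows about {rate:.0%} per year")
```

Under these assumptions, the share would need to grow by roughly 41% a year over four years, or roughly 32% a year over five. Note that this is growth in AI's share of the budget, not in absolute spend.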

Generative AI is seeing enterprise interest and early adoption enhancing efficiency, fostering innovation, and pushing the boundaries of possibility. It has the potential of reshaping industries – and fast!

However, alongside its immense potential, Generative AI also raises concerns. Ethical considerations surrounding data privacy and security come to the forefront, as powerful AI systems handle vast amounts of sensitive information.

Addressing these concerns through responsible AI development and thoughtful regulation will be crucial to harnessing the full transformative power of Generative AI.

Read on to find out the key challenges faced in implementing Generative AI and explore emerging use cases in industries such as Financial Services, Retail, Manufacturing, and Healthcare.


The Manufacturing industry is at a crossroads today. It faces challenges such as geopolitical risks, supply chain disruptions, changing regulatory environments, workforce shortages, and changing consumer demands. Overcoming these requires innovation, collaboration, and proactive adaptation.

Fortunately, many of these challenges can be mitigated by technology. The future of Manufacturing will be shaped by advanced technology, automation, and AI. We are seeing early evidence of how smart factories, robotics, and 3D printing are transforming production processes for increased efficiency and customisation.

Manufacturing is all set to become more agile, efficient, and sustainable.

Read on to find out the changing priorities and key trends in Manufacturing; about the World Economic Forum’s Global Lighthouse Network initiative; and what Ecosystm advisor Kaushik Ghatak sees as the Future of Manufacturing.


When non-organic (man-made) fabric was introduced into fashion, there were a number of harsh warnings about using polyester and man-made synthetic fibres, including their flammability.

In creating non-organic data sets, should we also be creating warnings on their use and flammability? Let’s look at why synthetic data is used in industries such as Financial Services, Automotive as well as for new product development in Manufacturing.

Synthetic Data Defined

Synthetic data can be defined as data that is artificially developed rather than being generated by actual interactions. It is often created with the help of algorithms and is used for a wide range of activities, including as test data for new products and tools, for model validation, and in AI model training. Synthetic data is a type of data augmentation which involves creating new and representative data.

Why is it used?

The main reasons why synthetic data is used instead of real data are cost, privacy, and testing. Let’s look at more specifics on this:

- Data privacy. When privacy requirements limit data availability or how it can be used. For example, in Financial Services where restrictions around data usage and customer privacy are particularly limiting, companies are starting to use synthetic data to help them identify and eliminate bias in how they treat customers – without contravening data privacy regulations.

- Data availability. When the data needed for testing a product does not exist or is not available to the testers. This is often the case for new releases.

- Data for testing. When training data is needed for machine learning algorithms but, as in the case of autonomous vehicles, is expensive to generate in real life.

- Training across third parties using cloud. When moving private data to cloud infrastructures involves security and compliance risks. Moving synthetic versions of sensitive data to the cloud can enable organisations to share data sets with third parties for training across cloud infrastructures.

- Data cost. Producing synthetic data through a generative model is significantly more cost-effective and efficient than collecting real-world data. With synthetic data, it becomes cheaper and faster to produce new data once the generative model is set up.
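The idea of setting up a generative model once and then sampling from it cheaply can be sketched as follows. This is a deliberately minimal illustration, not a production approach: it assumes purely numeric data, models each column as an independent normal distribution (so correlations between columns are lost), and uses made-up records and hypothetical function names.

```python
import random
import statistics


def fit_column_model(rows):
    """Estimate a (mean, standard deviation) pair for each numeric column."""
    columns = list(zip(*rows))
    return [(statistics.mean(col), statistics.stdev(col)) for col in columns]


def sample_synthetic(model, n, seed=None):
    """Draw n synthetic rows, sampling each column independently."""
    rng = random.Random(seed)
    return [tuple(rng.gauss(mu, sigma) for mu, sigma in model) for _ in range(n)]


# Hypothetical "real" records: (monthly_spend, transactions_per_month)
real_rows = [(120.0, 8), (95.5, 5), (210.0, 14), (150.0, 9), (80.0, 4)]

model = fit_column_model(real_rows)                     # one-off setup cost
synthetic_rows = sample_synthetic(model, n=1000, seed=42)  # cheap to repeat
```

Once `model` exists, producing another thousand rows is a single function call, which is the cost advantage described above. Real generative models are far richer than this, but the fit-once, sample-many pattern is the same.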

Why should it cause concern?

If the real dataset contains biases, data augmented from it will contain them too. Identifying the optimal data augmentation strategy is therefore important.

If the synthetic set doesn’t truly represent the original customer data set, it might contain the wrong buying signals regarding what customers are interested in or are inclined to buy.

Synthetic data also requires some form of output/quality control and internal regulation, specifically in highly regulated industries such as Financial Services.
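One simple form such a quality check could take is comparing how often each category appears in the real and synthetic sets. The sketch below is an assumption-laden example: the labels, the sample data, and the idea of a single "largest gap" figure are all illustrative, and a check like this only tests representativeness. It would not detect a bias that the synthetic set faithfully inherited from the real data.

```python
from collections import Counter


def proportions(labels):
    """Share of each label in a list of categorical values."""
    counts = Counter(labels)
    total = len(labels)
    return {label: count / total for label, count in counts.items()}


def max_proportion_gap(real_labels, synthetic_labels):
    """Largest absolute difference in any label's share between the two sets."""
    real_p = proportions(real_labels)
    synth_p = proportions(synthetic_labels)
    all_labels = set(real_p) | set(synth_p)
    return max(abs(real_p.get(l, 0.0) - synth_p.get(l, 0.0)) for l in all_labels)


# Hypothetical outcomes in the original and synthetic customer sets.
real = ["approved"] * 70 + ["declined"] * 30
synthetic = ["approved"] * 85 + ["declined"] * 15

gap = max_proportion_gap(real, synthetic)
print(f"Largest gap in category share: {gap:.0%}")
```

Here the synthetic set over-represents approvals by 15 percentage points, which is the kind of drift that could send the wrong signals if it went unnoticed. What counts as an acceptable gap is a judgement for the organisation and its regulators.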

Creating incorrect synthetic data can also get a company in hot water with external regulators. For example, if a company created a product that harmed someone or didn’t work as advertised, it could lead to substantial financial penalties and, possibly, closer scrutiny in the future.

Conclusion

Synthetic data allows us to continue developing new and innovative products and solutions when the data necessary to do so wouldn’t otherwise be present or available due to volume, data sensitivity or user privacy challenges. Generating synthetic data comes with the flexibility to adjust its nature and environment as and when required, both to improve the performance of the model and to create opportunities to check for outliers and extreme conditions.

As we return to the office, there is a growing reliance on devices to tell us how safe and secure the environment is for our return. And in specific application areas, such as Healthcare and Manufacturing, IoT data is critical for decision-making. In some sectors such as Health and Wellness, IoT devices collect personally identifiable information (PII). IoT technology is so critical to our current infrastructures that the physical wellbeing of both individuals and organisations can be at risk.

Trust & Data

IoT devices are also vulnerable to breaches if not properly secured. And with a significant increase in cybersecurity events over the last year, the reliance on data from IoT is driving the need for better data integrity. Security features such as data integrity and device authentication can be accomplished through the use of digital certificates, and these features need to be designed as part of the device prior to manufacturing. If you cannot trust the IoT devices or their data, there is no point in collecting data, running analytics, and executing decisions based on the information collected.

We discuss the role of embedding digital certificates into the IoT device at manufacture to enable better security and ongoing management of the device.

Securing IoT Data from the Edge

So much of what is happening on networks in terms of real-time data collection happens at the Edge. But because of the vast array of IoT devices connecting at the Edge, there has not been a way of baking trust into the manufacture of the devices. With a push to get the devices to market, many manufacturers historically have bypassed efforts on security. Devices have been added on the network at different times from different sources.

There is a need to verify the IoT devices and secure them, making sure to have an audit trail on what you are connecting to and communicating with.

So from a product design perspective, this leads us to several questions:

- How do we ensure the integrity of data from devices if we cannot authenticate them?

- How do we ensure that the operational systems being automated are controlled as intended?

- How do we authenticate the device on the network making the data request?

Using a Public Key Infrastructure (PKI) approach maintains assurance, integrity and confidentiality of data streams. PKI has become an important way to secure IoT device applications, and this needs to be built into the design of the device. Device authentication is also an important component, in addition to securing data streams. With good design and PKI management that is up to the task, you should be able to proceed with confidence in the data created at the Edge.
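One common way certificate-based device authentication is enforced in practice is mutual TLS, where a gateway refuses any connection from a device that cannot present a certificate chaining to a trusted certificate authority. The sketch below uses Python's standard `ssl` module to show the gateway side. It is an illustration under assumptions, not the approach of any vendor named in this article: the function name is hypothetical, and the file arguments are placeholders for wherever your CA bundle and gateway credentials live.

```python
import ssl


def build_gateway_context(ca_file=None, cert_file=None, key_file=None):
    """TLS server context that rejects devices without a valid client certificate."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    # Require every connecting device to present a certificate.
    ctx.verify_mode = ssl.CERT_REQUIRED
    if ca_file:
        # CA certificate(s) that device certificates must chain to.
        ctx.load_verify_locations(cafile=ca_file)
    if cert_file:
        # The gateway's own certificate and private key.
        ctx.load_cert_chain(certfile=cert_file, keyfile=key_file)
    return ctx


# Built without files here so the sketch runs anywhere; a real gateway
# would pass its CA bundle, certificate and key paths.
context = build_gateway_context()
```

Because the same handshake also encrypts the channel, this one mechanism covers both questions raised above: authenticating the device making the request and protecting the data stream it sends.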

Johnson Controls and DigiCert have designed a new way of managing PKI certification for IoT devices through their partnership and the integration of the DigiCert ONE™ PKI management platform with the Johnson Controls OpenBlue IoT device platform. Based on an advanced, container-based design, DigiCert ONE allows organisations to implement robust PKI deployment and management in any environment, roll out new services, and manage users and devices across the organisation at any scale, no matter the stage of their lifecycle. This creates an operational synergy within the Operational Technology (OT) and IoT spaces to ensure that hardware, software and communication remain trusted throughout the lifecycle.

Rationale on the Role of Certification in IoT Management

Digital certificates ensure the integrity of data and device communications through encryption and authentication, ensuring that transmitted data are genuine and have not been altered or tampered with. With government regulations worldwide mandating secure transit (and storage) of PII data, PKI can help ensure compliance with the regulations by securing the communication channel between the device and the gateway.

Connected IoT devices interact with each other through machine to machine (M2M) communication. Each of these billions of interactions will require authentication of device credentials for the endpoints to prove the device’s digital identity. In such scenarios, an identity management approach based on passwords or passcodes is not practical, and PKI digital certificates are by far the best option for IoT credential management today.

Creating lifecycle management for connected devices, including revocation of expired certificates, is another example where PKI can help to secure IoT devices. Having a robust management platform that enables device management, revocation and renewal of certificates is a critical component of a successful PKI. IoT devices will also need regular patches and upgrades to their firmware, with code signing being critical to ensure the integrity of the downloaded firmware – another example of the close linkage between the IoT world and the PKI world.
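The lifecycle decisions a management platform has to make for each device certificate can be sketched as a simple classification. Everything here is illustrative: the record fields, the 30-day renewal window, the device names and the dates are assumptions, and a real platform would take revocation status from its certificate authority rather than from a local flag.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class DeviceCertificate:
    device_id: str
    expires_on: date
    revoked: bool = False


def classify(cert, today, renewal_window_days=30):
    """Return the lifecycle action a certificate needs as of `today`."""
    if cert.revoked:
        return "revoked"
    if cert.expires_on < today:
        return "expired"
    if cert.expires_on - today <= timedelta(days=renewal_window_days):
        return "renew"
    return "valid"


today = date(2024, 1, 1)
inventory = [
    DeviceCertificate("sensor-001", date(2025, 6, 30)),
    DeviceCertificate("sensor-002", date(2024, 1, 15)),   # inside renewal window
    DeviceCertificate("camera-003", date(2023, 11, 1)),   # already expired
    DeviceCertificate("valve-004", date(2024, 12, 31), revoked=True),
]

status = {cert.device_id: classify(cert, today) for cert in inventory}
```

Running this over a full device inventory gives operations teams a worklist: which devices to renew before they drop off the network, and which should already be refused.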

Summary

PKI certification benefits both people and processes. PKI enables identity assurance while digital certificates validate the identity of the connected device. Use of PKI for IoT is a necessary trend for a sense of trust in the network and for quality control of device management.

Identifying the IoT device is critical in managing its lifespan and recognizing its legitimacy in the network. Building in the ability for PKI at the device’s manufacture is critical to enable the device for its lifetime. By recognizing a device, information on it can be maintained in an inventory and its lifecycle and replacement can be better managed. Once a certificate has been distributed and certified, having control of the PKI systems enables lifecycle management.

In this Insight, our guest author Anupam Verma talks about how the Global Capability Centres (GCCs) in India are poised to become Global Transformation Centres. “In the post-COVID world, industry boundaries are blurring, and business models are being transformed for the digital age. While traditional functions of GCCs will continue to be providing efficiencies, GCCs will be ‘Digital Transformation Centres’ for global businesses.”

India has a lot to offer to the world of technology and transformation. Attracted by the talent pool, enabling policies, digital infrastructure, and competitive cost structure, MNCs have long embraced India as a preferred destination for Global Capability Centres (GCCs). It has been reported that India has more than 1,700 GCCs with an estimated global market share of over 50%.

GCCs employ around 1 million Indian professionals and have an immense impact on the economy, contributing an estimated USD 30 billion. US MNCs have the largest presence in the market and the dominating industries are BFSI, Engineering & Manufacturing, Tech & Consulting.

GCC capabilities have always been evolving

The journey began with MNCs setting up captives for cost optimisation & operational excellence. GCCs started handling operations (such as back-office and business support functions), IT support (such as app development and maintenance, remote IT infrastructure, and help desk) and customer service contact centres for the parent organisation.

In the second phase, MNCs started leveraging GCCs as centres of excellence (CoEs). The focus then was product innovation, Engineering Design & R&D. BFSI and Professional Services firms started expanding the scope to cover research, underwriting, consulting, etc. Some global MNCs that have large GCCs in India are Apple, Microsoft, Google, Nissan, Ford, Qualcomm, Cisco, Wells Fargo, Bank of America, Barclays, Standard Chartered, and KPMG.

In the post-COVID world, industry boundaries are blurring, and business models are being transformed for the digital age. While traditional functions of GCCs will continue to be providing efficiencies, GCCs will be “Digital Transformation Centres” for global businesses.

The New Age GCC in the post-COVID world

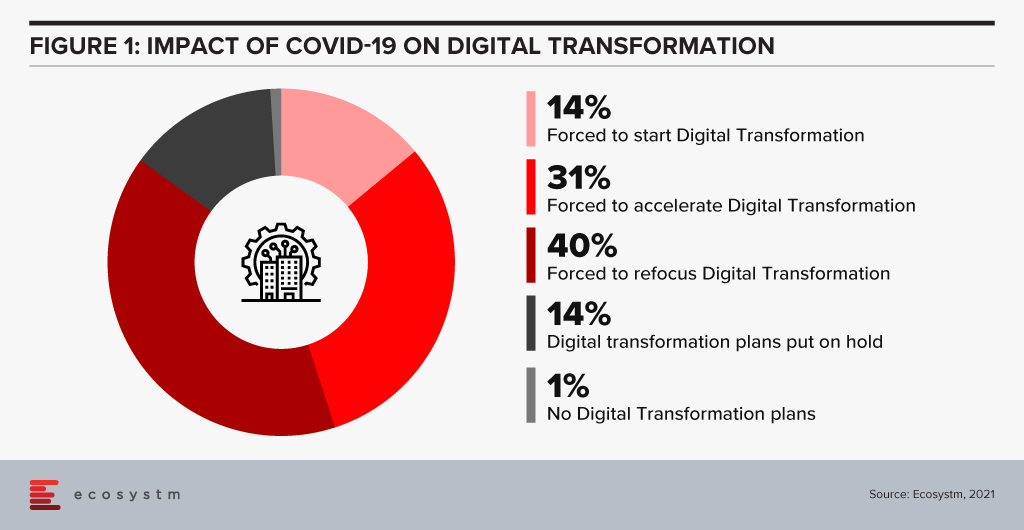

On one hand, the pandemic broke through cultural barriers that had prevented remote operations and work. The world became remote everything! On the other hand, it accelerated digital adoption in organisations. Businesses are re-imagining customer experiences and fast-tracking digital transformation enabled by technology (Figure 1). High digital adoption and rising customer expectations will also be a big catalyst for change.

In the last few years, India has seen a surge in its talent pool in emerging technologies such as data analytics, experience design, AI/ML, robotic process automation, IoT, cloud, blockchain and cybersecurity. GCCs in India will leverage this talent pool and play a pivotal role in enabling digital transformation at a global scale. GCCs will have direct and significant impacts on global business performance and top line growth creating long-term stakeholder value – and not be only about cost optimisation.

GCCs in India will also play an important role in digitisation and automation of existing processes, risk management and fraud prevention using data analytics and managing new risks like cybersecurity.

More and more MNCs in traditional businesses will add GCCs in India over the next decade and the existing 1,700 plus GCCs will grow in scale and scope focussing on innovation. Shift of supply chains to India will also be supported by Engineering R & D Centres. GCCs passed the pandemic test with flying colours when an exceptionally large workforce transitioned to the Work from Home model. In a matter of weeks, the resilience, continuity, and efficiency of GCCs returned to pre-pandemic levels with a distributed and remote workforce.

A Final Take

Having said that, I believe the growth spurt in GCCs in India will come from new-age businesses. Consumer-facing platforms (eCommerce marketplaces, Healthtechs, Edtechs, and Fintechs) are creating digital native businesses. As of June 2021, there are more than 700 unicorns trying to solve different problems using technology and data. Currently, very few unicorns have GCCs in India (notable names being Uber, Grab, Gojek). However, this segment will be one of the biggest growth drivers.

Currently, only 10% of the GCCs in India are from Asia Pacific organisations. Some of the prominent names are Hitachi, Rakuten, Panasonic, Samsung, LG, and Foxconn. Asian MNCs have an opportunity to move fast and stay relevant. This segment is also expected to grow disproportionately.

New age GCCs in India have the potential to be the crown jewel for global MNCs. For India, this has a huge potential for job creation and development of Smart City ecosystems. In this decade, growth of GCCs will be one of the core pillars of India’s journey to a USD 5 trillion economy.

The views and opinions mentioned in the article are personal.

Anupam Verma is part of the Senior Leadership team at ICICI Bank and his responsibilities have included leading the Bank’s strategy in South East Asia to play a significant role in capturing Investment, NRI remittance, and trade flows between SEA and India.

In 2020, much of the focus for organisations was on business continuity, and on empowering their employees to work remotely. Their primary focus in managing customer experience was on re-inventing their product and service delivery to their customers as regular modes were disrupted. As they emerge from the crisis, organisations will realise that it is not only their customer experience delivery models that have changed – but customer expectations have also evolved in the last few months. They are more open to digital interactions and in many cases the concept of brand loyalty has been diluted. This will change everything for organisations’ customer strategies. And digital technology will play a significant role as they continue to pivot to succeed in 2021 – across regions, industries and organisations.

Ecosystm Advisors Audrey William, Niloy Mukherjee and Tim Sheedy present the top 5 Ecosystm predictions for Customer Experience in 2021. This is a summary of the predictions – the full report (including the implications) is available to download for free on the Ecosystm platform.

The Top 5 Customer Experience Trends for 2021

- Customer Experience Will Go Truly Digital

COVID-19 made the few businesses that did not have an online presence acutely aware that they need one – yesterday! We have seen at least 4 years of digital growth squeezed into six months of 2020. And this is only the beginning. While in 2020, the focus was primarily on eCommerce and digital payments, there will now be a huge demand for new platforms to be able to interact digitally with the customer, not just to be able to sell something online.

Digital customer interactions with brands and products – through social media, online influencers, interactive AI-driven apps, online marketplaces and the like – will accelerate dramatically in 2021. The organisations that will be successful will be the ones that are able to interact with their customers and connect with them at multiple touchpoints across the customer journey. Companies unable to do that will struggle.

- Digital Engagement Will Expand Beyond the Traditional Customer-focused Industries

One of the biggest changes in 2020 has been the increase in digital engagement by industries that have not traditionally had a strong eye on CX. This trend is likely to accelerate and be further enhanced in 2021.

Healthcare has traditionally been focused on improving clinical outcomes – and patient experience has been a byproduct of that focus. Many remote care initiatives have the core objective of keeping patients out of the already over-crowded healthcare provider organisations. These initiatives will now have a strong CX element to them. The need to disseminate information to citizens has also heightened expectations on how people want their healthcare organisations and Public Health to interact with them. The public sector will dramatically increase digital interactions with citizens, having been forced to look at digital solutions during the pandemic.

Other industries that have not had a traditional focus on CX will not be far behind. The Primary & Resources industries are showing an interest in Digital CX almost for the first time. Most of these businesses are looking to transform how they manage their supply chains from mine/farm to the end customer. Energy and Utilities and Manufacturing industries will also begin to benefit from a customer focus – primarily looking at technology – including 3D printing – to customise their products and services for better CX and a larger share of the market.

- Brands that Establish a Trusted Relationship Can Start Having Fun Again

Building trust was at the core of most businesses’ CX strategies in 2020 as they attempted to provide certainty in a world generally devoid of it. But in the struggle to build a trusted experience and brand, most businesses lost the “fun”. In fact, for many businesses, fun was off the agenda entirely. Soft drink brands, travel providers, clothing retailers and many other brands typically known for their fun or cheeky experiences moved the needle to “trust” and dialed it up to 11. But with a number of vaccines on the horizon, many CX professionals will look to return to pre-pandemic experiences that look to delight and sometimes even surprise customers.

However, many companies will get this wrong. Customers will not be looking for just fun or just great experiences. Trust still needs to be at the core of the experience. Customers will not return to pre-pandemic thinking – not immediately anyway. You can create a fun experience only if you have earned their trust first. And trust is earned by not only providing easy and effective experiences, but by being authentic.

- Customer Data Platforms Will See Increased Adoption

Enterprises continue to struggle to have a single view of the customer. There is an immense interest in making better sense of data across every touchpoint – from mobile apps, websites, social media, in-store interactions and the calls to the contact centre – to be able to create deeper customer profiles. CRM systems have been the traditional repositories of customer data, helping build a sales pipeline, and providing Marketing teams with the information they need for lead generation and marketing campaigns. However, CRM systems have an incomplete view of the customer journey. They often collect and store the same data from limited touchpoints – getting richer insights and targeted action recommendations from the same datasets is not possible in today’s world. And organisations struggled to pivot their customer strategies during COVID-19. Data residing in silos was an obstacle to driving better customer experience.

We are living in an age where customer journeys and preferences are becoming complex to decipher. An API-based CDP can ingest data from any channel of interaction across multiple journeys and create unique and detailed customer profiles. A complete overhaul of how data can be segregated based on a more accurate and targeted profile of the customer from multiple sources will be the way forward in order to drive a more proactive CX engagement.
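The core idea of ingesting interactions from any channel and folding them into one profile per customer can be sketched in a few lines. This is a simplified illustration, not how any particular CDP product works: the event fields, channel names and sample data are hypothetical, and it assumes every event already carries a shared `customer_id`. In practice, resolving identities across channels is often the hardest part.

```python
from collections import defaultdict


def build_profiles(events):
    """Group events from all channels into one time-ordered profile per customer."""
    profiles = defaultdict(lambda: {"channels": set(), "events": []})
    for event in events:
        profile = profiles[event["customer_id"]]
        profile["channels"].add(event["channel"])
        profile["events"].append(
            (event["timestamp"], event["channel"], event["action"])
        )
    for profile in profiles.values():
        profile["events"].sort()  # ISO-style timestamps sort chronologically
    return dict(profiles)


# Hypothetical events arriving from different touchpoints.
events = [
    {"customer_id": "c-1", "channel": "web", "action": "viewed_product", "timestamp": "2021-01-05T10:00"},
    {"customer_id": "c-1", "channel": "contact_centre", "action": "asked_about_delivery", "timestamp": "2021-01-06T09:30"},
    {"customer_id": "c-2", "channel": "mobile_app", "action": "added_to_cart", "timestamp": "2021-01-05T11:15"},
    {"customer_id": "c-1", "channel": "in_store", "action": "purchased", "timestamp": "2021-01-07T16:45"},
]

profiles = build_profiles(events)
```

The profile for `c-1` now shows a journey that spans the website, the contact centre and a store visit, which is the cross-channel view a CRM system fed from limited touchpoints would miss.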

- Voice of the Customer Programs Will be Transformed

Designing surveys and Voice of Customer programs can be time-consuming and many organisations that have a routine of running these surveys use a fixed pattern for the data they collect and analyse. However, some organisations understand that just analysing results from a survey or CSAT score does not say much about what customers’ next plan of action will be. While it may give an idea of whether particular interactions were satisfactory, it gives no indication of whether they are likely to move to another brand; if they needed more assistance; if there was an opportunity to upsell or cross sell; or even what new products and services need to be introduced. Some customers will just tick the box as a way of closing off a feedback form or survey. Leading organisations realise that this may not be a good enough indication of a brand’s health.

Organisations will look beyond CSAT to other parameters and attributes. It is time to pay greater attention to the Voice of the Customer – and old methods alone will not suffice. Organisations want a 360-degree view of their customers’ opinions.