Southeast Asia’s massive workforce, the third-largest globally, faces a critical upskilling gap, especially with the rise of AI. While AI adoption promises a USD 1 trillion GDP boost by 2030, unlocking this potential requires a future-proof workforce equipped with AI expertise.

Governments and technology providers are joining forces to build strong AI ecosystems, accelerating R&D and nurturing homegrown talent. It’s a tight race, but with focused investments, Southeast Asia can bridge the digital gap and turn its AI aspirations into reality.

Read on to find out how countries like Singapore, Thailand, Vietnam, and the Philippines are implementing comprehensive strategies to build AI literacy and expertise among their populations.

Download ‘Upskilling for the Future: Building AI Capabilities in Southeast Asia’ as a PDF

Big Tech Invests in AI Workforce

Southeast Asia’s tech scene heats up as Big Tech giants scramble for dominance in emerging tech adoption.

Microsoft is partnering with governments, nonprofits, and corporations across Indonesia, Malaysia, the Philippines, Thailand, and Vietnam to equip 2.5M people with AI skills by 2025. It will also train 100,000 Filipino women in AI and cybersecurity.

Singapore has set an ambitious goal to triple its AI workforce by 2028. To support this, AWS will train 5,000 individuals annually in AI skills over the next three years.

NVIDIA has partnered with FPT Software to build an AI factory, while also championing AI education through Vietnamese schools and universities. In Malaysia, NVIDIA has launched an AI sandbox to nurture 100 AI companies, targeting USD 209M by 2030.

Singapore Aims to be a Global AI Hub

Singapore is doubling down on upskilling, global leadership, and building an AI-ready nation.

Singapore has launched its second National AI Strategy (NAIS 2.0) to solidify its global AI leadership. The aim is to triple the AI talent pool to 15,000, establish AI Centres of Excellence, and accelerate public sector AI adoption. The strategy focuses on developing AI “peaks of excellence” and empowering people and businesses to use AI confidently.

In keeping with this vision, Singapore’s 2024 budget includes measures to train workers over 40 on in-demand skills, preparing the workforce for AI. The country will also invest USD 27M to build AI expertise, offering 100 AI scholarships for students and attracting experts from around the world to collaborate with Singapore.

Thailand Aims for AI Independence

Thailand’s ‘Ignite Thailand’ 2030 vision focuses on boosting innovation, R&D, and the tech workforce.

Thailand is launching the second phase of its National AI Strategy, with a USD 42M budget to develop an AI workforce and create a Thai Large Language Model (ThaiLLM). The plan aims to train 30,000 workers in sectors like tourism and finance, reducing reliance on foreign AI.

The Thai government is partnering with Microsoft to build a new data centre in Thailand, offering AI training for over 100,000 individuals and supporting the growing developer community.

Building a Digital Vietnam

Vietnam focuses on AI education, policy, and empowering women in tech.

Vietnam’s National Digital Transformation Programme aims to create a digital society by 2030, focusing on integrating AI into education and workforce training. It supports AI research through universities and aims to tackle challenges such as closing skill gaps, building digital infrastructure, and establishing comprehensive policies.

The Vietnamese government and UNDP launched Empower Her Tech, a digital skills initiative for female entrepreneurs, offering 10 online sessions on GenAI and no-code website creation tools.

The Philippines Gears Up for AI

The country focuses on investment, public-private partnerships, and building a tech-ready workforce.

With its strong STEM education and programming skills, the Philippines is well-positioned for an AI-driven market, and it has allocated USD 30M for AI research and development.

The Philippine government is partnering with entities like IBPAP, Google, AWS, and Microsoft to train thousands in AI skills by 2025, offering both training and hands-on experience with cutting-edge technologies.

The strategy also funds AI research projects and partners with universities to expand AI education. Companies like KMC Teams will help establish and manage offshore AI teams, providing infrastructure and support.

When OpenAI released ChatGPT, it quickly became obvious that we were entering a new era of AI. Every tech company scrambled to release a comparable service or to infuse its products with some form of GenAI. Microsoft, piggybacking on its investment in OpenAI, was the fastest to market with impressive text and image generation for the mainstream. Copilot is now embedded across its software, including Microsoft 365, Teams, GitHub, and Dynamics, to supercharge the productivity of developers and knowledge workers. However, the race is on: AWS and Google are actively developing their own GenAI capabilities.

AWS Catches Up as Enterprise Gains Importance

Without a consumer-facing AI assistant, AWS was less visible during the early stages of the GenAI boom. It has since rectified this with a USD 4B investment in Anthropic, the maker of Claude. The partnership benefits both Amazon and Anthropic, bringing the Claude 3 family of models to enterprise customers, hosted on AWS infrastructure.

As GenAI quickly moves from shadow IT to an enterprise-grade tool, AWS is catching up by capitalising on its position as cloud leader. Many organisations view AWS as a strategic partner, already housing their data, powering critical applications, and providing an environment that developers are accustomed to. The ability to augment models with private data already residing in AWS data repositories will make it an attractive GenAI partner.

AWS has announced the general availability of Amazon Q, its suite of GenAI tools aimed at developers and businesses. Amazon Q Developer expands on what was launched as CodeWhisperer last year. It helps developers accelerate building, testing, and troubleshooting code, allowing them to focus on higher-value work. The tool integrates directly with a developer’s chosen IDE and uses natural language prompts to generate new functions, modernise legacy code, write security tests, and explain code.

Amazon Q Business is an AI assistant that can safely ingest an organisation’s internal data and connect with popular applications, such as Amazon S3, Salesforce, Microsoft Exchange, Slack, ServiceNow, and Jira. Access controls can be implemented so that data is only shared with authorised users. It leverages AWS’s visualisation tool, QuickSight, to summarise findings, and users can query it directly from within applications like Slack.

Going a step further, Amazon Q Apps (in preview) allows employees to build their own lightweight GenAI apps using natural language. These employee-created apps can then be published to an enterprise’s app library for broader use. This no-code approach to development and deployment is part of a drive to use AI to increase productivity across lines of business.

AWS continues to expand Bedrock, its managed service providing access to foundation models from companies like Mistral AI, Stability AI, Meta, and Anthropic. The service also allows customers to bring their own model if they have already pre-trained an LLM. Once a model is selected, organisations can extend its knowledge base using Retrieval-Augmented Generation (RAG) to privately access proprietary data. Models can also be refined over time to improve results and offer personalised experiences for users. Another feature, Agents for Amazon Bedrock, allows multi-step tasks to be performed by invoking APIs or searching knowledge bases.
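To make the RAG pattern more concrete, here is a minimal, provider-agnostic sketch in Python. It does not use the Bedrock API: retrieval is a simple bag-of-words cosine similarity standing in for an embedding model and vector store, and the function names (`retrieve`, `build_prompt`) and sample documents are hypothetical. The assembled prompt would then be sent to whichever foundation model the organisation has selected.

```python
import math
import re
from collections import Counter

def tokenize(text: str) -> list[str]:
    # Lowercase and split on non-alphanumeric characters.
    return re.findall(r"[a-z0-9]+", text.lower())

def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two term-frequency vectors.
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    # Rank private documents by similarity to the query and keep the top k.
    q = Counter(tokenize(query))
    ranked = sorted(documents, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]

def build_prompt(query: str, documents: list[str], k: int = 2) -> str:
    # Augment the user's question with retrieved context before generation.
    context = "\n".join(f"- {d}" for d in retrieve(query, documents, k))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}"
    )

docs = [
    "Refunds are processed within 5 business days of approval.",
    "Our Singapore office is open Monday to Friday.",
    "Premium customers receive refunds within 2 business days.",
]
print(build_prompt("How long do refunds take?", docs))
```

The key design point is that the model itself is unchanged: proprietary knowledge enters only through the prompt, so it can be updated or access-controlled without retraining.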

To address AI safety concerns, Guardrails for Amazon Bedrock is now available to minimise harmful content generation and avoid negative outcomes for users and brands. Contentious topics can be filtered by varying thresholds, and Personally Identifiable Information (PII) can be masked. Enterprise-wide policies can be defined centrally and enforced across multiple Bedrock models.
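The policy ideas described here (topic filters with adjustable thresholds, PII masking, and one central policy applied across models) can be illustrated with a small Python sketch. This is not the Guardrails for Amazon Bedrock API: the `Policy` class, the regular expressions, and the per-topic scores (which in practice would come from a classifier) are assumptions for illustration only.

```python
import re
from dataclasses import dataclass, field

# Hypothetical PII patterns; real deployments need far more robust detection.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.]+"),
    "PHONE": re.compile(r"\+?\d[\d -]{7,}\d"),
}

@dataclass
class Policy:
    # Maximum tolerated score per contentious topic (0 blocks any mention).
    topic_thresholds: dict[str, float] = field(default_factory=dict)

    def apply(self, text: str, topic_scores: dict[str, float]) -> str:
        # Block the response if any topic score exceeds its threshold.
        for topic, score in topic_scores.items():
            limit = self.topic_thresholds.get(topic)
            if limit is not None and score > limit:
                return f"[blocked: {topic}]"
        # Otherwise mask PII before the text reaches the user.
        for label, pattern in PII_PATTERNS.items():
            text = pattern.sub(f"[{label}]", text)
        return text

# One central policy, reused for outputs from any model.
policy = Policy(topic_thresholds={"investment_advice": 0.5})
print(policy.apply("Contact jane@example.com or +65 9123 4567.", {"investment_advice": 0.1}))
print(policy.apply("Buy this stock now!", {"investment_advice": 0.9}))
```

Defining the policy once and applying it to every model’s output is what allows enterprise-wide rules to stay consistent even when different teams use different models.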

Google Targeting Creators

Because of the potential impact on its core search business, Google took a more measured approach to entering the GenAI field than newer players like OpenAI and Perplexity. The usability of Google’s chatbot, Gemini, has improved significantly since its initial launch under the name Bard. Its image generator, however, was pulled earlier this year while Google works out how to tread the line between creativity and sensitivity. Based on recent demos, though, Google plans to target content creators with image (Imagen 3), video (Veo), and music (Lyria) generation.

Like Microsoft, Google has seen that GenAI is a natural fit for collaboration and office productivity. Gemini can now assist users from the sidebar of Workspace apps such as Docs, Sheets, Slides, Drive, Gmail, and Meet. With Google Search already a critical productivity tool for most knowledge workers, Google is determined to remain a leader in the GenAI era.

At its recent Cloud Next event, Google announced Gemini Code Assist, a GenAI-powered development tool that is more robust than its previous offering. Using RAG, it can tailor suggestions to an organisation’s private codebase. With a one-million-token context window, it also has full codebase awareness, making it possible to make extensive changes at once.
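A quick back-of-the-envelope check shows what a one-million-token window means in practice. The sketch below assumes roughly four characters per token, a common rule of thumb for English text; real tokenisers vary and source code often tokenises less efficiently, so treat the result as an order-of-magnitude estimate. The function names and file sizes are hypothetical.

```python
import math

CONTEXT_WINDOW_TOKENS = 1_000_000
CHARS_PER_TOKEN = 4.0  # assumption: rough heuristic; varies by tokeniser and language

def estimated_tokens(total_chars: int, chars_per_token: float = CHARS_PER_TOKEN) -> int:
    # Round up so a partial token still counts.
    return math.ceil(total_chars / chars_per_token)

def fits_in_context(file_sizes_chars: list[int], window: int = CONTEXT_WINDOW_TOKENS) -> bool:
    # Compare the estimated size of the whole codebase with the context window.
    return estimated_tokens(sum(file_sizes_chars)) <= window

# Hypothetical codebase: 500 files averaging 6,000 characters each (3M characters).
codebase = [6_000] * 500
print(estimated_tokens(sum(codebase)))  # 750000
print(fits_in_context(codebase))        # True
```

Under this assumption, a codebase much beyond about four million characters would no longer fit in a single prompt, which is where RAG over the private codebase complements the large window.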

The Hardware Problem of AI

The demands that GenAI places on compute and memory have created a shortage of AI chips, causing the valuation of GPU giant NVIDIA to skyrocket into the trillions of dollars. Although initial model training is the most hardware-intensive stage, its importance will only rise as organisations use proprietary data to develop custom models. Inferencing is less compute-heavy for early use cases, such as text generation and coding, but those needs will be dwarfed by the demands of image, video, and audio creation.

Realising that compute and memory will be a bottleneck, the hyperscalers are looking to ease this constraint with chip designs of their own. AWS has custom-built specialised chips, Trainium2 and Inferentia2, to bring down costs compared with traditional compute instances. Similarly, Microsoft announced the Maia 100, which it developed in conjunction with OpenAI. Google also revealed its sixth-generation tensor processing unit (TPU), Trillium, with significant increases in power efficiency, high-bandwidth memory capacity, and peak compute performance.

The Future of the GenAI Landscape

As enterprises gain experience with GenAI, they will look to partner with providers that they can trust. Challenges around data security, governance, lineage, model transparency, and hallucination management will all need to be resolved. Additionally, controlling compute costs will begin to matter as GenAI initiatives start to scale. Enterprises should explore a multi-provider approach and leverage specialised data management vendors to ensure a successful GenAI journey.

Historically, data scientists have been the linchpins in the world of AI and machine learning, responsible for everything from data collection and curation to model training and validation. However, as the field matures, we’re witnessing a significant shift towards specialisation, particularly in data engineering and the strategic role of Large Language Models (LLMs) in data curation and labelling. The integration of AI into applications is also reshaping the landscape of software development and application design.

The Growth of Embedded AI

AI is being embedded into applications to enhance user experience, optimise operations, and provide insights that were previously inaccessible. For example, natural language processing (NLP) models are being used to power conversational chatbots for customer service, while machine learning algorithms are analysing user behaviour to customise content feeds on social media platforms. These applications leverage AI to perform complex tasks, such as understanding user intent, predicting future actions, or automating decision-making processes, making AI integration a critical component of modern software development.

This shift towards AI-embedded applications is not only changing the nature of the products and services offered but is also transforming the roles of those who build them. Since the traditional developer may not possess extensive AI skills, the role of data scientists is evolving, moving away from data engineering tasks and increasingly towards direct involvement in development processes.

The Role of LLMs in Data Curation

The emergence of LLMs has introduced a novel approach to handling data curation and processing tasks traditionally performed by data scientists. LLMs, with their profound understanding of natural language and ability to generate human-like text, are increasingly being used to automate aspects of data labelling and curation. This not only speeds up the process but also allows data scientists to focus more on strategic tasks such as model architecture design and hyperparameter tuning.
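One common way to put this into practice is to let an LLM propose labels and route only low-confidence items to human reviewers. In the Python sketch below, `llm_label` is a hypothetical stand-in (a simple keyword rule) for a real model call that returns a label with a confidence score, and the 0.8 threshold is an arbitrary assumption to be tuned per project.

```python
from dataclasses import dataclass

@dataclass
class Labelled:
    text: str
    label: str
    confidence: float

def llm_label(text: str) -> tuple[str, float]:
    # Hypothetical stand-in for an LLM call returning (label, confidence).
    lowered = text.lower()
    if "refund" in lowered:
        return "billing", 0.95
    if "crash" in lowered or "error" in lowered:
        return "technical", 0.9
    return "other", 0.4

def label_dataset(texts: list[str], threshold: float = 0.8) -> tuple[list[Labelled], list[Labelled]]:
    # Accept confident machine labels; queue the rest for human review.
    accepted, review = [], []
    for text in texts:
        label, confidence = llm_label(text)
        item = Labelled(text, label, confidence)
        (accepted if confidence >= threshold else review).append(item)
    return accepted, review

accepted, review = label_dataset([
    "I want a refund for my order",
    "The app crashes on login",
    "Love the new colours!",
])
print([a.label for a in accepted])  # ['billing', 'technical']
print([r.text for r in review])     # ['Love the new colours!']
```

The threshold trades automation against label quality: raising it sends more items to reviewers but reduces the risk of mislabelled training data.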

The accuracy of AI models is directly tied to the quality of the data they’re trained on. Incorrectly labelled data or poorly curated datasets can lead to biased outcomes, mispredictions, and ultimately, the failure of AI projects. The role of data engineers and the use of advanced tools like LLMs in ensuring the integrity of data cannot be overstated.

The Impact on Traditional Developers

Traditional software developers have primarily focused on writing code, debugging, and software maintenance, with a clear emphasis on programming languages, algorithms, and software architecture. However, as applications become more AI-driven, there is a growing need for developers to understand and integrate AI models and algorithms into their applications. This requirement presents a challenge for developers who may not have specialised training in AI or data science, and it is driving increasing demand for upskilling and cross-disciplinary collaboration to bridge the gap between traditional software development and AI integration.

Clear Role Differentiation: Data Engineering and Data Science

In response to this shift, the role of data scientists is expanding beyond the confines of traditional data engineering and data science, to include more direct involvement in the development of applications and the embedding of AI features and functions.

Data engineering has always been a foundational element of the data scientist’s role, and its importance has increased with the surge in data volume, variety, and velocity. Integrating LLMs into the data collection process represents a cutting-edge approach to automating the curation and labelling of data, streamlining the data management process, and significantly enhancing the efficiency of data utilisation for AI and ML projects.

Accurate data labelling and meticulous curation are paramount to developing models that are both reliable and unbiased. Errors in data labelling or poorly curated datasets can lead to models that make inaccurate predictions or, worse, perpetuate biases. The integration of LLMs into data engineering tasks is freeing data scientists from the burden of manual data labelling and curation. This has led to a more specialised data scientist role that devotes more time and resources to areas with greater impact.

The Evolving Role of Data Scientists

Data scientists, with their deep understanding of AI models and algorithms, are increasingly working alongside developers to embed AI capabilities into applications. This collaboration is essential for ensuring that AI models are effectively integrated, optimised for performance, and aligned with the application’s objectives.

- Model Development and Innovation. With the groundwork of data preparation laid by LLMs, data scientists can focus on developing more sophisticated and accurate AI models, exploring new algorithms, and innovating in AI and ML technologies.

- Strategic Insights and Decision Making. Data scientists can spend more time analysing data and extracting valuable insights that can inform business strategies and decision-making processes.

- Cross-disciplinary Collaboration. This shift also enables data scientists to engage more deeply in interdisciplinary collaboration, working closely with other departments to ensure that AI and ML technologies are effectively integrated into broader business processes and objectives.

- AI Feature Design. Data scientists are playing a crucial role in designing AI-driven features of applications, ensuring that the use of AI adds tangible value to the user experience.

- Model Integration and Optimisation. Data scientists are also involved in integrating AI models into the application architecture, optimising them for efficiency and scalability, and ensuring that they perform effectively in production environments.

- Monitoring and Iteration. Once AI models are deployed, data scientists work on monitoring their performance, interpreting outcomes, and making necessary adjustments. This iterative process ensures that AI functionalities continue to meet user needs and adapt to changing data landscapes.

- Research and Continued Learning. Finally, the transformation allows data scientists to dedicate more time to research and continued learning, staying ahead of the rapidly evolving field of AI and ensuring that their skills and knowledge remain cutting-edge.
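As one concrete illustration of the monitoring step, the Python sketch below computes the Population Stability Index (PSI), a widely used measure of how far the distribution of a feature or model score in production has drifted from its distribution at training time. PSI sums (a - e) * ln(a / e) over bins, where e and a are the expected and actual share of records per bin. PSI is one illustrative choice among many drift measures; the bin proportions are invented, and the 0.1 and 0.25 cut-offs are common rules of thumb rather than fixed standards.

```python
import math

def psi(expected: list[float], actual: list[float], eps: float = 1e-6) -> float:
    # Population Stability Index over pre-binned proportions.
    # eps avoids division by zero and log(0) for empty bins.
    total = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)
        total += (a - e) * math.log(a / e)
    return total

def drift_status(value: float) -> str:
    # Rule-of-thumb bands; tune for each model and feature.
    if value < 0.1:
        return "stable"
    if value < 0.25:
        return "moderate drift"
    return "significant drift"

training = [0.25, 0.25, 0.25, 0.25]    # share of records per bin at training time
production = [0.10, 0.20, 0.30, 0.40]  # share of records per bin this week
score = psi(training, production)
print(round(score, 3), drift_status(score))  # 0.228 moderate drift
```

A result in the "moderate" band would typically prompt investigation rather than automatic retraining, which is where the data scientist’s interpretation of outcomes comes in.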

Conclusion

The integration of AI into applications is leading to a transformation in the roles within the software development ecosystem. As applications become increasingly AI-driven, the distinction between software development and AI model development is blurring. This convergence needs a more collaborative approach, where traditional developers gain AI literacy and data scientists take on more active roles in application development. The evolution of these roles highlights the interdisciplinary nature of building modern AI-embedded applications and underscores the importance of continuous learning and adaptation in the rapidly advancing field of AI.

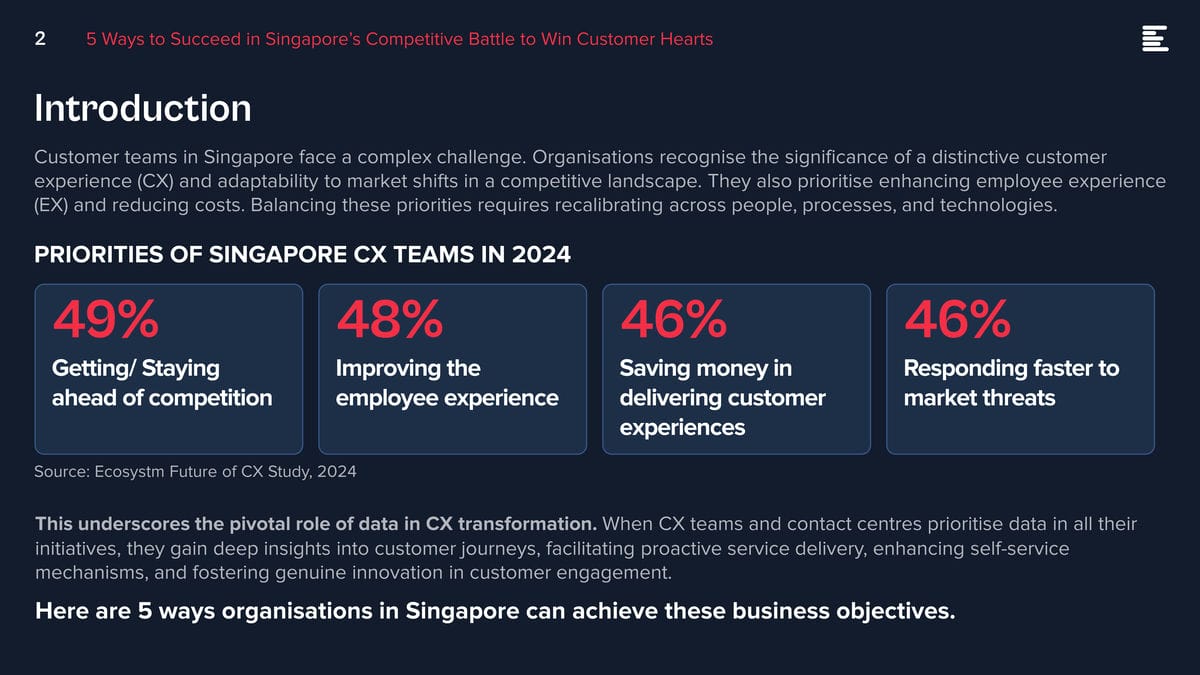

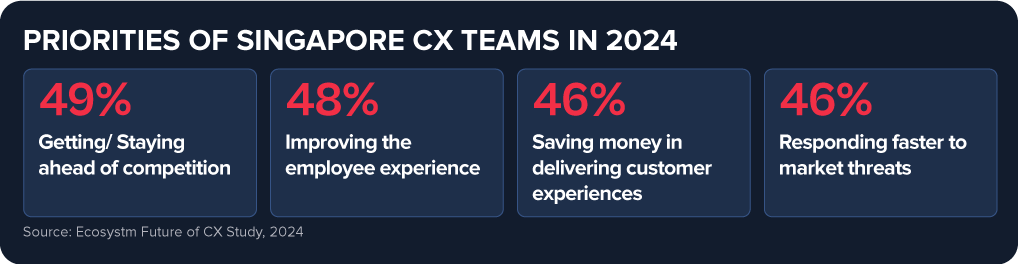

Customer teams in Singapore face a complex challenge. Organisations recognise the significance of a distinctive customer experience (CX) and adaptability to market shifts in a competitive landscape. They also prioritise enhancing employee experience (EX) and reducing costs. Balancing these priorities requires recalibrating across people, processes, and technologies.

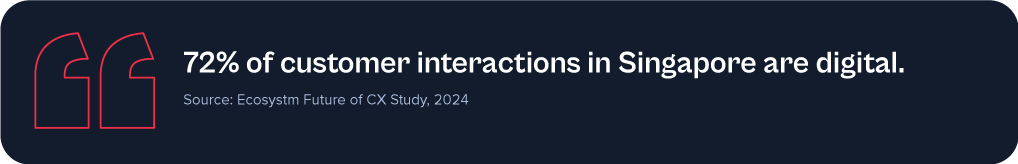

This underscores the pivotal role of data in CX transformation. When CX teams and contact centres prioritise data in all their initiatives, they gain deep insights into customer journeys, facilitating proactive service delivery, enhancing self-service mechanisms, and fostering genuine innovation in customer engagement.

Here are 5 ways organisations in Singapore can achieve these business objectives.

Download ‘5 Ways to Succeed in Singapore’s Competitive Battle to Win Customer Hearts’ as a PDF.

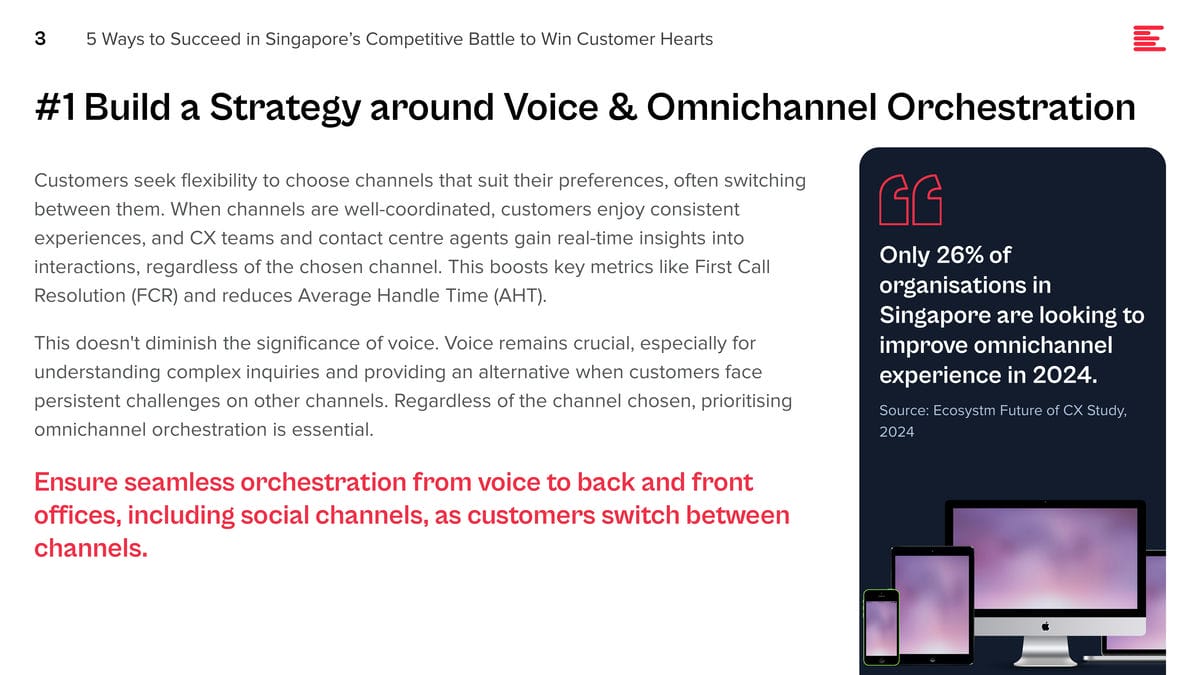

#1 Build a Strategy around Voice & Omnichannel Orchestration

Customers seek flexibility to choose channels that suit their preferences, often switching between them. When channels are well-coordinated, customers enjoy consistent experiences, and CX teams and contact centre agents gain real-time insights into interactions, regardless of the chosen channel. This boosts key metrics like First Call Resolution (FCR) and reduces Average Handle Time (AHT).

This doesn’t diminish the significance of voice. Voice remains crucial, especially for understanding complex inquiries and providing an alternative when customers face persistent challenges on other channels. Regardless of the channel chosen, prioritising omnichannel orchestration is essential.

Ensure seamless orchestration from voice to back and front offices, including social channels, as customers switch between channels.
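For readers less familiar with these contact centre metrics, the Python sketch below shows how they are commonly calculated from interaction records. The `Interaction` fields and sample data are hypothetical. FCR is computed as the share of distinct cases resolved on the first contact, and AHT as talk time plus hold time plus after-call work, divided by the number of handled interactions; organisations vary in exactly what they include.

```python
from dataclasses import dataclass

@dataclass
class Interaction:
    case_id: str
    attempt: int      # 1 for the customer's first contact about this case
    resolved: bool    # whether this contact resolved the case
    talk_secs: int
    hold_secs: int
    wrap_secs: int    # after-call work, e.g. notes and summaries

def first_contact_resolution(interactions: list[Interaction]) -> float:
    # Share of distinct cases resolved on the first contact.
    cases = {i.case_id for i in interactions}
    resolved_first = {i.case_id for i in interactions if i.attempt == 1 and i.resolved}
    return len(resolved_first) / len(cases)

def average_handle_time(interactions: list[Interaction]) -> float:
    # (talk + hold + after-call work) / number of handled interactions, in seconds.
    total = sum(i.talk_secs + i.hold_secs + i.wrap_secs for i in interactions)
    return total / len(interactions)

log = [
    Interaction("A", 1, True, 240, 30, 60),
    Interaction("B", 1, False, 300, 120, 90),
    Interaction("B", 2, True, 180, 0, 60),
    Interaction("C", 1, True, 200, 20, 40),
]
print(f"FCR: {first_contact_resolution(log):.0%}")  # FCR: 67%
print(f"AHT: {average_handle_time(log):.0f} s")     # AHT: 335 s
```

Orchestration helps both numbers: when context follows the customer across channels, fewer cases need a second contact, and agents spend less time re-establishing what the customer has already said.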

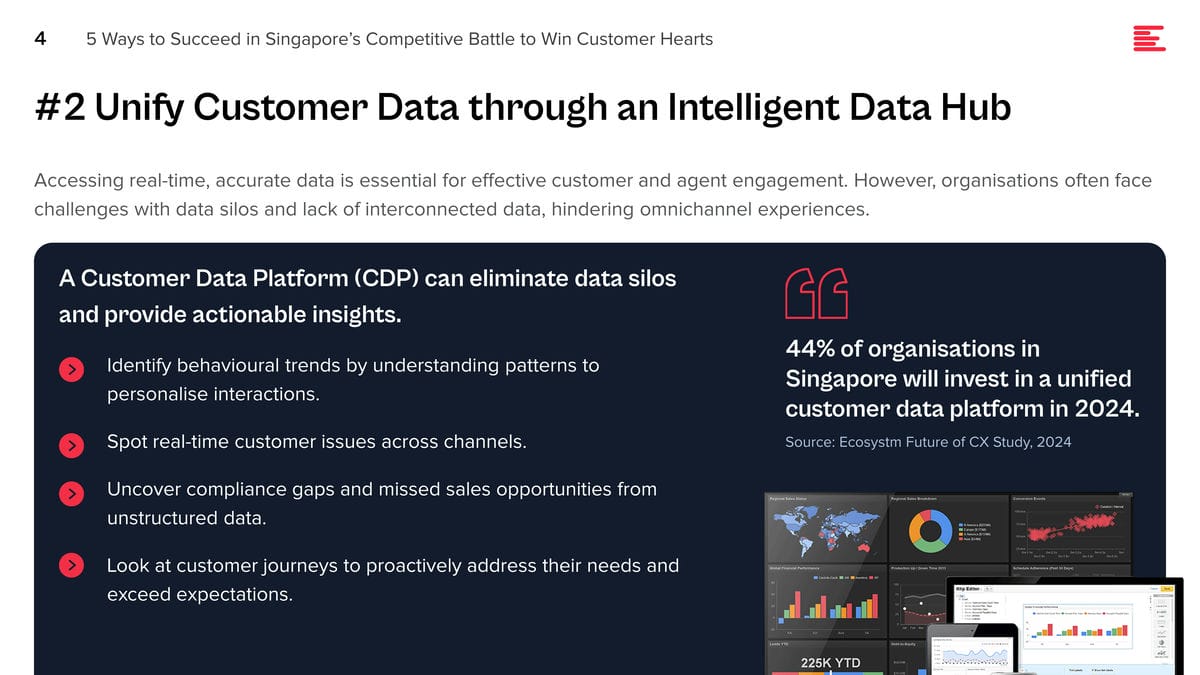

#2 Unify Customer Data through an Intelligent Data Hub

Accessing real-time, accurate data is essential for effective customer and agent engagement. However, organisations often face challenges with data silos and lack of interconnected data, hindering omnichannel experiences.

A Customer Data Platform (CDP) can eliminate data silos and provide actionable insights.

- Identify behavioural trends by understanding patterns to personalise interactions.

- Spot real-time customer issues across channels.

- Uncover compliance gaps and missed sales opportunities from unstructured data.

- Look at customer journeys to proactively address their needs and exceed expectations.
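At the core of a CDP is identity resolution: recognising that records from different channels belong to the same customer. The Python sketch below shows a deliberately simple version that links records sharing a normalised email address or phone number, using a union-find structure so that chains of matches (for example web form to email to phone call) collapse into one profile. The record fields and matching rules are assumptions; commercial CDPs use richer, often probabilistic, matching.

```python
import re
from collections import defaultdict
from typing import Optional

def normalise_email(email: Optional[str]) -> Optional[str]:
    # Case and whitespace differences should not split a customer's profile.
    return email.strip().lower() if email else None

def normalise_phone(phone: Optional[str]) -> Optional[str]:
    # Keep digits only, e.g. "+65 9123-4567" -> "6591234567".
    return re.sub(r"\D", "", phone) if phone else None

def unify(records: list[dict]) -> list[list[dict]]:
    # Union-find over record indices: linked records end up with the same root.
    parent = list(range(len(records)))

    def find(i: int) -> int:
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving keeps trees shallow
            i = parent[i]
        return i

    def union(i: int, j: int) -> None:
        parent[find(i)] = find(j)

    # Link each record to the first record seen with the same identifier.
    first_seen = {}
    for idx, rec in enumerate(records):
        identifiers = [("email", normalise_email(rec.get("email"))),
                       ("phone", normalise_phone(rec.get("phone")))]
        for kind, value in identifiers:
            if not value:
                continue
            key = f"{kind}:{value}"
            if key in first_seen:
                union(idx, first_seen[key])
            else:
                first_seen[key] = idx

    # Group records by root to form unified customer profiles.
    profiles = defaultdict(list)
    for idx, rec in enumerate(records):
        profiles[find(idx)].append(rec)
    return list(profiles.values())

records = [
    {"channel": "web", "email": "Jane@Example.com"},
    {"channel": "email", "email": "jane@example.com", "phone": "+65 9123 4567"},
    {"channel": "voice", "phone": "6591234567"},
    {"channel": "chat", "email": "raj@example.com"},
]
for profile in unify(records):
    print([r["channel"] for r in profile])
```

Here the web, email, and voice records resolve to one customer even though no single identifier appears in all three, which is exactly the cross-channel view that data silos prevent.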

#3 Transform CX & EX with AI

GenAI and Large Language Models (LLMs) are revolutionising how brands address customer and employee challenges, boosting efficiency and enhancing service quality.

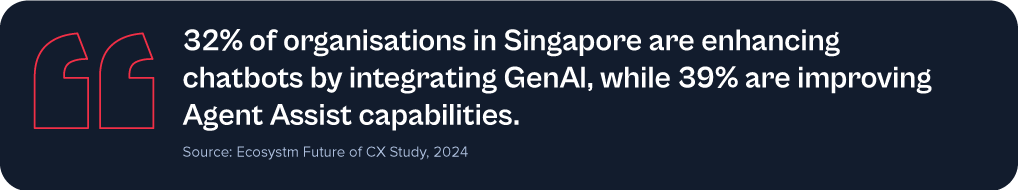

Despite 62% of Singapore organisations investing in virtual assistants/conversational AI, many have yet to integrate emerging technologies to elevate their CX & EX capabilities.

Agent Assist solutions provide real-time insights before customer interactions, optimising service delivery and saving time. With GenAI, they can automate mundane tasks like call summaries, freeing agents to focus on high-value tasks such as sales collaboration, proactive feedback management, personalised outbound calls, and upskilling.

Going beyond chatbots and Agent Assist solutions, predictive AI algorithms leverage customer data to forecast trends and optimise resource allocation. AI-driven identity validation swiftly confirms customer identities, mitigating fraud risks.

#4 Augment Existing Systems for Success

Despite the rise in digital interactions, many organisations struggle to fully modernise their legacy systems.

For those managing multiple disparate systems yet aiming to lead in CX transformation, a platform that integrates desired capabilities for holistic CX and EX experiences is vital.

A unified platform streamlines application management, ensuring cohesion, unified KPIs, enhanced security, simplified maintenance, and single sign-on for agents. This approach offers consistent experiences across channels and early issue detection, eliminating the need to navigate multiple applications or projects.

Capabilities that a platform should have:

- Programmable APIs to deliver messages across preferred social and messaging channels.

- Modernisation of outdated IVRs with self-service automation.

- Transformation of static mobile apps into engaging experience tools.

- Fraud prevention across channels through immediate phone number verification APIs.

#5 Focus on Proactive CX

In the new CX economy, organisations must meet customers on their terms, proactively engaging them before they initiate interactions. This will require organisations to re-evaluate all aspects of their CX delivery.

- Redefine the Contact Centre. Transform it into an “Intelligent” Data Hub providing unified and connected experiences. Leverage intelligent APIs to proactively manage customer interactions seamlessly across journeys.

- Reimagine the Agent’s Role. Empower agents to be AI-powered brand ambassadors, with access to prior and real-time interactions, instant decision-making abilities, and data-led knowledge bases.

- Redesign the Channel and Brand Experience. Ensure consistent omnichannel experiences through data unification and coherency. Use programmable APIs to personalise conversations and identify customer preferences for real-time or asynchronous messaging. Incorporate innovative technologies such as video to enhance the channel experience.

Customer feedback is at the heart of Customer Experience (CX), but it is changing: what we consider customer feedback, how we collect and analyse it, and how we act on it. Today, an estimated 80-90% of customer data is unstructured. Are you able and ready to leverage insights from that vast amount of customer feedback data?

Let’s begin with the basics: What is VoC and why is there so much buzz around it now?

Voice of the Customer (VoC) traditionally refers to customer feedback programs. In its most basic form, that means organisations send surveys to customers asking for feedback. For a long time, that really was the only way for organisations to understand what their customers thought about their brand, products, and services.

But that was way back then. Over the last few years, we’ve seen the market (organisations and vendors) dipping their toes into the world of unsolicited feedback.

What’s unsolicited feedback, you ask?

Unsolicited feedback simply means that organisations didn’t ask for it and often have no control over it, but the customer provides feedback in some way, shape, or form. That’s quite a change from the traditional survey approach, where organisations got answers to the questions they specifically asked (solicited feedback).

Unsolicited feedback is important for many reasons:

- Organisations can tap into a much wider range of feedback sources, from surveys to contact centre phone calls, chats, emails, complaints, social media conversations, online reviews, CRM notes – the list is long.

- Surveys have many advantages, but also many disadvantages: organisations hear only from a very specific customer type (those who respond, who are typically at the extreme ends of the feedback sentiment), get feedback only on the questions they ask, and hear from a very small portion of the customer base (think email open rates and survey fatigue).

- With unsolicited feedback, organisations hear from 100% of the customers who interact with the brand. They hear what customers have to say, and not just how they answer predefined questions.

It is a huge step up, especially from the traditional post-call survey. Imagine a customer who has just spent 30 minutes on the line explaining their problem and frustration to an agent, only to receive a post-call survey asking them to tell the organisation what they just told the agent and how they felt about the experience. Organisations should already know that. In fact, they probably do; they just haven’t started tapping into that data yet, at least not for CX and customer insights purposes.

Where does GenAI come in?

We can now tap into those raw feedback sources and analyse the unstructured data in ways never seen before. Long gone are the days of manually reading through or coding survey verbatims in Excel (although I’m well aware that still happens!). Technology, in particular GenAI and Large Language Models (LLMs), is now helping organisations declutter all the messy conversations and unstructured data. Not only is the quality of the analysis greatly enhanced, but the insights are also presented in user-friendly formats. Customer teams ask for the insights they need, and the tools produce them as text, graphs, tables, and so on.

The time from raw data to insights has reduced drastically, from hours and days down to seconds. Not only has the speed, quality, and ease of analysis improved, but many vendors are now integrating recommendations into their offerings. The tools can provide “basic” recommendations to help customer teams to act on the feedback, based on the insights uncovered.

Think of all the productivity gains and spare time organisations now have to act on the insights and drive positive CX improvements.

What does that mean for CX Teams and Organisations?

Including unsolicited feedback into the analysis to gain customer insights also changes how organisations set up and run CX and insights programs.

It’s important to understand that feedback doesn’t belong to a single person or team. CX is a team sport, particularly when it comes to acting on insights. It’s essential to share these insights with the right people, at the right time.

Some common misperceptions:

- Surveys have “owners” and only the owners can see that feedback.

- Feedback that comes through a specific channel is specific to that channel or product.

- Contact centre feedback is only collected to coach staff.

If that’s how organisations have built their programs, they’ll have to rethink what they’re doing.

If organisations think about some of the more commonly used unstructured feedback, such as that from the contact centre or social media, it’s important to note that this feedback isn’t solely about the contact centre or social media teams. It’s about something else. In fact, it’s usually about something that created friction in the customer experience and was generated by another team in the organisation. For example, an incorrect bill can lead to a grumpy social media post, or a faulty product can lead to a disgruntled call to the contact centre. If the feedback is only shared with the social media or contact centre team, how will the underlying issues be resolved? The frontline teams service customers, but organisations also need to fix the root causes that created the friction in the first place.

And that’s why organisations need to start consolidating the feedback data and democratise it.

It’s time to break down data and organisational silos and truly start thinking about the customer. No more silos. Instead, organisations must focus on a centralised customer data repository and data democratisation to share insights with the right people at the right time.
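As an illustration of what consolidating and democratising feedback can look like, the Python sketch below pools feedback from several channels, tags each item with a root-cause topic, and groups it by the team that owns that cause rather than the channel it arrived through. The keyword taxonomy is a hypothetical stand-in for the GenAI/LLM classification described earlier, and the team names are invented.

```python
from collections import defaultdict

# Hypothetical mapping from root-cause topic to owning team and trigger keywords.
TAXONOMY = {
    "billing": ("Finance", ["bill", "charge", "invoice"]),
    "product_fault": ("Product", ["broken", "faulty", "stopped working"]),
    "delivery": ("Logistics", ["late", "delivery", "courier"]),
}

def tag(text: str) -> str:
    # Stand-in for an LLM classifier: first topic whose keyword appears.
    lowered = text.lower()
    for topic, (_, keywords) in TAXONOMY.items():
        if any(k in lowered for k in keywords):
            return topic
    return "unclassified"

def route(feedback: list[tuple[str, str]]) -> dict[str, list[str]]:
    # Group (channel, text) items by owning team, keeping the channel for context.
    by_team = defaultdict(list)
    for channel, text in feedback:
        topic = tag(text)
        team = TAXONOMY[topic][0] if topic in TAXONOMY else "CX Insights"
        by_team[team].append(f"[{channel}] {text}")
    return dict(by_team)

feedback = [
    ("social", "Wrong bill again this month, seriously?"),
    ("call", "The kettle I bought is faulty"),
    ("survey", "Agent was very helpful"),
    ("email", "I was charged twice"),
]
for team, items in route(feedback).items():
    print(team, items)
```

The social media complaint about a bill lands with Finance rather than the social media team, mirroring the incorrect-bill example above: the channel is kept as context, but ownership follows the root cause.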

In my next Ecosystm Insights, I will discuss some of the tech options that CX teams have. Stay tuned!

Despite financial institutions’ unwavering efforts to safeguard their customers, scammers continually evolve to exploit advancements in technology. For example, the number of scams and cybercrimes reported to the police in Singapore increased by a staggering 49.6% to 50,376 at an estimated cost of USD 482M in 2023. GenAI represents the latest challenge to the industry, providing fraudsters with new avenues for deception.

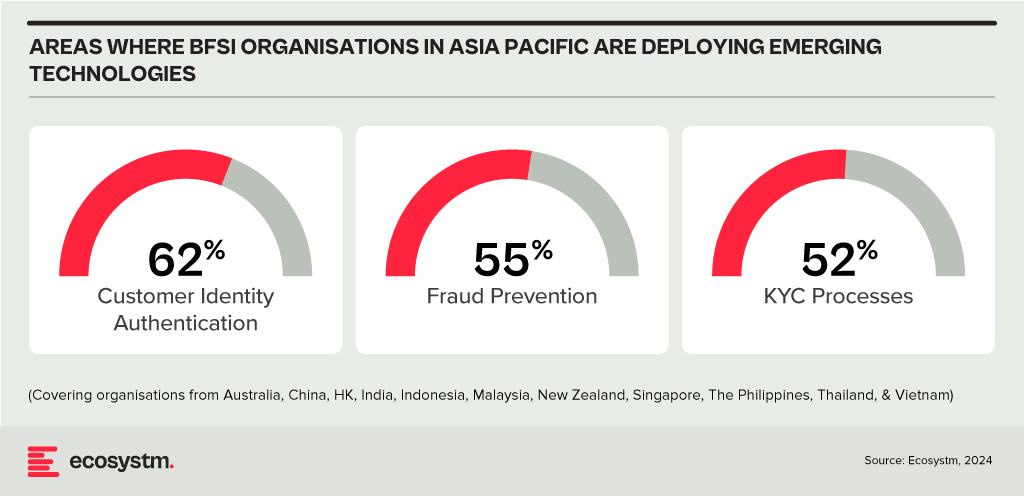

Ecosystm research shows that BFSI organisations in Asia Pacific are spending more on technologies to authenticate customer identity and prevent fraud than on their Know Your Customer (KYC) processes.

The Evolution of the Threat Landscape in BFSI

Synthetic Identity Fraud. This involves the creation of fictitious identities by combining real and fake information, distinct from traditional identity theft where personal data is stolen. These synthetic identities are then exploited to open fraudulent accounts, obtain credit, or engage in financial crimes, often evading detection because they are not associated with real individuals. The Deloitte Center for Financial Services predicts that synthetic identity fraud will result in USD 23B in losses by 2030. Synthetic fraud poses significant challenges for financial institutions and law enforcement agencies, especially as GenAI is used to produce realistic documents blending genuine and false information, undermining KYC protocols.

AI-Enhanced Phishing. Ecosystm research reveals that in Asia Pacific, 71% of customer interactions in BFSI occur across multiple digital channels, including mobile apps, emails, messaging, web chats, and conversational AI. In fact, 57% of organisations plan to further improve customer self-service capabilities to meet the demand for flexible and convenient service delivery. The proliferation of digital channels brings with it an increased risk of phishing attacks.

While these organisations continue to educate their customers on how to secure their accounts in a digital world, GenAI poses an escalating threat here as well. Phishing schemes will employ widely available LLMs to generate convincing text and even images. For many potential victims, misspellings and strangely worded appeals are the only hint that an email from their bank is not what it seems, and LLM-generated messages can remove exactly those tell-tale signs. The maturing of deepfake technology will also make it possible for malicious actors to create personalised voice and video attacks.

Identity Fraud Detection and Prevention

Although fraudsters are exploiting every new vulnerability, financial organisations also have new tools to protect their customers. Organisations should build a layered defence to prevent increasingly sophisticated attempts at fraud.

- Behavioural analytics. Using machine learning, financial organisations can distinguish between normal activity and suspicious behaviour at the account level. Data that can be analysed includes purchase patterns, unusual transaction values, VPN use, browser choice, log-in times, and impossible travel (log-ins from locations too far apart to be reached in the time between them). Anomalies can be flagged and additional security measures initiated to stem the attack.

- Passive authentication. Accounts can be protected even before password or biometric authentication by analysing additional data, such as phone number and IP address. This approach can be strengthened by checking these details against databases of known suspicious actors.

- SIM swap detection. SMS-based MFA is vulnerable to SIM swap attacks, in which a customer’s phone number is transferred to a fraudster’s device. This can be prevented by using an authenticator app rather than SMS. Alternatively, the organisation can check whether the number has recently been swapped before sending a one-time password (OTP).

- Breached password detection. Although customers are strongly discouraged from reusing passwords across sites, some inevitably will. By using a service that maintains a database of credentials leaked in third-party breaches, organisations can check active customer passwords against it and initiate a reset when there is a match.

- Stronger biometrics. Phone-based fingerprint recognition has helped financial organisations safeguard against fraud and simplify the authentication experience. Advances in face, retina, iris, palm print, and voice recognition are making multimodal biometric protection possible. Liveness detection will grow in importance as a defence against AI-generated content.

- Step-up validation. Authentication requirements can be differentiated according to risk level. Lower-risk activities, such as a balance check or an internal transfer, may require only minimal authentication, while higher-risk ones, such as international or cryptocurrency transactions, may require step-up validation. When anomalous behaviour is detected, even stronger security measures can be triggered.
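The layered signals above can be combined into a single risk decision. The following is a minimal, illustrative Python sketch, assuming a simple additive score: it flags impossible travel with a great-circle (haversine) distance check against an assumed maximum plausible speed of 900 km/h, and adds points for a recent SIM swap or a breached password. The action names, weights, thresholds, and authentication tiers (`ACTION_RISK`, `required_auth`, `"step_up_biometric"` and so on) are hypothetical and not taken from any product; a production system would typically learn such values from data rather than hard-code them.

```python
import math
from dataclasses import dataclass
from datetime import datetime

EARTH_RADIUS_KM = 6371.0
MAX_PLAUSIBLE_SPEED_KMH = 900.0  # assumption: roughly airliner cruising speed

# Hypothetical base risk per action type (illustrative values only)
ACTION_RISK = {
    "balance_check": 0,
    "internal_transfer": 1,
    "international_transfer": 3,
    "crypto_purchase": 3,
}


@dataclass
class LoginEvent:
    time: datetime
    lat: float
    lon: float


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points, in kilometres."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(min(1.0, a)))


def is_impossible_travel(prev: LoginEvent, curr: LoginEvent) -> bool:
    """True if getting from prev to curr would need an implausible travel speed."""
    distance = haversine_km(prev.lat, prev.lon, curr.lat, curr.lon)
    # Floor elapsed time at one second (avoids division by zero and out-of-order events)
    hours = max((curr.time - prev.time).total_seconds(), 1.0) / 3600
    return distance / hours > MAX_PLAUSIBLE_SPEED_KMH


def required_auth(action: str, prev: LoginEvent, curr: LoginEvent,
                  recent_sim_swap: bool, password_breached: bool) -> str:
    """Map an additive risk score to a (hypothetical) authentication tier."""
    score = ACTION_RISK.get(action, 2)  # unknown actions treated as moderately risky
    if is_impossible_travel(prev, curr):
        score += 3
    if recent_sim_swap:
        score += 2
    if password_breached:
        score += 2
    if score >= 5:
        return "block_and_review"
    if score >= 3:
        return "step_up_biometric"
    if score >= 1:
        return "app_push_or_totp"
    return "session_only"
```

For example, a log-in from Singapore followed one hour later by one from London implies a speed of roughly 10,800 km/h, so even an internal transfer would be escalated to step-up validation, while a balance check from an unchanged location passes on the existing session.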

Recommendations

- Reduce friction. While it may be tempting to implement heavy-handed approaches to prevent fraud, it is also important to minimise friction in the authentication system. Frustrated users may abandon services or find risky ways to circumvent security. An effective layered defence should work in the background to stop attackers before they get close.

- AI Phishing Awareness. Even the savviest customers could fall prey to advanced phishing attacks that use GenAI, and each advance in AI makes social engineering at scale more feasible. Monitor emerging global phishing activity and remind customers to stay vigilant against more polished and personalised phishing attempts.

- Deploy an authenticator app. Consider shifting away from SMS OTPs as an MFA method and implement either a standalone authenticator app or one embedded in your own financial app.

- Integrate authentication with fraud analytics. Select an authentication provider that can integrate its offering with analytics to identify fraud or unusual behaviour during account creation, log-in, and transactions. The two systems should work in tandem.

- Take a zero-trust approach. Protecting both customers and employees is critical, particularly in the hybrid work era. Implement zero-trust tools to prevent employees from falling victim to malicious attacks and to minimise the damage if they do.
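Authenticator apps commonly generate time-based one-time passwords (TOTP, RFC 6238), which are computed on the device from a shared secret and the current time rather than delivered over SMS, so a SIM swap does not expose them. Below is a minimal Python sketch of TOTP generation and verification using only the standard library, assuming the RFC defaults of HMAC-SHA1, a 30-second time step, and 6 digits. The function names and the one-step tolerance window are illustrative choices; secret provisioning (for example, via a QR code) and rate limiting are out of scope, and a vetted library is preferable in production.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) with dynamic truncation."""
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, at: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """Time-based OTP (RFC 6238): HOTP with counter = floor(unix_time / step)."""
    now = time.time() if at is None else at
    return hotp(secret, int(now // step), digits)


def verify_totp(secret: bytes, submitted: str, at: Optional[float] = None,
                step: int = 30, digits: int = 6, window: int = 1) -> bool:
    """Accept codes up to `window` steps either side of now to tolerate clock drift."""
    now = time.time() if at is None else at
    counter = int(now // step)
    return any(
        hmac.compare_digest(hotp(secret, counter + drift, digits), submitted)
        for drift in range(-window, window + 1)
        if counter + drift >= 0  # counters cannot be negative
    )
```

With the RFC 6238 test secret `b"12345678901234567890"`, `totp(secret, at=59, digits=8)` returns `"94287082"`, matching the published test vector. `hmac.compare_digest` is used so that comparison time does not leak how many digits matched.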

Over the past year, many organisations have explored Generative AI and LLMs, with some successfully identifying, piloting, and integrating suitable use cases. As business leaders push tech teams to implement additional use cases, the repercussions on their roles will become more pronounced. Embracing GenAI will require a mindset reorientation, and tech leaders will see substantial impact across various ‘traditional’ domains.

AIOps and GenAI Synergy: Shaping the Future of IT Operations

When discussing AIOps adoption, there are commonly two responses: “Show me what you’ve got” or “We already have a team of Data Scientists building models”. The first usually signals executive sponsorship without a specific business case, so many pre-built AIOps solutions receive a lukewarm response because they are not tied to a defined business problem. Organisations with dedicated Data Scientist teams face a different challenge. These teams can create impressive models, but they often face pushback from the business because the solutions do not address operational or business needs. The root cause is Data Scientists’ limited understanding of the data, which hinders the development of use cases that align with business needs.

The most effective approach is to adopt an AIOps framework. Incorporating GenAI into such a framework can enhance its effectiveness, enabling improved automation, intelligent decision-making, and streamlined IT operations processes.

A framework allows active business involvement in defining and validating use cases, while enabling Data Scientists to focus on model building. It bridges the gap between technical expertise and business requirements, ensuring that AIOps initiatives draw on the capabilities of GenAI, address specific operational challenges, and align with the organisation’s goals.

The Next Frontier of IT Infrastructure

Many companies adopting GenAI are actively evaluating public cloud-based solutions like ChatGPT or Microsoft Copilot against on-premises alternatives, weighing scalability and convenience against control and data security.

Cloud-based GenAI offers easy access to computing resources without substantial upfront investment. However, companies must relinquish control over training data, which can lead to inaccurate results or “AI hallucinations”, and they face concerns about exposing confidential data. On-premises GenAI solutions provide greater control, customisation, and data security, but require significant hardware investment because of unexpectedly high GPU demands during both the training and inference stages of AI models.
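A back-of-envelope estimate helps show why on-premises GPU demands can be higher than expected. The Python sketch below assumes common rules of thumb: model weights need about 2 bytes per parameter at 16-bit precision, while mixed-precision training with the Adam optimiser needs roughly 16 bytes per parameter (16-bit weights and gradients, plus 32-bit master weights and two 32-bit optimiser states). It ignores activations, KV caches, and framework overhead, so real requirements are higher. The model sizes and the 80 GB per-GPU memory figure are illustrative assumptions.

```python
import math

# Approximate bytes per parameter for storing weights at each precision
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}

# Rule of thumb for mixed-precision Adam training:
# 2 (fp16 weights) + 2 (fp16 gradients) + 4 (fp32 master weights)
# + 4 (fp32 momentum) + 4 (fp32 variance) = 16 bytes per parameter
TRAINING_BYTES_PER_PARAM = 16.0


def inference_weights_gb(params_billion: float, dtype: str = "fp16") -> float:
    """Memory for model weights alone (decimal GB); excludes KV cache and activations."""
    return params_billion * BYTES_PER_PARAM[dtype]


def training_state_gb(params_billion: float) -> float:
    """Memory for weights, gradients and optimiser state (decimal GB); excludes activations."""
    return params_billion * TRAINING_BYTES_PER_PARAM


def gpus_needed(total_gb: float, gpu_memory_gb: float = 80.0) -> int:
    """Minimum GPUs whose combined memory covers total_gb (assumes perfect sharding)."""
    return math.ceil(total_gb / gpu_memory_gb)


if __name__ == "__main__":
    for size in (7, 70):  # hypothetical model sizes, in billions of parameters
        inf = inference_weights_gb(size)
        train = training_state_gb(size)
        print(f"{size}B params: ~{inf:.0f} GB to serve (fp16), "
              f"~{train:.0f} GB to train -> at least {gpus_needed(train)} x 80 GB GPUs")
```

On these assumptions, serving a 70-billion-parameter model in fp16 needs about 140 GB for weights alone (two 80 GB GPUs), while training it needs about 1,120 GB of state, or at least 14 such GPUs before activations are even counted.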

Hardware companies are focusing on innovating and enhancing their offerings to meet the increasing demands of GenAI. The evolution and availability of powerful and scalable GPU-centric hardware solutions are essential for organisations to effectively adopt on-premises deployments, enabling them to access the necessary computational resources to fully unleash the potential of GenAI. Collaboration between hardware development and AI innovation is crucial for maximising the benefits of GenAI and ensuring that the hardware infrastructure can adequately support the computational demands required for widespread adoption across diverse industries. Innovations in hardware architecture, such as neuromorphic computing and quantum computing, hold promise in addressing the complex computing requirements of advanced AI models.

Keeping hardware innovation in step with GenAI demands will require technology leaders to re-skill in a discipline they have practised for years: infrastructure management.

The Rise of Event-Driven Designs in IT Architecture

IT leaders traditionally relied on three-tier architectures: a presentation tier for the user interface, an application tier for logic and processing, and a data tier for storage. Despite their structured approach, these architectures often lacked scalability and real-time responsiveness. The advent of microservices, containerisation, and serverless computing made event-driven designs practical, enabling dynamic responses to real-time events and enhancing agility and scalability. Event-driven designs are a paradigm shift away from traditional approaches: they decouple components and use events as the central communication mechanism. User actions, system notifications, or data updates trigger actions across distributed services, adding flexibility to the system.
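The decoupling described above can be illustrated with a toy publish/subscribe bus. In the minimal Python sketch below, producers publish named events and any number of handlers react to them without knowing about each other. The `EventBus` class and the `"order.placed"` event name are hypothetical; delivery here is synchronous and in-process, whereas production event-driven systems typically rely on a durable, asynchronous broker or managed event service.

```python
from collections import defaultdict
from typing import Any, Callable, DefaultDict, Dict, List

Event = Dict[str, Any]
Handler = Callable[[Event], None]


class EventBus:
    """Toy in-process publish/subscribe bus: producers and consumers share only event names."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Handler) -> None:
        """Register a handler to be called whenever event_type is published."""
        self._subscribers[event_type].append(handler)

    def publish(self, event_type: str, payload: Event) -> int:
        """Deliver payload to every handler for event_type; return how many were called."""
        handlers = list(self._subscribers.get(event_type, []))
        for handler in handlers:
            handler(payload)
        return len(handlers)


if __name__ == "__main__":
    bus = EventBus()
    audit_log: List[str] = []
    # Two independent services react to the same event; the publisher knows neither.
    bus.subscribe("order.placed", lambda e: audit_log.append(f"audit {e['order_id']}"))
    bus.subscribe("order.placed", lambda e: print(f"Notify customer: order {e['order_id']} confirmed"))
    delivered = bus.publish("order.placed", {"order_id": "A-1001"})
    print(f"{delivered} handlers notified; audit log = {audit_log}")
```

Adding a new consumer, such as a fraud check, only requires another `subscribe` call; the code that publishes `"order.placed"` does not change.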

However, adopting event-driven designs presents challenges, particularly for high-volume transactional workloads, where the latency of serverless function calls can significantly shape architectural design. While serverless computing offers scalability and flexibility, the latency introduced by initiating and executing serverless functions may be a problem for systems that demand rapid, real-time responses. Increasing reliance on event-driven architectures underscores the need for advances in hardware and compute power. Transitioning from legacy architectures can also be complex and may require a phased approach, along with cultural change and comprehensive training initiatives.
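A rough calculation shows why per-invocation latency matters when an event passes through several serverless functions in sequence. The Python sketch below assumes a synchronous chain of functions, each with a fixed warm execution time and an independent probability of incurring a cold start. All the numbers used (five functions, 20 ms warm, 500 ms cold-start penalty, 2% cold-start rate) are hypothetical and vary widely by platform, runtime, and configuration.

```python
def chain_latency_ms(n_functions: int, warm_ms: float, cold_penalty_ms: float,
                     p_cold: float) -> dict:
    """Latency estimates for a synchronous chain of serverless calls,
    assuming cold starts occur independently with probability p_cold per call."""
    expected = n_functions * (warm_ms + p_cold * cold_penalty_ms)
    best_case = n_functions * warm_ms
    worst_case = n_functions * (warm_ms + cold_penalty_ms)
    # Probability that at least one call in the chain hits a cold start
    p_any_cold = 1 - (1 - p_cold) ** n_functions
    return {"expected": expected, "best": best_case, "worst": worst_case, "p_any_cold": p_any_cold}


if __name__ == "__main__":
    est = chain_latency_ms(n_functions=5, warm_ms=20, cold_penalty_ms=500, p_cold=0.02)
    print(f"best {est['best']:.0f} ms, expected {est['expected']:.0f} ms, "
          f"worst {est['worst']:.0f} ms; {est['p_any_cold']:.1%} of requests see a cold start")
```

Under these assumptions the average request takes about 150 ms, but roughly 1 in 10 requests hits at least one cold start, and a fully cold chain takes 2.6 seconds: the kind of tail latency that real-time, high-volume workloads must be designed around.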

The shift to event-driven designs challenges IT Architects, whose traditional roles involved designing, planning, and overseeing complex systems. With GenAI and automation enhancing design tasks, Architects will need to transition to more strategic and visionary roles. GenAI offers capabilities in pattern recognition, predictive analytics, and automated decision-making that complement human expertise. This evolution does not replace Architects but signals a shift toward collaboration with AI-driven insights.

IT Architects need to evolve their skill set, blending technical expertise with strategic thinking and collaboration. This changing role will drive innovation, creating resilient, scalable, and responsive systems to meet the dynamic demands of the digital age.

Whether your organisation is evaluating or implementing GenAI, upskilling your tech team remains imperative. The evolution of AI technologies has disrupted the tech industry and the people who work in it. Now is the time to acquire new skills and adapt tech roles to leverage the potential of GenAI rather than be disrupted by it.

As tech providers such as Microsoft enhance their capabilities and products, they will affect business processes and technology skills, and push other tech providers to reshape their product and service offerings. Microsoft recently held briefing sessions in Sydney and Singapore to present its future roadmap, with a focus on its AI capabilities.

Ecosystm Advisors Achim Granzen, Peter Carr, and Tim Sheedy provide insights on Microsoft’s recent announcements and messaging.

Click here to download Ecosystm VendorSphere: Microsoft’s AI Vision – Initiatives & Impact

Ecosystm Question: What are your thoughts on Microsoft Copilot?

Tim Sheedy. The future of GenAI will not be about single LLMs getting bigger and better; it will be about multiple large and small language models working together to solve specific challenges. It is wasteful to use a large and complex LLM to solve a simpler problem. Getting these models to work together will be key to solving industry- and use-case-specific business and customer challenges in the future. Microsoft is already doing this with Microsoft 365 Copilot.

Achim Granzen. Microsoft’s Copilot, a shrink-wrapped GenAI tool based on OpenAI models, has become a mainstream product. Microsoft has made it available to enterprise clients in multiple ways: for personal productivity in Microsoft 365, for enterprise applications in Dynamics 365, for developers in GitHub and Copilot Studio, and for partners to integrate Copilot into their application suites (e.g., Amdocs’ Customer Engagement Platform).

Ecosystm Question: How, in your opinion, is the Microsoft Copilot a game changer?

Achim Granzen. Microsoft’s Customer Copyright Commitment, initially launched as the Copilot Copyright Commitment, is the true game changer.

It safeguards Copilot users from potential copyright infringement lawsuits related to data used for algorithm training or to output results. In November 2023, Microsoft expanded its scope to cover commercial usage of its OpenAI interface as well.

This move not only protects commercial clients using Microsoft’s GenAI products but also extends to GenAI solutions built by those clients. Outlined in the product terms and conditions, the initiative significantly reduces a key risk associated with GenAI adoption.

However, compliance with a set of Required Mitigations and Codes of Conduct is necessary for clients to benefit from this commitment, aligning with responsible AI guidelines and best practices.

Ecosystm Question: Where will organisations need most help on their AI journeys?

Peter Carr. Unfortunately, there is no playbook for AI.

- The path to integrating AI into business strategies and operations lacks a one-size-fits-all guide. Organisations will have to navigate uncharted territories for the time being. This means experimenting with AI applications and learning from successes and failures. This exploratory approach is crucial for leveraging AI’s potential while adapting to unique organisational challenges and opportunities. So, companies that are better at agile innovation will do better in the short term.

- The effectiveness of AI is deeply tied to the availability and quality of connected data. AI systems require extensive datasets to learn and make informed decisions. Ensuring data is accessible, clean, and integrated is fundamental for AI to accurately analyse trends, predict outcomes, and drive intelligent automation across various applications.

Ecosystm Question: What advice would you give organisations adopting AI?

Tim Sheedy. It is all about opportunities and responsibility.

- There is a strong need for responsible AI at a global, country, industry, and organisational level. Microsoft and other AI leaders are helping to create responsible AI systems that are fair, reliable, safe, private, secure, and inclusive. There is still a long way to go, and even these capabilities do not completely indemnify users of AI. Users remain responsible for setting guardrails in their own businesses on how AI is used and where it is applied.

- AI and hybrid work are often discussed as different trends in the market, with different solution sets. But in reality, they are deeply linked. AI can help enhance and improve hybrid work in businesses – and is a great opportunity to demonstrate the value of AI and tools such as Copilot.

Ecosystm Question: What should Microsoft focus on?

Tim Sheedy. Microsoft faces a challenge in educating the market about adopting AI, especially Copilot. They need to educate business, IT, and AI users on embracing AI effectively. Additionally, they must educate existing partners and find new AI partners to drive change in their client base. Success in the race for knowledge workers requires not only being first but also helping users get the most from solutions. Customers have limited visibility of Copilot’s capabilities today. Improving customer upskilling and enhancing tools that prompt users to take advantage of these capabilities will contribute to Microsoft’s (or their competitors’) success in dominating the AI tool market.

Peter Carr. Grassroots businesses form the economic foundation of Asia Pacific economies. Typically, these businesses do not engage with global SIs (GSIs), which drive Microsoft’s new service offerings. This leads to an adoption gap in the sector that could benefit most from operational efficiencies. To bridge this gap, Microsoft must empower non-GSI partners and managed service providers (MSPs) at the local and regional levels. They won’t achieve their goal of democratising AI unless they do. Microsoft has the potential to advance AI technology while ensuring fair and widespread adoption.

I have spent many years analysing the mobile and end-user computing markets. My experience goes back to 1995, when I was part of a desktop PC research team, and spans running the European wireless and mobile comms practice, my time at 3 Mobile in Australia, and many years since helping clients with their end-user computing strategies. I have watched the market move from the birth of mobile data services (GPRS and WAP, through to 3G, 4G, and 5G), from simple phones to powerful foldable devices, and from desktop computers to a complex array of mobile computing devices that meet many and varied employee needs. I am always looking for the “next big thing”, and there have been some significant milestones: Palm devices, BlackBerry devices, the iPhone, Android, foldables, wearables, and smaller, thinner, faster, more powerful laptops.

But over the past few years, innovation in this space has tailed off. Outside of the foldable space (which is already four years old), the major benefits of new devices are faster processors, brighter screens, and better cameras. I review a lot of great computers too (like many of the recent Surface devices) – and while they are continuously improving, not much has got my clients or me “excited” over the past few years (outside of some of the very cool accessibility initiatives).

The Force of AI

But this is all about to change. Devices are going to get smarter based on their data ecosystem, the cloud, and AI-specific local processing power. To be honest, this has been happening for some time – but most of the “magic” has been invisible to us. It happened when cameras took multiple shots and selected the best one; it happened when pixels were sharpened and images got brighter, better, and more attractive; it happened when digital assistants were called upon to answer questions and provide context.

Microsoft, among others, is about to bring AI smarts to the front and centre of the experience. Windows Copilot will add a smart assistant that can not only advise but also act on that advice. It will help employees improve their focus and productivity, summarise documents and long chat threads, select music, distribute content to the right audience, and find connections. Combined with Microsoft 365 Copilot, it will help knowledge workers spend less time searching and reading, and more time doing and improving.

The greater integration of public and personal data with “intent insights” will also play out on our mobile devices. We are likely to see the emergence of the much-promised “integrated app”: one that can take on many of the tasks we currently undertake across multiple applications, mobile websites, and sometimes even multiple devices. This will initially be through public LLMs like Bard and ChatGPT, but as more custom, private models emerge, they will serve very specific functions.

Focused AI Chips will Drive New Device Wars

In parallel to these developments, we expect the emergence of AI processors paired with very specific AI capabilities. As local processing power becomes a necessity for some AI algorithms, general-purpose CPUs, and even AI-focused chips such as Google’s Tensor Processor, will need to be complemented by dedicated chips that serve specific AI functions. These chips will perform the processing more efficiently, preserving battery life and improving the user experience.

While this will be a longer-term trend, it is likely to significantly change the game for what can be achieved locally on a device – enabling capabilities that are not in the realm of imagination today. They will also spur a new wave of device competition and innovation – with a greater desire to be on the “latest and greatest” devices than we see today!

So, while the levels of device innovation have flattened, AI-driven software and chipset innovation will see current and future devices enable new levels of employee productivity and consumer capability. The focus in 2023 and beyond needs to be less on the hardware announcements and more on the platforms and tools. End-user computing strategies need to be refreshed with a new perspective around intent and intelligence. The persona-based strategies of the past have to be changed in a world where form factors and processing power are less relevant than outcomes and insights.