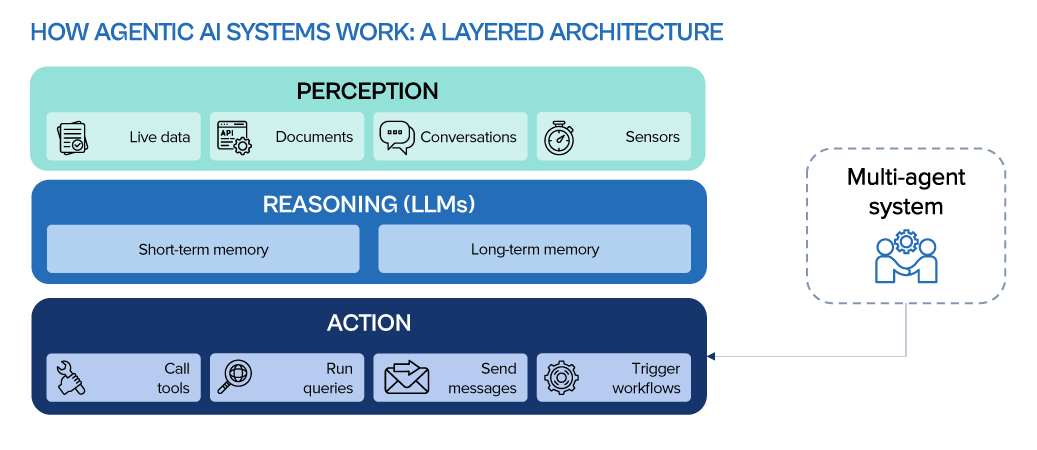

We’re in the middle of a major shift in how AI shows up at work. It’s no longer just about automation or predictions. What’s emerging now is AI that acts with intent, systems that observe their surroundings, make sense of what’s happening, plan intelligently, and then execute, often without constant human direction.

This new class of intelligent systems is called Agentic AI. And it doesn’t just improve productivity – it fundamentally reimagines it.


What is Agentic AI?

Agentic AI is about more than reacting to input; it’s about pursuing outcomes. At its core are intelligent agents: software entities that don’t just wait for instructions, but take initiative, make decisions, collaborate, and learn over time.

What’s Making Agentic AI Possible Now?

- LLMs with reasoning capabilities. Not just generating text, but planning, reflecting, and making decisions.

- Function calling. Enabling models to use APIs and tools to take real-world actions.

- Retrieval-Augmented Generation (RAG). Grounding outputs in relevant, up-to-date information.

- Vector databases and embeddings. Supporting memory, recall, and contextual understanding.

- Reinforcement learning. Allowing agents to learn and improve through feedback.

- Orchestration frameworks. Platforms like AutoGen, LangGraph, and CrewAI that help build, coordinate, and manage multi-agent systems.
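To make the plan-act-observe idea concrete, here is a minimal, illustrative agent loop in Python. It is a sketch under stated assumptions, not the API of any framework named above: the `Agent` class, the two ticket tools, and the rule-based `plan` method (standing in for an LLM's planning step) are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


# Hypothetical tools; a real system would wrap actual APIs here.
def check_ticket_status(ticket_id: str) -> str:
    return f"ticket {ticket_id} is open"


def escalate_ticket(ticket_id: str) -> str:
    return f"ticket {ticket_id} escalated"


# Registry the agent uses to turn a tool name into a callable ("function calling").
TOOLS: Dict[str, Callable[[str], str]] = {
    "check_ticket_status": check_ticket_status,
    "escalate_ticket": escalate_ticket,
}


@dataclass
class Agent:
    ticket_id: str
    memory: List[str] = field(default_factory=list)

    def plan(self) -> Optional[str]:
        """Stand-in for an LLM planning step (rule-based for illustration)."""
        if not self.memory:
            return "check_ticket_status"
        if self.memory[-1].endswith("is open"):
            return "escalate_ticket"
        return None  # nothing left to do

    def run(self, max_steps: int = 5) -> List[str]:
        for _ in range(max_steps):  # hard cap so the loop always terminates
            tool_name = self.plan()
            if tool_name is None:
                break
            result = TOOLS[tool_name](self.ticket_id)  # act
            self.memory.append(result)                 # observe and remember
        return self.memory


if __name__ == "__main__":
    print(Agent("T-42").run())
```

The step cap and the explicit tool registry are deliberate: they are simple examples of the oversight and access-control guardrails that matter once the planning step is handed to a real model.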

Getting Started with Agentic AI

If you’re exploring Agentic AI, the first step isn’t technical but strategic. Focus on use cases where autonomy creates clear value: high-volume tasks, cross-functional coordination, or real-time decision-making.

Then, build your foundation:

- Start small, but design for scale. Begin with pilot use cases in areas like customer support or IT operations.

- Invest in enablers. APIs, clean data, observability tools, and a robust security posture are essential.

- Choose the right orchestration frameworks. Look for tools that make it easier to build, deploy, and monitor agentic workflows.

- Prioritise governance. Define access control, ethical boundaries, and clear oversight mechanisms from day one.

Ecosystm Opinion

Agentic AI doesn’t just execute tasks; it collaborates, learns, and adapts. It marks a fundamental change in how work flows across teams, systems, and decisions. As these technologies mature, we’ll see them embedded across industries – from finance to healthcare to manufacturing.

But they won’t replace people. They’ll amplify us, boosting judgement, creativity, speed, and impact. The future of work isn’t just faster or more automated. It’s agentic, and it’s already here.

Across our conversations with marketing leads, sales heads, customer experience owners, and tech architects, one theme keeps coming up: It’s not about collecting more data. It’s about making sense of what we already have.

As customer journeys grow more fragmented, leaders are grappling with a big question: how do we unify data in a way that helps teams act fast, personally, and responsibly?

This is where CRM and CDP integration becomes critical. Not a technical afterthought, but a strategic decision.


Why CRM and CDP Must Work Together

CRMs are relationship systems, built to track sales conversations, account history, support interactions, and contact details. CDPs are behaviour systems designed to unify signals from web, mobile, ads, apps, and third-party tools.

They each solve different problems, but the same customer is at the centre.

Without integration, CRMs miss the behavioural context needed for real-time decisions, while CDPs lack structured data about customer relationships like deal history or support issues. Each system works in isolation, limiting the quality of insights and slowing down effective action.

“Marketing runs on signals: clicks, visits, scrolls, app drops. If that data doesn’t talk to our CRM, our campaigns feel completely disconnected.” – VP, Growth Marketing

When CRM and CDP are Integrated

Sales gains visibility into customer behaviour, not just who clicked a proposal, but how often they return, what products they browse, and when interest peaks. This helps reps prioritise high-intent leads and time their outreach perfectly.

Marketing stops shooting in the dark. Integrated data enables them to segment audiences precisely, trigger campaigns in real time, and ensure compliance with consent and privacy settings.

Customer Experience teams can connect the dots across touchpoints. If a high-value customer reduces app usage, flags an issue in chat, and has an upcoming renewal, the team can step in proactively.

IT and Analytics benefit from a single source of truth. Fewer silos mean reduced data duplication, easier governance, and more reliable AI models. Clean, contextual data reduces alert fatigue and increases trust across teams.

Why It Matters Now

Fragmented Journeys Are the Norm. Customers interact across websites, mobile apps, social DMs, emails, chatbots, and in-store visits – often within the same day. No single platform captures this complexity unless CRM and CDP data are aligned.

Real-Time Expectations Are Rising. A customer abandons a cart or posts a complaint – and expects a relevant response within minutes, not days. Teams need integrated systems to recognise these moments and act instantly, not wait for weekly dashboards or manual pulls.

Privacy & Compliance Can’t Be Retrofitted. With stricter regulations (like India’s DPDP Act, GDPR, and industry-specific norms), disconnected systems mean scattered consent records, inconsistent data handling, and increased risk of non-compliance or customer mistrust.

“It’s not about choosing CRM or CDP. It’s about making sure they work together so our AI tools don’t go rogue.” – CTO, Retail Platform

The AI Layer Makes This Urgent

Agentic AI is no longer a concept on the horizon. It’s already reshaping how teams engage customers, automate responses, and make decisions on the fly. But it’s only as good as the data it draws from.

For example, when an AI assistant is trained to spot churn risk or recommend offers, it needs both:

- CDP inputs. Mobile session drop-offs, email unsubscribes, product page bounces, app crashes.

- CRM insights. Contract renewal dates, support history, pricing objections, NPS scores.

Without the full picture, it either overlooks critical risks, or worse, responds in ways that feel tone-deaf or irrelevant.
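As a rough illustration of why both sources matter, the sketch below combines CDP-style behavioural events with a CRM-style record to produce a simple churn-risk label. Everything here is an assumption for illustration: the field names, the event types, and the thresholds (three negative events, an NPS of 6 or below, renewal within 60 days) are hypothetical, and a production system would more likely use a trained model than fixed rules.

```python
from dataclasses import dataclass
from datetime import date
from typing import List


@dataclass
class CrmRecord:
    """Relationship data, as a CRM might hold it (hypothetical fields)."""
    customer_id: str
    renewal_date: date
    open_support_tickets: int
    nps_score: int


@dataclass
class CdpEvent:
    """A behavioural signal, as a CDP might capture it (hypothetical fields)."""
    customer_id: str
    event_type: str


# Assumed set of event types treated as negative signals.
NEGATIVE_EVENTS = {"session_drop_off", "email_unsubscribe", "page_bounce", "app_crash"}


def churn_risk(crm: CrmRecord, events: List[CdpEvent], today: date) -> str:
    """Return 'high', 'medium', or 'low' using both behavioural and relationship data."""
    negative = sum(
        1
        for e in events
        if e.customer_id == crm.customer_id and e.event_type in NEGATIVE_EVENTS
    )
    days_to_renewal = (crm.renewal_date - today).days

    behavioural_flag = negative >= 3                       # CDP side
    relationship_flag = crm.open_support_tickets > 0 or crm.nps_score <= 6  # CRM side
    renewal_soon = 0 <= days_to_renewal <= 60              # CRM side

    if behavioural_flag and relationship_flag and renewal_soon:
        return "high"
    if behavioural_flag or relationship_flag:
        return "medium"
    return "low"
```

Note that the "high" label is only reachable when both systems contribute: with the CDP events missing, the same customer can never rate above "medium", which is the blind spot described above.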

A Smarter Stack for Customer-Centric Growth

The CRM vs CDP debate is outdated – both are essential parts of a unified data strategy. Integration goes beyond syncing contacts; it requires real-time data flow, clear governance, and aligned teams. As AI-driven growth accelerates, this integrated data backbone is no longer just a technical task but a leadership imperative. Companies that master it won’t just automate; they’ll truly understand their customers, gaining a decisive competitive edge.

Australia is making meaningful progress on its digital journey, driven by a vibrant tech sector, widespread technology adoption, and rising momentum in AI. But realising its full potential as a leading digital economy will depend on bridging the skills gap, moving beyond surface-level AI applications, accelerating SME digital transformation, and navigating ongoing economic uncertainty. For many enterprises, the focus is shifting from experimentation to execution, using technology to drive efficiency, resilience, and measurable outcomes.

Increasingly, leaders are asking not just how fast Australia can innovate, but how wisely. Strategic choices made now will shape a digital future grounded in national values where technology fuels both economic growth and public good.

These five key realities capture the current state of Australia’s technology landscape, based on insights from Ecosystm’s industry conversations and research.

1. Responsible by Design: Australia’s Path to Trusted AI

AI in Australia is progressing with a strong focus on ethics and public trust. Regulators like ASIC and the OAIC (Office of the Australian Information Commissioner) have made it clear that AI systems, especially in banking, insurance, and healthcare, must be transparent and fair. Banks like ANZ and Commonwealth Bank have developed responsible AI frameworks to ensure their algorithms don’t unintentionally discriminate or mislead customers.

Yet a clear gap remains between ambition and readiness. Ecosystm research shows nearly 77% of Australian organisations acknowledge progress in piloting real-world use cases but worry they’re falling behind due to weak governance and poor-quality data.

The conversation around AI in Australia is evolving beyond productivity to include building trust. Success is now measured by the confidence regulators, customers, and communities have in AI systems. The path forward is clear: AI must drive innovation while upholding principles of fairness, transparency, and accountability.

2. The New AI Skillset: Where Data Science Meets Compliance and Context

Australia is on track to face a shortfall of 250,000 skilled workers in tech and business by 2030, according to the Future Skills Organisation. But the gap isn’t just in coders or engineers; it’s in hybrid talent: professionals who can connect AI development with regulatory, ethical, and commercial understanding.

In sectors like finance, AI adoption has stalled not due to lack of tools, but due to a lack of people who can interpret financial regulations and translate them into data science requirements. The same challenge affects healthcare, where digital transformation projects often slow down because technical teams lack domain-specific compliance and risk expertise.

While skilled migration has rebounded post-pandemic, the domestic pipeline remains limited. In response, organisations like Microsoft and Commonwealth Bank are investing in cross-skilling employees in AI, cloud, and risk management. Public-sector and university initiatives such as CSIRO’s Responsible AI program and UNSW’s AI education efforts are also working to build talent fluent in both technology and ethics.

Despite these efforts, Australia’s shortage of hybrid talent remains a critical bottleneck, shaping not just how fast AI is adopted, but how responsibly and effectively it is deployed.

3. Beyond Coverage: Closing the Digital Gap for Regional Australia

Australia’s vast geography creates a uniquely local digital divide. Despite the National Broadband Network (NBN) rollout, many regional areas still face slow speeds and outages. The 2023 Regional Telecommunications Review found that over 2.8 million Australians remain without reliable internet access. Industries suffer tangible impacts. GrainCorp, a major agribusiness, uses AI to communicate with workers during the harvest season, but regional connectivity gaps hinder real-time monitoring and analytics. In healthcare, the Royal Flying Doctor Service reports that poor internet reliability in remote areas undermines telehealth consultations, particularly crucial for Indigenous communities.

Efforts to address these gaps are underway. Telstra launched satellite services through partnerships with Starlink and OneWeb to cover remote zones. However, these solutions often come with prohibitive costs, particularly for smaller businesses, farms, and community organisations that cannot afford private network infrastructure.

The implications are clear: without reliable and affordable internet, regional enterprises will struggle to adopt AI, cloud-based systems, and digital tools that drive efficiency and equity. The next step must be a coordinated approach involving government, telecom providers, and industry, focused not just on coverage, but on quality, affordability, and support for local innovation. Bridging this digital divide is not simply about infrastructure; it’s about ensuring inclusive access to the tools that power modern business and essential services.

4. Resilience Over Defence: Australia’s Evolving Cybersecurity Focus

Australia’s cyber landscape has shifted sharply following major breaches at Optus, Medibank, and Latitude Financial, which pushed cybersecurity to the top of national agendas. In response, regulators and organisations have adopted a more urgent, coordinated stance. Under the Security of Critical Infrastructure (SOCI) Act, critical sectors must now report serious incidents within hours, enabling faster, government-led responses and stronger collective resilience.

Organisations across sectors are stepping up their defences, moving from reactive measures to proactive preparedness. NAB confirmed that it spends over USD 150M annually on cybersecurity, focusing on real-time threat hunting, simulation exercises, and red teaming. Telstra continues to run annual “cyber war games” involving IT, legal, and crisis communications teams to prepare for worst-case scenarios.

This collective focus signals a broader shift across Australian industries: cybersecurity maturity is no longer judged by perimeter defence alone. Instead, resilience – an organisation’s ability to detect, respond, and recover swiftly – is now the benchmark for protecting critical assets in an increasingly complex threat landscape.

5. Designing for the Long Term: Sustainability as a Core Capability

Organisations across Australia are under growing pressure – not only from regulators, but also from investors, customers, and communities – to demonstrate that their digital strategies are delivering real environmental and social outcomes. The bar has shifted from ESG disclosure to ESG performance. Technology is no longer just an efficiency lever; it’s expected to be a catalyst for sustainability transformation.

This expectation is especially acute in Australia’s core industries, where environmental impact is both material and highly scrutinised. In mining, for example, Rio Tinto’s 20-year renewable energy deal with Edify Energy aims to cut emissions by up to 70% at its Queensland aluminium operations by 2028. But the focus on transition is not limited to high-emission sectors. In financial services, institutions are actively supporting the shift to a low-carbon economy, from setting long-term net-zero targets to aligning lending practices with climate goals, including phasing out support for high-emission assets.

Yet for many, the path forward is still fragmented. ESG data often sits in silos, legacy systems constrain visibility, and ownership of sustainability metrics is scattered. Digital transformation efforts that treat ESG as an add-on, rather than embedding it into the foundations of data, governance, and decision-making, risk missing the mark. Australia’s next digital frontier will be measured not just by innovation, but by how effectively it enables a low-carbon, inclusive, and resilient economy.

Shaping Australia’s Digital Future

Australia’s technology journey is accelerating, but significant challenges must be addressed to unlock its full potential. Moving beyond basic digitalisation, the country is embracing advanced technologies as essential drivers of economic growth and productivity. Strong government initiatives and investments are creating a foundation for innovation and building a highly skilled digital workforce. However, overcoming barriers such as talent shortages, infrastructure gaps, and governance complexities is critical. Only by tackling these obstacles head-on and embedding technology deeply across organisations of all sizes can Australia transform automation into true data-driven autonomy and new business models, securing its position as a global digital leader.

AI can no longer be treated as a side experiment; it is often embedded in core decisions, customer experiences, operations, and innovation. And as adoption accelerates, so does regulatory scrutiny. Around the world, governments are moving quickly to set rules on how AI can be used, what risks must be controlled, and who is held accountable when harm occurs.

This shift makes Responsible AI a strategic imperative – not just a compliance checkbox. It’s about reducing reputational risk, protecting customers and IP, and earning the trust needed to scale AI responsibly. Embedding transparency, fairness, and accountability into AI systems isn’t just ethical; it’s smart business.

Understanding the regulatory landscape is a key part of that responsibility. As frameworks evolve, organisations must stay ahead of the rules shaping AI and ensure leadership is asking the right questions.

EU AI Act: Setting the Standard for Responsible AI

The EU AI Act is the world’s first comprehensive legislative framework for AI. It introduces a risk-based classification system: minimal, limited, high, and unacceptable. High-risk applications, including those used in HR, healthcare, finance, law enforcement, and critical infrastructure, must comply with strict requirements around transparency, data governance, ongoing monitoring, and human oversight. Generative AI models above certain thresholds are also subject to obligations such as disclosing training data sources and ensuring content integrity.

Although an EU regulation, the Act has global relevance. Organisations outside the EU may fall within its scope if their AI systems impact EU citizens or markets. And just as the GDPR became a de facto global standard for data protection, the EU AI Act is expected to create a ripple effect, shaping how other countries approach AI regulation. It sets a clear precedent for embedding safety, accountability, and human-centric principles into AI governance. As a result, it is one of the most closely tracked developments by compliance teams, risk officers, and AI governance leads worldwide.

However, as AI governance firms up worldwide, Asia Pacific organisations must look beyond Europe. From Washington to Beijing, several regulatory frameworks are rapidly influencing global norms. Whether organisations are building, deploying, or partnering on AI, these five are shaping the rules of the game.

AI Regulations Asia Pacific Organisations Must Track

1. United States: Setting the Tone for Global AI Risk Management

The U.S. Executive Order on AI (2023) signals a major policy shift in federal oversight. It mandates agencies to establish AI safety standards, governance protocols, and risk assessment practices, with an emphasis on fairness, explainability, and security, especially in sensitive domains like healthcare, employment, and finance. Central to this effort is the NIST AI Risk Management Framework (AI RMF), quickly emerging as a global touchstone.

Though designed as domestic policy, the Order’s influence is global. It sets a high bar for what constitutes responsible AI and is already shaping procurement norms and international expectations. For Asia Pacific organisations, early alignment isn’t just about accessing the U.S. market; it’s about maintaining credibility and competitiveness in a global AI landscape that is rapidly converging around these standards.

Why it matters to Asia Pacific organisations

- Global Supply Chains Depend on It. U.S.-linked firms must meet stringent AI safety and procurement standards to stay viable. Falling short could mean loss of market and partnership access.

- NIST Is the New Global Benchmark. Aligning with AI RMF enables consistent risk management and builds confidence with global regulators and clients.

- Explainability Is Essential. AI systems must provide auditable, transparent decisions to satisfy legal and market expectations.

- Security Isn’t Optional. Preventing misuse and securing models is a non-negotiable baseline for participation in global AI ecosystems.

2. China: Leading with Strict GenAI Regulation

China’s 2023 Generative AI Measures impose clear rules on public-facing GenAI services. Providers must align content with “core socialist values,” prevent harmful bias, and ensure outputs are traceable and verifiable. Additionally, algorithms must be registered with regulators, with re-approval required for significant changes. These measures embed accountability and auditability into AI development and signal a new standard for regulatory oversight.

For Asia Pacific organisations, this is more than compliance with local laws; it’s a harbinger of global trends. As major economies adopt similar rules, embracing traceability, algorithmic governance, and content controls now offers a competitive edge. It also demonstrates a commitment to trustworthy AI, positioning firms as serious players in the future global AI market.

Why it matters to Asia Pacific organisations

- Regulatory Access and Avoiding Risk. Operating in or reaching Chinese users means strict content and traceability compliance is mandatory.

- Global Trend Toward Algorithm Governance. Requirements like algorithm registration are becoming regional norms, and early adoption builds readiness.

- Transparency and Documentation. Rules align with global moves toward auditability and explainability.

- Content and Data Localisation. Businesses must invest in moderation and rethink infrastructure to comply with China’s standards.

3. Singapore: A Practical Model for Responsible AI

Singapore’s Model AI Governance Framework, developed by IMDA and PDPC, offers a pragmatic and principles-led path to ethical AI. Centred on transparency, human oversight, robustness, fairness, and explainability, the framework is accompanied by a detailed implementation toolkit, including use-case templates and risk-based guidance. It’s a practical playbook for firms looking to embed responsibility into their AI systems from the start.

For Asia Pacific organisations, Singapore’s approach serves as both a local standard and a launchpad for global alignment. Adopting it enables responsible innovation, prepares teams for tighter compliance regimes, and builds trust with stakeholders at home and abroad. It’s a smart move for firms seeking to lead responsibly in the region’s growing AI economy.

Why it matters to Asia Pacific organisations

- Regionally Rooted, Globally Relevant. Widely adopted across Southeast Asia, the framework suits industries from finance to logistics.

- Actionable Tools for Teams. Templates and checklists make responsible AI real and repeatable at scale.

- Future Compliance-Ready. Even if voluntary now, it positions firms to meet tomorrow’s regulations with ease.

- Trust as a Strategic Asset. Emphasising fairness and oversight boosts buy-in from regulators, partners, and users.

- Global Standards Alignment. Harmonises with the NIST RMF and G7 guidance, easing cross-border operations.

4. OECD & G7: The Foundations of Global AI Trust

The OECD AI Principles, adopted by over 40 countries, and the G7 Hiroshima Process establish a high-level consensus on what trustworthy AI should look like. They champion values such as transparency, accountability, robustness, and human-centricity. The G7 further introduced voluntary codes for foundation model developers, encouraging practices like documenting limitations, continuous risk testing, and setting up incident reporting channels.

For Asia Pacific organisations, these frameworks are early indicators of where global regulation is heading. Aligning now sends a strong signal of governance maturity, supports safer AI deployment, and strengthens relationships with investors and international partners. They also help firms build scalable practices that can evolve alongside regulatory expectations.

Why it matters to Asia Pacific organisations

- Blueprint for Trustworthy AI. Principles translate to real-world safeguards like explainability and continuous testing.

- Regulatory Foreshadowing. Many Asia Pacific countries cite these frameworks in shaping their own AI policies.

- Investor and Partner Signal. Compliance demonstrates maturity to stakeholders, aiding capital access and deals.

- Safety Protocols for Scale. G7 recommendations help prevent AI failures and harmful outcomes.

- Enabler of Cross-Border Collaboration. Global standards support smoother AI export, adoption, and partnership.

5. Japan: Balancing Innovation and Governance

Japan’s AI governance, guided by its 2022 strategy and active role in the G7 Hiroshima Process, follows a soft law approach that encourages voluntary adoption of ethical principles. The focus is on human-centric, transparent, and safe AI, allowing companies to experiment within defined ethical boundaries without heavy-handed mandates.

For Asia Pacific organisations, Japan offers a compelling governance model that supports responsible innovation. By following its approach, firms can scale AI while staying aligned with international norms and anticipating formal regulations. It’s a flexible yet credible roadmap for building internal AI governance today.

Why it matters to Asia Pacific organisations

- Room to Innovate with Guardrails. Voluntary guidelines support agile experimentation without losing ethical direction.

- Emphasis on Human-Centred AI. Design principles prioritise user rights and build long-term trust.

- G7-Driven Interoperability. As a G7 leader, Japan’s standards help companies align with broader international norms.

- Transparency and Safety Matter. Promoting explainability and security sets firms apart in global markets.

- Blueprint for Internal Governance. Useful for creating internal policies that are regulation-ready.

Why This Matters: Beyond Compliance

The global regulatory patchwork is quickly evolving into a complex landscape of overlapping expectations. For multinational companies, this creates three clear implications:

- Compliance is no longer optional. With enforcement kicking in (especially under the EU AI Act), failure to comply could mean fines, blocked products, or reputational damage.

- Enterprise AI needs guardrails. Businesses must build not just AI products, but AI governance, covering model explainability, data quality, access control, bias mitigation, and audit readiness.

- Trust drives adoption. As AI systems touch more customer and employee experiences, being able to explain and defend AI decisions becomes essential for maintaining stakeholder trust.

AI regulation is not a brake on innovation; it’s the foundation for sustainable, scalable growth. For forward-thinking businesses, aligning with emerging standards today will not only reduce risk but also increase competitive advantage tomorrow. The organisations that win in the AI age will be the ones who combine speed with responsibility, and governance with ambition.

Consider the sheer volume of information flowing through today’s financial systems: every QR payment, e-KYC onboarding, credit card swipe, and cross-border transfer captures a data point. With digital banking and Open Banking, financial institutions are sitting on a goldmine of insights. But this isn’t just about data collection; it’s about converting that data into strategic advantage in a fast-moving, customer-driven landscape.

With digital banks gaining traction and regulators around the world pushing bold reforms, the industry is entering a new phase of financial innovation powered by data and accelerated by AI.

Ecosystm gathered insights and identified key challenges from senior banking leaders during a series of roundtables we moderated across Asia Pacific. The conversations revealed a clear picture of where momentum is building – and where obstacles continue to slow progress. From these discussions, several key themes emerged that highlight both opportunities and ongoing barriers in the Banking sector.

1. AI is Leading to End-to-End Transformation

Banks are moving beyond generic digital offerings to deliver hyper-personalised, data-driven experiences that build loyalty and drive engagement. AI is driving this shift by helping institutions anticipate customer needs through real-time analysis of behavioural, transactional, and demographic data. From pre-approved credit offers and contextual investment nudges to app interfaces that adapt to individual financial habits, personalisation is becoming a core strategy, not just a feature. This is a huge departure from reactive service models, positioning data as a long-term strategic asset.

But the impact of AI isn’t limited to customer-facing experiences. It’s also driving innovation deep within the banking stack, from fraud detection and SME loan processing to intelligent chatbots that scale customer support. On the infrastructure side, banks are investing in agile, AI-ready platforms to support automation, model training, and advanced analytics at scale. These shifts are redefining how banks operate, make decisions, and deliver value. Institutions that integrate AI across both front-end journeys and back-end processes are setting a new benchmark for agility, efficiency, and competitiveness in a fast-changing financial landscape.

2. Regulatory Shifts are Redrawing the Competitive Landscape

Regulators are moving quickly in Asia Pacific by introducing frameworks for Open Banking, real-time payments, and even AI-specific standards like Singapore’s AI Verify. But the challenge for banks isn’t just keeping up with evolving external mandates. Internally, many are navigating a complicated mix of overlapping policies, built up over years of compliance with local, regional, and global rules. This often slows down innovation and makes it harder to implement AI and automation consistently across the organisation.

As banks double down on AI, it is clear that governance can’t be an afterthought. Many are still dealing with fragmented ownership of AI systems, inconsistent oversight, and unclear rules around things like model fairness and explainability. The more progressive ones are starting to fix this by setting up centralised governance frameworks, investing in risk-based controls, and putting processes in place to monitor things like bias and model drift from day one. They are not just trying to stay compliant; they are preparing for what’s coming next. In this landscape, the ability to manage regulatory complexity with speed and clarity, both internally and externally, is quickly becoming a competitive edge.
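Monitoring model drift can start with something quite simple. The sketch below computes the Population Stability Index (PSI), one widely used drift measure in credit risk, by comparing the distribution of a score or feature at training time against what is seen in production. PSI is our choice of example rather than something the roundtable participants named, and the function name, bin count, and smoothing constant `eps` are assumptions.

```python
import math
from typing import List, Sequence


def psi(expected: Sequence[float], actual: Sequence[float],
        bins: int = 10, eps: float = 1e-6) -> float:
    """Population Stability Index between a baseline and a current sample.

    Bins are equal-width over the baseline's range; values outside that
    range are clamped into the first or last bin.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the baseline is constant

    def proportions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)
            counts[idx] += 1
        total = len(values)
        # eps avoids log(0) and division by zero for empty bins
        return [max(c / total, eps) for c in counts]

    e = proportions(expected)
    a = proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


if __name__ == "__main__":
    baseline = [float(v) for v in range(100)]
    shifted = [v + 50.0 for v in baseline]
    print(psi(baseline, baseline))  # identical samples give no drift
    print(psi(baseline, shifted))   # a shifted sample gives a large value
```

A common rule of thumb treats PSI below 0.1 as stable and above 0.25 as a significant shift worth investigating, though the right thresholds depend on the model and the bank's risk appetite.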

3. Success Depends on Strategy, Not Just Tech

While enthusiasm for AI is high, sustainable success hinges on a clear, aligned strategy that connects technology to business outcomes. Many banks struggle with fragmented initiatives because they lack a unified roadmap that prioritises high-impact use cases. Without clear goals, AI projects often fail to deliver meaningful value, becoming isolated pilots with limited scalability.

To avoid this, banks need to develop robust return-on-investment (ROI) models tailored to their context – measuring benefits like faster credit decisioning, reduced fraud losses, or increased cross-selling effectiveness. These models must consider not only the upfront costs of infrastructure and talent, but also ongoing expenses such as model retraining, governance, and integration with existing systems.
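A bare-bones version of such a model might look like the sketch below. It is deliberately simplified: the function name and parameters are hypothetical, the figures in the usage example are invented for illustration, and a real model would also discount future cash flows and account for uncertainty in the benefit estimates.

```python
def ai_roi(annual_benefit: float, upfront_cost: float,
           annual_running_cost: float, years: int) -> float:
    """Simple undiscounted ROI over a fixed horizon.

    annual_benefit: e.g. reduced fraud losses plus extra cross-sell revenue.
    upfront_cost: infrastructure and talent.
    annual_running_cost: retraining, governance, and integration upkeep.
    """
    total_benefit = annual_benefit * years
    total_cost = upfront_cost + annual_running_cost * years
    return (total_benefit - total_cost) / total_cost


if __name__ == "__main__":
    # Invented figures: 2.0M yearly benefit, 3.0M upfront, 0.5M yearly running cost.
    roi = ai_roi(2_000_000, 3_000_000, 500_000, years=3)
    print(f"3-year ROI: {roi:.1%}")
```

With these invented numbers, total benefit is 6.0M against a total cost of 4.5M, so the three-year ROI is about 33%. Leaving out the running costs would inflate the figure to 100%, which is why the ongoing expenses belong in the model from the start.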

Ethical AI governance is another essential pillar. With growing regulatory scrutiny and public concern about opaque “black box” models, banks must embed transparency, fairness, and accountability into their AI frameworks from the outset. This goes beyond compliance; strong governance builds trust and is key to responsible, long-term use of AI in sensitive, high-stakes financial environments.

4. Legacy Challenges Still Hold Banks Back

Despite strong momentum, many banks face foundational barriers that hinder effective AI deployment. Chief among these is data fragmentation. Core customer, transaction, compliance, and risk data are often scattered across legacy systems and third-party platforms, making it difficult to access the integrated, high-quality data that AI models require.

This limits the development of comprehensive solutions and makes AI implementations slower, costlier, and less effective. Instead of waiting for full system replacements, banks need to invest in integration layers and modern data platforms that unify data sources and make them AI-ready. These platforms can connect siloed systems – such as CRM, payments, and core banking – to deliver a consolidated view, which is crucial for accurate credit scoring, personalised offers, and effective risk management.

Banks must also address talent gaps. The shortage of in-house AI expertise means many institutions rely on external consultants, which increases costs and reduces knowledge transfer. Without building internal capabilities and adjusting existing processes to accommodate AI, even sophisticated models may end up underused or misapplied.

5. Collaboration and Capability Building are Key Enablers

AI transformation isn’t just a technology project – it’s an organisation-wide shift that requires new capabilities, ways of working, and strategic partnerships. Success depends on more than just hiring data scientists. Relationship managers, credit officers, compliance teams, and frontline staff all need to be trained to understand and act on AI-driven insights. Processes such as loan approvals, fraud escalations, and customer engagement must be redesigned to integrate AI outputs seamlessly.

To drive continuous innovation, banks should establish internal Centres of Excellence for AI. These hubs can lead experimentation with high-value use cases like predictive credit scoring or real-time fraud detection, while ensuring that learnings are shared across business units. They also help avoid duplication and promote strategic alignment.

Partnerships with fintechs, technology providers, and academic institutions play a vital role as well. These collaborations offer access to cutting-edge tools, niche expertise, and locally relevant AI models that reflect the regulatory, cultural, and linguistic contexts banks operate in. In a fast-moving and increasingly competitive space, this combination of internal capability building and external collaboration gives banks the agility and foresight to lead.

Indonesia’s vast, diverse population and scattered islands create a unique landscape for AI adoption. Across sectors – from healthcare to logistics and banking to public services – leaders view AI not just as a tool for efficiency but as a means to expand reach, build resilience, and elevate citizen experience. With AI expected to add up to 12% to Indonesia’s GDP by 2030, it’s poised to be a core engine of growth.

Yet, ambition isn’t enough. While AI interest is high, execution is patchy. Many organisations remain stuck in isolated pilots or siloed experiments. Those scaling quickly face familiar hurdles: fragmented infrastructure, talent gaps, integration issues, and a lack of unified strategy and governance.

Ecosystm gathered insights and identified key challenges from senior tech leaders during a series of roundtables we moderated in Jakarta. The conversations revealed a clear picture of where momentum is building – and where obstacles continue to slow progress. From these discussions, several key themes emerged that highlight both opportunities and ongoing barriers in the country’s digital journey.

Theme 1. Digital Natives are Accelerating Innovation, But Need Scalable Guardrails

Indonesia’s digital-first companies – especially in fintech, logistics tech, and media streaming – are rapidly building on AI and cloud-native foundations. Players like GoTo, Dana, Jenius, and Vidio are raising the bar not only in customer experience but also in scaling technology across a mobile-first nation. Their use of AI for customer support, real-time fraud detection, biometric eKYC, and smart content delivery highlights the agility of digital-native models. This innovation is particularly concentrated in Jakarta and Bandung, where vibrant startup ecosystems and rich talent pools drive fast iteration.

Yet this momentum brings new risks. Deepfake attacks during onboarding, unsecured APIs, and content piracy pose real threats. Without the layered controls and regulatory frameworks typical of banks or telecom providers, many startups are navigating high-stakes digital terrain without a safety net.

As these companies become pillars of Indonesia’s digital economy, a new kind of guardrail is essential: flexible enough to support rapid growth, yet robust enough to mitigate systemic risk.

A sector-wide governance playbook, grounded in local realities and aligned with global standards, could provide the balance needed to scale both quickly and securely.

Theme 2. Scaling AI in Indonesia: Why Infrastructure Investment Matters

Indonesia’s ambition for AI is high, and while digital infrastructure still faces challenges, significant opportunities lie ahead. Although telecom investment has slowed and state funding tightened, growing momentum from global cloud players is beginning to reshape the landscape. AWS’s commitment to building cloud zones and edge locations beyond Java is a major step forward.

For AI to scale effectively across Indonesia’s diverse archipelago, the next wave of progress will depend on stronger investment incentives for data centres, cloud interconnects, and edge computing.

A proactive government role – through updated telecom regulations, streamlined permitting, and public-private partnerships – can unlock this potential.

Infrastructure isn’t just the backbone of digital growth; it’s a powerful lever for inclusion, enabling remote health services, quality education, and SME empowerment across even the most distant regions.

Theme 3. Cyber Resilience Gains Momentum, But Needs to Be More Holistic

Indonesian organisations are facing an evolving wave of cyber threats – from sophisticated ransomware to DDoS attacks targeting critical services. This expanding threat landscape has elevated cyber resilience from a technical concern to a strategic imperative embraced by CISOs, boards, and risk committees alike. While many organisations invest heavily in security tools, the challenge remains in moving beyond fragmented solutions toward a truly resilient operating model that emphasises integration, simulation, and rapid response.

The shift from simply being “secure” to becoming genuinely “resilient” is gaining momentum. Resilience – captured by the Bahasa Indonesia term “ulet” – is now recognised as the ability not just to defend, but to endure disruption and bounce back stronger. Regulatory steps like OJK’s cyber stress testing and continuity planning requirements are encouraging organisations to go beyond mere compliance.

Organisations will now need to operationalise resilience by embedding it into culture through cross-functional drills, transparent crisis playbooks, and agile response practices – so when attacks strike, business impact is minimised and trust remains intact.

For many firms, especially in finance and logistics, this mindset and operational shift will be crucial to sustaining growth and confidence in a rapidly evolving digital landscape.

Theme 4. Organisations Need a Roadmap for Legacy System Transformation

Legacy systems continue to slow modernisation efforts in traditional sectors such as banking, insurance, and logistics by creating both technical and organisational hurdles that limit innovation and scalability. These outdated IT environments are deeply woven into daily operations, making integration complex, increasing downtime risks, and frustrating cross-functional teams striving to deliver digital value swiftly. The challenge goes beyond technology – there’s often a disconnect between new digital initiatives and existing workflows, which leads to bottlenecks and slows progress.

Recognising these challenges, many organisations are now investing in middleware solutions, automation, and phased modernisation plans that focus on upgrading key components gradually. This approach helps bridge the gap between legacy infrastructure and new digital capabilities, reducing the risk of enterprise-wide disruption while enabling continuous innovation.

The crucial next step is to develop and commit to a clear, incremental roadmap that balances risk with progress – ensuring legacy systems evolve in step with digital ambitions and unlock the full potential of transformation.

Theme 5. AI Journey Must Be Rooted in Local Talent and Use Cases

Ecosystm research reveals that only 13% of Indonesian organisations have experimented with AI, with most yet to integrate it into their core strategies.

While Indonesia’s AI maturity remains uneven, there is a broad recognition of AI’s potential as a powerful equaliser – enhancing public service delivery across 17,000 islands, democratising diagnostics in rural healthcare, and improving disaster prediction for flood-prone Jakarta.

The government’s 2045 vision emphasises inclusive growth and differentiated human capital, but achieving these goals requires more than just infrastructure investment. Building local talent pipelines is critical. Initiatives like IBM’s AI Academy in Batam, which has trained over 2,000 AI practitioners, are promising early steps. However, scaling this impact means embedding AI education into national curricula, funding interdisciplinary research, and supporting SMEs with practical adoption toolkits.

The opportunity is clear: GenAI can act as a multiplier, empowering even resource-constrained sectors to enhance reach, personalisation, and citizen engagement.

To truly unlock AI’s potential, Indonesia must move beyond imported templates and focus on developing grounded, context-aware AI solutions tailored to its unique landscape.

From Innovation to Impact

Indonesia’s tech journey is at a pivotal inflection point – where ambition must transform into alignment, and isolated pilots must scale into robust platforms. Success will depend not only on technology itself but on purpose-driven strategy, resilient infrastructure, cultural readiness, and shared accountability across industries. The future won’t be shaped by standalone innovations, but by coordinated efforts that convert experimentation into lasting, systemic impact.

Automation and AI hold immense promise for accelerating productivity, reducing errors, and streamlining tasks across virtually every industry. From manufacturing plants that operate robotic arms to software-driven solutions that analyse millions of data points in seconds, these technological advancements are revolutionising how we work. However, AI has already led to, and will continue to bring about, many unintended consequences.

One that has been discussed for nearly a decade, but is only now starting to impact employees and brand experiences, is the “automation paradox”. As AI and automation take on more routine tasks, employees find themselves tackling the complex exceptions and making high-stakes decisions.

What is the Automation Paradox?

1. The Shifting Burden from Low to High Value Tasks

When AI systems handle mundane or repetitive tasks, human employees can direct their efforts toward higher-value activities. At first glance, this shift seems purely beneficial. AI helps filter out extraneous work, enabling humans to focus on the tasks that require creativity, empathy, or nuanced judgment. However, by design, these remaining tasks often carry greater responsibility. For instance, in a retail environment with automated checkout systems, a human staff member is more likely to deal with complex refund disputes or tense customer interactions. Or in a warehouse, as many processes are automated by AI and robots, humans are left with the oversight of, and responsibility for, entire processes. Over time, handling primarily high-pressure situations can become mentally exhausting, contributing to job stress and potential burnout.

2. Increased Reliance on Human Judgment in Edge Cases

AI excels at pattern recognition and data processing at scale, but unusual or unprecedented scenarios can stump even the best-trained models. The human workforce is left to solve these complex, context-dependent challenges. Take self-driving cars as an example. While most day-to-day driving can be safely automated, human oversight is essential for unpredictable events – like sudden weather changes or unexpected road hazards.

Human intervention can be a critical, life-or-death matter, amplifying the pressure and stakes for those still in the loop.
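One common way this hand-off is implemented is a confidence threshold: the system acts on its own only when it is sufficiently sure, and escalates everything else to a person. The Python sketch below is a simplified illustration; the `route` function, the labels, and the 0.9 threshold are assumptions, not a description of any particular product.

```python
def route(prediction: str, confidence: float, threshold: float = 0.9) -> str:
    """Act automatically on confident predictions; escalate the rest to a human."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if confidence >= threshold:
        return f"auto:{prediction}"
    return "human_review"


# Hypothetical model outputs: (prediction, confidence)
cases = [("approve_refund", 0.97), ("approve_refund", 0.62), ("reject_refund", 0.91)]
for prediction, confidence in cases:
    print(prediction, confidence, "->", route(prediction, confidence))
```

Note what this does to the human queue: by construction it contains only the cases the model found hardest, which is the paradox in miniature.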

3. The Fallibility Factor of AI

Ironically, as AI becomes more capable, humans may trust it too much. When systems make mistakes, it is the human operator who must detect and rectify them. But the further removed people are from the routine checks and balances – since “the system” seems to handle things so competently – the greater the chance that an error goes unnoticed until it has grown into a major problem. For instance, in the aviation industry, pilots who rely heavily on autopilot systems must remain vigilant for rare but critical emergency scenarios, which can be more taxing due to limited practice in handling manual controls.

Add to These the Known Challenges of AI!

Bias in Data and Algorithms. AI systems learn from historical data, which can carry societal and organisational biases. If left unchecked, these algorithms can perpetuate or even amplify unfairness. For instance, an AI-driven hiring platform trained on past decisions might favour candidates from certain backgrounds, unintentionally excluding qualified applicants from underrepresented groups.
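A simple first check for this kind of bias is to compare how often each group is selected. The Python sketch below computes selection rates per group and the ratio between the lowest and highest rate. The group labels and outcomes are made up, and the function names are illustrative; a commonly cited rule of thumb treats a ratio below 0.8 as a signal to investigate, though a single metric like this cannot prove or rule out unfairness on its own.

```python
from collections import Counter


def selection_rates(decisions):
    """decisions: iterable of (group, was_selected) pairs -> {group: share selected}."""
    totals, selected = Counter(), Counter()
    for group, was_selected in decisions:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def impact_ratio(rates):
    """Lowest selection rate divided by the highest; 1.0 means parity."""
    highest = max(rates.values())
    if highest == 0:
        raise ValueError("no group has any selections")
    return min(rates.values()) / highest


# Made-up screening outcomes for two hypothetical groups
decisions = (
    [("A", True)] * 6 + [("A", False)] * 4
    + [("B", True)] * 3 + [("B", False)] * 7
)

rates = selection_rates(decisions)
ratio = impact_ratio(rates)
print(rates, f"impact ratio = {ratio:.2f}")
```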

Privacy and Data Security Concerns. The power of AI often comes from massive data collection, whether for predicting consumer trends or personalising user experiences. This accumulation of personal and sensitive information raises complex legal and ethical questions. Leaks, hacks, or improper data sharing can cause reputational damage and legal repercussions.

Skills Gap and Workforce Displacement. While AI can eliminate the need for certain manual tasks, it creates a demand for specialised skills, such as data science, machine learning operations, and AI ethics oversight. If an organisation fails to provide employees with retraining opportunities, it risks exacerbating skill gaps and losing valuable institutional knowledge.

Ethical and Social Implications. AI-driven decision-making can have profound impacts on communities. For example, a predictive policing system might inadvertently target specific neighbourhoods based on historical arrest data. When these systems lack transparency or accountability, public trust erodes, and social unrest can follow.

How Can We Mitigate the Known and Unknown Consequences of AI?

While some of the unintended consequences of AI and automation won’t be known until systems are deployed and processes are in practice, there are some basic hygiene approaches that technology leaders and their organisational peers can take to minimise these impacts.

- Human-Centric Design. Incorporate user feedback into AI system development. Tools should be designed to complement human skills, not overshadow them.

- Comprehensive Training. Provide ongoing education for employees expected to handle advanced AI or edge-case scenarios, ensuring they remain engaged and confident when high-stakes decisions arise.

- Robust Governance. Develop clear policies and frameworks that address bias, privacy, and security. Assign accountability to leaders who understand both technology and organisational ethics.

- Transparent Communication. Maintain clear channels of communication regarding what AI can and cannot do. Openness fosters trust, both internally and externally.

- Increase your organisational AIQ (AI Quotient). Most employees are not fully aware of the potential of AI and its opportunity to improve – or change – their roles. Conduct regular upskilling and knowledge sharing activities to improve the AIQ of your employees so they start to understand how people, plus data and technology, will drive their organisation forward.

Let me know your thoughts on the Automation Paradox, and stay tuned for my next blog on redefining employee skill pathways to tackle its challenges.

AI has broken free from the IT department. It’s no longer a futuristic concept but a present-day reality transforming every facet of business. Departments across the enterprise are now empowered to harness AI directly, fuelling innovation and efficiency without waiting for IT’s stamp of approval. The result? A more agile, data-driven organisation where AI unlocks value and drives competitive advantage.

Ecosystm’s research over the past two years, including surveys and in-depth conversations with business and technology leaders, confirms this trend: AI is the dominant theme. And while the potential is clear, the journey is just beginning.

Here are key AI insights for HR Leaders from our research.

Click here to download “AI Stakeholders: The HR Perspective” as a PDF.

HR: Leading the Charge (or Should Be)

Our research reveals a fascinating dynamic in HR. While 54% of HR leaders currently use AI for recruitment (scanning resumes, etc.), their vision extends far beyond. A striking majority plan to expand AI’s reach into crucial areas: 74% for workforce planning, 68% for talent development and training, and 62% for streamlining employee onboarding.

The impact is tangible, with organisations already seeing significant benefits. GenAI has streamlined presentation creation for bank employees, allowing them to focus on content rather than formatting and improving efficiency. Integrating GenAI into knowledge bases has simplified access to internal information, making it quicker and easier for employees to find answers. AI-driven recruitment screening is accelerating hiring in the insurance sector by analysing resumes and applications to identify top candidates efficiently. Meanwhile, AI-powered workforce management systems are transforming field worker management by optimising job assignments, enabling real-time tracking, and ensuring quick responses to changes.

The Roadblocks and the Opportunity

Despite this promising outlook, HR leaders face significant hurdles. Limited exploration of use cases, the absence of a unified organisational AI strategy, and ethical concerns are among the key barriers to wider AI deployments.

Perhaps most concerning is the limited role HR plays in shaping AI strategy. While 57% of tech and business leaders cite increased productivity as the main driver for AI investments, HR’s influence is surprisingly weak. Only 20% of HR leaders define AI use cases, manage implementation, or are involved in governance and ownership. A mere 8% primarily manage AI solutions.

This disconnect represents a massive opportunity.

2025 and Beyond: A Call to Action for HR

Despite these challenges, our research indicates HR leaders are prioritising AI for 2025. Increased productivity is the top expected outcome, while three in ten will focus on identifying better HR use cases as part of a broader data-centric approach.

The message is clear: HR needs to step up and claim its seat at the AI table. By proactively defining use cases, championing ethical considerations, and collaborating closely with tech teams, HR can transform itself into a strategic driver of AI adoption, unlocking the full potential of this transformative technology for the entire organisation. The future of HR is intelligent, and it’s time for HR leaders to embrace it.

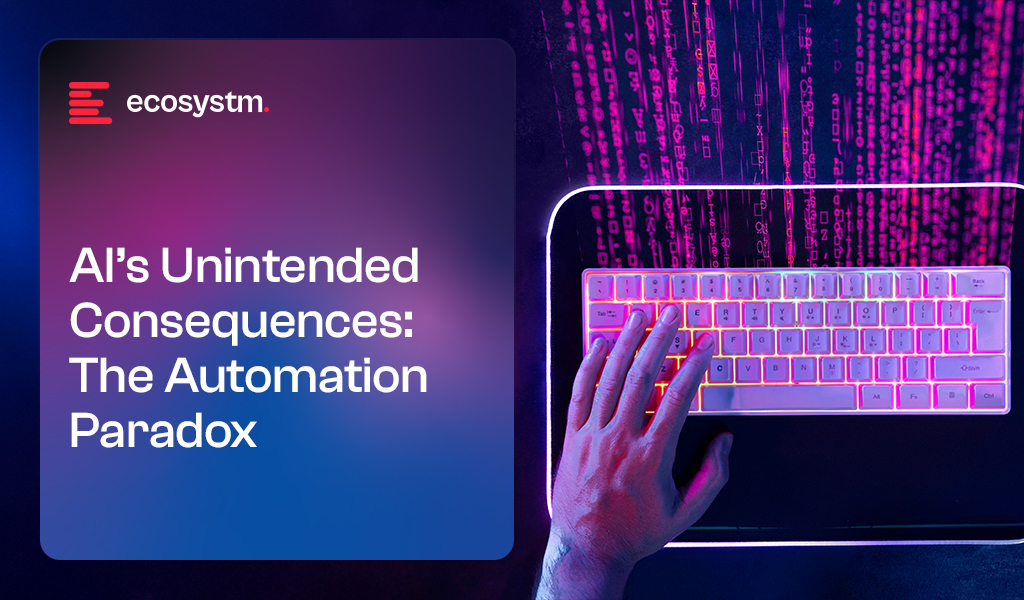

Ecosystm research shows that cybersecurity is the most discussed technology at the Board and Management level, driven by the increasing sophistication of cyber threats and the rapid adoption of AI. While AI enhances security, it also introduces new vulnerabilities. As organisations face an evolving threat landscape, they are adopting a more holistic approach to cybersecurity, covering prevention, detection, response, and recovery.

In 2025, cybersecurity leaders will continue to navigate a complex mix of technological advancements, regulatory pressures, and changing business needs. To stay ahead, organisations will prioritise robust security solutions, skilled professionals, and strategic partnerships.

Ecosystm analysts Darian Bird, Sash Mukherjee, and Simona Dimovski present the key cybersecurity trends for 2025.

Click here to download ‘Securing the AI Frontier: Top 5 Cyber Trends for 2025’ as a PDF

1. Cybersecurity Will Be a Critical Differentiator in Corporate Strategy

The convergence of geopolitical instability, cyber weaponisation, and an interconnected digital economy will make cybersecurity a cornerstone of corporate strategy. State-sponsored cyberattacks targeting critical infrastructure, supply chains, and sensitive data have turned cyber warfare into an operational reality, forcing businesses to prioritise security.

Regulatory pressures are driving this shift, mandating breach reporting, data sovereignty, and significant penalties, while international cybersecurity norms compel companies to align with evolving standards to remain competitive.

The stakes are high. Stakeholders now see cybersecurity as a proxy for trust and resilience, scrutinising both internal measures and ecosystem vulnerabilities.

2. Zero Trust Architectures Will Anchor AI-Driven Environments

The future of cybersecurity lies in never trusting, always verifying – especially where AI is involved.

In 2025, the rise of AI-driven systems will make Zero Trust architectures vital for cybersecurity. Unlike traditional networks with implicit trust, AI environments demand stricter scrutiny due to their reliance on sensitive data, autonomous decisions, and interconnected systems. The growing threat of adversarial attacks – data poisoning, model inversion, and algorithmic manipulation – highlights the urgency of continuous verification.
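In code terms, continuous verification means every request is evaluated on its own merits, with no credit given for network location or a previous approval. The Python sketch below is a deliberately minimal illustration; the `Request` fields, the `authorise` function, and the resource names are hypothetical, and real Zero Trust deployments rely on dedicated policy engines and far richer signals.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    identity_verified: bool       # e.g. a valid, unexpired credential
    device_compliant: bool        # e.g. the device passes posture checks
    resource: str                 # what the caller wants to reach
    allowed_resources: frozenset  # least-privilege entitlements for this identity


def authorise(request: Request) -> bool:
    """Check identity, device, and entitlement on every single request."""
    return (
        request.identity_verified
        and request.device_compliant
        and request.resource in request.allowed_resources
    )


# Hypothetical requests against an AI platform
entitlements = frozenset({"model-registry"})
ok = Request(True, True, "model-registry", entitlements)
bad_device = Request(True, False, "model-registry", entitlements)
out_of_scope = Request(True, True, "training-data", entitlements)

for request in (ok, bad_device, out_of_scope):
    print(request.resource, "->", "allow" if authorise(request) else "deny")
```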

Global forces are driving this shift. Regulatory mandates like the EU’s DORA, the US Cybersecurity Executive Order, and the NIST Zero Trust framework call for robust safeguards for critical systems. These measures align with the growing reliance on AI in high-stakes sectors like Finance, Healthcare, and National Security.

3. Organisations Will Proactively Focus on AI Governance & Data Privacy

Organisations are caught between excitement and uncertainty regarding AI. While the benefits are immense, businesses struggle with the complexities of governing AI. The EU AI Act looms large, pushing global organisations to brace for stricter regulations, while a rise in shadow IT sees business units bypassing traditional IT to deploy AI independently.

In this environment of regulatory ambiguity and organisational flux, CISOs and CIOs will prioritise data privacy and governance, proactively securing organisations with strong data frameworks and advanced security solutions to stay ahead of emerging regulations.

Recognising that AI will be multi-modal, multi-vendor, and hybrid, organisations will invest in model orchestration and integration platforms to simplify management and ensure smoother compliance.

4. Network & Security Stacks Will Streamline Through Converged Platforms

The shift towards converged network and security platforms stems from the need for unified management, cost efficiency, and the recognition that standardisation enhances security posture.

Tech providers are racing to deliver comprehensive network and security platforms.

Recent M&A moves by HPE (Juniper), Palo Alto Networks (QRadar SaaS), Fortinet (Lacework), and LogRhythm (Exabeam) highlight this trend. Rising player Cato Networks is capitalising on mid-market demand for single-provider solutions, with many customers planning to consolidate vendors in their favour. Meanwhile, telecoms are expanding their SASE offerings to support organisations adapting to remote work and growing cloud adoption.

5. AI Will Be Widely Used to Combat AI-Powered Threats in Real-time

By 2025, the rise of AI-powered cyber threats will demand equally advanced AI-driven defences.

Threat actors are using AI to launch adaptive attacks like deepfake fraud, automated phishing, and adversarial machine learning, operating at a speed and scale beyond traditional defences.

Real-time AI solutions will be essential for detection and response.

Nation-state-backed advanced persistent threat (APT) groups and GenAI misuse are intensifying these challenges, exploiting vulnerabilities in critical infrastructure and supply chains. Mandatory reporting and threat intelligence sharing will strengthen AI defences, enabling real-time adaptation to emerging threats.