The Hamburg State Commissioner for Data Protection and Freedom of Information (HmbBfDI) imposed a fine of USD 41.3 million on the Swedish multinational Hennes & Mauritz (H&M) for the illegal surveillance of employees at H&M Germany’s service centre in Nuremberg.

The data privacy violations reportedly began in 2014, when the company started collecting employee data including personal information, holidays, medical records, informal chats and other private details. The information was found to have been unlawfully recorded and stored, and made accessible to managers. The violations came to light in October 2019, when a computing error briefly made the data accessible company-wide.

Ecosystm Principal Analyst Claus Mortensen says, “This is one of those cases that are so blatant that you cannot really say it is setting a precedent for future cases. All the factors that would constitute a breach of the GDPR are here: it involves several types of data that shouldn’t be collected; poorly managed storage and access control; and to finish it all off, a data leak. So even though the fine is relatively high, H&M should probably be happy that it was not bigger – the GDPR authorises fines of up to 4% of a company’s global annual turnover.”
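For scale, Article 83(5) of the GDPR caps fines for the most serious infringements at EUR 20 million or 4% of total worldwide annual turnover for the preceding financial year, whichever is higher. The minimal sketch below computes that ceiling. The turnover figures in it are hypothetical placeholders, not H&M’s reported revenue, and the function name is illustrative.

```python
def gdpr_max_fine_eur(worldwide_annual_turnover_eur: float) -> float:
    """Ceiling for the most serious GDPR infringements (Article 83(5)):
    the higher of EUR 20 million or 4% of total worldwide annual turnover
    of the preceding financial year."""
    if worldwide_annual_turnover_eur < 0:
        raise ValueError("turnover cannot be negative")
    fixed_cap_eur = 20_000_000.0
    turnover_cap_eur = 0.04 * worldwide_annual_turnover_eur
    return max(fixed_cap_eur, turnover_cap_eur)


# Hypothetical turnovers, for illustration only (not any real company's figures).
small_firm_cap = gdpr_max_fine_eur(100_000_000)     # 4% is EUR 4M, so the EUR 20M floor applies
large_firm_cap = gdpr_max_fine_eur(20_000_000_000)  # 4% is EUR 800M
```

The `max` captures the "whichever is higher" rule. For large retailers, the percentage-based cap is the one that bites.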

Mortensen adds, “It should also be said that H&M has handled the aftermath well by accepting full blame and by offering compensation to all affected employees. It is possible that these intentions were considered by the HmbBfDI and prevented an even higher fine.”

The penalty on the Swedish retailer is the highest fine in Germany under the General Data Protection Regulation (GDPR) since it came into effect in 2018, and the second highest in Europe. Last year, France’s data protection watchdog fined Google USD 58.7 million for failing to adequately disclose to users how it collected data across its services to personalise advertising.

Talking about the growing significance of fines for data breaches, Ecosystm Principal Advisor Andrew Milroy says, “To be effective, GDPR needs to be enforced consistently across the board and have a significant impact. It is too easy to cut corners on data protection activities. Some breaches may not have an operational impact. For this reason, the cost of being caught needs to be large enough that it makes commercial sense to comply.”

According to Milroy, “The sizeable fine meted out to H&M together with the publicity it has generated shows that the regulators are serious about GDPR and enforcing it. Other regulators around the world need to make sure that their jurisdictions don’t become ‘soft touches’ for malicious actors.”

EU Proposing New Data Sharing Rules

We are also seeing the European Union (EU) move to regulate digital services and the use of customer data by technology providers, as part of the European Union Digital Strategy. Under the Digital Services Act, the EU is drafting new rules to force larger technology providers to share their customer data across the industry, creating a level playing field for smaller providers and SMEs. The aim is to make the data available to all for both commercial use and innovation. The effort is being driven by the EU’s antitrust arm and aims to reduce the competitive edge tech giants hold over their rivals. The giants may also be banned from giving their own services preferential treatment on their sites or platforms. The law, which is expected to be formalised later this year, is also expected to prohibit technology providers from pre-installing applications or exclusive services on smartphones, laptops or other devices. The measures will help users move between platforms without losing access to their data.

The telecom industry in India had been in a tight spot, facing various challenges led by the Adjusted Gross Revenue (AGR) dispute. AGR is the basis of a fee-sharing mechanism between the Government and telecom providers, who moved from the ‘fixed license fee’ model to the ‘revenue-sharing fee’ model in 1999. Telecom providers are required to share a percentage of their AGR with the Government. The Government says that AGR includes all revenue from both telecom and non-telecom services, while the operators contend that it should include only revenue from core services. While the legal proceedings continue, India’s telecom industry also faces other challenges, such as one of the lowest ARPUs in the world and intense competition.
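The dispute turns on which revenue lines count towards AGR. The toy calculation below shows how the two readings change the amount owed. It is a minimal sketch with several assumptions: the revenue lines and amounts are hypothetical, and the 8% fee rate is an assumed illustrative figure, not a statement of the exact charges operators pay.

```python
# Hypothetical revenue lines for one operator (arbitrary currency units).
REVENUE = {
    "voice_and_data": 800.0,         # core telecom service
    "interconnect": 50.0,            # core telecom service
    "handset_sales": 60.0,           # non-telecom
    "interest_and_dividends": 40.0,  # non-telecom
}
CORE_LINES = {"voice_and_data", "interconnect"}
FEE_RATE = 0.08  # assumed share of AGR payable to the Government


def adjusted_gross_revenue(revenue: dict, include_non_telecom: bool) -> float:
    """AGR under the Government's broad reading (all revenue) or the
    operators' narrow reading (core telecom services only)."""
    return sum(amount for line, amount in revenue.items()
               if include_non_telecom or line in CORE_LINES)


def fee_due(revenue: dict, include_non_telecom: bool, rate: float = FEE_RATE) -> float:
    """Fee owed as a fixed share of AGR under the chosen definition."""
    return rate * adjusted_gross_revenue(revenue, include_non_telecom)


government_view = fee_due(REVENUE, include_non_telecom=True)  # 8% of 950
operator_view = fee_due(REVENUE, include_non_telecom=False)   # 8% of 850
```

The rate is identical in both cases. Only the base changes, and across an operator's full revenue over many years, that definitional gap is what the AGR dispute is about.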

However, COVID-19 has given the industry a boost, changing market dynamics and drawing increased interest from global investors. In his report, The New Normal for Telecom Providers in Southeast Asia, Ecosystm Principal Advisor Shamir Amanullah discusses how the telecom sector has rapidly become the backbone of business and social interactions, as adoption of applications such as video conferencing and collaboration tools surges. Streaming services such as Netflix have become the go-to source of entertainment, putting the telecom sector in the spotlight.

India’s monthly active internet user base is estimated to reach 639 million by the end of December, thanks to COVID-19-induced measures that have forced people to stay indoors. Currently estimated at 574 million, the number of monthly active internet users has grown 24% over 2019, and overall internet penetration stood at 41% last year. Further, it is estimated that India will have more than 907 million internet users by 2023, accounting for nearly 64% of the population. There are also around 71 million children aged 5-11 who go online using family members’ devices, pointing to high digital adoption in Gen Z in the future.

India’s rural areas are driving the country’s digital revolution, with internet penetration growing 45% in 2019, compared with 11% in urban India. Rural India has an estimated 264 million internet users, a figure expected to reach 304 million in 2020. Local-language content and video are driving the internet boom in rural India, with a 250% rise in penetration over the last four years. Mobile is the device of choice for internet browsing for 100% of active users.

Global Interest in the Indian Market

Reliance Jio

Jio Platforms, a subsidiary of Reliance Industries (India’s most valuable firm), has raised an estimated USD 20.2 billion from 13 investors over the past four months by selling a stake of about 33% in the firm. To put this into context, India’s entire start-up ecosystem raised USD 14.5 billion last year! Besides Google and Facebook, the investors include Qualcomm Investment Ventures, Intel Capital, KKR, TPG, General Atlantic, Silver Lake, L Catterton, Vista Equity Partners, the Abu Dhabi Investment Authority and Saudi Arabia’s Public Investment Fund.

Google’s new investment gives Jio Platforms an equity valuation of USD 58 billion. It is one of the rare instances in which Google has joined its global rival Facebook in backing a firm. According to Mukesh Ambani, Chairman and MD of Reliance Industries, Google and Jio Platforms will work on a customised version of the Android operating system to develop low-cost, entry-level smartphones to serve the next hundreds of millions of users. These phones will support Google Play and the next-generation 5G wireless standard, he said.

Jio is increasing its focus on developing areas such as digital services, education, healthcare and entertainment that can support economic growth and social inclusion at a critical time for the economy. At the Reliance Annual General Meeting, it was announced that Jio has developed a complete 5G solution from scratch that will enable it to launch a world-class 5G service in India. Jio also revealed that it is developing Jio TV Plus, Jio Glass, and more.

With an estimated 387 million subscribers as of 31 March 2020, the largest subscriber base in the country, Jio Platforms provides telecom, broadband, and digital content services. Leveraging advanced technologies such as Big Data Analytics, AI, IoT, Augmented and Mixed Reality, and Blockchain, the platform focuses on providing affordable internet connectivity with content to match.

Bharti Airtel

Bharti Telecom, the promoter of Bharti Airtel, sold a 2.75% stake in the telecom operator in May 2020 for an estimated USD 1.15 billion to a healthy mix of long-only and hedge fund investors across Asia, Europe and the US. The promoter entity will use the proceeds of the stake sale to pare debt and become a “debt-free company”.

It was reported that Amazon was in early-stage talks to buy a stake worth USD 2 billion in Bharti Airtel. This translates to a 5% stake based on the telecom operator’s current market valuation. There have also been conversations about a possible commercial agreement under which Airtel would offer Amazon’s products at cheaper rates. However, Bharti Airtel has clarified that it works with digital and OTT platforms from time to time but has no other activity to report.
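The two Airtel figures can be cross-checked by dividing each price by the stake it buys. The first is an actual sale price and the second is a reported figure from talks, so this is only a consistency check. The helper name is illustrative.

```python
def implied_valuation_usd(price_usd: float, stake_fraction: float) -> float:
    """Valuation implied by paying `price_usd` for `stake_fraction` of a company."""
    if not 0 < stake_fraction <= 1:
        raise ValueError("stake_fraction must be in (0, 1]")
    return price_usd / stake_fraction


promoter_sale = implied_valuation_usd(1.15e9, 0.0275)  # about USD 41.8 billion
amazon_talks = implied_valuation_usd(2e9, 0.05)        # about USD 40 billion
```

Both figures point to a valuation of roughly USD 40-42 billion. This is consistent with the statement that USD 2 billion corresponds to about 5% at current market value.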

Airtel has also shared plans to integrate technology and telecom to build a digital platform to take on Jio’s ambitions of evolving into a tech and consumer company. To scale up its digital platforms business, Airtel has been betting on four pillars: data, distribution, payments, and network.

Bharti Airtel also announced that it has partnered with Verizon to launch the BlueJeans video-conferencing service in India, serving business customers in the world’s second-largest internet market. Airtel has an estimated 328 million subscribers as of 31 March 2020, making it the second-largest operator in the country.

The Third Player

Vodafone Idea Limited

Vodafone Idea has an estimated 319 million subscribers as of 31 March 2020, making it the third-largest telecom provider in the country. There were unconfirmed reports that Google had shown interest in Vodafone Idea, but these seem less relevant now given its investment in Jio.

The AGR case remains a significant factor for the telecom sector, particularly for Vodafone Idea, given its precarious financial position.

However, its ARPU is expected to rise by over 40%, from USD 1.23 to USD 1.88, through increased pricing. The stock market has responded positively, with Vodafone Idea’s share price almost doubling over the past month.

As organisations aim to maintain operations during the ongoing crisis, there has been a sharp increase in employees working from home and relying on Workplace of the Future technologies. In an ongoing Ecosystm study on Digital Priorities in the New Normal, 41% of organisations cited making remote working possible as a key measure introduced to combat current workplace challenges.

Ecosystm Principal Advisor, Audrey William says, “During the COVID-19 pandemic, people have become reliant on voice, video and collaboration tools and even when things go back to normal in the coming months, the blended way of work will be the norm. There has been a surge of video and collaboration technologies. The need to have good communication and collaboration tools whether at home or in the office has become a basic expectation especially when working from home. It has become non-negotiable.”

William also notes, “We are living in an ‘Experience Economy’ – if the user experience around voice, video and collaboration is poor, customers will find a platform that gives them the experience they like. To get that equation right is not easy and there is a lot of R&D, partnerships and user experience design involved.”

AWS and Slack Partnership

Amid a rapid increase in remote working requirements, AWS and Slack announced a multi-year partnership to collaborate on solutions for the Workplace of the Future. Slack users will be able to manage their AWS resources within Slack, and Slack will replace its own voice and video call features with Amazon Chime. AWS, in turn, will use Slack for its internal communication and collaboration.

Slack already uses AWS cloud infrastructure to support enterprise customers and has committed to spending USD 50 million a year with AWS over five years. The extended partnership, however, promises a new breed of solutions for the future workforce.

Slack and AWS are also planning to tightly integrate key features, such as AWS Key Management Service with Slack Enterprise Key Management (EKM) for better security and encryption; AWS Chatbot, to push notifications about AWS resources such as virtual machines to Slack users; and Amazon AppFlow, to secure data flows between Slack, Amazon S3 storage and the Amazon Redshift data warehouse.

The Competitive Landscape

The partnership with AWS has enabled Slack to scale and compete with more tools in its arsenal. The enterprise communication and collaboration market is heating up, with announcements such as Zoom ramping up its infrastructure on Oracle Cloud. The other major cloud platform players already have their own collaboration offerings: Microsoft Teams and Google Meet. The AWS-Slack announcement is another example of industry players looking to improve their offerings through partnership agreements. Slack is already integrated with a number of Microsoft services such as OneDrive, Outlook and SharePoint, and earlier this year there was talk of integration with Microsoft Teams. Similarly, Slack has integrated some G Suite tools on its platform.

“There is a battle going on now in the voice, video and collaboration space, and there are many players that offer rich enterprise-grade capabilities in this space. AWS is already Slack’s ‘preferred’ cloud infrastructure provider, and the two companies have a common rival in Microsoft, competing with its Azure and Teams products, respectively,” says William.

The Single Platform Approach

Competition in the video, voice and collaboration market is becoming increasingly intense, and the ideal is to offer users across all functions a single common platform. This explains why vendors have been adding capabilities to their offerings in recent months. For instance, Zoom added Zoom Phone to expand its offerings to users. Avaya released Spaces, an integrated cloud meeting and team collaboration solution with chat, voice, video, online meetings, and content sharing capabilities. The market also has Cisco as an established presence, providing video and voice solutions to many large organisations.

Organisations want an all-in-one platform for voice, video and collaboration where possible, as it makes management easier. Microsoft Teams is a single platform for enterprise communications and collaboration. William says, “Teams has seen steady uptake since its launch and for many IT managers the ability to capture all feedback, issues/logs on one platform is important. Other vendors are pushing the one vendor platform option heavily; for example, 8×8 has been able to secure wins in the market because of the one vendor platform push.”

“As the competition heats up, we can expect more acquisitions and partnerships in the communications and collaboration space, in an effort to provide all functions on a single platform,” says William. “However, irrespective of what IT Teams want, we are still seeing organisations use different platforms from multiple vendors. This is a clear indication that in the end there is only one benefit that organisations seek – quality of experience.”

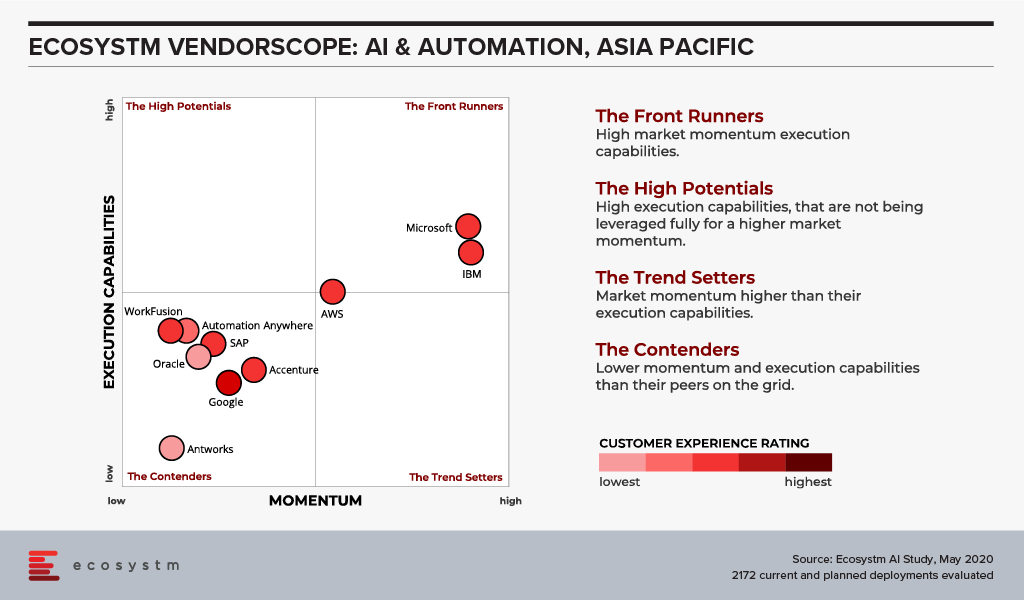

I’m really excited to launch our AI and Automation VendorScope! This new tool can help technology buyers understand which vendors are offering an exceptional customer experience, which ones have momentum and which are executing and delivering on their promised capabilities. The positioning of vendors in Ecosystm VendorScopes is free from analyst bias, analyst opinion and vendor influence: customers directly rate their suppliers in our ongoing market benchmarks and assessments.

The Evolution of the AI Market

The AI market has evolved significantly over the past few years. It has gone from a niche, poorly understood technology to a mainstream one. Projects have moved from large, complex, moonshot-style “change the world” initiatives to small, focused capabilities that look to deliver value quickly. They have also moved from primarily internally focused projects to delivering value to customers and partners. Even the current pandemic is changing the focus of AI projects: 38% of the companies we benchmarked in Asia Pacific in the Ecosystm Business Pulse Study are recalibrating their AI models for the significant change in trading conditions and customer circumstances.

Automation has changed too, from a heavily fragmented market with many specific (and often very simple) tools to comprehensive suites of automation capabilities. We are also beginning to see machine learning used within automation platforms as this market matures and pursues the bigger automation opportunities, where processes are not only simplified but removed altogether through intelligent automation.

Cloud Platform Providers Continue to Lead

But what has changed little over the years is the dominance of the big cloud providers as AI leaders. Microsoft Azure, IBM and AWS continue to dominate customer mentions and intentions. And it is in customer mentions that the frontrunners in the VendorScope, Microsoft and IBM, set themselves apart. Not only are they important players today, but existing customers AND non-customers plan to use their services over the next 12-24 months. This gives them market momentum over the other players. Even AWS and Google, the other two public cloud giants, which also have strong AI offerings, did not see the same proportion of customers and prospects planning to use their AI platforms and tools.

While Microsoft and IBM may have stolen the lead for now, they cannot expect the challengers to sit still. In the last few weeks alone we have seen several major launches of AI capabilities from some providers. And the Automation vendors are looking to new products and partnerships to take them forward.

Without the market momentum, Microsoft and IBM would still stand above the rest of the pack – just not as dramatically! Both companies offer not just the AI building blocks but also smart applications and services. This is possibly what sets them apart in an era where more and more customers want their applications to be smart out-of-the-box (or out-of-the-cloud). The appetite for long, expensive AI projects is waning – fast time to value will win deals today.

The biggest change in AI over the next few years will hopefully be more buyers demanding that their applications are smart out-of-the-box/cloud. AI and Automation shouldn’t be expensive add-ons – they should form the core of smart applications – applications that work for the business and for the customer. Applications that will deliver the next generation of employee and customer experiences.


The COVID-19 crisis has forced countries to implement work-from-home policies and lockdowns. Since the crisis hit, uptake of cloud communication and collaboration solutions has increased dramatically. Video conferencing provider Zoom has emerged as a key player in the market, with its user base rising from 10 million daily active participants in December 2019 to 200 million in March 2020, a twentyfold increase (growth of 1,900%)!
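Growth multiples and percentage increases are easy to conflate, so the participant counts above are worth checking directly. The helper below is a minimal illustrative sketch. It returns both the multiple and the percentage increase for a change between two values.

```python
def growth(before: float, after: float) -> tuple[float, float]:
    """Return (multiple, percentage increase) for a change from `before` to `after`."""
    if before <= 0:
        raise ValueError("before must be positive")
    multiple = after / before
    pct_increase = (after - before) / before * 100
    return multiple, pct_increase


multiple, pct = growth(10_000_000, 200_000_000)  # (20.0, 1900.0)
```

A 200% increase would mean tripling, from 10 million to 30 million. Going from 10 million to 200 million is twenty times the starting figure.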

Security Concerns around Zoom

The rapid growth in users and the surge in traffic have forced Zoom to re-evaluate its offerings and capacity. The platform was built primarily for enterprises and is now seeing unprecedented usage for team meetings, webinars, virtual conferences, e-learning, and social events.

Security is the area where Zoom has been hit hardest. In his report, Cybersecurity Considerations in the COVID-19 Era, Ecosystm Principal Advisor Andrew Milroy says, “The extraordinary growth of Zoom has made it a target for attackers. It has had to work remarkably hard to plug the security gaps identified by numerous breaches. Many security vulnerabilities have been discovered in Zoom, such as a UNC path injection vulnerability in the client chat feature, which allows hackers to steal Windows credentials; decryption keys kept in the cloud, where they can potentially be accessed by hackers; and the ability for trolls to ‘Zoombomb’ open and unprotected meetings.”
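UNC path injection works because a Windows path such as `\\host\share\file` pasted into chat was rendered as a clickable link. Clicking it can prompt Windows to contact the remote host and attempt authentication, exposing credential material. The sketch below is a hypothetical chat-rendering rule, not Zoom’s actual fix. It flags UNC-style paths and auto-links only `http(s)` URLs, an allow-list rather than a block-list approach. Both function names are illustrative.

```python
import re

# A UNC path starts with two backslashes, then a host name, then a share,
# e.g. \\host\share\path. Regex: \\\\ matches two literal backslashes.
UNC_PATH = re.compile(r"\\\\[^\\/\s]+\\[^\s]*")


def find_unc_paths(message: str) -> list[str]:
    """Return every UNC-style path in a chat message (for flagging or logging)."""
    return UNC_PATH.findall(message)


def linkable_tokens(message: str) -> list[str]:
    """Hypothetical rendering rule: only http(s) URLs become clickable links;
    everything else, including UNC paths, stays as plain text."""
    return [token for token in message.split()
            if token.startswith(("http://", "https://"))]


msg = r"see \\198.51.100.7\share\notes.txt or https://example.com/doc"
flagged = find_unc_paths(msg)
links = linkable_tokens(msg)
```

Allow-listing link schemes closes this whole class of issue, whereas trying to block specific path formats tends to miss variants.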

“Zoom largely responded to these disclosures quickly and transparently, and it has already patched many of the weaknesses highlighted by the security community. But it continues to receive rigorous stress testing by hackers, exposing more vulnerabilities.”

However, Milroy does not think that this issue is unique to Zoom. “Collaboration platforms tend to tread a fine line between performance and security. Too much security can cause performance and usability to be impacted negatively. Too little security, as we have seen, allows hackers to find vulnerabilities. If data privacy is critical for a meeting, then perhaps collaboration platforms should not be used, or organisations should not share critical information on them.”

Zoom to Increase Capacity and Scalability

Zoom is aware that it must increase the capacity and scalability of its service if it is to leverage its current market presence beyond the COVID-19 crisis. Last week Zoom announced that it had selected Oracle as its cloud infrastructure provider. One of the reasons cited for the choice is Oracle’s “industry-leading security”. It has been reported that Zoom is transferring more than 7 PB of data through Oracle Cloud Infrastructure servers daily.
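To put 7 PB a day in network terms, the sketch below converts a daily volume into an average rate in gigabits per second. It assumes decimal petabytes (10^15 bytes) and traffic spread evenly across the day. Real traffic peaks during working hours, so peak rates would be higher than this average.

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400


def average_gbps(bytes_per_day: float) -> float:
    """Average throughput in gigabits per second for a given daily volume."""
    return bytes_per_day * 8 / SECONDS_PER_DAY / 1e9


PETABYTE = 10**15  # decimal petabyte (assumption)
zoom_oci_avg = average_gbps(7 * PETABYTE)  # roughly 648 Gbit/s
```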

In addition to growing its own data centres, Zoom has been using AWS and Microsoft Azure as hosting providers. Milroy says, “It makes sense for Zoom to use another supplier rather than putting ‘all its eggs in one or two baskets’. Zoom has not shared the commercial details, but it is likely that Oracle has offered more predictable pricing. Also, the security offered by the Oracle Cloud Infrastructure deal is likely to have impacted the choice and it is likely that Oracle has also priced its security features very competitively.”

“It must also be borne in mind that Google, Microsoft and Amazon are all competing directly with Zoom. They all offer video collaboration platforms and like Zoom, are seeing huge growth in demand. Zoom may not wish to contribute to the growth of its competitors any more than it needs to.”

Milroy sees another benefit to using Oracle. “Oracle is known to have a presence in the government sector – especially in the US. Working with Oracle might make it easier for Zoom to win large government contracts, to consolidate its market presence.”


In The Top 5 Cloud Trends for 2020, Ecosystm Principal Analyst Claus Mortensen predicted that in 2020, cloud and IoT would drive edge computing.

“Edge computing has been widely touted as a necessary component of a viable 5G setup, as it offers a more cost-effective and lower latency option than a traditional infrastructure. Also, with IoT being a major part of the business case behind 5G, the number of connected devices and endpoints is set to explode in the coming years, potentially overloading an infrastructure based fully on centralised data centres for processing the data,” says Mortensen.
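Part of the latency argument is simple physics. Light in optical fibre travels at roughly 200,000 km/s, or about 200 km per millisecond, so distance alone sets a floor on round-trip time. The sketch below compares a hypothetical data centre 1,500 km away with a hypothetical edge site 20 km away. It counts propagation delay only and ignores routing, queuing and processing, which add further latency in practice.

```python
FIBRE_KM_PER_MS = 200.0  # light in glass fibre covers roughly 200 km per millisecond


def round_trip_propagation_ms(distance_km: float) -> float:
    """Minimum round-trip time over fibre due to distance alone."""
    if distance_km < 0:
        raise ValueError("distance cannot be negative")
    return 2 * distance_km / FIBRE_KM_PER_MS


central_dc_ms = round_trip_propagation_ms(1500)  # 15.0 ms (hypothetical distance)
edge_site_ms = round_trip_propagation_ms(20)     # 0.2 ms (hypothetical distance)
```

No amount of server-side optimisation can recover the distance penalty, which is why latency-sensitive 5G and IoT workloads push compute towards the edge.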

Although some are positioning the Edge as the ultimate replacement for the cloud, Mortensen believes it will be a complementary rather than a competing technology. “The more embedded major cloud providers like AWS and Microsoft can become with 5G providers, the better they can serve customers who want to access cloud resources via the mobile networks. This is especially compelling for customers who need very low latency access.”

Affirmed Networks Brings Microsoft to the 5G Infrastructure Table

Microsoft recently announced that it was in discussions to acquire Affirmed Networks, a provider of network functions virtualisation (NFV) software for telecom operators. The company’s customer base is impressive, with over 100 major telecom customers, including big names such as AT&T, Orange and Vodafone. Affirmed Networks’ recently appointed CEO, Anand Krishnamurthy, says that its virtualised, cloud-native network, Evolved Packet Core, allows for scale on demand with a range of automation capabilities, at 70% of the cost of traditional networks. The telecom industry has been steadily moving away from proprietary hardware-based infrastructure towards open, software-defined networking (SDN). The acquisition could allow Microsoft to leverage its Azure platform for 5G infrastructure and for cloud-based edge computing applications.

Ecosystm Principal Advisor, Shamir Amanullah says, “The telecommunications industry is suffering from a decline in traditional services leading to a concerted effort in reducing costs and introducing new digital services. To do this in preparation for 5G, carriers are working towards transforming their operations and business support systems to a more virtualised and software-defined infrastructure. 5G will be dynamic, more than ever before, for a number of reasons. 5G will operate across a range of frequencies and bands, with significantly more devices and connections, highly software-defined with computing power at the Edge.”

Microsoft is by no means the only tech giant exploring this space. Google recently announced a new solution, Anthos for Telecom, and a new service called the Global Mobile Edge Cloud (GMEC), aimed at giving telecom providers compute power at the Edge. At about the same time, HPE announced a new portfolio of as-a-service offerings to help telecom companies build and deploy open 5G networks. Late last year, AWS launched AWS Wavelength, which promises to bring compute and storage services to the edge of telecom providers’ 5G networks. Microsoft’s acquisition of Affirmed Networks brings it to the 5G infrastructure table.

Microsoft Continues to Focus on 5G Offerings

The acquisition of Affirmed Networks is not Microsoft’s only initiative to improve its 5G offerings. Last week Microsoft also announced Azure Edge Zones, aimed at reducing latency for both public and private networks. AT&T is a good example of how public carriers will use Azure Edge Zones. As part of its ongoing partnership with Microsoft, AT&T has already launched a Dallas Edge Zone, with another planned for Los Angeles later in the year. Microsoft also intends to offer Azure Edge Zones in denser areas independently of carriers. It also launched Azure Private Edge Zones for private enterprise networks, suited to delivering ultra-low-latency performance for IoT devices.

5G will remain a key area of focus for cloud and software giants. Amanullah sees this trend as a challenge to infrastructure providers such as Huawei, Ericsson and Nokia. “History has shown how these larger software providers can be fast, nimble, innovative, and extremely customer-centric. Current infrastructure providers should not take this challenge lightly.”

Talking about the top 5 global cloud players (Microsoft, AWS, Google, Alibaba and IBM) in Ecosystm Predicts: The Top 5 Cloud Trends for 2020, Ecosystm Principal Analyst Claus Mortensen had said, “their ability to compete will increasingly come down to expansion of their service capabilities beyond their current offerings. Ecosystm expects these players to further enhance their focus on expanding their services, management and integration capabilities through global and in-country partnerships.” Google Cloud is doing just that. The last week has been busy for Google Cloud, with several announcements showing that it is ramping up and adding both depth and breadth to its portfolio.

Expanding Data Centre Footprint

This year Google Cloud is set to expand its locations to 26 countries. Earlier in the year, Google CEO Sundar Pichai promised to invest more than USD 10 billion in expanding Google’s data centre footprint in the US, and the company has recently opened its Salt Lake City data centre. Last week Google announced four new data centre locations: Doha (Qatar), Toronto (Canada), Melbourne (Australia) and Delhi (India). With Australia, Canada and India, Google appears to be following the same policy it followed in Japan, where locations in Osaka and Tokyo give customers the option of an in-country disaster recovery solution. Doha marks Google Cloud’s first foray into the Middle East. While the data centre will primarily cater to global clients, Google has noted substantial interest from customers in the Middle East and Africa.
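The value of a second location in the same country is that disaster recovery (DR) does not have to move data across a border. The minimal sketch below picks an in-country DR partner from an illustrative catalogue of cloud locations. The catalogue is an assumption for illustration, not an exhaustive or authoritative list of Google Cloud regions. The function returns `None` where a country has only one location and DR would therefore leave the country.

```python
# Illustrative catalogue of cloud locations (city -> country); not authoritative.
LOCATIONS = {
    "Tokyo": "Japan", "Osaka": "Japan",
    "Sydney": "Australia", "Melbourne": "Australia",
    "Montreal": "Canada", "Toronto": "Canada",
    "Mumbai": "India", "Delhi": "India",
    "Doha": "Qatar",
}


def in_country_dr_pair(primary: str, locations: dict = LOCATIONS):
    """Return another location in the same country as `primary`,
    or None if keeping DR in-country is not possible."""
    country = locations[primary]
    for city, city_country in locations.items():
        if city_country == country and city != primary:
            return city
    return None


tokyo_dr = in_country_dr_pair("Tokyo")  # "Osaka"
doha_dr = in_country_dr_pair("Doha")    # None: single location in Qatar
```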

Mortensen says, “Google’s new data centres can be seen as an organic geographical expansion of their cloud services but there are a few more factors at play. With data privacy laws getting stricter across the globe, the ability to offer localised data storage is becoming more important – and India is a very good example of a market where keeping data within the geographical borders will become a must.”

“The expansion will also help the development of Google’s own edge computing services going forward. As we noted in our Ecosystm Predicts document, we believe that Cloud and IoT will drive edge computing (which is tightly tied to 5G). Edge computing will function in a symbiotic relationship with centralised data centres where low latency is important. The geographical expansion of Google’s data centre presence will thus also help their push towards edge computing services.”

Google offers its cloud infrastructure and digital transformation (DX) solutions to customers in 150 countries. Not only is it expanding its data centre footprint, it is also creating industry differentiation, with targeted industry-specific solutions that deliver new digital capabilities in six key verticals: financial services; telecommunications, media and entertainment; retail; healthcare and life sciences; manufacturing and industrial; and the public sector.

Partnering with Telecom Providers

Last week also saw the unveiling of the Global Mobile Edge Cloud (GMEC), aimed at the telecom industry’s need to transform and the challenges it faces. The telecom industry, long considered an enabler of DX in other industries, now stands at a crossroads. It must transform to succeed in the face of a challenging market, new devices and networking capabilities, and evolving consumer and enterprise requirements. Talking about the impact of 5G on telecom providers, Ecosystm Principal Advisor Shamir Amanullah says, “5G is an enterprise play and leading tech giants, carriers and the companies in the ecosystem are collaborating and inking partnerships in order to create solutions and monetise 5G opportunities across industries.”

Google Cloud announced a partnership with AT&T, which is meant to leverage AT&T’s 5G network and Google Cloud’s edge compute technologies (AI and machine learning, analytics, Kubernetes and networking) to develop a joint portfolio of 5G edge computing solutions. This is part of Google’s larger strategy of supporting telecom providers in their efforts to monetise 5G as a business services platform. Through the GMEC, Google Cloud will partner with carriers to offer a suite of applications and services at the edge via 5G networks.

The telecom industry is a key focus as Google aims to help operators take 5G to market, by creating solutions and services that can be offered to enterprises. This includes better customer engagement through data-driven experiences, and improvement of operational efficiencies across core telecom systems. Telecom providers such as Vodafone and Wind Tre are leveraging Google to improve customer experience through data-driven insights.

Amanullah says, “Google Cloud already has thousands of edge nodes inside the carrier networks which will be enabled for use by enterprises, providing access to data analytics, AI and machine learning capabilities. Carriers can offer enterprises these data-driven solutions, to transform the customer experience they offer. Google will also create solutions which will enable carriers and enterprises to improve infrastructure and operational efficiencies through modern cloud-based Operations Support Systems (OSS) and Business Support Systems (BSS).”

Mortensen also thinks that the data centre expansion should be seen in the light of Google’s GMEC push. “Both India and the Middle East are big potential markets via the local telecom providers.”

Two things happened recently that 99% of the ICT world would normally miss. After all, microprocessor and chip interconnect technology is a rather geeky area that most of us don’t venture into. So why would I want to bring this to your attention?

We are excited about the innovation that analytics, machine learning (ML) and all things real-time processing will bring to our lives and the way we run our businesses. The data center, be it on an enterprise’s premises or on a cloud service provider’s infrastructure, is under pressure to meet the compute, memory, input/output (I/O) and storage requirements needed to take advantage of what hardware engineers call ‘accelerators’. In its simplest form, an accelerator is a microprocessor that does the specialty work for ML and analytics algorithms, while the main microprocessor holds everything else together and keeps all of the silicon parts in sync. If an ML accelerator is too fast with its answers, it will sit and wait for everyone else while its outcomes are squeezed down a narrow, slow pipe or interconnect – in other words, the servers in the data center are not optimized for these workloads. The connection between the accelerators and the main components becomes the slowest and weakest link. So now, back to the news of the day.
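To make the bottleneck concrete, here is a minimal back-of-the-envelope sketch in Python. It assumes a deliberately simple model in which the accelerator must wait for its results to cross the interconnect before it can start its next batch (no overlap of compute and transfer). The function name and every number in it are hypothetical and purely illustrative; they are not benchmarks of any real accelerator or link.

```python
# Illustrative sketch only: all figures are hypothetical, not measurements
# of any real accelerator, CPU or interconnect.

def accelerator_utilization(compute_s: float, data_gb: float, link_gb_per_s: float) -> float:
    """Fraction of time the accelerator spends computing rather than waiting.

    Assumes a simple serial model: the accelerator cannot start its next batch
    until its results have been moved across the link.
    """
    transfer_s = data_gb / link_gb_per_s  # time spent pushing results down the "pipe"
    return compute_s / (compute_s + transfer_s)


# Hypothetical batch: 0.01 s of accelerator compute producing 1 GB of results.
for link in (1.0, 16.0, 64.0):  # hypothetical link bandwidths in GB/s
    u = accelerator_utilization(compute_s=0.01, data_gb=1.0, link_gb_per_s=link)
    print(f"{link:>5.0f} GB/s link -> accelerator busy {u:.0%} of the time")
```

Under these assumed figures the accelerator is busy roughly 1%, 14% and 39% of the time respectively: the slower the link, the longer the expensive accelerator sits idle. Real systems overlap transfers with computation, so actual numbers will differ, but the direction of the effect is the point.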

A new high-speed CPU-to-device interconnect standard, Compute Express Link (CXL) 1.0, was announced by Intel and a consortium of leading technology companies (Huawei and Cisco in the network infrastructure space, HPE and Dell EMC in the server hardware market, and Alibaba, Facebook, Google and Microsoft in the cloud services provider market). CXL joins a crowded field of standards already in the server link market, including CAPI, NVLink, Gen-Z and CCIX. CXL is being positioned to improve the performance of the links between CPUs and accelerators such as FPGAs and GPUs, the accelerators most commonly involved in ML-type workloads.

Of course, some names were absent from the launch – Arm, AMD, Nvidia, IBM, Amazon and Baidu. Each of them is a member of one or more of the other standards bodies, and they are probably playing the waiting game.

Now let’s pause for a moment and look at the other announcement that happened at the same time. Nvidia and Mellanox announced that the two companies had reached a definitive agreement under which Nvidia will acquire Mellanox for $6.9 billion. Nvidia explained its reasons for the acquisition as follows: “The data and compute intensity of modern workloads in AI, scientific computing and data analytics is growing exponentially and has put enormous performance demands on hyperscale and enterprise datacenters. While computing demand is surging, CPU performance advances are slowing as Moore’s law has ended. This has led to the adoption of accelerated computing with Nvidia GPUs and Mellanox’s intelligent networking solutions.”

So it seems to me that, despite having worked on CXL for four years, Intel might have been outbid by Nvidia for Mellanox. Mellanox has been around for 20 years and has been the major supplier of InfiniBand, a high-speed interconnect that is common in high-performance workloads and very well accepted by the HPC industry. (Note: Intel was also one of the founders of the InfiniBand Trade Association (IBTA) before it opted to refocus on the PCI bus.) With the growing need for fast links between accelerators and microprocessors, it would seem that Mellanox’s persistence has paid off and the market is now coming to it. One can’t help but think that as soon as Intel knew Nvidia was getting Mellanox, it pushed forward with the CXL announcement – speculation that none of the parties has responded to.

Advice for Tech Suppliers:

The two announcements are great news for any vendor entering the compute-intensive world of AI, which relies on graphics processing and floating-point arithmetic. As more digital solutions call for analytics-based outcomes, there will be growing demand for broader, commoditized server platforms to support them. For now, tech suppliers should avoid backing either CXL or InfiniBand until we see how the CXL standard evolves and how Nvidia integrates Mellanox.

Advice for Tech Users:

These two announcements reflect innovation that is generally so far removed from the end user that it can go unnoticed. However, think about how USB (Universal Serial Bus) has changed the way we connect devices to our laptops, servers and mobile devices. The same will be true for this connection, as accelerators increasingly read data and generate the outcomes that shape the way we drive our cars, digitize our factories, run our hospitals and search the Internet. Innovation in this space just got a shot in the arm from these two announcements.