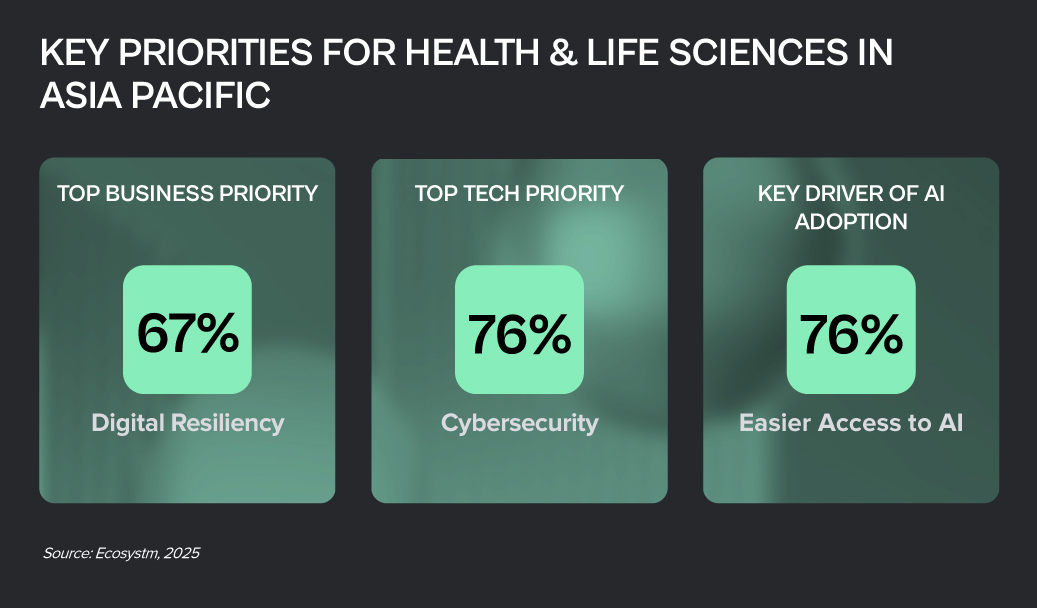

Advanced technology and the growing interconnectedness of devices are no longer futuristic concepts in health and life sciences – they’re driving a powerful transformation. Technology, combined with societal demands, is reshaping drug discovery, clinical trials, patient care, and even our understanding of the human body.

The potential to create more efficient, personalised, and effective healthcare solutions has never been greater.


Modernising HR for Enhanced Efficiency & Employee Experience

The National Healthcare Group (NHG), a leading public healthcare provider in Singapore, recognised the need to modernise their HR system to better support 20,000+ healthcare professionals and improve patient services.

The iConnect@NHG initiative was launched to centralise HR functions, providing mobile access and self-service capabilities, and streamlining workflows across NHG’s integrated network of hospitals, polyclinics, and specialty centres.

The solution streamlined HR processes, giving employees easy access to essential data, career tools, and claims. The cloud-based platform improved data accuracy, reduced admin work, and integrated analytics for better decision-making and engagement. With 95% adoption, productivity and job satisfaction surged, enabling staff to focus on care delivery.

Automating Workflows for Better Patient Outcomes

Gold Coast Health handles a high volume of patient interactions across a wide range of medical services. The challenge was to streamline operations and reduce administrative burdens to improve patient care.

The solution involved automating the patient intake process by replacing paper forms with electronic versions, freeing up significant staff time.

A new clinical imaging solution also automates the uploading of wound images and descriptions into patient records, further saving time. Additionally, Gold Coast Health implemented a Discharge to Reassess system to automate follow-ups for long-term outpatient care. They are also exploring AI to simplify tasks and improve access to information, allowing clinical teams to focus more on patient care.

Streamlining Operations, Improving Care

IHH Healthcare, a global provider with over 80 hospitals across 10 countries, faced a fragmented IT landscape that hindered data management and patient care.

To resolve this, IHH migrated their core application workloads, including EMRs, to a next-gen cloud platform, unifying data across their network and enhancing analytics.

Additionally, they adopted an on-prem cloud solution to comply with local data residency requirements. This transformation reduced report generation time from days to hours, boosting operational efficiency and improving patient and clinician experiences. By leveraging advanced cloud technologies, IHH is strengthening their commitment to delivering world-class healthcare.

Creating Seamless & Compassionate Patient Journeys

The Narayana Health group in India is committed to providing accessible, high-quality care. However, they faced challenges with fragmented patient data, which hindered personalised care and efficient interactions.

To address this, Narayana Health centralised patient data, providing agents with a 360-degree view to offer more informed and compassionate service.

By automating tasks like call routing and form-filling, the organisation reduced average handling times and increased appointment conversions. Additionally, automated communication tools delivered timely, sensitive updates, strengthening patient relationships. The initiative has improved operational efficiency and deepened the organisation’s patient-centric focus.

Reimagining Location Services for Digital Healthcare

Halodoc, a leading digital health platform in Indonesia, connects millions of users with healthcare professionals and pharmacies.

To improve key services like home lab appointments and medicine delivery, Halodoc sought a more cost-effective and secure location service.

The transition resulted in an 88% reduction in costs for geocoding and places functionalities while enhancing data security. With better performance monitoring, Halodoc processed millions of geocoding and place requests with no major issues. This migration not only optimised costs but also resolved long-standing technical challenges, positioning Halodoc for future innovation, including machine learning and AI. The move strengthened their data security and provided a solid foundation for continued growth and high-quality healthcare delivery across Indonesia.

Driving Efficiency & Accessibility through Integrated Systems

Lupin, a global pharma leader, aimed to boost patient care, streamline operations, and enhance accessibility. By integrating systems and centralising data, Lupin wanted seamless interactions between patients, doctors, and the salesforce.

The company implemented a scalable infrastructure optimised for critical business applications, backed by high-performance server and storage technologies.

This integration improved data-driven decision-making, leading to optimised operations, reduced costs, and improved medicine quality and affordability. The robust infrastructure also ensured near-zero downtime, enhancing reliability and efficiency. Through this transformation, Lupin reinforced its commitment to providing patient-centred, affordable healthcare with faster, more efficient outcomes.

Leveraging AI for Cloud Security

Mitsubishi Tanabe Pharma’s “VISION 30” seeks to deliver personalised healthcare by 2030, focusing on precision medicine and digital solutions. The company is investing in advanced digital technologies and secure data infrastructure to achieve these goals.

To secure their expanding cloud platform, the company adopted a zero-trust model and enhanced identity management.

A security assessment identified gaps in cloud configuration, prompting tailored security improvements. GenAI was introduced to translate and summarise security alerts, reducing processing time from 10 minutes to just one minute, improving efficiency and security awareness across the team. The company is actively exploring further AI-driven solutions to strengthen security and drive their digital transformation, advancing the vision for personalised healthcare.

AI has become a battleground for geopolitical competition, national resilience, and societal transformation. The stakes are no longer theoretical, and the window for action is closing fast.

In March, the U.S. escalated its efforts to shape the global technology landscape by expanding export controls on advanced AI and semiconductor technologies. Over 80 entities – more than 50 in China – were added to the export blacklist, aiming to regulate access to critical technologies. The move seeks to limit the development of high-performance computing, quantum technologies, and AI in certain regions, citing national security concerns.

As these export controls tighten, reports have surfaced of restricted chips entering China through unofficial channels, including e-commerce platforms. U.S. authorities are working to close these gaps by sanctioning new entities attempting to circumvent the restrictions. The Department of Commerce’s Bureau of Industry and Security (BIS) is also pushing for stricter Know Your Customer (KYC) regulations for cloud service providers to limit unauthorised access to GPU resources across the Asia Pacific region.

Geopolitics & the Pursuit of AI Dominance

Bipartisan consensus has emerged in Washington around the idea that leading in artificial general intelligence (AGI) is a national security imperative. If AI is destined to shape the future balance of power, the U.S. government believes it cannot afford to fall behind. This mindset has accelerated an arms-race dynamic reminiscent of the Thucydides Trap, where the fear of being overtaken compels both sides to push ahead, even if alignment and safety mechanisms are not yet in place.

China has built extensive domestic surveillance infrastructure and has access to large volumes of data that would be difficult to collect under the regulatory frameworks of many other countries. Meanwhile, major U.S. social media platforms can refine their AI models using behavioural data from a broad global user base. AI is poised to enhance governments’ ability to monitor compliance and enforce laws that were written before the digital age – laws that previously assumed enforcement would be limited by practical constraints. This raises important questions about how civil liberties may evolve when technological limitations are no longer a barrier to enforcement.

The Digital Battlefield

Cybersecurity Threat. AI is both a shield and a sword in cybersecurity. We are entering an era of algorithm-versus-algorithm warfare, where AI’s speed and adaptability will dictate who stays secure and who gets compromised. Nations are prioritising AI for cyber defence to stay ahead of state actors using AI for attacks. For example, the DARPA AI Cyber Challenge is funding tools that use AI to identify and patch vulnerabilities in real time, a capability that is essential for defending against state-sponsored threats.

Yet, a key vulnerability exists within AI labs themselves. Many of these organisations, though responsible for cutting-edge models, operate more like startups than defence institutions. This results in informal knowledge sharing, inconsistent security standards, and minimal government oversight. Despite their strategic importance, these labs lack the same protections and regulations as traditional military research facilities.

High-Risk Domains and the Proliferation of Harm. AI’s impact on high-risk domains like biotechnology and autonomous systems is raising alarms. Advanced AI tools could lower the barriers for small groups or even individuals to misuse biological data. As Anthropic CEO Dario Amodei warns, “AI will vastly increase the number of people who can cause catastrophic harm.”

This urgency for oversight mirrors past technological revolutions. The rise of nuclear technology prompted global treaties and safety protocols, and the expansion of railroads drove innovations like block signalling and standardised gauges. With AI’s rapid progression, similar safety measures must be adopted quickly.

Meanwhile, AI-driven autonomous systems are growing in military applications. Drones equipped with AI for real-time navigation and target identification are increasingly deployed in conflict zones, especially where traditional systems like GPS are compromised. While these technologies promise faster, more precise operations, they also raise critical ethical questions about decision-making, accountability, and latency.

The 2024 National Security Memorandum on AI laid down initial guidelines for responsible AI use in defence. However, significant challenges remain around enforcement, transparency, and international cooperation.

AI for Intelligence and Satellite Analysis. AI also holds significant potential for national intelligence. Governments collect massive volumes of satellite imagery daily – far more than human analysts can process alone. AI models trained on geospatial data can greatly enhance the ability to detect movement, monitor infrastructure, and improve border security. Companies like ICEYE and Satellogic are advancing their computer vision capabilities to increase image processing efficiency and scale. As AI systems improve at identifying patterns and anomalies, each satellite image becomes increasingly valuable. This could drive a new era of digital intelligence, where AI capabilities become as critical as the satellites themselves.

Policy, Power, and AI Sovereignty

Around the world, governments are waking up to the importance of AI sovereignty – ensuring that critical capabilities, infrastructure, and expertise remain within national borders. In Europe, France has backed Mistral AI as a homegrown alternative to US tech giants, part of a wider ambition to reduce dependency and assert digital independence. In China, DeepSeek has gained attention for developing competitive LLMs using relatively modest compute resources, highlighting the country’s determination to lead without relying on foreign technologies.

These moves reflect a growing recognition that in the AI age, sovereignty doesn’t just mean political control – it also means control over compute, data, and talent.

In the US, the public sector is working to balance oversight with fostering innovation. Unlike the internet, the space program, or the Manhattan Project, the AI revolution was primarily initiated by the private sector, with limited state involvement. This has left the public sector in a reactive position, struggling to keep up. Government processes are inherently slow, with legislation, interagency reviews, and procurement cycles often lagging behind rapid technological developments. While major AI breakthroughs can happen within months, regulatory responses may take years.

To address this gap, efforts have been made to establish institutions like the AI Safety Institute and to require labs to share their internal safety evaluations. Since then, however, there has been a movement to reduce the regulatory burden on the AI sector, emphasising the importance of supporting innovation over excessive caution.

A key challenge is the need to build both policy frameworks and physical infrastructure in tandem. Advanced AI models require significant computational resources, and by extension, large amounts of energy. As countries like the US and China compete to be at the forefront of AI innovation, ensuring a reliable energy supply for AI infrastructure becomes crucial.

If data centres cannot scale quickly or if clean energy becomes too expensive, there is a risk that AI infrastructure could migrate to countries with fewer regulations and lower energy costs. Some nations are already offering incentives to attract these capabilities, raising concerns about the long-term security of critical systems. Governments will need to carefully balance sovereignty over AI infrastructure with the development of sufficient domestic electricity generation capacity, all while meeting sustainability goals. Without strong partnerships and more flexible policy mechanisms, countries may risk ceding both innovation and governance to private actors.

What Lies Ahead

AI is no longer an emerging trend – it is a cornerstone of national power. It will shape not only who leads in innovation but also who sets the rules of global engagement: in cyber conflict, intelligence gathering, economic dominance, and military deterrence. The challenge governments face is twofold. First, to maintain strategic advantage, they must ensure that AI development – across private labs, defence systems, and public infrastructure – remains both competitive and secure. Second, they must achieve this while safeguarding democratic values and civil liberties, which are often the first to erode under unchecked surveillance and automation.

This isn’t just about faster processors or smarter algorithms. It’s about determining who defines the future – how decisions are made, who has oversight, and what values are embedded in the systems that will govern our lives.

The education sector is evolving rapidly, driven by technological innovation and shifting societal needs. This transformation extends beyond digitisation, requiring a fundamental rethink of how students and employees engage. AI-driven personalisation, immersive virtual environments, and data analytics are reshaping curricula, teaching strategies, and operational efficiency.

Here are recent examples of transformation across the Asia Pacific.


Streamlining Service Delivery

Griffith University struggled with fragmented systems and siloed information, leading to inconsistent service and inefficiencies. Managing support for over 45,000 students became unsustainable, demanding a streamlined solution.

By adopting an enterprise service management platform, Griffith consolidated multiple portals into a single system, automating ticketing, request management, and AI-driven self-service.

Starting with library services, the transformation expanded across IT, HR, legal, and other functions, improving accessibility and collaboration. The impact was immediate: self-service surged by 87%, first-contact resolution jumped by 43%, and incident resolution time dropped by 25%. Call volume fell 31% and email inquiries 46%. Now scaling the platform university-wide, Griffith is streamlining service for students and staff.

AI for Recruitment & Content

The Indian Institute of Hotel Management (IIHM) sought to improve recruitment efficiency and enhance educational content creation. Manual hiring processes were slow and inconsistent, while developing high-quality learning materials was resource-intensive.

IIHM implemented an AI-driven platform to automate candidate assessments and generate accurate, engaging educational content.

This transformation cut interview times by half, improved hiring precision to 90%, and boosted student job placements by up to 30%. AI-generated materials reached 95% accuracy, creating a more effective learning experience. With stronger recruitment and enriched education, IIHM continues to reinforce its leadership in hospitality training.

AI-Accelerated Research

La Trobe University sought to harness GenAI to streamline research operations and accelerate market entry. Researchers faced challenges in accessing university-approved knowledge efficiently, while limited development capabilities slowed the commercialisation of research findings.

By implementing a retrieval-augmented generation (RAG) system, La Trobe enabled rapid, AI-powered access to research data, initially tested on autism studies.

Simultaneously, the university co-developed an AI-driven application to transform research into market-ready solutions faster. AI-driven development reduced time from months to weeks, with core components built in under a week. By leveraging in-house AI tools, La Trobe achieved an 8.7x cost reduction compared to outsourcing. This initiative positioned the university as a leader in AI-driven innovation, bridging the gap between academia and industry.

AI-Driven Personalisation

BINUS University aimed to future-proof its operations and student learning experiences. With GenAI reshaping education, the university sought to integrate AI into administration and teaching to boost efficiency and deliver adaptive, personalised learning.

BINUS has integrated AI across key areas, driving efficiency and personalisation.

AI-powered student intake predictions have reached 90% accuracy, optimising resource allocation across 14 campuses. GenAI automates Diploma Supplement Document (DPI) creation, reducing manual effort and improving accuracy. AI enhances the library system with personalised book recommendations and powers the AI Tutor for faster, tailored academic feedback. AI-driven language learning platforms further boost student engagement.

Unified Digital Workflows

Western Sydney University (WSU) faced inefficiencies from over 32 shared email addresses and paper-based forms, causing delays, poor inquiry tracking, and complicated administration – hindering timely, effective support.

WSU launched WesternNow to replace outdated systems with a unified digital platform, streamlining service requests, enhancing case tracking, cutting manual processes, and improving the user experience for students and staff.

This made WSU’s service delivery more responsive and efficient, cutting request logging time from over 4 minutes to seconds. Staff tracked and resolved cases seamlessly without sifting through emails. Workflow digitisation eliminated most paper forms, saving time and resources, while consolidating forms into services reduced their number by 40%.

The tech industry is experiencing a strategic convergence of AI, data management, and cybersecurity, driving a surge in major M&A activity. As enterprises tackle digital transformation, these three pillars are at the forefront, accelerating the race to acquire and integrate critical technologies.

Here are this year’s key consolidation moves, showcasing how leading tech companies are positioning themselves to capitalise on the rising demand for AI-driven solutions, robust data infrastructure, and enhanced cybersecurity.

AI Convergence: Architecting the Intelligent Enterprise

From customer service to supply chain management, AI is being deployed across the entire enterprise value chain. This widespread demand for AI solutions is creating a dynamic M&A market, with tech companies acquiring specialised AI capabilities.

IBM’s AI Power Play

IBM’s acquisitions of HashiCorp and DataStax mark a decisive step in its push to lead enterprise AI and hybrid cloud. The USD 6.4B HashiCorp deal, finalised this year, brings Terraform, a top-tier infrastructure-as-code tool that streamlines multi-cloud deployments and is key to integrating IBM’s Red Hat OpenShift and Watsonx AI. Embedding Terraform enhances automation, making hybrid cloud infrastructure more efficient and AI-ready.

The DataStax acquisition strengthens IBM’s AI data strategy. With AstraDB and Apache Cassandra, IBM gains scalable NoSQL solutions for AI workloads, while Langflow simplifies AI app development. Together, these moves position IBM as an end-to-end AI and cloud powerhouse, offering enterprises seamless automation, data management, and AI deployment at scale.

MongoDB’s RAG Focus

MongoDB’s USD 220M acquisition of Voyage AI signals a strategic push toward enhancing AI reliability. At the core of this move is retrieval-augmented generation (RAG), a technology that curbs AI hallucinations by grounding responses in accurate, relevant data.

By integrating Voyage AI into its Atlas cloud database, MongoDB is making AI applications more trustworthy and reducing the complexity of RAG implementations. Enterprises can now build AI-driven solutions directly within their database, streamlining development while improving accuracy. This move consolidates MongoDB’s role as a key player in enterprise AI, offering both scalable data management and built-in AI reliability.
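To make the grounding idea concrete, here is a minimal sketch of the retrieval step in RAG. It is illustrative only: the sample documents, the function names, and the bag-of-words similarity are assumptions made for this example, and it does not use the MongoDB Atlas or Voyage AI APIs. A production system would typically use learned embeddings and a vector index, then pass the resulting prompt to a language model.

```python
import math
import re
from collections import Counter

# Hypothetical mini knowledge base (an assumption for illustration only).
DOCUMENTS = [
    "Refunds are processed within 14 days of the return being received.",
    "Support is available on weekdays between 9am and 5pm.",
    "Premium accounts include priority support and extended storage.",
]


def vectorize(text):
    """Turn text into a bag-of-words vector (word -> count)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(count * b[term] for term, count in a.items() if term in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query, documents, k=1):
    """Return the k documents most similar to the query."""
    query_vec = vectorize(query)
    ranked = sorted(
        documents,
        key=lambda doc: cosine(query_vec, vectorize(doc)),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(query, documents, k=1):
    """Build a prompt that instructs a model to answer from retrieved context."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return (
        "Answer using only the context below. "
        "If the answer is not in the context, say so.\n"
        f"Context:\n{context}\n"
        f"Question: {query}"
    )


if __name__ == "__main__":
    print(build_prompt("How long do refunds take?", DOCUMENTS))
```

The model only sees passages that were retrieved for the question, which is what limits its room to invent an answer. Swapping the toy similarity function for an embedding model changes the quality of retrieval, not the overall shape of the pipeline.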

Google’s USD 1B Bet on Anthropic

Google’s continued investment in Anthropic reinforces its commitment to foundation model innovation and the evolving GenAI landscape. More than a financial move, this signals Google’s intent to shape the future of AI by backing one of the field’s most promising players.

This investment aligns with a growing trend among cloud giants securing stakes in foundation model developers to drive AI advancements. By deepening ties with Anthropic, Google not only gains access to cutting-edge AI research but also strengthens its position in developing safe, scalable, and enterprise-ready AI. This solidifies Google’s long-term AI strategy, ensuring its leadership in GenAI while seamlessly integrating these capabilities into its cloud ecosystem.

ServiceNow’s AI Automation Expansion

ServiceNow’s USD 2.9B acquisition of Moveworks, completed this year, marks a decisive push into AI-driven service desk automation. This goes beyond feature expansion: it redefines enterprise support operations by embedding intelligent automation into workflows, reducing resolution times, and enhancing employee productivity.

The acquisition reflects a growing shift: AI-powered service management is no longer optional but essential. Moveworks’ AI-driven capabilities – natural language understanding, machine learning, and automated issue resolution – will enable ServiceNow to deliver a smarter, more proactive support experience. Additionally, gaining Moveworks’ customer base strengthens ServiceNow’s market reach.

Data Acquisition Surge: Fuelling Digital Transformation

Data has transcended its role as a byproduct of operations, becoming the lifeblood that fuels digital transformation. This fundamental shift has triggered a surge in strategic acquisitions focused on enhancing data management and storage capabilities.

Lenovo Scaling Enterprise Storage

Lenovo’s USD 2B acquisition of Infinidat strengthens its position in enterprise storage as data demands surge. Infinidat’s AI-driven InfiniBox delivers high-performance, low-latency storage for AI, analytics, and HPC, while InfiniGuard ensures advanced data protection.

By integrating these technologies, Lenovo expands its hybrid cloud offerings, challenging Dell and NetApp while reinforcing its vision as a full-stack data infrastructure provider.

Databricks Streamlining Data Warehouse Migrations

Databricks’ acquisition of BladeBridge accelerates data warehouse migrations with AI-driven automation, reducing manual effort and errors when migrating from legacy platforms like Snowflake and Teradata. BladeBridge’s technology enhances Databricks’ SQL platform, simplifying the transition to modern data ecosystems.

This strengthens Databricks’ Data Intelligence Platform, boosting its appeal by enabling faster, more efficient enterprise data consolidation and supporting rapid adoption of data-driven initiatives.

Cybersecurity Consolidation: Fortifying the Digital Fortress

The escalating sophistication of cyber threats has transformed cybersecurity from a reactive measure to a strategic imperative. This has fuelled a surge in M&A aimed at building comprehensive and integrated security solutions.

Turn/River Capital’s Security Acquisition

Turn/River Capital’s USD 4.4B acquisition of SolarWinds underscores the enduring demand for robust IT service management and security software. This acquisition is a testament to the essential role SolarWinds plays in enterprise IT infrastructure, even in the face of past security breaches.

This is a bold investment given that history, and it highlights a fundamental truth: the need for reliable security solutions outweighs even the most public of past failings. Investors are willing to make long-term bets on companies that provide core security services.

Sophos Expanding Managed Detection & Response Capabilities

Sophos’ completed acquisition of Secureworks for USD 859M significantly strengthens its managed detection and response (MDR) capabilities, positioning Sophos as a major player in the MDR market. This consolidation reflects the growing demand for comprehensive cybersecurity solutions that offer proactive threat detection and rapid incident response.

By integrating Secureworks’ XDR products, Sophos enhances its ability to provide end-to-end protection for its customers, addressing the evolving threat landscape with advanced security technologies.

Cisco’s Security Portfolio Expansion

Cisco completed its acquisition of SnapAttack, further expanding its security business and building on its earlier USD 28B acquisition of Splunk. This move signifies Cisco’s commitment to creating a comprehensive security portfolio that can address the diverse needs of its enterprise customers.

By integrating SnapAttack’s threat detection capabilities, Cisco strengthens its ability to provide proactive threat intelligence and incident response, solidifying its position as a leading provider of security solutions.

Google’s Cloud Security Reinforcement

Google’s strategic acquisition of Wiz, a leading cloud security company, for USD 32B demonstrates its commitment to securing cloud-native environments. Wiz’s expertise in proactive threat detection and remediation will significantly enhance Google Cloud’s security offerings. This move is particularly crucial as organisations increasingly migrate their workloads to the cloud.

By integrating Wiz’s capabilities, Google aims to provide its customers with a robust security framework that can protect their cloud-based assets from sophisticated cyber threats. This acquisition positions Google as a stronger competitor in the cloud security market, reinforcing its commitment to enterprise-grade cybersecurity.

The Way Ahead

The M&A trends of 2025 underscore the critical role of AI, data, and security in shaping the technology landscape. Companies that prioritise these core areas will be best positioned for long-term success. Strategic acquisitions, when executed with foresight and agility, will serve as essential catalysts for navigating the complexities of the evolving digital world.

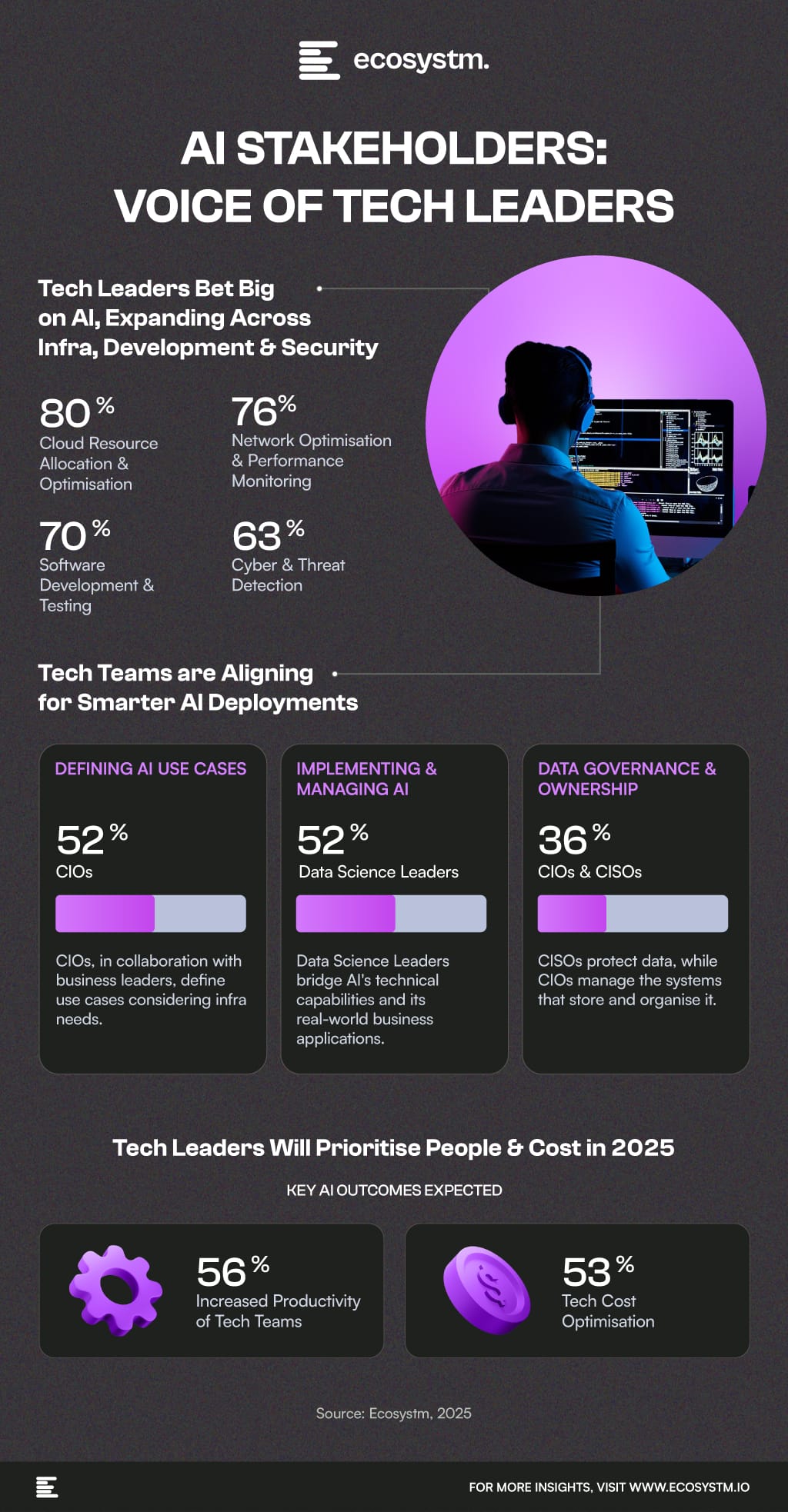

AI’s biggest impact in an organisation is on the tech teams.

From automating IT support to optimising infrastructure, development, and security, AI is transforming the way tech teams work. IT leaders are leveraging AI to boost productivity, cut costs, and drive innovation at scale.

Here’s how tech leaders are coming together to push AI initiatives forward.

Great customer engagement takes effort, but AI gives customer success leaders the edge to do more.

Ecosystm research shows that while 60% of Sales, Marketing, and CX leaders see productivity gains from AI, there’s still untapped potential. AI can deepen engagement, reduce churn, boost upsell opportunities, and drive real financial impact.

Here’s where customer success leaders stand today – and where the biggest gaps remain.

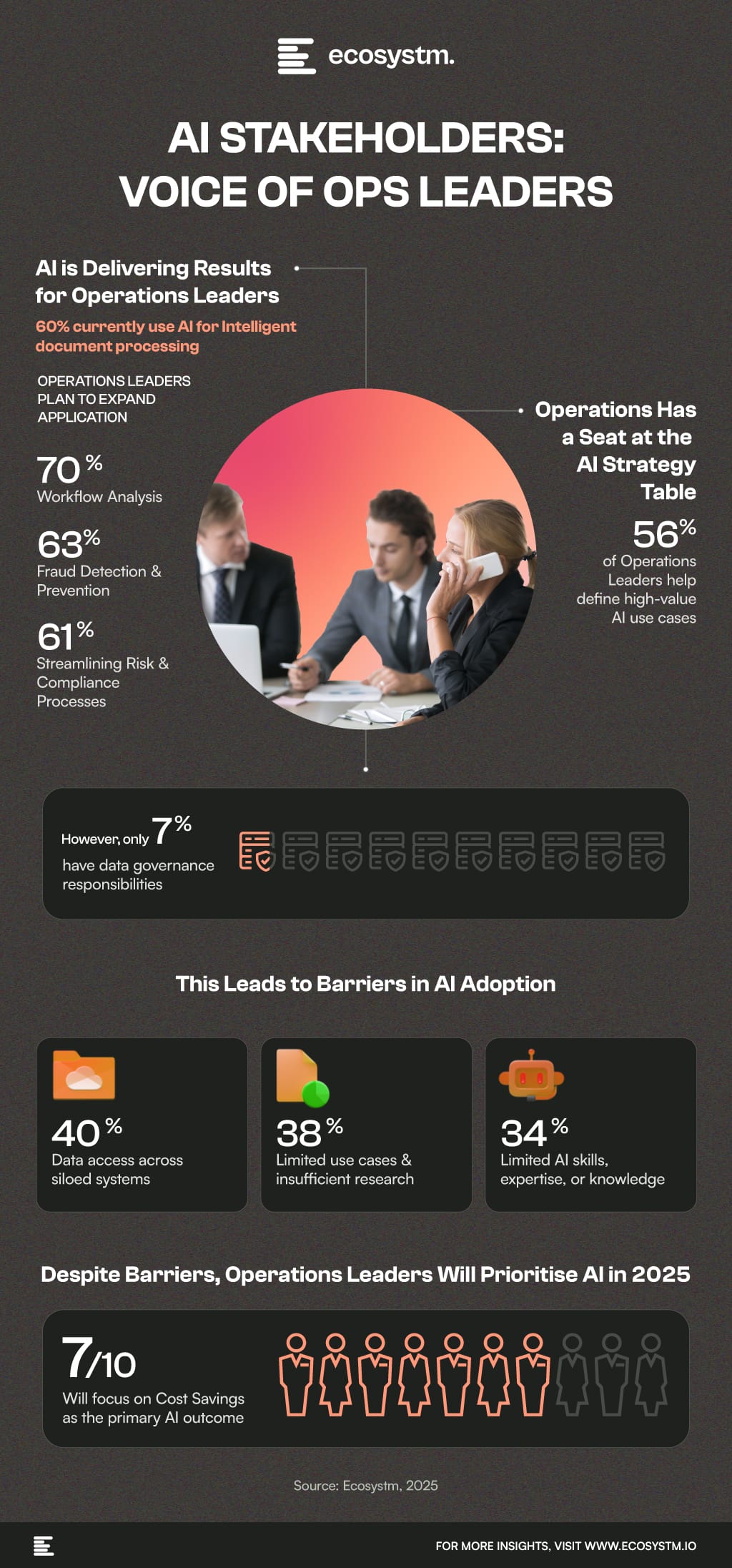

Ecosystm research finds that 54% of organisations place cost savings as the key focus of AI adoption. This explains why Operations shapes AI and use cases.

But the teams often lack control over data and solutions.

Overcoming these gaps will be crucial for stronger execution and long-term success.

Here’s where Operations leaders stand today.

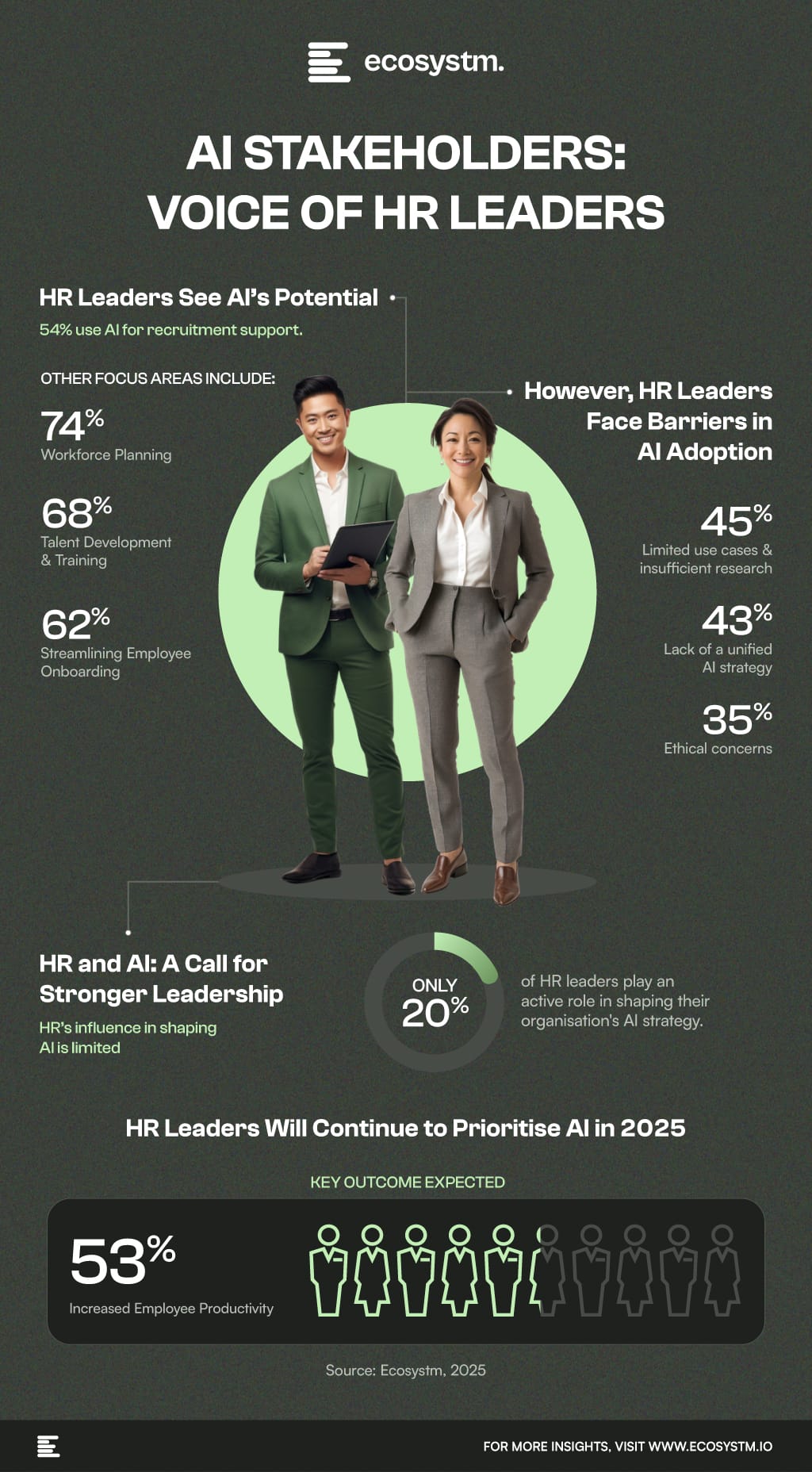

AI is set to transform the workplace – enhancing productivity and reshaping roles. HR isn’t just a bystander; it’s key to ensuring employees benefit while managing AI’s uncertainties. It’s time HR had a bigger seat at the table.

Here’s where AI adoption in HR stands today.

Barely weeks into 2025, the Consumer Electronics Show (CES) showcased a wave of AI-powered innovations, from Nvidia’s latest RTX 50-series graphics chip with AI-powered rendering to Halliday’s futuristic augmented reality smart glasses. AI has firmly moved from being a “fringe” technology to becoming the foundation of industry transformation. According to MIT, 95% of businesses are already using AI in some capacity, and more than half are aiming for full-scale integration by 2026.

But as AI adoption increases, the real challenge isn’t just about developing smarter models – it’s about whether the underlying infrastructure can keep up.

The AI-Driven Cloud: Strategic Growth

Cloud providers are at the heart of the AI revolution, but in 2025, it is not just about raw computing power anymore. It’s about smarter, more strategic expansion.

Microsoft is expanding its AI infrastructure footprint beyond traditional tech hubs, investing USD 300M in South Africa to build AI-ready data centres in an emerging market. Similarly, AWS is doubling down on another emerging market with an investment of USD 8B to develop next-generation cloud infrastructure in Maharashtra, India.

This focus on AI is not limited to the top hyperscalers; Oracle, for instance, is seeing rapid cloud growth, with 15% revenue growth expected in 2026 and 20% in 2027. This growth is driven by deep AI integration and investments in semiconductor technology. Oracle is also a key player in OpenAI and SoftBank’s Stargate AI initiative, showcasing its commitment to AI innovation.

Emerging players and disruptors are also making their mark. For instance, CoreWeave, a former crypto mining company, has pivoted to AI cloud services. They recently secured a USD 12B contract with OpenAI to provide computing power for training and running AI models over the next five years.

The signs are clear – the demand for AI is reshaping the cloud industry faster than anyone expected.

Strategic Investments In Data Centres Powering Growth

Enterprises are increasingly investing in AI-optimised data centres, driven by the need to lower latency, cut costs, gain better control over data, and reduce reliance on traditional data centres.

Reliance Industries is set to build the world’s largest AI data centre in Jamnagar, India, with a 3-gigawatt capacity. This ambitious project aims to accelerate AI adoption by reducing inferencing costs and enabling large-scale AI workloads through its ‘Jio Brain’ platform. Similarly, in the US, a group of banks has committed USD 2B to fund a 100-acre AI data centre in Utah, underscoring the financial sector’s confidence in AI’s future and the increasing demand for high-performance computing infrastructure.

These large-scale investments are part of a broader trend – AI is becoming a key driver of economic and industrial transformation. As AI adoption accelerates, the need for advanced data centres capable of handling vast computational workloads is growing. The enterprise sector’s support for AI infrastructure highlights AI’s pivotal role in shaping digital economies and driving long-term growth.

AI Hardware Reimagined: Beyond the GPU

While cloud providers are racing to scale up, semiconductor companies are rethinking AI hardware from the ground up – and they are adapting fast.

Nvidia is no longer just focused on cloud GPUs – it is now working directly with enterprises to deploy H200-powered private AI clusters. AMD’s MI300X chips are being integrated into financial services for high-frequency trading and fraud detection, offering a more energy-efficient alternative to traditional AI hardware.

Another major trend is chiplet architectures, where AI workloads run across multiple smaller chips instead of a single, power-hungry processor. Meta’s latest AI accelerator and Google’s custom TPU designs are early examples of this modular approach, making AI computing more scalable and cost-effective.

The AI hardware race is no longer just about bigger chips – it’s about smarter, more efficient designs that optimise performance while keeping energy costs in check.

Collaborative AI: Sharing The Infrastructure Burden

As AI infrastructure investments increase, so do costs. Training and deploying large language models (LLMs) requires billions of dollars in high-performance chips, cloud storage, and data centres. To manage these costs, companies are increasingly teaming up to share infrastructure and expertise.

SoftBank and OpenAI formed a joint venture in Japan to accelerate AI adoption across enterprises. Meanwhile, Telstra and Accenture are partnering on a global scale to pool their AI infrastructure resources, ensuring businesses have access to scalable AI solutions.

In financial services, Palantir and TWG Global have joined forces to deploy AI models for risk assessment, fraud detection, and customer automation – leveraging shared infrastructure to reduce costs and increase efficiency.

And with tech giants spending over USD 315B on AI infrastructure this year alone – plus OpenAI’s USD 500B commitment – the need for collaboration will only grow.

These joint ventures are more than just cost-sharing arrangements; they are strategic plays to accelerate AI adoption while managing the massive infrastructure bill.

The AI Infrastructure Power Shift

The AI infrastructure race in 2025 isn’t just about bigger investments or faster chips – it’s about reshaping the tech landscape. Leaders aren’t just building AI infrastructure; they’re determining who controls AI’s future. Cloud providers are shaping where and how AI is deployed, while semiconductor companies focus on energy efficiency and sustainability. Joint ventures highlight that AI is too big for any single player.

But rapid growth comes with challenges: Will smaller enterprises be locked out? Can regulations keep pace? As investments concentrate among a few players, how will competition and innovation evolve?

One thing is clear: Those who control AI infrastructure today will shape tomorrow’s AI-driven economy.