Over the past year of moderating AI roundtables, I’ve had a front-row seat to how the conversation has evolved. Early discussions often centred on identifying promising use cases and grappling with the foundational work, particularly around data readiness. More recently, attention has shifted to emerging capabilities like Agentic AI and what they mean for enterprise workflows. The pace of change has been rapid, but one theme has remained consistent throughout: ROI.

What’s changed is the depth and nuance of that conversation. As AI moves from pilot projects to core business functions, the question is no longer just if it delivers value, but how to measure it in a way that captures its true impact. Traditional ROI frameworks, focused on immediate, measurable returns, are proving inadequate when applied to AI initiatives that reshape processes, unlock new capabilities, and require long-term investment.

To navigate this complexity, organisations need a more grounded, forward-looking approach that considers not only direct gains but also enablement, scalability, and strategic relevance. Getting this right is key to both validating today’s investments and setting the stage for meaningful, sustained transformation.

Here is a summary of the key thoughts around AI ROI from multiple conversations across the Asia Pacific region.

1. Redefining ROI Beyond Short-Term Wins

A common mistake when adopting AI is using traditional ROI models that expect quick, obvious wins like cutting costs or boosting revenue right away. But AI works differently. Its real value often shows up slowly, through better decision-making, greater agility, and preparing the organisation to compete long-term.

AI projects need big upfront investments in things like improving data quality, upgrading infrastructure, and managing change. These costs are clear from the start, while the bigger benefits, like smarter predictions, faster processes, and a stronger competitive edge, usually take years to really pay off and aren’t easy to measure the usual way.

Ecosystm research finds that 60% of organisations in Asia Pacific expect to see AI ROI over two to five years, not immediately.

The most successful AI adopters get this and have started changing how they measure ROI. They look beyond just money and track things like explainability (which builds trust and helps with regulations), compliance improvements, how AI helps employees work better, and how it sparks new products or business models. These less obvious benefits are actually key to building strong, AI-ready organisations that can keep innovating and growing over time.

2. Linking AI to High-Impact KPIs: Problem First, Not Tech First

Successful AI initiatives always start with a clearly defined business problem or opportunity, not the technology itself. When a precise pain point is identified upfront, AI shifts from a vague concept to a powerful solution.

An industrial firm in Asia Pacific reduced production lead time by 40% by applying AI to optimise inspection and scheduling. This result was concrete, measurable, and directly tied to business goals.

This problem-first approach ensures every AI use case links to high-impact KPIs – whether reducing downtime, improving product quality, or boosting customer satisfaction. While this short-to-medium-term focus on results might seem at odds with the long-term ROI perspective, the two are complementary. Early wins secure executive buy-in and funding, giving AI initiatives the runway needed to mature and scale for sustained strategic impact.

Together, these perspectives build a foundation for scalable AI value that balances immediate relevance with future resilience.

3. Tracking ROI Across the Lifecycle

A costly misconception is treating pilot projects as the final success marker. While pilots validate concepts, true ROI only begins once AI is integrated into operations, scaled organisation-wide, and sustained over time.

Ecosystm research reveals that only about 32% of organisations rigorously track AI outcomes with defined success metrics; most rely on ad-hoc or incomplete measures.

To capture real value, ROI must be measured across the full AI lifecycle. This includes infrastructure upgrades needed for scaling, ongoing model maintenance (retraining and tuning), strict data governance to ensure quality and compliance, and operational support to monitor and optimise deployed AI systems.

A lifecycle perspective acknowledges that the real value – and the hidden costs – emerge beyond pilots, ensuring organisations understand the total cost of ownership and sustained benefits.
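As a rough illustration of the lifecycle view, the sketch below adds up the cost categories named above year by year and compares them with cumulative benefits. The names `YearlyFigures` and `lifecycle_roi` and every figure in `plan` are hypothetical placeholders chosen for illustration, not Ecosystm data or a prescribed method.

```python
from dataclasses import dataclass


@dataclass
class YearlyFigures:
    """Costs and benefits for one year of an AI initiative (hypothetical units)."""
    infrastructure: float
    model_maintenance: float
    data_governance: float
    operational_support: float
    benefits: float

    @property
    def total_cost(self) -> float:
        return (self.infrastructure + self.model_maintenance
                + self.data_governance + self.operational_support)


def lifecycle_roi(years: list[YearlyFigures]) -> list[float]:
    """Return cumulative ROI at the end of each year: (benefits - costs) / costs.

    Assumes the first year has a non-zero cost, so the divisor is never zero.
    """
    results = []
    cum_cost = 0.0
    cum_benefit = 0.0
    for y in years:
        cum_cost += y.total_cost
        cum_benefit += y.benefits
        results.append((cum_benefit - cum_cost) / cum_cost)
    return results


# Illustrative numbers only: a pilot year followed by two years at scale.
plan = [
    YearlyFigures(100, 20, 20, 10, 50),
    YearlyFigures(50, 40, 30, 30, 200),
    YearlyFigures(20, 40, 30, 30, 400),
]
for year, roi in enumerate(lifecycle_roi(plan), start=1):
    print(f"Year {year}: cumulative ROI {roi:+.0%}")
```

With these made-up figures, cumulative ROI is negative at the end of the pilot year and turns positive only in the third year, which is the pattern a pilot-only measurement would miss.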

4. Strengthening the Foundations: Talent, Data, and Strategy

AI success hinges on strong foundations, not just models. Many projects fail due to gaps in skills, data quality, or strategic focus – directly blocking positive ROI and wasting resources.

Top organisations invest early in three pillars:

- Data Infrastructure. Reliable, scalable data pipelines and quality controls are vital. Poor data leads to delays, errors, higher costs, and compliance risks, hurting ROI.

- Skilled Talent. Cross-functional teams combining technical and domain expertise speed deployment, improve quality, reduce errors, and drive ongoing innovation – boosting ROI.

- Strategic Roadmap. Clear alignment with business goals ensures resources focus on high-impact projects, secures executive support, fosters collaboration, and enables measurable outcomes through KPIs.

Strengthening these fundamentals turns AI investments into consistent growth and competitive advantage.

5. Navigating Tool Complexity: Toward Integrated AI Lifecycle Management

One of the biggest challenges in measuring AI ROI is tool fragmentation. The AI lifecycle spans multiple stages – data preparation, model development, deployment, monitoring, and impact tracking – and organisations often rely on different tools for each. MLOps platforms track model performance, BI tools measure KPIs, and governance tools ensure compliance, but these systems rarely connect seamlessly.

This disconnect creates blind spots. Metrics sit in silos, handoffs across teams become inefficient, and linking model performance to business outcomes over time becomes manual and error-prone. As AI becomes more embedded in core operations, the need for integration is becoming clear.

To close this gap, organisations are adopting unified AI lifecycle management platforms. These solutions provide a centralised view of model health, usage, and business impact, enriched with governance and collaboration features. By aligning technical and business metrics, they enable faster iteration, responsible scaling, and clearer ROI across the lifecycle.

Final Thoughts: The Cost of Inaction

Measuring AI ROI isn’t just about proving cost savings; it’s a shift in how organisations think about value. AI delivers long-term gains through better decision-making, improved compliance, more empowered employees, and the capacity to innovate continuously.

Yet too often, the cost of doing nothing is overlooked. Failing to invest in AI leads to slower adaptation, inefficient processes, and lost competitive ground. Traditional ROI models, built for short-term, linear investments, don’t account for the strategic upside of early adoption or the risks of falling behind.

That’s why leading organisations are reframing the ROI conversation. They’re looking beyond isolated productivity metrics to focus on lasting outcomes: scalable governance, adaptable talent, and future-ready business models. In a fast-evolving environment, inaction carries its own cost – one that may not appear in today’s spreadsheet but will shape tomorrow’s performance.

The White House has directed federal agencies to conduct risk assessments on AI tools and appoint officers, including Chief Artificial Intelligence Officers (CAIOs), for oversight. This directive, led by the Office of Management and Budget (OMB), aims to modernise government AI adoption and promote responsible use. Agencies must integrate AI oversight into their core functions, ensuring safety, security, and ethical use. CAIOs will be tasked with assessing AI’s impact on civil rights and market competition. Agencies have until December 1, 2024, to address non-compliant AI uses, emphasising swift implementation.

How will this impact global AI adoption? Ecosystm analysts share their views.


The Larger Impact: Setting a Global Benchmark

This sets a potential global benchmark for AI governance, with the U.S. leading the way in responsible AI use, inspiring other nations to follow suit. The emphasis on transparency and accountability could boost public trust in AI applications worldwide.

The appointment of CAIOs across U.S. federal agencies marks a significant shift towards ethical AI development and application. Through mandated risk management practices, such as independent evaluations and real-world testing, the government recognises AI’s profound impact on rights, safety, and societal norms.

This isn’t merely a regulatory action; it’s a foundational shift towards embedding ethical and responsible AI at the heart of government operations. The balance struck between fostering innovation and ensuring public safety and rights protection is particularly noteworthy.

This initiative reflects a deep understanding of AI’s dual-edged nature – the potential to significantly benefit society, countered by its risks.

The Larger Impact: Blueprint for Risk Management

In what is likely a world first, AI brings together technology, legal, and policy leaders in a concerted effort to put guardrails around a new technology before a major disaster materialises. These efforts range from technology firms providing a form of legal assurance for use of their products (for example, Microsoft’s Customer Copyright Commitment) to parliaments ratifying AI regulatory laws (such as the EU AI Act) and, most recently, the current directive establishing AI accountability in US federal agencies, all within the past few months.

It is universally accepted that AI needs risk management to be responsible and acceptable – installing an accountable C-suite role is another major step in AI risk mitigation.

This is an interesting move for three reasons:

- The balance of innovation versus governance and risk management.

- Accountability mandates for each agency’s use of AI in a public and transparent manner.

- Transparency mandates regarding AI use cases and technologies, including those that may impact safety or rights.

Impact on the Private Sector: Greater Accountability

AI governance is one of the rare occasions where government action moves faster than the private sector. While the immediate pressure is now on US federal agencies (and there are 438 of them) to identify and appoint CAIOs, the announcement sends a clear signal to the private sector.

Following hot on the heels of recent AI legislation, it puts AI governance straight into the boardroom. The air is getting very thin for enterprises still in denial that AI governance has advanced to strategic importance. And unlike the CFC ban in the Eighties (the Montreal Protocol likely set the record for concerted global action), this time the technology providers are fully on board.

There’s no excuse for delaying the acceleration of AI governance and establishing accountability for AI within organisations.

Impact on Tech Providers: More Engagement Opportunities

Technology vendors are poised to benefit from the medium to long-term acceleration of AI investment, especially those based in the U.S., given government agencies’ preferences for local sourcing.

In the short term, our advice to technology vendors and service partners is to actively engage with CAIOs in client agencies to identify existing AI usage in their tools and platforms, as well as algorithms implemented by consultants and service partners.

Once AI guardrails are established within agencies, tech providers and service partners can expedite investments by determining which of their platforms, tools, or capabilities comply with specific guardrails and which do not.

Impact on SE Asia: Promoting a Digital Innovation Hub

By 2030, Southeast Asia is poised to emerge as the world’s fourth-largest economy – much of that growth will be propelled by the adoption of AI and other emerging technologies.

The projected economic growth presents both challenges and opportunities, emphasising the urgency for regional nations to enhance their AI governance frameworks and stay competitive with international standards. This initiative highlights the critical role of AI integration for private sector businesses in Southeast Asia, urging organisations to proactively address AI’s regulatory and ethical complexities. Furthermore, it has the potential to stimulate cross-border collaborations in AI governance and innovation, bridging the U.S., Southeast Asian nations, and the private sector.

It underscores the global interconnectedness of AI policy and its impact on regional economies and business practices.

By leading with a strategic approach to AI, the U.S. sets an example for Southeast Asia and the global business community to reevaluate their AI strategies, fostering a more unified and responsible global AI ecosystem.

The Risks

U.S. government agencies face the challenge of sourcing experts in technology, legal frameworks, risk management, privacy regulations, civil rights, and security, while also identifying ongoing AI initiatives. Establishing a unified definition of AI and cataloguing processes involving ML, algorithms, or GenAI is essential, given AI’s integral role in organisational processes over the past two decades.

However, there’s a risk that focusing on AI governance may hinder adoption.

The role should prioritise establishing AI guardrails to expedite compliant initiatives while flagging those needing oversight. While these guardrails will facilitate “safe AI” investments, the documentation process could potentially delay progress.

The initiative also echoes a 20th-century mindset for a 21st-century dilemma. Hiring leaders and forming teams feel like a traditional approach. Today, organisations can increase productivity by considering AI and automation as initial solutions. Investing more time upfront to discover initiatives, set guardrails, and implement AI decision-making processes could significantly improve CAIO effectiveness from the outset.

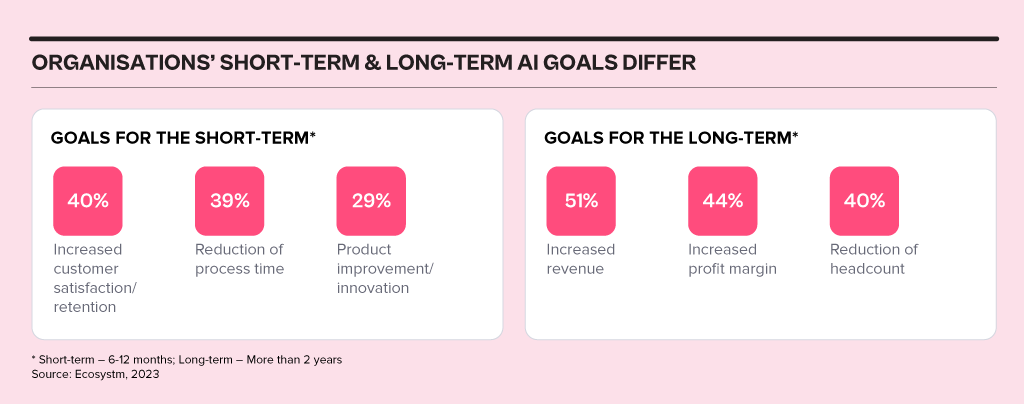

In 2024, business and technology leaders will leverage the opportunity presented by the attention being received by Generative AI engines to test and integrate AI comprehensively across the business. Many organisations will prioritise the alignment of their initial Generative AI initiatives with broader AI strategies, establishing distinct short-term and long-term goals for their AI investments.

AI adoption will influence business processes, technology skills, and, in turn, reshape the product/service offerings of AI providers.

Ecosystm analysts Achim Granzen, Peter Carr, Richard Wilkins, Tim Sheedy, and Ullrich Loeffler present the top 5 AI trends in 2024.


#1 By the End of 2024, Gen AI Will Become a ‘Hygiene Factor’ for Tech Providers

AI has been widely hailed as the ‘game changer’ that will create and extend the divide between adopters and laggards and be the deciding factor between success and failure.

Cutting through the hype, strategic adoption of AI is still at a nascent stage and 2024 will be another year where companies identify use cases, experiment with POCs, and commit renewed efforts to get their data assets in order.

The biggest impact of AI will be derived from integrated AI capability in standard packaged software and products – and this will include Generative AI. We will see a plethora of product releases that seamlessly weave Generative AI into everyday tools generating new value through increased efficiency and user-friendliness.

Technology will be the first industry where AI becomes the deciding factor between success and failure; tech providers will be forced to deliver on their AI promises or be left behind.

#2 Gen AI Will Disrupt the Role of IT Architects

Traditionally, IT has relied on three-tier application architectures, which faced limitations in scalability and real-time responsiveness. The emergence of microservices, containerisation, and serverless computing has paved the way for event-driven designs, a paradigm shift that decouples components and uses events such as user actions or data updates as triggers for actions across distributed services. This approach enhances agility, scalability, and flexibility in the system.
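To make the decoupling concrete, here is a minimal in-process sketch of the event-driven pattern. The `EventBus` class, the `order_placed` event, and the two handlers are hypothetical stand-ins. A production system would typically use a message broker or a managed eventing service rather than an in-memory dictionary.

```python
from collections import defaultdict
from typing import Callable

Handler = Callable[[dict], None]


class EventBus:
    """A tiny in-process publish/subscribe bus (stand-in for a real message broker)."""

    def __init__(self) -> None:
        self._handlers: dict[str, list[Handler]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Handler) -> None:
        self._handlers[event_type].append(handler)

    def publish(self, event_type: str, payload: dict) -> None:
        # The publisher does not know which services react to the event.
        for handler in list(self._handlers[event_type]):
            handler(payload)


bus = EventBus()
log: list[str] = []

# Two independent "services" react to the same event without knowing about each other.
bus.subscribe("order_placed", lambda e: log.append(f"inventory reserved for {e['order_id']}"))
bus.subscribe("order_placed", lambda e: log.append(f"confirmation sent for {e['order_id']}"))

# A user action is published as an event and triggers both handlers.
bus.publish("order_placed", {"order_id": "A-1001"})
print(log)
```

Adding a third reaction to `order_placed` means registering another subscriber. The publishing code is left untouched, which is the source of the agility and flexibility described above.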

The shift towards event-driven designs and advanced architectural patterns presents a compelling challenge for IT Architects, as traditionally their role revolved around designing, planning and overseeing complex systems.

Generative AI is progressively demonstrating capabilities in architectural design through pattern recognition, predictive analytics, and automated decision-making.

With the adoption of Generative AI, the role of an IT Architect will change into a symbiotic relationship where human expertise collaborates with AI insights.

#3 Gen AI Adoption Will be Confined to Specific Use Cases

A little over a year ago, a new era in AI began with the initial release of OpenAI’s ChatGPT. Since then, many organisations have launched Generative AI pilots.

In its second year, enterprises will start adoption – but in strictly defined and limited use cases. Examples such as Microsoft Copilot demonstrate an early adopter route. While productivity increases for individuals can be significant, the enterprise impact is unclear (at this time).

But there are impactful use cases in enterprise knowledge and document management. Organisations across industries have decades (or even a century) of information, including digitised documents and staff expertise. That treasure trove of information can be made accessible through cognitive search and semantic answering, driven by Generative AI.

Generative AI will provide organisations with a way to access, distill, and create value out of that data – a task that may well be impossible to achieve in any other way.

#4 Gen AI Will Get Press Inches; ‘Traditional’ AI Will Do the Hard Work

While the use cases for Generative AI will continue to expand, the deployment models and architectures for enterprise Generative AI do not add up – yet.

Running Generative AI in organisations’ data centres is costly and using public models for all but the most obvious use cases is too risky. Most organisations opt for a “small target” strategy, implementing Generative AI in isolated use cases within specific processes, teams, or functions. Justifying investment in hardware, software, and services for an internal AI platform is challenging when the payback for each AI initiative is not substantial.

“Traditional AI/ML” will remain the workhorse, with a significant rise in use cases and deployments. Organisations are used to investing in AI by individual use case. Managing process change and training is also more straightforward with traditional AI, as the changes are implemented in a system or platform, eliminating the need to retrain multiple knowledge workers.

#5 AI Will Pioneer a 21st Century BPM Renaissance

As we near the 25-year milestone of the 21st century, it becomes clear that many businesses are still operating with 20th-century practices and philosophies.

AI, however, represents more than a technological breakthrough; it offers a new perspective on how businesses operate and is akin to a modern interpretation of Business Process Management (BPM). This development carries substantial consequences for digital transformation strategies. To fully exploit the potential of AI, organisations need to commit to an extensive and ongoing process that spans from the collection, organisation, and expansion of data to the integration of these insights at the application and workflow level.

The role of AI will transcend technological innovation, becoming a driving force for substantial business transformation. Sectors that specialise in workflow, data management, and organisational transformation are poised to see the most growth in 2024 because of this shift.