It seems that for many employees, the benefits of working from home, or even of a hybrid model, are a thing of the past. Employees are returning to the grind of long commutes and losing hours in transit. What is driving this shift in sentiment? Many CEOs who once championed remote work have had a change of heart – they say that remote work hampers their ability to innovate.

That may not be the real reason, however. There is a good chance that the CEO and/or other managers feel they have lost control or visibility over their employees. Returning to a more traditional management approach, where everyone is within direct sight, might seem like a simpler solution.

The Myths of Workplace Innovation

I find it ironic that organisations say they want employees back in the office because they cannot innovate at the same rate remotely. What the last few years have demonstrated – and quite conclusively – is that employees can innovate wherever they are, if they are driven to and have the right tools. So organisations need to evaluate whether they have effectively innovated on and evolved their own hybrid and remote work solutions to keep supporting hybrid work – and innovation.

What is confusing about the stance many organisations are taking is that any organisation with multiple offices is already effectively a hybrid business. It has always had people working from different locations, yet it never felt the need to bring all its staff together 3-5 days every week for the organisation-wide innovation that is suddenly so important today.

The CEO of a tech research firm once said that the office used to be considered the place where we got together to use the tools we needed to innovate, but that in reality the office is just one of the tools businesses have to drive their organisation forward. Ironically, this same CEO recently called everyone back into the office 3 days a week!

Is Remote Work the Next Step in Employee Rights?

It has become clear that remote and hybrid work is the next step in employees gaining greater rights. Many organisations fought against the five-day work week, claiming they wouldn’t make as much money as they did when employees worked whenever they were told. They fought against the 40-hour work week (in France, some fought against the 35-hour work week!). They still fight against the introduction of new public holidays, increases to the minimum wage, and paid parental leave.

Some industries, companies, unions, and countries are looking to formalise (or have already formalised) hybrid and remote work in their policies and regulations. More unions and businesses will do this – and employees will have a choice.

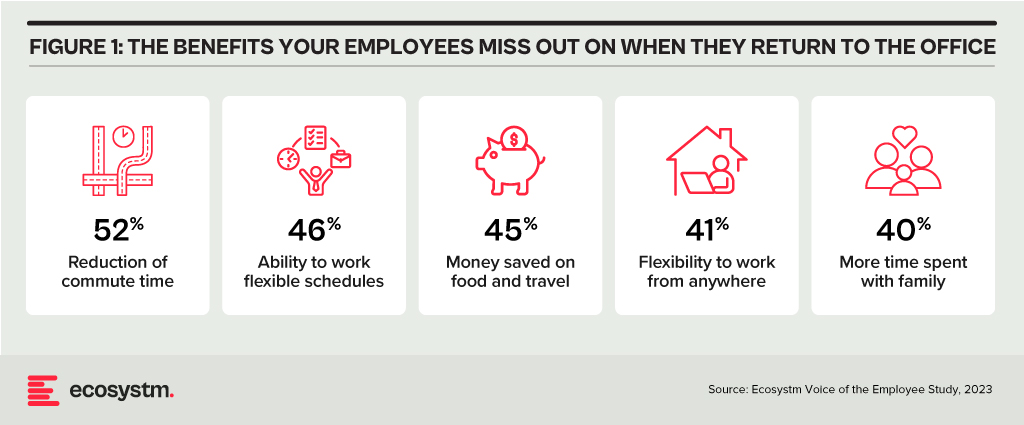

People will have the option to work for an employer who wants their employees to come into the office – or to work for someone else. That choice will depend on preferences and working styles: some employees enjoy the time spent away from home and like the social nature of office environments, but many also like the extra time, money, and flexibility that remote work allows.

There might be many reasons why leadership teams would want employees to come into the office, and establishing and maintaining a common corporate culture would be a leading one. But they need to stop pretending it is about “innovation”. Innovation is possible while working remotely, just as it is when working from separate offices or even different floors within the same building.

Evolving Employee Experience & Collaboration Needs

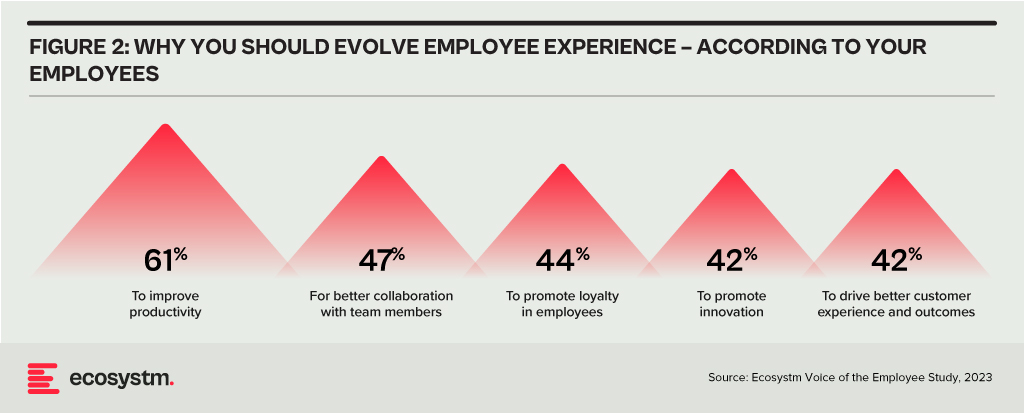

Organisations today face a challenge – and it is not an inability to innovate in a hybrid work environment! It is their ability to deliver the employee experience their employees want. This is more challenging now because there are more preferences, options, and technologies available. But it is clear that organisations need to keep evolving their employee experience.

Technology plays, and will continue to play, an important role in keeping our employees connected and productive. AI tools such as Microsoft Copilot will continue to improve our productivity. But management needs to evolve with the technology. If senior management feels that connecting people will help solve the business’s current growth challenges, then it becomes the role of managers to better connect people – not just teams in offices, but virtual teams across the entire organisation.

Organisations such as REA in Australia, which have focused their energies on better connecting their employees regardless of location, find that productivity and innovation rates are higher than when people are physically together. What do they do differently?

- Managers find their roles have moved from supporting individual employees to connecting employees

- Documentation of progress and challenges means that everyone knows where to focus their energies

- Managed virtual (and in-person) meetings mean that everyone has a voice and gets to contribute (not just the loudest, most talkative or most senior person)

Remote and hybrid workers are often well-positioned to come up with new and innovative ideas. Senior management can encourage innovation and risk-taking by creating a safe environment for employees to share their ideas and by providing the resources they need to develop and implement them. Sometimes these resources are in an office – but they don’t have to be. Manufacturers are quickly moving to fully digital development, prototyping, and testing of their new and improved products and services. Digital is often faster, better, and more innovative than physical, but employees need to be allowed to embrace these new platforms and tools to drive better organisational and customer outcomes.

What the pandemic has taught us is that people are good at solving problems; they are good at innovating whether or not their managers are watching.

It is not hyperbole to state that AI is on the cusp of having significant implications for society, business, economies, governments, individuals, cultures, politics, the arts, manufacturing, customer experience… I think you get the idea! We cannot overstate the impact that AI will have on society. In times gone by, businesses tested ideas, new products, or services with small customer segments before they went live. But with AI, we are all part of the experiment on its impacts on society – its benefits, use cases, weaknesses, and threats.

What seemed preposterous just six months ago is not only possible but EASY! Do you want a virtual version of yourself, a friend, your CEO, or your deceased family member? Sure – just feed it the data. Will succession planning be more about recording all conversations and interactions with an executive so their avatar can make the decisions when they leave? Why not? How about turning the thousands of hours of recorded customer conversations with your contact centre team into a virtual contact centre team? Your head of product could present simultaneously in multiple countries and multiple languages, tailored to the customer segments, industries, geographies, or business needs.

AI can create digital clones of your employees; it can spread fake news as easily as real news; and it can be used to deceive as easily as to benefit. Is your organisation prepared for the social, personal, cultural, and emotional impacts of AI? Do you know how AI will evolve in your organisation?

When we focus on the future of AI, we often interview AI leaders, business leaders, futurists, and analysts. I haven’t seen enough focus on psychologists, sociologists, historians, academics, counsellors, or even regulators! The Internet and social media changed the world more than we ever imagined, yet at this stage they look like just a rehearsal for the real show: Artificial Intelligence.

Lack of Government or Industry Regulation Means You Need to Self-Regulate

These rapid developments – and the notable silence from governments, lawmakers, and regulators – make an AI Ethics Policy an urgent requirement for your organisation! Even if you have one, it probably needs updating, as the scenarios AI can operate within are growing and changing literally every day.

- For example, your customer service team might want to create a virtual customer service agent from a real person. What is the policy on this? How will it impact the person?

- Your marketing team might be using ChatGPT or Bard for content creation. Do you have a policy specifically for creating and using content built from assets your business does not own?

- What data is acceptable for a public Large Language Model (LLM) to ingest? Are you governing data at creation and publication to ensure these policies are met?

- With the impending public launch of Microsoft’s Copilot AI service, what data can Copilot ingest? How are you governing the distribution of the insights that come out of that capability?

If policies are not put in place, data tagged, and staff trained before you use a tool such as Copilot, your business is likely to break some privacy or employment laws – on the very first day!

What Do the LLMs Say About AI Ethics Policies?

So where do you go when looking for an AI Ethics Policy? ChatGPT and Bard, of course! I asked both of them for a modern AI Ethics Policy.

You can read what they generated in the graphic below.

I personally prefer the ChatGPT (GPT-4) version as it is more prescriptive. At the same time, I would argue that MOST of the AI tools your business has access to today don’t meet all of these principles. And while these are tools, and ethics should dictate how they are used, with AI you cannot always separate the process and the outcome from the tool.

For example, a tool that is inherently designed to learn an employee’s character, style, or mannerisms cannot be unbiased if it is based on a biased opinion (and humans have biases!).

LLMs take data, content, and insights created by others, and give them to their customers to reuse. Are you happy with your website being used to teach a startup about the opportunities in the markets and customers you serve?

By making content public, you acknowledge the risk of others using it. But at least they visited your website or app to consume it. Not anymore…

A Policy is Useless if it Sits on a Shelf

Your AI Ethics Policy needs to be more than a published document. It should be the beginning of a conversation across the entire organisation about the use of AI. Your employees need to be trained in the policy. It needs to be part of the culture of the business, particularly as low-code and no-code capabilities push these AI tools, practices, and capabilities into the hands of many of your employees.

Nearly every business leader I interview mentions that their organisation is an “intelligent, data-led business.” What is the role of AI in driving this intelligent business? If being data-driven and analytical is in the DNA of your organisation, AI will soon be at the heart of your business too. You might think you can delay your investments to get it right, but your competitors may already be ahead of you.

So, as you jump head-first into the AI pool, start to create, improve and/or socialise your AI Ethics Policy. It should guide your investments, protect your brand, empower your employees, and keep your business resilient and compliant with existing and new legislation and regulations.