AI tools have become a game-changer for the technology industry, enhancing developer productivity and software quality. Leveraging advanced machine learning models and natural language processing, these tools offer a wide range of capabilities, from code completion to generating entire blocks of code, significantly reducing the cognitive load on developers. AI-powered tools not only accelerate the coding process but also ensure higher code quality and consistency, aligning seamlessly with modern development practices. Organisations are reaping the benefits of these tools, which have transformed the software development lifecycle.

Impact on Developer Productivity

AI tools are becoming an indispensable part of software development owing to their:

- Speed and Efficiency. AI-powered tools provide real-time code suggestions, dramatically reducing the time developers spend writing boilerplate code and debugging. For example, Tabnine can suggest complete blocks of code based on comments or a partial code snippet, which accelerates the development process.

- Quality and Accuracy. By analysing vast datasets of code, AI tools can offer not only syntactically correct but also contextually appropriate code suggestions. This capability reduces bugs and improves the overall quality of the software.

- Learning and Collaboration. AI tools also serve as learning aids for developers by exposing them to new or better coding practices and patterns. Novice developers, in particular, can benefit from real-time feedback and examples, accelerating their professional growth. These tools can also help maintain consistency in coding standards across teams, fostering better collaboration.

Advantages of Using AI Tools in Development

- Reduced Time to Market. Faster coding and debugging directly contribute to shorter development cycles, enabling organisations to launch products faster. This reduction in time to market is crucial in today’s competitive business environment where speed often translates to a significant market advantage.

- Cost Efficiency. While there is an upfront cost in integrating these AI tools, the overall return on investment (ROI) is enhanced through the reduced need for extensive manual code reviews, decreased dependency on large development teams, and lower maintenance costs due to improved code quality.

- Scalability and Adaptability. AI tools learn and adapt over time, becoming more efficient and aligned with specific team or project needs. This adaptability ensures that the tools remain effective as the complexity of projects increases or as new technologies emerge.

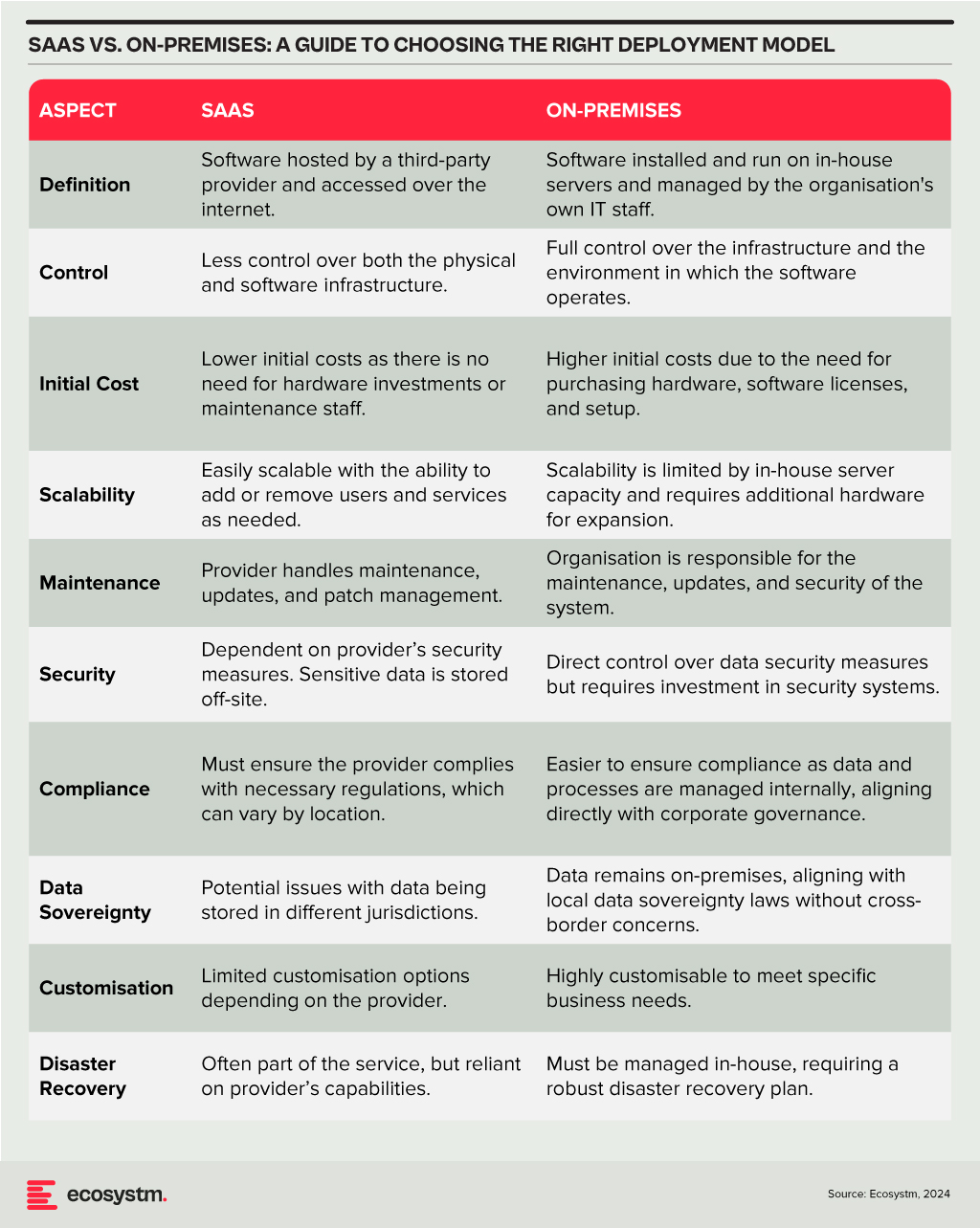

Deployment Models

The choice between SaaS and on-premises deployment models involves a trade-off between control, cost, and flexibility. Organisations need to consider their specific requirements, including the level of control desired over the infrastructure, sensitivity of the data, compliance needs, and available IT resources. A thorough assessment will guide the decision, ensuring that the deployment model chosen aligns with the organisation’s operational objectives and strategic goals.

Technology teams must also consider challenges such as the reliability of generated code, the potential for generating biased or insecure code, and the dependency on external APIs or services. Regular evaluations and a balanced integration of AI tools with human oversight are recommended to mitigate these risks.

A Roadmap for AI Integration

The strategic integration of AI tools in software development offers a significant opportunity for companies to achieve a competitive edge. By starting with pilot projects, organisations can assess the impact and utility of AI within specific teams. Encouraging continuous training in AI advancements empowers developers to leverage these tools effectively. Regular audits ensure that AI-generated code adheres to security standards and company policies, while feedback mechanisms facilitate the refinement of tool usage and address any emerging issues.

Technology teams have the opportunity to not only boost operational efficiency but also cultivate a culture of innovation and continuous improvement in their software development practices. As AI technology matures, even more sophisticated tools are expected to emerge, further propelling developer capabilities and software development to new heights.

GenAI has taken the world by storm, with organisations big and small eager to pilot use cases for automation and productivity boosts. Tech giants like Google, AWS, and Microsoft are offering cloud-based GenAI tools, but the demand is straining the infrastructure needed to train and deploy the large language models (LLMs) behind tools like ChatGPT and Bard.

Understanding the Demand for Chips

The microchip manufacturing process is intricate, involving hundreds of steps and spanning up to four months from design to mass production. The significant expense and lengthy manufacturing process for semiconductor plants have led to global demand surpassing supply. This imbalance affects technology companies, automakers, and other chip users, causing production slowdowns.

Supply chain disruptions, raw material shortages (such as rare earth metals), and geopolitical situations have also contributed to chip shortages. For example, restrictions by the US on China’s largest chip manufacturer, SMIC, made it harder for the company to sell to several organisations with American ties. This triggered a ripple effect, prompting tech vendors to start hoarding hardware and worsening supply challenges.

As AI advances and organisations start exploring GenAI, specialised AI chips are becoming the need of the hour to meet their immense computing demands. AI chips can include graphics processing units (GPUs), application-specific integrated circuits (ASICs), and field-programmable gate arrays (FPGAs). These specialised AI accelerators can be tens or even thousands of times faster and more efficient than CPUs when it comes to AI workloads.

The surge in GenAI adoption across industries has heightened the demand for improved chip packaging, as advanced AI algorithms require more powerful and specialised hardware. Effective packaging solutions must manage heat and power consumption for optimal performance. TSMC, one of the world’s largest chipmakers, announced a shortage in advanced chip packaging capacity at the end of 2023, which is expected to persist through 2024.

The scarcity of essential hardware, limited manufacturing capacity, and AI packaging shortages have impacted tech providers. Microsoft acknowledged the AI chip crunch as a potential risk factor in their 2023 annual report, emphasising the need to expand data centre locations and server capacity to meet customer demands, particularly for AI services. The chip squeeze has highlighted the dependency of tech giants on semiconductor suppliers. To address this, companies like Amazon and Apple are investing heavily in internal chip design and production, to reduce dependence on large players such as Nvidia – the current leader in AI chip sales.

How are Chipmakers Responding?

NVIDIA, one of the largest manufacturers of GPUs, has been forced to pivot its strategy in response to this shortage. The company has shifted focus towards developing chips specifically designed to handle complex AI workloads, such as the A100 and V100 GPUs. These AI accelerators feature specialised hardware like tensor cores optimised for AI computations, high memory bandwidth, and native support for AI software frameworks.

While this move positions NVIDIA at the forefront of the AI hardware race, experts say that it comes at a significant cost. By reallocating resources towards AI-specific GPUs, the company’s ability to meet the demand for consumer-grade GPUs has been severely impacted. This strategic shift has worsened the ongoing GPU shortage, further straining the market dynamics surrounding GPU availability and demand.

Others like Intel, a stalwart in traditional CPUs, are expanding into AI, edge computing, and autonomous systems. A significant competitor to Intel in high-performance computing, AMD acquired Xilinx to offer integrated solutions combining high-performance central processing units (CPUs) and programmable logic devices.

Global Resolve Key to Address Shortages

Governments worldwide are boosting chip capacity to tackle the semiconductor crisis and fortify supply chains. Initiatives like the CHIPS for America Act and the European Chips Act aim to bolster domestic semiconductor production through investments and incentives. Leading manufacturers like TSMC and Samsung are also expanding production capacities, reflecting a global consensus on self-reliance and supply chain diversification. Asian governments are similarly investing in semiconductor manufacturing to address shortages and enhance their global market presence.

Japan. Japan is providing generous government subsidies and incentives to attract major foreign chipmakers such as TSMC, Samsung, and Micron to invest and build advanced semiconductor plants in the country. Subsidies have helped to attract greenfield investments to Japan’s chip sector in recent years. TSMC alone is investing over USD 20 billion to build two cutting-edge plants in Kumamoto by 2027. The government has earmarked around USD 13 billion just in this fiscal year to support the semiconductor industry.

Moreover, Japan’s collaboration with the US and the establishment of Rapidus, a chipmaker backed by major corporations, further show its ambitions to revitalise its semiconductor industry. Japan is also looking into advancements in semiconductor materials like silicon carbide (SiC) and gallium nitride (GaN) – crucial for powering electric vehicles, renewable energy systems, and 5G technology.

South Korea. While Taiwan holds the lead in semiconductor manufacturing volume, South Korea dominates the memory chip sector, largely due to Samsung. The country is also spending USD 470 billion over the next 23 years to build the world’s largest semiconductor “mega cluster” covering 21,000 hectares in Gyeonggi Province near Seoul. The ambitious project, a partnership with Samsung and SK Hynix, will centralise and boost self-sufficiency in chip materials and components to 50% by 2030. The mega cluster is South Korea’s bold plan to cement its position as a global semiconductor leader and reduce dependence on the US amidst growing geopolitical tensions.

Vietnam. Vietnam is actively positioning itself to become a major player in the global semiconductor supply chain amid the push to diversify away from China. The Southeast Asian nation is offering tax incentives, investing in training tens of thousands of semiconductor engineers, and encouraging major chip firms like Samsung, Nvidia, and Amkor to set up production facilities and design centres. However, Vietnam faces challenges such as a limited pool of skilled labour, outdated energy infrastructure leading to power shortages in key manufacturing hubs, and competition from other regional players like Taiwan and Singapore that are also vying for semiconductor investments.

The Potential of SLMs in Addressing Infrastructure Challenges

Small language models (SLMs) offer reduced computational requirements compared to larger models, potentially alleviating strain on semiconductor supply chains by deploying on smaller, specialised hardware.
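To put the reduced computational requirements in rough numbers, the sketch below estimates the memory needed just to hold a model's weights as parameters multiplied by bytes per parameter. The model sizes and the `weight_memory_gb` helper are illustrative assumptions rather than vendor figures, and the estimate ignores activations and other runtime overhead.

```python
# Rough weight-memory estimate: parameters x bytes per parameter.
# The model sizes used below are illustrative assumptions, not vendor figures.

BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, precision: str = "fp16") -> float:
    """Approximate memory needed to hold the weights, in GB (10^9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    hypothetical_models = {
        "small model (3B params)": 3e9,
        "large model (70B params)": 70e9,
    }
    for name, params in hypothetical_models.items():
        for precision in BYTES_PER_PARAM:
            size = weight_memory_gb(params, precision)
            print(f"{name:26s} {precision}: {size:6.1f} GB")
```

Under these assumptions, a 3-billion-parameter model quantised to 4 bits needs about 1.5 GB for its weights, while a 70-billion-parameter model at 16-bit precision needs about 140 GB, which is why the former can plausibly run on a phone-class device and the latter cannot.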

Innovative SLMs like Google’s Gemini Nano and Mistral AI’s Mixtral 8x7B enhance efficiency, running on modest hardware, unlike their larger counterparts. Gemini Nano is integrated into Bard and available on Pixel 8 smartphones, while Mixtral 8x7B supports multiple languages and suits tasks like classification and customer support.

The shift towards smaller AI models can be pivotal to the AI landscape, democratising AI and ensuring accessibility and sustainability. While they may not be able to handle complex tasks as well as LLMs yet, the ability of SLMs to balance model size, compute power, and ethical considerations will shape the future of AI development.

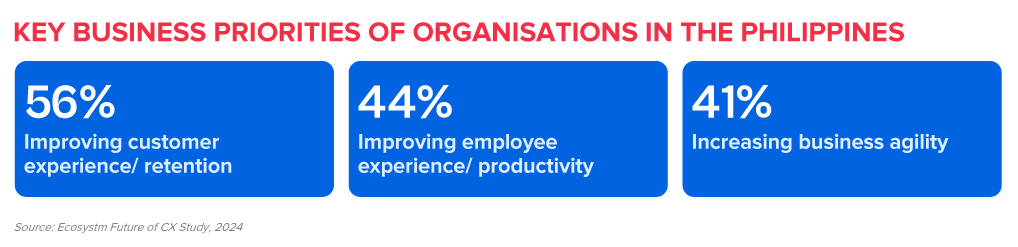

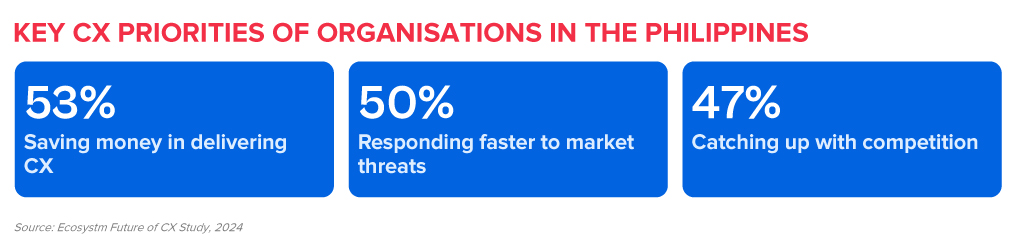

The Philippines, renowned as a global contact centre hub, is experiencing heightened pressure on the global stage, leading to intensified competition within the country. Smaller BPOs are driving larger players to innovate, requiring a stronger focus on empowering customer experience (CX) teams, and enhancing employee experience (EX) in organisations in the Philippines.

As the Philippines expands its global footprint, organisations must embrace progressive approaches to outpace rivals in the CX sector.

These priorities can be achieved through a robust data strategy that empowers CX teams and contact centres to glean actionable insights.

Here are 5 ways organisations in the Philippines can achieve their CX objectives.


#1 Modernise Voice and Omnichannel Orchestration

Ensuring that all channels are connected and integrated at the core is critical in delivering omnichannel experiences. Organisations must ensure that the conversation can be continued seamlessly irrespective of the channel the customer chooses, without losing the context.

Voice must be integrated within the omnichannel strategy. Even with the rise of digital and self-service, voice remains crucial, especially for understanding complex inquiries and providing an alternative when customers face persistent challenges on other channels.

Transition from a siloed view of channels to a unified and integrated approach.

#2 Empower CX Teams with Actionable Customer Data

An Intelligent Data Hub aggregates, integrates, and organises customer data across multiple data sources and channels and eliminates the siloed approach to collecting and analysing customer data.

Drive accurate and proactive conversations with your customers through a unified customer data platform.

- Unifies user history across channels into a single customer view.

- Enables the delivery of an omnichannel experience.

- Identifies behavioural trends by understanding patterns to personalise interactions.

- Spots real-time customer issues across channels.

- Uncovers compliance gaps and missed sales opportunities from unstructured data.

- Looks at customer journeys to proactively address their needs.
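As a minimal sketch of what unifying user history into a single customer view could look like, the example below merges per-channel interaction feeds into one chronologically ordered record per customer. The `Interaction` and `CustomerView` types and the `build_single_customer_view` function are hypothetical names introduced for illustration; a production data hub would also need identity resolution, consent handling, and persistent storage.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from datetime import datetime


@dataclass
class Interaction:
    customer_id: str
    channel: str          # e.g. "voice", "chat", "email"
    timestamp: datetime
    summary: str


@dataclass
class CustomerView:
    customer_id: str
    interactions: list = field(default_factory=list)

    @property
    def channels(self) -> set:
        """All channels this customer has used."""
        return {i.channel for i in self.interactions}

    @property
    def latest(self) -> Interaction:
        """Most recent interaction, regardless of channel."""
        return self.interactions[-1]


def build_single_customer_view(*channel_feeds):
    """Merge per-channel feeds into one time-ordered view per customer."""
    grouped = defaultdict(list)
    for feed in channel_feeds:
        for interaction in feed:
            grouped[interaction.customer_id].append(interaction)
    return {
        cid: CustomerView(cid, sorted(items, key=lambda i: i.timestamp))
        for cid, items in grouped.items()
    }


if __name__ == "__main__":
    chat = [Interaction("c1", "chat", datetime(2024, 5, 1, 9, 0), "Asked about a late order")]
    voice = [Interaction("c1", "voice", datetime(2024, 5, 1, 9, 30), "Called to escalate")]
    views = build_single_customer_view(chat, voice)
    print(sorted(views["c1"].channels), "->", views["c1"].latest.summary)
```

With the history keyed by customer rather than by channel, an agent picking up the voice call can see the earlier chat without asking the customer to repeat it.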

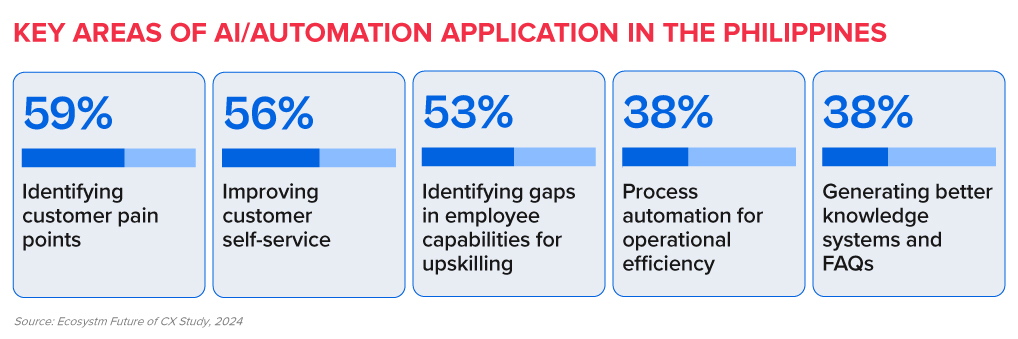

#3 Transform CX & EX with AI/Automation

AI and automation should be the cornerstone of an organisation’s CX efforts to positively impact both customers and employees.

Evaluate all aspects of AI/automation to enhance both customer and employee experience.

- Predictive AI algorithms analyse customer data to forecast trends and optimise resource allocation.

- AI-driven identity validation reduces fraud risk.

- Agent Assist Solutions offer real-time insights to agents, enhancing service delivery and efficiency.

- GenAI integration automates post-call activities, allowing agents to focus on high-value tasks.

#4 Augment Existing Systems for Success

Many organisations face challenges in fully modernising legacy systems and reducing reliance on multiple tech providers.

CX transformation while managing multiple disparate systems will require a platform that integrates the desired capabilities for holistic CX and EX.

A unified platform streamlines application management, ensuring cohesion, unified KPIs, enhanced security, simplified maintenance, and single sign-on for agents. This approach offers consistent experiences across channels and early issue detection, eliminating the need to navigate multiple applications or projects.

Capabilities that a platform should have:

- Programmable APIs to deliver messages across preferred social and messaging channels.

- Modernisation of outdated IVRs with self-service automation.

- Transformation of static mobile apps into engaging experience tools.

- Fraud prevention across channels through immediate phone number verification APIs.

#5 Focus on Proactive CX

In the new CX economy, organisations must meet customers on their terms, proactively engaging them before they initiate interactions. This requires a re-evaluation of all aspects of CX delivery.

- Redefine the Contact Centre. Transforming it into an “Intelligent” Data Hub providing unified and connected experiences; leveraging intelligent APIs to proactively manage customer interactions seamlessly across journeys.

- Reimagine the Agent’s Role. Empowering agents to be AI-powered brand ambassadors, with access to prior and real-time interactions, instant decision-making abilities, and data-led knowledge bases.

- Redesign the Channel and Brand Experience. Ensuring consistent omnichannel experiences through unified and coherent data; using programmable APIs to personalise conversations and discern customer preferences for real-time or asynchronous messaging; integrating innovative technologies like video to enrich the channel experience.

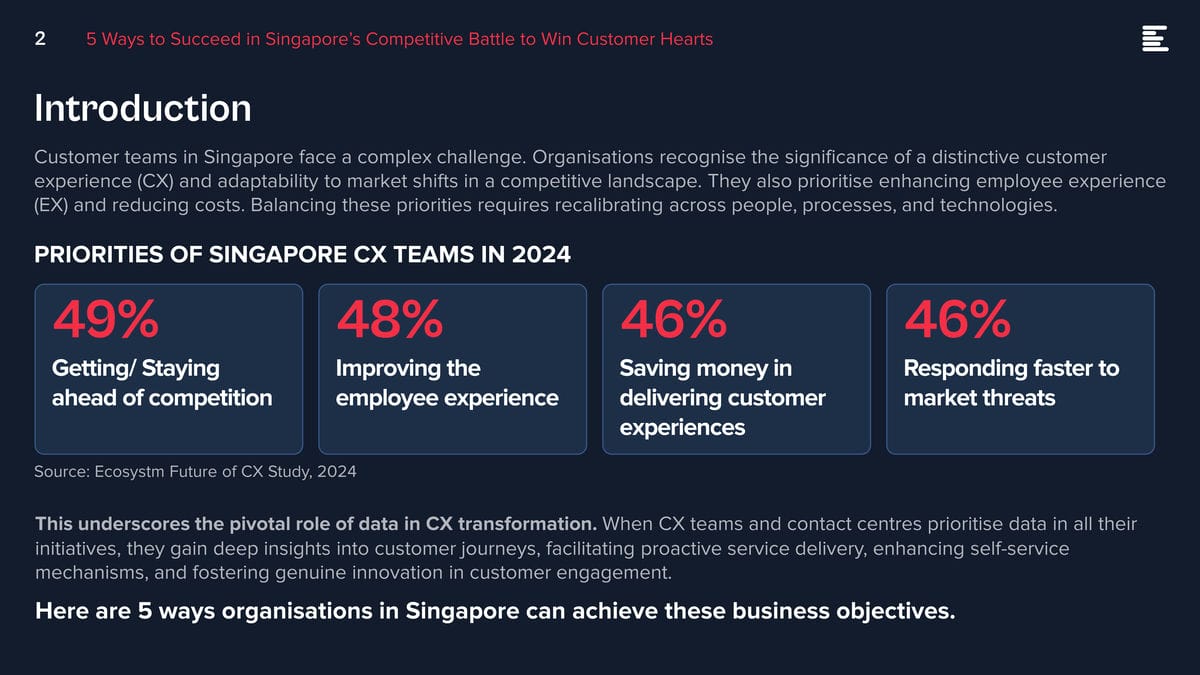

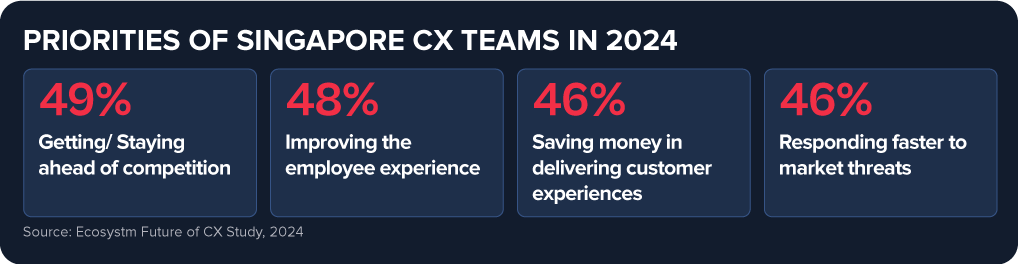

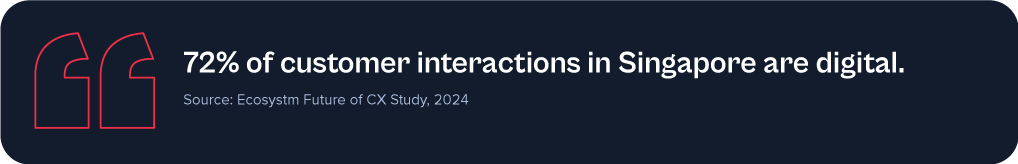

Customer teams in Singapore face a complex challenge. Organisations recognise the significance of a distinctive customer experience (CX) and adaptability to market shifts in a competitive landscape. They also prioritise enhancing employee experience (EX) and reducing costs. Balancing these priorities requires recalibrating across people, processes, and technologies.

This underscores the pivotal role of data in CX transformation. When CX teams and contact centres prioritise data in all their initiatives, they gain deep insights into customer journeys, facilitating proactive service delivery, enhancing self-service mechanisms, and fostering genuine innovation in customer engagement.

Here are 5 ways organisations in Singapore can achieve these business objectives.


#1 Build a Strategy around Voice & Omnichannel Orchestration

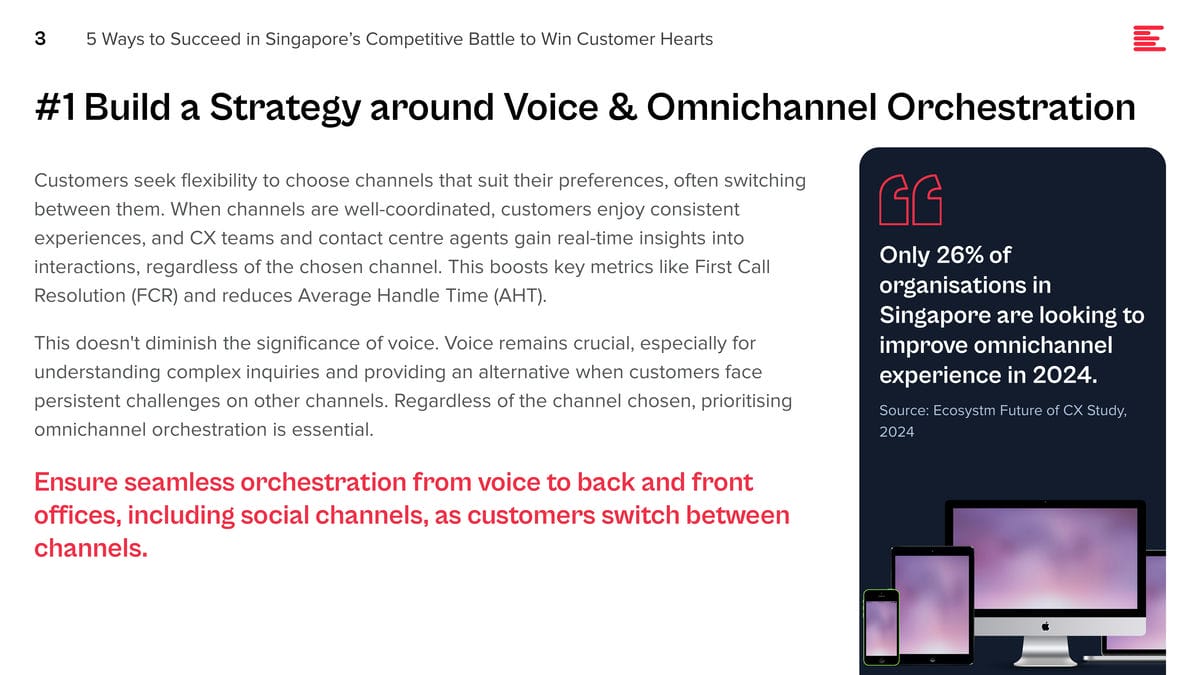

Customers seek flexibility to choose channels that suit their preferences, often switching between them. When channels are well-coordinated, customers enjoy consistent experiences, and CX teams and contact centre agents gain real-time insights into interactions, regardless of the chosen channel. This boosts key metrics like First Call Resolution (FCR) and reduces Average Handle Time (AHT).

This doesn’t diminish the significance of voice. Voice remains crucial, especially for understanding complex inquiries and providing an alternative when customers face persistent challenges on other channels. Regardless of the channel chosen, prioritising omnichannel orchestration is essential.

Ensure seamless orchestration from voice to back and front offices, including social channels, as customers switch between channels.
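The two metrics mentioned above can be computed from basic contact records. The sketch below assumes one common definition of each: FCR as the share of contacts resolved without a follow-up, and AHT as the mean of talk, hold, and wrap-up time per contact. Definitions vary between organisations, and the `Contact` record and its field names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Contact:
    talk_seconds: int
    hold_seconds: int
    wrap_up_seconds: int
    resolved_first_contact: bool


def first_contact_resolution(contacts) -> float:
    """Share of contacts resolved without a follow-up (0.0 to 1.0)."""
    if not contacts:
        return 0.0
    return sum(c.resolved_first_contact for c in contacts) / len(contacts)


def average_handle_time(contacts) -> float:
    """Mean of talk + hold + wrap-up time per contact, in seconds."""
    if not contacts:
        return 0.0
    total = sum(c.talk_seconds + c.hold_seconds + c.wrap_up_seconds for c in contacts)
    return total / len(contacts)


if __name__ == "__main__":
    sample = [
        Contact(300, 30, 60, True),
        Contact(420, 60, 120, False),
        Contact(180, 0, 60, True),
        Contact(240, 20, 40, True),
    ]
    print(f"FCR: {first_contact_resolution(sample):.0%}")
    print(f"AHT: {average_handle_time(sample):.1f} s")
```

For the four sample contacts, three are resolved on first contact (FCR of 75%) and the total handle time is 1,530 seconds (AHT of 382.5 seconds). Tracking both together matters because pushing AHT down in isolation can reduce FCR.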

#2 Unify Customer Data through an Intelligent Data Hub

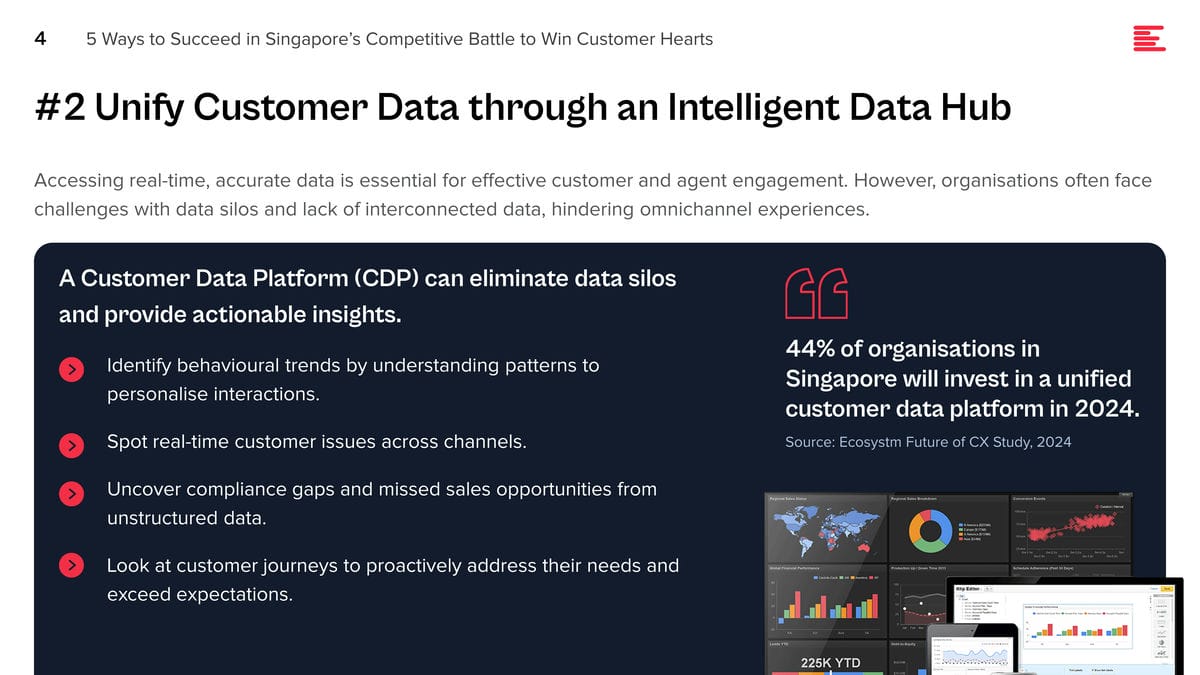

Accessing real-time, accurate data is essential for effective customer and agent engagement. However, organisations often face challenges with data silos and lack of interconnected data, hindering omnichannel experiences.

A Customer Data Platform (CDP) can eliminate data silos and provide actionable insights.

- Identify behavioural trends by understanding patterns to personalise interactions.

- Spot real-time customer issues across channels.

- Uncover compliance gaps and missed sales opportunities from unstructured data.

- Look at customer journeys to proactively address their needs and exceed expectations.

#3 Transform CX & EX with AI

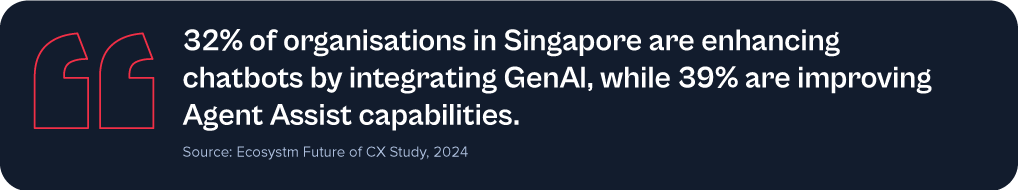

GenAI and Large Language Models (LLMs) are revolutionising how brands address customer and employee challenges, boosting efficiency and enhancing service quality.

Despite 62% of Singapore organisations investing in virtual assistants/conversational AI, many have yet to integrate emerging technologies to elevate their CX & EX capabilities.

Agent Assist solutions provide real-time insights before customer interactions, optimising service delivery and saving time. With GenAI, they can automate mundane tasks like call summaries, freeing agents to focus on high-value tasks such as sales collaboration, proactive feedback management, personalised outbound calls, and upskilling.

Going beyond chatbots and Agent Assist solutions, predictive AI algorithms leverage customer data to forecast trends and optimise resource allocation. AI-driven identity validation swiftly confirms customer identities, mitigating fraud risks.

#4 Augment Existing Systems for Success

Despite the rise in digital interactions, many organisations struggle to fully modernise their legacy systems.

For those managing multiple disparate systems yet aiming to lead in CX transformation, a platform that integrates the desired capabilities for holistic CX and EX is vital.

A unified platform streamlines application management, ensuring cohesion, unified KPIs, enhanced security, simplified maintenance, and single sign-on for agents. This approach offers consistent experiences across channels and early issue detection, eliminating the need to navigate multiple applications or projects.

Capabilities that a platform should have:

- Programmable APIs to deliver messages across preferred social and messaging channels.

- Modernisation of outdated IVRs with self-service automation.

- Transformation of static mobile apps into engaging experience tools.

- Fraud prevention across channels through immediate phone number verification APIs.

#5 Focus on Proactive CX

In the new CX economy, organisations must meet customers on their terms, proactively engaging them before they initiate interactions. This will require organisations to re-evaluate all aspects of their CX delivery.

- Redefine the Contact Centre. Transform it into an “Intelligent” Data Hub providing unified and connected experiences. Leverage intelligent APIs to proactively manage customer interactions seamlessly across journeys.

- Reimagine the Agent’s Role. Empower agents to be AI-powered brand ambassadors, with access to prior and real-time interactions, instant decision-making abilities, and data-led knowledge bases.

- Redesign the Channel and Brand Experience. Ensure consistent omnichannel experiences through data unification and coherency. Use programmable APIs to personalise conversations and identify customer preferences for real-time or asynchronous messaging. Incorporate innovative technologies such as video to enhance the channel experience.

Customer feedback is at the heart of Customer Experience (CX). But it’s changing. What we consider customer feedback, how we collect and analyse it, and how we act on it are all changing. Today, an estimated 80-90% of customer data is unstructured. Are you able and ready to leverage insights from that vast amount of customer feedback data?

Let’s begin with the basics: What is VoC and why is there so much buzz around it now?

Voice of the Customer (VoC) traditionally refers to customer feedback programs. In its most basic form that means organisations are sending surveys to customers to ask for feedback. And for a long time that really was the only way for organisations to understand what their customers thought about their brand, products, and services.

But that was way back then. Over the last few years, we’ve seen the market (organisations and vendors) dipping their toes into the world of unsolicited feedback.

What’s unsolicited feedback, you ask?

Unsolicited feedback simply means organisations didn’t actually ask for it and they’re often not in control over it, but the customer provides feedback in some way, shape, or form. That’s quite a change to the traditional survey approach, where they got answers to questions they specifically asked (solicited feedback).

Unsolicited feedback is important for many reasons:

- Organisations can tap into a much wider range of feedback sources, from surveys to contact centre phone calls, chats, emails, complaints, social media conversations, online reviews, CRM notes – the list is long.

- Surveys have many advantages, but also many disadvantages. Organisations hear only from a very specific customer type (those who respond, typically at the extreme ends of the feedback sentiment), get feedback only on the questions they ask, and hear from a very small portion of the customer base (think email open rates and survey fatigue).

- With unsolicited feedback organisations hear from 100% of the customers who interact with the brand. They hear what customers have to say, and not just how they answer predefined questions.

It is a huge step up, especially from the traditional post-call survey. Imagine a customer who has just spent 30 minutes on the line with an agent explaining their problem and frustration, only to receive a post-call survey asking them to repeat what they just told the agent and how they felt about the experience. Organisations should already know that. In fact, they probably do – they just haven’t started tapping into that data yet. At least not for CX and customer insights purposes.

When does GenAI feature?

We can now tap into those raw feedback sources and analyse the unstructured data in a way never seen before. Long gone are the days of manually reading through or coding survey verbatims in Excel (although I’m well aware that that’s still happening!). Tech, in particular GenAI and Large Language Models (LLMs), is now helping organisations declutter all the messy conversations and unstructured data. Not only is the quality of the analysis greatly enhanced, but the insights are also presented in user-friendly formats. Customer teams ask for the insights they need, and the tools return them as text, graphs, tables, and so on.

The time from raw data to insights has reduced drastically, from hours and days down to seconds. Not only has the speed, quality, and ease of analysis improved, but many vendors are now integrating recommendations into their offerings. The tools can provide “basic” recommendations to help customer teams to act on the feedback, based on the insights uncovered.

Think of all the productivity gains and spare time organisations now have to act on the insights and drive positive CX improvements.

What does that mean for CX Teams and Organisations?

Including unsolicited feedback into the analysis to gain customer insights also changes how organisations set up and run CX and insights programs.

It’s important to understand that feedback doesn’t belong to a single person or team. CX is a team sport, particularly when it comes to acting on insights. It’s essential to share these insights with the right people, at the right time.

Some common misperceptions:

- Surveys have “owners” and only the owners can see that feedback.

- Feedback that comes through a specific channel is specific to that channel or product.

- Contact centre feedback is only collected to coach staff.

If that’s how organisations have built their programs, they’ll have to rethink what they’re doing.

If organisations think about some of the more commonly used unstructured feedback, such as that from the contact centre or social media, it’s important to note that this feedback isn’t solely about the contact centre or social media teams. It’s about something else. In fact, it’s usually about something that created friction in the customer experience, that was generated by another team in the organisation. For example: An incorrect bill can lead to a grumpy social media post or a faulty product can lead to a disgruntled call to the contact centre. If the feedback is only shared with the social media or contact centre team, how will the underlying issues be resolved? The frontline teams service customers, but organisations also need to fix the underlying root causes that created the friction in the first place.

And that’s why organisations need to start consolidating the feedback data and democratise it.

It’s time to break down data and organisational silos and truly start thinking about the customer. No more silos. Instead, organisations must focus on a centralised customer data repository and data democratisation to share insights with the right people at the right time.
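One way to picture "sharing insights with the right people" is a routing step that sits on top of the consolidated feedback. The sketch below is a deliberately simple illustration: it pools feedback from several sources and assigns each item to the team that owns the likely root cause, using keyword matching as a stand-in for the LLM-based analysis discussed earlier. The `TOPIC_OWNERS` mapping, team names, and `route_feedback` function are all hypothetical.

```python
from collections import defaultdict

# Hypothetical keyword-to-owner mapping. A real programme would more likely
# use an LLM or a trained classifier to tag topics.
TOPIC_OWNERS = {
    "bill": "billing",
    "invoice": "billing",
    "faulty": "product",
    "broken": "product",
    "delivery": "logistics",
}


def route_feedback(feedback_items):
    """Group feedback by the team that owns the likely root cause.

    Each item is a dict with "source" and "text" keys. Items that match no
    keyword go to an "unassigned" queue for manual triage.
    """
    routed = defaultdict(list)
    for item in feedback_items:
        text = item["text"].lower()
        owners = {owner for keyword, owner in TOPIC_OWNERS.items() if keyword in text}
        for owner in owners or {"unassigned"}:
            routed[owner].append(item)
    return dict(routed)


if __name__ == "__main__":
    consolidated = [
        {"source": "social", "text": "My bill is wrong again!"},
        {"source": "contact_centre", "text": "The router arrived faulty."},
        {"source": "survey", "text": "Great service, thanks."},
    ]
    for team, items in route_feedback(consolidated).items():
        print(team, [i["source"] for i in items])
```

In this sketch the social media complaint about an incorrect bill reaches the billing team and the contact centre call about a faulty product reaches the product team, rather than either staying with the frontline team that received it.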

In my next Ecosystm Insights, I will discuss some of the tech options that CX teams have. Stay tuned!

The White House has mandated federal agencies to conduct risk assessments on AI tools and appoint officers, including Chief Artificial Intelligence Officers (CAIOs), for oversight. This directive, led by the Office of Management and Budget (OMB), aims to modernise government AI adoption and promote responsible use. Agencies must integrate AI oversight into their core functions, ensuring safety, security, and ethical use. CAIOs will be tasked with assessing AI’s impact on civil rights and market competition. Agencies have until December 1, 2024, to address non-compliant AI uses, emphasising swift implementation.

How will this impact global AI adoption? Ecosystm analysts share their views.


The Larger Impact: Setting a Global Benchmark

This sets a potential global benchmark for AI governance, with the U.S. leading the way in responsible AI use, inspiring other nations to follow suit. The emphasis on transparency and accountability could boost public trust in AI applications worldwide.

The appointment of CAIOs across U.S. federal agencies marks a significant shift towards ethical AI development and application. Through mandated risk management practices, such as independent evaluations and real-world testing, the government recognises AI’s profound impact on rights, safety, and societal norms.

This isn’t merely a regulatory action; it’s a foundational shift towards embedding ethical and responsible AI at the heart of government operations. The balance struck between fostering innovation and ensuring public safety and rights protection is particularly noteworthy.

This initiative reflects a deep understanding of AI’s dual-edged nature – the potential to significantly benefit society, countered by its risks.

The Larger Impact: Blueprint for Risk Management

In what is likely a world first, AI brings together technology, legal, and policy leaders in a concerted effort to put guardrails around a new technology before a major disaster materialises. These efforts span from technology firms providing a form of legal assurance for use of their products (for example Microsoft’s Customer Copyright Commitment) to parliaments ratifying AI regulatory laws (such as the EU AI Act) to the current directive of installing AI accountability in US federal agencies just in the past few months.

It is universally accepted that AI needs risk management to be responsible and acceptable – installing an accountable C-suite role is another major step in AI risk mitigation.

This is an interesting move for three reasons:

- The balance of innovation versus governance and risk management.

- Accountability mandates for each agency’s use of AI in a public and transparent manner.

- Transparency mandates regarding AI use cases and technologies, including those that may impact safety or rights.

Impact on the Private Sector: Greater Accountability

AI governance is one of the rare occasions where government action moves faster than the private sector. While the immediate pressure is now on US federal agencies (and there are 438 of them) to identify and appoint CAIOs, the announcement sends a clear signal to the private sector.

Following hot on the heels of recent AI legislation steps, it puts AI governance straight into the boardroom. The air is getting very thin for enterprises still in denial that AI governance has advanced to strategic importance. And unlike the CFC ban in the Eighties (the Montreal Protocol likely set the record for concerted global action), this time the technology providers are fully on board.

There’s no excuse for delaying the acceleration of AI governance and establishing accountability for AI within organisations.

Impact on Tech Providers: More Engagement Opportunities

Technology vendors are poised to benefit from the medium to long-term acceleration of AI investment, especially those based in the U.S., given government agencies’ preferences for local sourcing.

In the short term, our advice to technology vendors and service partners is to actively engage with CAIOs in client agencies to identify existing AI usage in their tools and platforms, as well as algorithms implemented by consultants and service partners.

Once AI guardrails are established within agencies, tech providers and service partners can expedite investments by determining which of their platforms, tools, or capabilities comply with specific guardrails and which do not.

Impact on SE Asia: Promoting a Digital Innovation Hub

By 2030, Southeast Asia is poised to emerge as the world’s fourth-largest economy – much of that growth will be propelled by the adoption of AI and other emerging technologies.

The projected economic growth presents both challenges and opportunities, emphasising the urgency for regional nations to enhance their AI governance frameworks and stay competitive with international standards. This initiative highlights the critical role of AI integration for private sector businesses in Southeast Asia, urging organisations to proactively address AI’s regulatory and ethical complexities. Furthermore, it has the potential to stimulate cross-border collaborations in AI governance and innovation, bridging the U.S., Southeast Asian nations, and the private sector.

It underscores the global interconnectedness of AI policy and its impact on regional economies and business practices.

By leading with a strategic approach to AI, the U.S. sets an example for Southeast Asia and the global business community to reevaluate their AI strategies, fostering a more unified and responsible global AI ecosystem.

The Risks

U.S. government agencies face the challenge of sourcing experts in technology, legal frameworks, risk management, privacy regulations, civil rights, and security, while also identifying ongoing AI initiatives. Establishing a unified definition of AI and cataloguing processes involving ML, algorithms, or GenAI is essential, given AI’s integral role in organisational processes over the past two decades.

However, there’s a risk that focusing on AI governance may hinder adoption.

The role should prioritise establishing AI guardrails to expedite compliant initiatives while flagging those needing oversight. While these guardrails will facilitate “safe AI” investments, the documentation process could potentially delay progress.

The initiative also echoes a 20th-century mindset for a 21st-century dilemma. Hiring leaders and forming teams feel like a traditional approach. Today, organisations can increase productivity by considering AI and automation as initial solutions. Investing more time upfront to discover initiatives, set guardrails, and implement AI decision-making processes could significantly improve CAIO effectiveness from the outset.

Despite financial institutions’ unwavering efforts to safeguard their customers, scammers continually evolve to exploit advancements in technology. For example, the number of scams and cybercrimes reported to the police in Singapore increased by a staggering 49.6% to 50,376 at an estimated cost of USD 482M in 2023. GenAI represents the latest challenge to the industry, providing fraudsters with new avenues for deception.

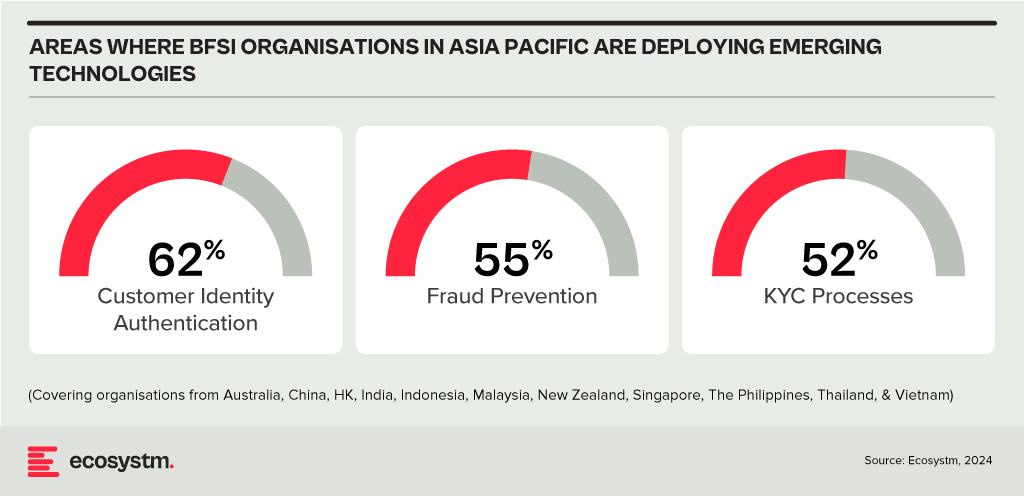

Ecosystm research shows that BFSI organisations in Asia Pacific are spending more on technologies to authenticate customer identity and prevent fraud than on their Know Your Customer (KYC) processes.

The Evolution of the Threat Landscape in BFSI

Synthetic Identity Fraud. This involves the creation of fictitious identities by combining real and fake information, distinct from traditional identity theft where personal data is stolen. These synthetic identities are then exploited to open fraudulent accounts, obtain credit, or engage in financial crimes, often evading detection due to their lack of association with real individuals. The Deloitte Centre for Financial Services predicts that synthetic identity fraud will result in USD 23B in losses by 2030. Synthetic fraud is posing significant challenges for financial institutions and law enforcement agencies, especially with the emergence of advanced technologies like GenAI being used to produce realistic documents blending genuine and false information, undermining Know Your Customer (KYC) protocols.

AI-Enhanced Phishing. Ecosystm research reveals that in Asia Pacific, 71% of customer interactions in BFSI occur across multiple digital channels, including mobile apps, emails, messaging, web chats, and conversational AI. In fact, 57% of organisations plan to further improve customer self-service capabilities to meet the demand for flexible and convenient service delivery. The proliferation of digital channels brings with it an increased risk of phishing attacks.

While these organisations continue to educate their customers on how to secure their accounts in a digital world, GenAI poses an escalating threat here as well. Phishing schemes will employ widely available LLMs to generate convincing text and even images. For many potential victims, misspellings and strangely worded appeals are the only hint that an email from their bank is not what it seems. The maturing of deepfake technology will also make it possible for malicious agents to create personalised voice and video attacks.

Identity Fraud Detection and Prevention

Although fraudsters are exploiting every new vulnerability, financial organisations also have new tools to protect their customers. Organisations should build a layered defence to prevent increasingly sophisticated attempts at fraud.

- Behavioural analytics. Using machine learning, financial organisations can differentiate between standard activities and suspicious behaviour at the account level. Data that can be analysed includes purchase patterns, unusual transaction values, VPN use, browser choice, log-in times, and impossible travel. Anomalies can be flagged, and additional security measures initiated to stem the attack.

- Passive authentication. Accounts can be protected even before password or biometric authentication by analysing additional data, such as phone number and IP address. This approach can be enhanced by comparing databases populated with the details of suspicious actors.

- SIM swap detection. SMS-based MFA is vulnerable to SIM swap attacks where a customer’s phone number is transferred to the fraudster’s own device. This can be prevented by using an authenticator app rather than SMS. Alternatively, SIM swap history can be detected before sending one-time passwords (OTPs).

- Breached password detection. Although customers are strongly discouraged from reusing passwords across sites, some inevitably will. By employing a service that maintains a database of credentials leaked during third-party breaches, it is possible to compare these with active customer passwords and initiate a reset when a match is found.

- Stronger biometrics. Phone-based fingerprint recognition has helped financial organisations safeguard against fraud and simplify the authentication experience. Advances in biometrics continue, with recognition for faces, retina, iris, palm print, and voice making multimodal biometric protection possible. Liveness detection will grow in importance to combat AI-generated content.

- Step-up validation. Authentication requirements can be differentiated according to risk level. Lower risk activities, such as a balance check or internal transfer, may only require minimal authentication, while higher risk ones, like international or cryptocurrency transactions, may require a step up in validation. When anomalous behaviour is detected, even greater levels of security can be initiated.
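To make the layering concrete, here is a minimal sketch of how one behavioural signal (impossible travel) might feed a step-up decision. It is an illustration only: the `Login` type, the speed and distance thresholds, the action names, and the three authentication tiers are all assumptions made for this example, not a description of any particular vendor's product.

```python
from dataclasses import dataclass
from math import asin, cos, radians, sin, sqrt

EARTH_RADIUS_KM = 6371.0
MAX_PLAUSIBLE_SPEED_KMH = 900.0  # assumption: roughly airliner cruising speed
MIN_DISTANCE_KM = 50.0           # assumption: ignore small jumps (geolocation noise)


@dataclass
class Login:
    """Hypothetical login record with an approximate location."""
    lat: float
    lon: float
    timestamp_s: float  # seconds since the Unix epoch


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points, in kilometres."""
    dlat = radians(lat2 - lat1)
    dlon = radians(lon2 - lon1)
    a = sin(dlat / 2) ** 2 + cos(radians(lat1)) * cos(radians(lat2)) * sin(dlon / 2) ** 2
    return 2 * EARTH_RADIUS_KM * asin(sqrt(a))


def is_impossible_travel(prev: Login, curr: Login) -> bool:
    """Flag two logins whose implied travel speed is not physically plausible."""
    distance = haversine_km(prev.lat, prev.lon, curr.lat, curr.lon)
    if distance < MIN_DISTANCE_KM:
        return False
    # Treat anything closer than one second apart as one second to avoid dividing by zero.
    hours = max(curr.timestamp_s - prev.timestamp_s, 1.0) / 3600
    return distance / hours > MAX_PLAUSIBLE_SPEED_KMH


# Illustrative baseline tiers per action; unknown actions default to the stricter tier.
BASE_LEVEL = {
    "balance_check": 1,
    "internal_transfer": 1,
    "international_transfer": 2,
    "crypto_transaction": 2,
}
LEVEL_NAMES = {1: "minimal", 2: "step_up", 3: "step_up_plus_review"}


def required_auth_level(action: str, anomalous: bool) -> str:
    """Raise the baseline tier by one (capped at 3) when behaviour looks anomalous."""
    level = BASE_LEVEL.get(action, 2)
    if anomalous:
        level = min(level + 1, 3)
    return LEVEL_NAMES[level]


if __name__ == "__main__":
    singapore = Login(1.35, 103.82, 0)
    london_one_hour_later = Login(51.51, -0.13, 3600)
    flagged = is_impossible_travel(singapore, london_one_hour_later)
    print(flagged, required_auth_level("balance_check", flagged))
```

In practice the anomaly flag would come from a richer model that also weighs purchase patterns, device, and log-in times; the point of the sketch is only that the analytics output and the authentication policy are combined in one decision.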

Recommendations

- Reduce friction. While it may be tempting to implement heavy-handed approaches to prevent fraud, it is also important to minimise friction in the authentication system. Frustrated users may abandon services or find risky ways to circumvent security. An effective layered defence should act in the background to prevent attackers getting close.

- AI Phishing Awareness. Even the savviest of customers could fall prey to advanced phishing attacks that use GenAI. Social engineering at scale becomes more feasible with each advance in AI. Monitor emerging global phishing activities and remind customers to be ever vigilant of more polished and personalised phishing attempts.

- Deploy an authenticator app. Consider shifting away from SMS OTPs as an MFA method and implementing either a standalone authenticator app or one embedded in the financial app.

- Integrate authentication with fraud analytics. Select an authentication provider that can integrate its offering with analytics to identify fraud or unusual behaviour during account creation, log in, and transactions. The two systems should work in tandem.

- Take a zero-trust approach. Protecting both customers and employees is critical, particularly in the hybrid work era. Implement zero-trust tools to prevent employees from falling victim to malicious attacks and to minimise the damage if they do.
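For teams weighing the authenticator app recommendation, it may help to see why app-generated codes do not depend on the phone number. Many authenticator apps follow the time-based one-time password (TOTP) scheme in RFC 6238, where the code is derived on the device from a shared secret and the current time. The sketch below assumes that scheme with its common defaults (HMAC-SHA1, 30-second steps, 6 digits); the function names and the one-step drift window are choices made for this illustration.

```python
import hashlib
import hmac
import struct


def totp(secret: bytes, unix_time: int, step_s: int = 30, digits: int = 6) -> str:
    """Derive a time-based one-time password in the style of RFC 6238 (HMAC-SHA1)."""
    counter = unix_time // step_s  # number of time steps since the epoch
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # dynamic truncation, as defined in RFC 4226
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def verify(secret: bytes, submitted: str, unix_time: int,
           window: int = 1, step_s: int = 30) -> bool:
    """Accept codes from the current step and `window` steps either side (clock drift)."""
    return any(
        hmac.compare_digest(totp(secret, unix_time + drift * step_s, step_s), submitted)
        for drift in range(-window, window + 1)
    )


if __name__ == "__main__":
    demo_secret = b"12345678901234567890"  # test secret from the RFC, never use in production
    print(totp(demo_secret, 59))
```

Because the code is computed from a secret provisioned to the device, transferring the customer's phone number to another SIM does not give the fraudster a way to generate it.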

Healthcare delivery and healthtech have made significant strides; yet, the fundamental challenges in healthcare have remained largely unchanged for decades. The widespread acceptance and integration of digital solutions in recent years have supported healthcare providers’ primary goals of enhancing operational efficiency, better resource utilisation (with addressing skill shortages being a key driver), improving patient experience, and achieving better clinical outcomes. With governments pushing for advancements in healthcare outcomes at sustainable costs, the concept of value-based healthcare has gained traction across the industry.

Technology-driven Disruption

Healthcare saw significant disruptions four years ago, and while we will continue to feel the impact for the next decade, one positive outcome was witnessing the industry’s ability to transform amid such immense pressure. I am definitely not suggesting another healthcare calamity! But disruptions can have a positive impact – and I believe that technology will continue to disrupt healthcare at pace. Recently, my colleague Tim Sheedy shared his thoughts on how 2024 is poised to become the year of the AI startup, highlighting innovative options that organisations should consider in their AI journeys. AI startups and innovators hold the potential to further the “good disruption” that will transform healthcare.

Of course, there are challenges, including concerns about ethics and privacy, the reliability of technology – particularly while scaling – and professional liability. However, the industry cannot overlook the substantial number of innovative startups that are using AI technologies to address some of the most pressing challenges in the healthcare industry.

Why Now?

AI is not new to healthcare. Many would cite the development of MYCIN – an early AI program aimed at identifying treatments for blood infections – as the first known example. It kindled interest in AI research, and during the 1980s and 1990s AI brought about early healthcare breakthroughs, including faster data collection and processing, enhanced precision in surgical procedures, and research and mapping of diseases.

Now, healthcare is at an AI inflection point due to a convergence of three significant factors.

- Advanced AI. AI algorithms and capabilities have become more sophisticated, enabling them to handle complex healthcare data and tasks with greater accuracy and efficiency.

- Demand for Accessible Healthcare. Healthcare systems globally are striving for better care amid resource constraints, turning to AI for efficiency, cost reduction, and broader access.

- Consumer Demand. As people seek greater control over their health, personalised care has become essential. AI can analyse vast patient data to identify health risks and customise care plans, promoting preventative healthcare.

Promising Health AI Startups

As innovative startups continue to emerge in healthcare, we’re particularly keeping an eye on those poised to revolutionise diagnostics, care delivery, and wellness management. Here are some examples.

DIAGNOSTICS

- Claritas HealthTech has created advanced image enhancement software to address challenges in interpreting unclear medical images, improving image clarity and precision. A cloud-based platform with AI diagnostic tools uses their image enhancement technology to achieve greater predictive accuracy.

- Ibex offers Galen, a clinical-grade, multi-tissue platform to detect and grade cancers that integrates with third-party digital pathology software solutions, scanning platforms, and laboratory information systems.

- MEDICAL IP is focused on advancing medical imaging analysis through AI and 3D technologies (such as 3D printing, CAD/CAM, AR/VR) to streamline medical processes, minimising time and costs while enhancing patient comfort.

- Verge Genomics is a biopharmaceutical startup employing systems biology to expedite the development of life-saving treatments for neurodegenerative diseases. By leveraging patient genomes, gene expression, and epigenomics, the platform identifies new therapeutic gene targets, forecasts effective medications, and categorises patient groups for enhanced clinical efficacy.

- X-Zell focuses on advanced cytology, diagnosing diseases through single atypical cells or clusters. Their plug-and-play solution detects, visualises, and digitises these phenomena in minimally invasive body fluids. With no complex specimen preparation required, it slashes the average sample-to-diagnosis time from 48 hours to under 4 hours.

CARE DELIVERY

- Abridge specialises in automating clinical notes and medical discussions for physicians, converting patient-clinician conversations into structured clinical notes in real time, powered by GenAI. It integrates seamlessly with EMRs such as Epic.

- Waltz Health offers AI-driven marketplaces aimed at reducing costs and innovative consumer tools to facilitate informed care decisions. Tailored for payers, pharmacies, and consumers, they introduce a fresh approach to pricing and reimbursing prescriptions that allows consumers to purchase medication at the most competitive rates, improving accessibility.

- Acorai offers a non-invasive intracardiac pressure monitoring device for heart failure management, aimed at reducing hospitalisations and readmissions. The technology can analyse acoustic, vibratory, and waveform data using ML to monitor intracardiac pressures.

WELLNESS MANAGEMENT

- Anya offers AI-driven support for women navigating life stages such as fertility, pregnancy, parenthood, and menopause. For example, it provides support during the critical first 1,001 days of the parental journey, with personalised advice, tracking of developmental milestones, and connections with healthcare professionals.

- Dacadoo’s digital health engagement platform aims to motivate users to adopt healthier lifestyles through gamification, social connectivity, and personalised feedback. By analysing user health data, AI algorithms provide tailored insights, goal-setting suggestions, and challenges.

Conclusion

There is no question that innovative startups can solve many challenges for the healthcare industry. But startups flourish because of a supportive ecosystem. The health innovation ecosystem needs to be a dynamic network of stakeholders committed to transforming the industry and health outcomes – and this includes healthcare providers, researchers, tech companies, startups, policymakers, and patients. Together we can achieve the longstanding promise of accessible, cost-effective, and patient-centric healthcare.

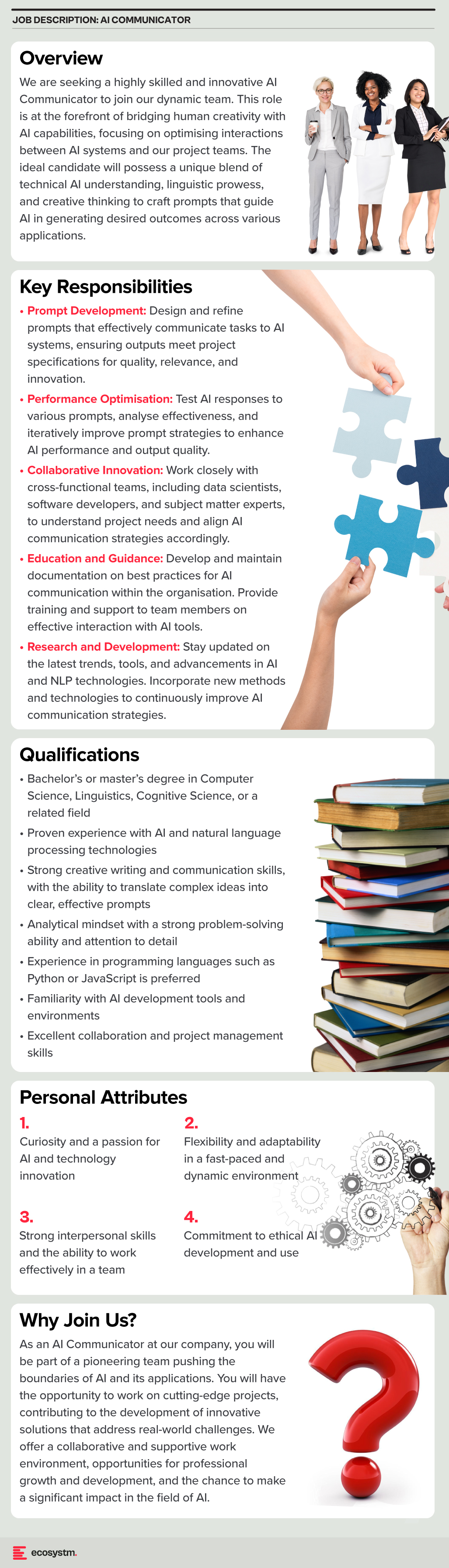

As AI evolves rapidly, the emergence of GenAI technologies such as GPT models has sparked a novel and critical role: prompt engineering. This specialised function is becoming indispensable in optimising the interaction between humans and AI, serving as a bridge that translates human intentions into prompts that guide AI to produce desired outcomes. In this Ecosystm Insight, I will explore the importance of prompt engineering, highlighting its significance, responsibilities, and the impact it has on harnessing AI’s full potential.

Understanding Prompt Engineering

Prompt engineering is an interdisciplinary role that combines elements of linguistics, psychology, computer science, and creative writing. It involves crafting inputs (prompts) that are specifically designed to elicit the most accurate, relevant, and contextually appropriate responses from AI models. This process requires a nuanced understanding of how different models process information, as well as creativity and strategic thinking to manipulate these inputs for optimal results.

As GenAI applications become more integrated across sectors – ranging from creative industries to technical fields – the ability to effectively communicate with AI systems has become a cornerstone of leveraging AI capabilities. Prompt engineers play a crucial role in this scenario, refining the way we interact with AI to enhance productivity, foster innovation, and create solutions that were previously unimaginable.

The Art and Science of Crafting Prompts

Prompt engineering is as much an art as it is a science. It demands a balance between technical understanding of AI models and the creative flair to engage these models in producing novel content. A well-crafted prompt can be the difference between an AI generating generic, irrelevant content and producing work that is insightful, innovative, and tailored to specific needs.

Key responsibilities in prompt engineering include:

- Prompt Optimisation. Fine-tuning prompts to achieve the highest quality output from AI models. This involves understanding the intricacies of model behaviour and leveraging this knowledge to guide the AI towards desired responses.

- Performance Testing and Iteration. Continuously evaluating the effectiveness of different prompts through systematic testing, analysing outcomes, and refining strategies based on empirical data.

- Cross-Functional Collaboration. Engaging with a diverse team of professionals, including data scientists, AI researchers, and domain experts, to ensure that prompts are aligned with project goals and leverage domain-specific knowledge effectively.

- Documentation and Knowledge Sharing. Developing comprehensive guidelines, best practices, and training materials to standardise prompt engineering methodologies within an organisation, facilitating knowledge transfer and consistency in AI interactions.
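The testing and iteration responsibility above can be pictured as a small evaluation loop: run each candidate prompt over the same labelled cases, score the outputs, and keep the best performer. The sketch below is a minimal illustration under stated assumptions. `fake_model` is a hypothetical stand-in that returns canned text so the example runs offline; in a real project it would be replaced by a call to whichever model the team uses, and exact-match scoring would likely give way to a richer metric.

```python
from typing import Callable, List, Tuple


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for a real model call; returns canned text for the demo."""
    if "one word" in prompt.lower():
        return "Positive" if "love" in prompt else "Negative"
    return "The review expresses a feeling that could be described in several ways."


def score_prompt(template: str, cases: List[Tuple[str, str]],
                 model: Callable[[str], str]) -> float:
    """Fraction of test cases where the model output exactly matches the expected label."""
    hits = 0
    for text, expected in cases:
        output = model(template.format(text=text))
        hits += output.strip().lower() == expected
    return hits / len(cases)


def rank_prompts(templates: List[str], cases: List[Tuple[str, str]],
                 model: Callable[[str], str]) -> List[Tuple[float, str]]:
    """Score every candidate template and return them best first."""
    scored = [(score_prompt(t, cases, model), t) for t in templates]
    return sorted(scored, key=lambda pair: pair[0], reverse=True)


CASES = [
    ("I love this phone", "positive"),
    ("The battery died in a day", "negative"),
]
TEMPLATES = [
    "What do you think about: {text}",
    "Classify the sentiment of this review in one word (positive or negative): {text}",
]

if __name__ == "__main__":
    for score, template in rank_prompts(TEMPLATES, CASES, fake_model):
        print(f"{score:.2f}  {template}")
```

Keeping the test cases fixed while varying only the prompt is what makes the comparison empirical rather than anecdotal, and the ranked results are a natural artefact to record in the team's prompt documentation.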

The Strategic Importance of Prompt Engineering

Effective prompt engineering can significantly enhance the efficiency and outcomes of AI projects. By reducing the need for extensive trial and error, prompt engineers help streamline the development process, saving time and resources. Moreover, their work is vital in mitigating biases and errors in AI-generated content, contributing to the development of responsible and ethical AI solutions.

As AI technologies continue to advance, the role of the prompt engineer will evolve, incorporating new insights from research and practice. The ability to dynamically interact with AI, guiding its creative and analytical processes through precisely engineered prompts, will be a key differentiator in the success of AI applications across industries.

Want to Hire a Prompt Engineer?

Here is a sample job description for a prompt engineer if you think that your organisation will benefit from the role.

Conclusion

Prompt engineering represents a crucial evolution in the field of AI, addressing the gap between human intention and machine-generated output. As we continue to explore the boundaries of what AI can achieve, the demand for skilled prompt engineers – who can navigate the complex interplay between technology and human language – will grow. Their work not only enhances the practical applications of AI but also pushes the frontier of human-machine collaboration, making them indispensable in the modern AI ecosystem.