The latest shift in AI takes us beyond data analysis and content generation. With agentic AI we are now seeing systems that can plan, reason, act autonomously, and adapt based on outcomes. This shift marks a practical turning point for operational execution and strategic agility.

Smart On-Ramps for Agentic AI

Technology providers are rapidly maturing their agentic AI offerings, increasingly packaging them as pre-built agents designed for quick deployment. These often require minimal customisation and target common enterprise needs – onboarding assistants, IT helpdesk agents, internal knowledge copilots, or policy compliance checkers – integrated with existing platforms like Microsoft 365, Salesforce, or ServiceNow.

For example, a bank might deploy a templated underwriting agent to pre-screen loan applications, while a university could roll out a student support bot that flags at-risk learners and nudges them toward action. These plug-and-play pilots let organisations move fast, with lower risk and clearer ROI.

Templated agents won’t suit every context, particularly where rules are complex or data is fragmented. But for many, they offer a smart on-ramp: a focused, contained pilot that delivers value, builds momentum, and lays the groundwork to scale agentic AI more broadly.

Here are 10 such opportunities – five cross-industry and five sector-specific – ideal for launching agentic AI in your organisation. Each addresses a real-world pain point, with measurable impact and momentum for broader change.

Horizontal Use Cases

1. Employee Onboarding & Integration Assistant

An AI agent that guides new hires through their critical first weeks and months by answering FAQs about company policies, automating paperwork, scheduling introductory meetings, and sending personalised reminders to complete mandatory training, all integrated with HRIS, LMS, and calendaring systems. This can help reduce the administrative load on HR teams by handling repetitive onboarding tasks, potentially freeing up significant time, while also improving new hire satisfaction and accelerating time-to-productivity by providing employees with better support and engagement from day one.

Consideration. Begin with a specific department or a targeted hiring wave. Prioritise roles with high turnover or complex onboarding needs. Ensure HR data is clean and accessible, and policy documents are up to date.

2. Automated Meeting Follow-ups & Action Tracking

With permission, AI agents can listen to virtual meetings, identify key discussion points, summarise decisions, extract and assign action items with deadlines, and proactively follow up via email or collaboration platforms like Slack or Teams to help ensure tasks are completed. By integrating with meeting platforms, project management tools, and email, this can reduce the burden of manual note-taking and follow-up, potentially saving team members 1-2 hours per week, while also improving execution rates and accountability to make meetings more action-focused.

Consideration. Deploy with a small, cross-functional team that has frequent meetings. Clearly communicate the agent’s role and data privacy protocols to ensure user comfort and compliance.

3. Intelligent Procurement Assistant

An agent that interprets internal requests, initiates purchase orders, compares vendor options against predefined criteria, flags potential compliance issues based on policies and spending limits, and manages approval workflows, integrating with ERP systems, vendor databases, and internal policy documents. This can help accelerate procurement cycles, reduce manual errors, and lower the risk of non-compliant spending, potentially freeing procurement specialists to focus more on strategic sourcing rather than transactional tasks.

Consideration. Begin with a specific category of low-to-medium value purchases (e.g., office supplies, standard software licenses). Define clear, rule-based policies for the agent to follow.
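To make "clear, rule-based policies" concrete, here is a minimal sketch of the kind of check such an agent might run before raising a purchase order. The spending limit, category list, and all names (`PurchaseRequest`, `check_request`, `route`) are hypothetical and chosen for illustration only; a real deployment would read its policies from the organisation's ERP and policy documents.

```python
from dataclasses import dataclass

# Hypothetical pilot policy: values are illustrative, not recommendations.
SPENDING_LIMIT = 5_000
APPROVED_CATEGORIES = {"office supplies", "software licenses"}


@dataclass
class PurchaseRequest:
    requester: str
    category: str
    amount: float


def check_request(req: PurchaseRequest) -> list[str]:
    """Return policy flags for a request; an empty list means none were raised."""
    flags = []
    if req.category not in APPROVED_CATEGORIES:
        flags.append("category outside pilot scope")
    if req.amount > SPENDING_LIMIT:
        flags.append("amount exceeds spending limit")
    return flags


def route(req: PurchaseRequest) -> str:
    """Send clean requests down the automated path, everything else to a person."""
    return "auto-approve" if not check_request(req) else "human review"
```

Keeping the rules this explicit makes the pilot easy to audit: every flagged request carries the reason it was stopped.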

4. Enhanced Sales/Outreach Research Agent

Given a target account, citizen segment, or potential beneficiary profile, this agent autonomously gathers and synthesises insights from CRM data, public financial records, social media, news feeds, and industry reports. It then generates tailored talking points, personalised outreach messages, and intelligent discovery questions for human operators. This can provide representatives with deeper insights, potentially improving their preparation and boosting early-stage conversion rates, while reducing manual research time significantly and allowing teams to focus more on building relationships.

Consideration. Train the agent on a specific sales vertical or a targeted public outreach campaign. Ensure robust data privacy compliance when accessing and synthesising public information.

5. Proactive Internal IT Helpdesk Agent

This agent enables employees to describe technical issues in natural language through familiar platforms like Slack, Teams, or internal portals. It can intelligently troubleshoot problems, guide users through self-service solutions from a knowledge base, or escalate more complex issues to the appropriate IT specialist, often pre-filling support tickets with relevant diagnostic information. This approach can lead to faster issue resolution, reduce the number of common support tickets, and improve employee satisfaction with IT services, while freeing IT staff to focus on more complex problems and strategic initiatives.

Consideration. Start with a well-documented set of frequently asked questions (FAQs) or common Tier 1 IT issues (e.g., password resets, VPN connection problems). Ensure a clear escalation path to human support.
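The Tier 1 scope and escalation path described above can be sketched as a simple routing function. This is an assumption-laden illustration: plain keyword matching stands in for the natural-language understanding a real agent would use, and the knowledge base entries, queue name, and `triage` function are all hypothetical.

```python
# Hypothetical Tier 1 knowledge base: keyword -> self-service guidance.
KNOWLEDGE_BASE = {
    "password": "Use the self-service portal to reset your password.",
    "vpn": "Restart the VPN client and check your network connection.",
}


def triage(message: str) -> dict:
    """Answer known Tier 1 issues; otherwise escalate with a pre-filled ticket."""
    text = message.lower()
    for keyword, answer in KNOWLEDGE_BASE.items():
        if keyword in text:
            return {"action": "self_service", "reply": answer}
    # No match: hand over to a human with the context already captured.
    return {
        "action": "escalate",
        "ticket": {"summary": message[:80], "queue": "it-specialists"},
    }
```

The important design choice is the default branch: anything the agent does not recognise goes to a person rather than receiving a guessed answer.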

Industry-Specific Use Cases

6. Intelligent Insurance Claims Triage (Insurance)

This agent reviews incoming insurance claims by processing unstructured data such as claim descriptions, photos, and documents. It automatically cross-references policy coverage, identifies missing information, and assigns priority or flags potential fraud based on predefined rules and learned patterns. This can speed up initial claims processing, reduce the manual workload for claims adjusters, and improve the early detection of suspicious claims, helping to lower fraud risk and deliver a faster, more efficient customer experience during a critical time.

Consideration. Focus on a specific, high-volume, and relatively standardised claim type (e.g., minor motor vehicle damage, simple property claims). Ensure robust data integration with policy management and fraud detection systems.

7. Automated Credit Underwriting Assistant (Banking)

An AI agent that pre-screens loan applications by gathering and analysing data from internal banking systems, external credit bureaus, and public records. It identifies key risk factors, generates preliminary credit scores, and prepares initial decision recommendations for human loan officers to review and approve. This can significantly shorten loan processing times, improve consistency in risk assessments, and allow human underwriters to concentrate on more complex cases and customer interactions.

Consideration. Apply this agent to a specific, well-defined loan product (e.g., unsecured personal loans, small business loans) with clear underwriting criteria. Strict human-in-the-loop oversight for final decisions is paramount.
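As a rough sketch of what human-in-the-loop oversight can look like in practice, the example below has the agent produce only a recommendation and leaves the final decision empty for a loan officer. The thresholds, field names, and `pre_screen` function are hypothetical; real underwriting criteria would come from the bank's credit policy.

```python
from dataclasses import dataclass

# Hypothetical underwriting criteria for a single loan product.
MIN_CREDIT_SCORE = 650
MAX_DEBT_TO_INCOME = 0.40


@dataclass
class Application:
    applicant_id: str
    credit_score: int
    debt_to_income: float  # monthly debt payments divided by monthly income


def pre_screen(app: Application) -> dict:
    """Prepare a recommendation for a loan officer; never a final decision."""
    risk_factors = []
    if app.credit_score < MIN_CREDIT_SCORE:
        risk_factors.append("low credit score")
    if app.debt_to_income > MAX_DEBT_TO_INCOME:
        risk_factors.append("high debt-to-income ratio")
    return {
        "applicant_id": app.applicant_id,
        "recommendation": "refer" if risk_factors else "recommend approval",
        "risk_factors": risk_factors,
        "requires_human_review": True,  # always true in this sketch
        "final_decision": None,  # set only by a human loan officer
    }
```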

8. Clinical Trial Workflow Coordinator (Healthcare)

This agent monitors clinical trial timelines, tracks participant progress, flags potential non-compliance or protocol deviations, and coordinates tasks and communication between research teams, labs, and regulatory bodies. Integrated with Electronic Health Records (EHRs), trial management systems, and regulatory databases, it helps reduce delays in complex clinical workflows, improves adherence to strict protocols and regulations, and enhances data quality, potentially speeding up drug development and patient access to new treatments.

Consideration. Focus on a single phase of a trial or specific documentation compliance checkpoints within an ongoing study. Ensure secure and compliant access to sensitive patient and trial data.

9. Predictive Maintenance Scheduler (Manufacturing)

By continuously analysing real-time IoT sensor data from machinery, this agent uses predictive analytics to anticipate potential equipment failures. It then schedules maintenance at optimal times, taking into account production schedules, spare part availability, and technician workloads, and automatically assigns tasks. This approach can significantly boost machine uptime and overall equipment effectiveness by reducing unplanned downtime, optimise technician efficiency, and extend asset lifespan, resulting in notable cost savings.

Consideration. Implement for a critical, high-value machine or a specific production line where downtime is extremely costly. Requires reliable and high-fidelity IoT sensor data.
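A heavily simplified sketch of the two steps described above, detecting a likely problem and then choosing a maintenance slot, might look like this. A rolling average against a fixed limit stands in for a real predictive model, and the vibration limit, window size, and function names are assumptions made for illustration.

```python
from statistics import mean
from typing import Optional

VIBRATION_LIMIT = 7.0  # hypothetical alert level, in mm/s
WINDOW = 3  # number of most recent readings to average


def needs_maintenance(readings: list[float]) -> bool:
    """Flag a machine when the mean of its latest readings exceeds the limit."""
    if len(readings) < WINDOW:
        return False  # not enough data to judge
    return mean(readings[-WINDOW:]) > VIBRATION_LIMIT


def pick_slot(planned_downtime: list[str], technician_free: set[str]) -> Optional[str]:
    """Return the earliest planned downtime slot in which a technician is free."""
    for slot in planned_downtime:
        if slot in technician_free:
            return slot
    return None
```

For example, `pick_slot(["Mon 22:00", "Wed 22:00"], {"Wed 22:00"})` selects the Wednesday slot, because no technician is free on Monday.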

10. Personalised Student Success Advisor (Higher Education)

This agent analyses student performance data such as grades, attendance, and LMS activity to identify those at risk of struggling or dropping out. It then proactively nudges students about upcoming deadlines, recommends personalised learning resources, and connects them with tutoring services or academic advisors. This support can improve retention rates, contribute to better academic outcomes, and enhance the overall student experience by providing timely, tailored assistance.

Consideration. Start with a specific cohort (e.g., first-year students, transfer students) or focus on a particular set of foundational courses. Ensure ethical data usage and transparent communication with students about the agent’s role.

Pilot Success Framework: Getting Started Today

As we have seen in the considerations above, starting with a high-impact, relatively low-risk use case is the recommended approach for beginning an agentic AI journey. This focuses on strategic, measured steps rather than a massive initial overhaul. When selecting a first pilot, organisations should identify projects with clear boundaries – specific data sources, explicit goals, and well-defined actions – avoiding overly ambitious or ambiguous initiatives.

A good pilot tackles a specific pain point and delivers measurable benefits, whether through time savings, fewer errors, or improved user satisfaction. Choosing scenarios with limited stakeholder risk and minimal disruption allows for learning and iteration without significant operational impact.

Executing a pilot effectively under these guidelines can generate momentum, earn stakeholder support, and lay the groundwork for scaling AI-driven transformation throughout the organisation. The future of autonomous operations begins with such focused pilots.

As one of Asia’s most digitally mature economies, Singapore was an early mover in national digital transformation and is now turning that head start into resilient, innovation-led economic value. Today, the conversation across boardrooms, regulators, and industry circles has evolved: it’s no longer just about adopting technology but about embedding digital as a systemic driver of competitiveness, inclusion, and sustained growth.

Singapore’s approach offers a model for the region, with its commitment to building a holistic digital ecosystem. This goes beyond infrastructure: it includes nurturing digital talent, fostering a vibrant innovation and startup culture, enabling trusted cross-border data flows, and championing public-private collaboration. Crucially, its forward-looking regulatory stance balances support for experimentation with the need to uphold public trust.

Through our conversations with leaders in Singapore and Ecosystm’s broader research, we see a country intentionally architecting its digital future, focused on real-world outcomes, regional relevance, and long-term economic resilience.

Here are five insights that capture the pulse of Singapore’s digital transformation.

Theme 1: Digital Governance as Strategy: Setting the Pace for Innovation & Trust

Singapore’s approach to digital governance goes beyond policy. It’s a deliberate strategy to build trust, accelerate innovation, and maintain economic competitiveness. The guiding principle is clear: technology must be both transformative and trustworthy.

This vision is clearly visible in the public sector, where digital platforms and services are setting the pace for the rest of the economy. Public service apps are designed to be citizen-centric, secure, and efficient, demonstrating how digital delivery can work at scale. The Government Tech Stack allows agencies to rapidly build and integrate services using shared APIs, cloud infrastructure, and secure data layers. Open data initiatives like Data.gov.sg unlock thousands of datasets, while tools such as FormSG and SG Notify make it easy for any organisation to digitise services and engage users in real time.

By leading with well-designed digital infrastructure and standards, the public sector creates blueprints that others can adopt, lowering the barriers to innovation for businesses of all sizes. For SMEs in particular, these tools and frameworks offer a practical foundation to modernise operations and participate more fully in the digital economy.

Singapore is also setting clear rules for responsible tech. IMDA’s Trusted Data Sharing Framework and AI Verify establish standards for secure data use and transparent AI, giving businesses the certainty they need to innovate with confidence. All of this is underpinned by strategic investments in digital infrastructure, including a new generation of sustainable, high-capacity data centres to meet growing regional demand. In Singapore, digital governance isn’t a constraint, it’s a catalyst.

Theme 2: AI in Singapore: From Experimentation to Accountability

Few places have embraced AI’s potential as strongly as Singapore. In 2022 and 2023, fuelled by the National AI Strategy and commercial pressure to deliver results, organisations across industries rushed into pilots. Ecosystm research shows that by 2024, nearly 82% of large enterprises in Singapore were experimenting with AI, with 37% deploying it across multiple departments.

However, that initial wave of excitement soon gave way to realism. Leaders now speak candidly about AI fatigue and the growing demand for measurable returns. The conversation has shifted from “What can we automate?” to “What’s actually worth scaling?” Organisations are scrutinising whether their AI projects deliver tangible value, integrate into daily operations, and meet evolving regulatory expectations.

This maturity is especially visible in Singapore’s banking sector, where the stakes are high and scrutiny is intense. Banks were among the first to embrace AI aggressively and are now leading the shift toward disciplined prioritisation. From actively hunting down use cases, they’ve pivoted to focusing on the select few that deliver real business outcomes. With increasing pressure to ensure transparency, auditability, and alignment with global standards, finance leaders are setting the tone for AI accountability across the economy.

The result: a more grounded, impact-focused AI strategy. While many regional peers are still chasing pilots, Singapore is entering a new phase, defined by fewer but better AI initiatives, built to solve real problems and deliver meaningful ROI.

Theme 3: The Cyber Imperative: Trust, Recovery, and Resilience

Singapore’s digital leadership brings not only opportunities but also increased exposure to cyber threats. In 2024 alone, the country faced 21 million cyberattacks, ranking eighth globally as both a target and a source. High-profile breaches, from vendor compromises affecting thousands of banking customers to earlier incidents like the SingHealth data breach, have exposed vulnerabilities across critical sectors.

These incidents have sparked a fundamental shift in Singapore’s cybersecurity mindset from building impenetrable digital fortresses to embracing digital resilience. The government recognises that breaches are inevitable and prioritises rapid containment and recovery over prevention alone. Regulatory bodies like MAS have tightened incident reporting rules, demanding quicker, more transparent responses from affected organisations.

For enterprises in Singapore, cybersecurity has moved beyond a technical challenge to become a strategic imperative deeply tied to customer trust and business continuity. Leaders are investing heavily in real-time threat detection, incident response, and crisis management capabilities. In a landscape where vulnerabilities are real and constant, cyber resilience is now a critical competitive advantage because in Singapore’s digital economy, trust and operational reliability are non-negotiable.

Theme 4: Beyond Coding: Singapore’s Quest for Hybrid Digital Talent

Singapore’s digital ambitions increasingly depend on its human capital. While consistently ranking high in global talent competitiveness, the city-state faces a projected shortfall of over 1.2 million digitally skilled workers, particularly in fields like cybersecurity, data science, and AI engineering.

But the challenge isn’t purely technical. Organisations now demand talent that bridges technology, business strategy, and regulatory insight. Many digital initiatives stall not from technology limitations, but from a lack of professionals who can translate complex digital concepts into business value and ensure regulatory compliance.

To address this, government initiatives like the TechSkills Accelerator (TeSA) offer training subsidies and career conversion programmes. Meanwhile, leading tech providers, including AWS, Microsoft, Google, and IBM, are stepping up, partnering with government and industry to deliver specialised training, certification programmes, and talent pipelines that help close the skills gap.

Still, enterprises grapple with keeping pace amid rapid technological change, balancing reskilling local talent with attracting specialised professionals from abroad. The future of Singapore’s digital economy will be defined as much by people as by technology, and by the partnerships that help bridge this critical gap.

Theme 5: Tracking Impact, Driving Change: Singapore’s Sustainability and Tech Synergy

Sustainability remains a core pillar of Singapore’s digital ambitions, driven by the government’s unwavering focus and supportive green financing options, unlike in some markets where momentum has slowed. Anchored by the Singapore Green Plan 2030, the nation aims to double solar energy capacity and reduce landfill waste per capita by 30% by 2030.

Digital technology plays a critical role in this vision. Initiatives like the Green Data Centre Roadmap promote energy-efficient infrastructure and sustainable cooling technologies, balancing growth in the digital economy with carbon footprint management. Singapore is also emerging as a regional hub for carbon services, leveraging digital platforms such as the Carbon Services Platform to track, verify, and trade emissions, fostering credible and transparent carbon markets.

Government-backed green financing schemes, including the Green Bond Grant Scheme and Sustainability-Linked Loans, are accelerating investments in eco-friendly projects, enabling enterprises to fund sustainable innovation while meeting global ESG standards.

Despite these advances, leaders highlight challenges such as the lack of standardised sustainability metrics and rising risks of greenwashing, which complicate scaling green finance and cross-border sustainability reporting. Still, Singapore’s ability to integrate sustainability with digital innovation underscores its ambition to be more than a tech hub. It aims to be a trusted leader in building a responsible, future-ready economy.

From Innovation to Lasting Impact

Singapore stands at a critical inflection point. Already recognised as one of the world’s most advanced digital economies, its greatest test now is execution: transforming cutting-edge technology from promise into real, everyday impact. The nation must balance rapid innovation with robust security, while shaping global standards that reflect its unique blend of ambition and pragmatism.

With deep-rooted trust across government, industry, and society, Singapore is uniquely equipped to lead not just in developing technology, but in embedding it responsibly to create lasting value for its people and the wider region. The next chapter will define whether Singapore can move from digital leadership to digital legacy.

In AI’s early days, enterprise leaders asked a straightforward question: “What can this automate?” The focus was on speed, scale, and efficiency, and AI delivered. But that question is evolving. Now, the more urgent ask is: “Can this AI understand people?”

This shift – from automation to emotional intelligence – isn’t just theoretical. It’s already transforming how organisations connect with customers, empower employees, and design digital experiences. We’re shifting to a phase of humanised AI – systems that don’t just respond accurately, but intuitively, with sensitivity to mood, tone, and need.

One of the most unexpected, and revealing, AI use cases is therapy. Millions now turn to AI chat tools to manage anxiety, process emotions, and share deeply personal thoughts. What started as fringe behaviour is fast becoming mainstream. This emotional turn isn’t a passing trend; it marks a fundamental shift in how people expect technology to relate to them.

For enterprises, this raises a critical challenge: If customers are beginning to turn to AI for emotional support, what kind of relationship do they expect from it? And what does it take to meet that expectation – not just effectively, but responsibly, and at scale?

The Rise of Chatbot Therapy

Therapy was never meant to be one of AI’s first mass-market emotional use cases, and yet, here we are.

Apps like Wysa, Serena, and Youper have been quietly reshaping the digital mental health landscape for years, offering on-demand support through chatbots. Designed by clinicians, these tools draw on established methods like Cognitive Behavioural Therapy (CBT) and mindfulness to help users manage anxiety, depression, and stress. The conversations are friendly, structured, and often, surprisingly helpful.

But something even more unexpected is happening: people are now using general-purpose AI tools like ChatGPT for therapeutic support, despite them not being designed for it. Increasingly, users are turning to ChatGPT to talk through emotions, navigate relationship issues, or manage daily stress. Reddit threads and social posts describe it being used as a therapist or sounding board. This isn’t Replika or Wysa, but a general AI assistant being shaped into a personal mental health tool purely through user behaviour.

This shift is driven by a few key factors. First, access. Traditional therapy is expensive, hard to schedule, and for many, emotionally intimidating. AI, on the other hand, is always available, listens without judgement, and never gets tired.

Tone plays a big role too. Thanks to advances in reinforcement learning and tone conditioning, models like ChatGPT are trained to respond with calm, non-judgmental empathy. The result feels emotionally safe, a rare and valuable quality for those facing anxiety, isolation, or uncertainty. A recent PLOS study found that not only did participants struggle to tell human therapists apart from ChatGPT, they actually rated the AI responses as more validating and empathetic.

Finally, and perhaps surprisingly, there is trust. Unlike wellness apps that push subscriptions or ads, AI chat feels personal and agenda-free. Users feel in control of the interaction – no small thing in a space as vulnerable as mental health.

None of this suggests AI should replace professional care. Risks like dependency, misinformation, or reinforcing harmful patterns are real. But it does send a powerful signal to enterprise leaders: people now expect digital systems to listen, care, and respond with emotional intelligence.

That expectation is changing how organisations design experiences – from how a support bot speaks to customers, to how an internal wellness assistant checks in with employees during a tough week. Humanised AI is no longer a niche feature of digital companions. It’s becoming a UX standard, one that signals care, builds trust, and deepens relationships.

Digital Companionship as a Solution for Support

Ten years ago, talking to your AI meant asking Siri to set a reminder. Today, it might mean sharing your feelings with a digital companion, seeking advice from a therapy chatbot, or even flirting with a virtual persona! This shift from functional assistant to emotional companion marks more than a technological leap. It reflects a deeper transformation in how people relate to machines.

One of the earliest examples of this is Replika, launched in 2017, which lets users create personalised chatbot friends or romantic partners. As GenAI advanced, so did Replika’s capabilities: it can now remember past conversations, adapt its tone, and even exchange voice messages. A Nature study found that 90% of Replika users reported high levels of loneliness compared to the general population, but nearly half said the app gave them a genuine sense of social support.

Replika isn’t alone. In China, Xiaoice (spun off from Microsoft in 2020) has hundreds of millions of users, many of whom chat with it daily for companionship. In elder care, ElliQ, a tabletop robot designed for seniors, has shown striking results: a report from New York State’s Office for the Aging cited a 95% drop in loneliness among participants.

Even more freeform platforms like Character.AI, where users converse with AI personas ranging from historical figures to fictional characters, are seeing explosive growth. People are spending hours in conversation – not to get things done, but to feel seen, inspired, or simply less alone.

The Technical Leap: What Has Changed Since the LLM Explosion

The use of LLMs for code editing and content creation is already mainstream in most enterprises, but use cases have expanded alongside the capabilities of new models. LLMs now have the capacity to act more human – to carry emotional tone, remember user preferences, and maintain conversational continuity.

Key advances include:

- Memory. Persistent context and long-term recall

- Reinforcement Learning from Human Feedback (RLHF). Empathy and safety by design

- Sentiment and Emotion Recognition. Reading mood from text, voice, and expression

- Role Prompting. Personas using brand-aligned tone and behaviour

- Multimodal Interaction. Combining text, voice, image, gesture, and facial recognition

- Privacy-Sensitive Design. On-device inference, federated learning, and memory controls

Enterprise Implications: Emotionally Intelligent AI in Action

The examples shared might sound fringe or futuristic, but they reveal something real: people are now open to emotional interaction with AI. And that shift is creating ripple effects. If your customer service chatbot feels robotic, it pales in comparison to the AI friend someone chats with on their commute. If your HR wellness bot gives stock responses, it may fall flat next to the AI that helped a user through a panic attack the night before.

The lesson for enterprises isn’t to mimic friendship or romance, but to recognise the rising bar for emotional resonance. People want to feel understood. Increasingly, they expect that even from machines.

For enterprises, this opens new opportunities to tap into both emotional intelligence and public comfort with humanised AI. Emerging use cases include:

- Customer Experience. AI that senses tone, adapts responses, and knows when to escalate

- Brand Voice. Consistent personality and tone embedded in AI interfaces

- Employee Wellness. Assistants that support mental health, coaching, and daily check-ins

- Healthcare & Elder Care. Companions offering emotional and physical support

- CRM & Strategic Communications. Emotion-aware tools that guide relationship building

Ethical Design and Guardrails

Emotional AI brings not just opportunity, but responsibility. As machines become more attuned to human feelings, ethical complexity grows. Enterprises must ensure transparency – users should always know they’re speaking to a machine. Emotional data must be handled with the same care as health data. Empathy should serve the user, not manipulate them. Healthy boundaries and human fallback must be built in, and organisations need to be ready for regulation, especially in sensitive sectors like healthcare, finance, and education.

Emotional intelligence is no longer just a human skill; it’s becoming a core design principle, and soon, a baseline expectation.

Those who build emotionally intelligent AI with integrity can earn trust, loyalty, and genuine connection at scale. But success won’t come from speed or memory alone – it will come from how the experience makes people feel.

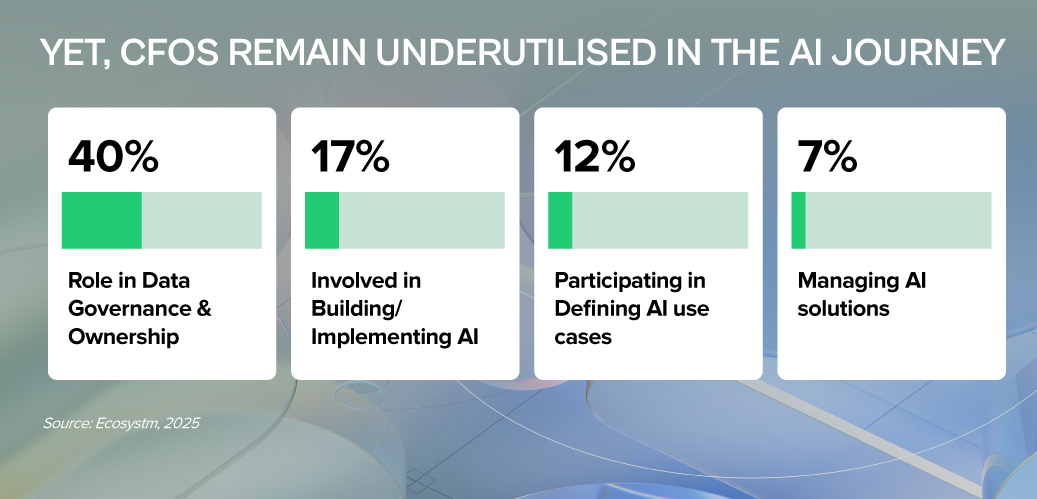

AI is not just reshaping how businesses operate — it’s redefining the CFO’s role at the centre of value creation, risk management, and operational leadership.

As stewards of capital, CFOs must cut through the hype and ensure AI investments deliver measurable business returns. As guardians of risk and compliance, they must shield their organisations from new threats — from algorithmic bias to data privacy breaches with heavy financial and reputational costs. And as leaders of their function, CFOs now have a generational opportunity to modernise finance, champion AI adoption, and build teams ready for an AI-powered future.

LEAD WITH RIGOUR. SAFEGUARD WITH VIGILANCE. CHAMPION WITH VISION.

That’s the CFO playbook for AI success.

1. Investor & ROI Gatekeeper: Ensuring AI Delivers Value

CFOs must scrutinise AI investments with the same discipline as any major capital allocation.

- Demand Clear Business Cases. Every AI initiative should articulate the problem solved, expected gains (cost, efficiency, accuracy), and specific KPIs.

- Prioritise Tangible ROI. Focus on AI projects that show measurable impact. Start with high-return, lower-risk use cases before scaling.

- Assess Total Cost of Ownership (TCO). Go beyond upfront costs – factor in integration, maintenance, training, and ongoing AI model management.
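
The TCO and ROI points above reduce to simple arithmetic, sketched below. The cost categories follow the list (integration, maintenance, training, model management), but every figure is invented for illustration, the function names are hypothetical, and a real business case would also account for discounting and risk.

```python
def total_cost_of_ownership(upfront: float, annual_costs: dict, years: int) -> float:
    """Upfront spend plus all recurring costs over the evaluation period."""
    return upfront + years * sum(annual_costs.values())


def simple_roi(annual_benefit: float, tco: float, years: int) -> float:
    """Net benefit over the period divided by TCO (undiscounted)."""
    return (annual_benefit * years - tco) / tco


# Illustrative figures only.
annual_costs = {
    "integration": 20_000,
    "maintenance": 15_000,
    "training": 10_000,
    "model_management": 5_000,
}
tco = total_cost_of_ownership(upfront=100_000, annual_costs=annual_costs, years=3)
roi = simple_roi(annual_benefit=125_000, tco=tco, years=3)
print(tco, roi)  # 250000 0.5
```

In this made-up case the recurring costs (150,000 over three years) exceed the upfront spend, which is exactly why looking beyond the initial price matters.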

Only 37% of Asia Pacific organisations invest in FinOps to cut costs, boost efficiency, and strengthen financial governance over tech spend.

2. Risk & Compliance Steward: Navigating AI’s New Risk Landscape

AI brings significant regulatory, compliance, and reputational risks that CFOs must manage – in partnership with peers across the business.

- Champion Data Quality & Governance. Enforce rigorous data standards and collaborate with IT, risk, and business teams to ensure accuracy, integrity, and compliance across the enterprise.

- Ensure Data Accessibility. Break down silos with CIOs and CDOs and invest in shared infrastructure that AI initiatives depend on – from data lakes to robust APIs.

- Address Bias & Safeguard Privacy. Monitor AI models to detect bias, especially in sensitive processes, while ensuring compliance.

- Protect Security & Prevent Breaches. Strengthen defences around financial and personal data to avoid costly security incidents and regulatory penalties.

3. AI Champion & Business Leader: Driving Adoption in Finance

Beyond gatekeeping, CFOs must actively champion AI to transform finance operations and build future-ready teams.

- Identify High-Impact Use Cases. Work with teams to apply AI where it solves real pain points – from automating accounts payable to improving forecasting and fraud detection.

- Build AI Literacy. Help finance teams see AI as an augmentation tool, not a threat. Invest in upskilling while identifying gaps – from data management to AI model oversight.

- Set AI Governance Frameworks. Define accountability, roles, and control mechanisms to ensure responsible AI use across finance.

- Stay Ahead of the Curve. Monitor emerging tech that can streamline finance and bring in expert partners to fast-track AI adoption and results.

CFOs: From Gatekeepers to Growth Drivers

AI is not just a tech shift – it’s a CFO mandate. To lead, CFOs must embrace three roles: Investor, ensuring every AI bet delivers real ROI; Risk Guardian, protecting data integrity and compliance in a world of new risks; and AI Champion, embedding AI into finance teams to boost speed, accuracy, and insight.

This is how finance moves from record-keeping to value creation. With focused leadership and smart collaboration, CFOs can turn AI from buzzword to business impact.

AI has rapidly transitioned from a theoretical concept to a strategic imperative, reshaping core business functions and fundamentally altering the operational landscape of technology teams. By empowering teams with increased autonomy and data-driven capabilities, organisations are positioned to realise substantial value and achieve a decisive competitive advantage.

The most profound impact of AI can be observed within tech teams. AI-driven automation of routine tasks and streamlined operations are enabling technology professionals to refocus their efforts on strategic initiatives. This shift transforms the technology function from a reactive system maintenance role to a proactive developer of intelligent infrastructure and future-oriented systems.

Ecosystm research reveals key findings that Tech Leaders need to know.

Strategic AI Deployment

Ecosystm research reveals a clear trend: technology leaders are strategically investing in the immense potential of AI. While 61% currently leverage AI for IT support and helpdesk automation, there is a clear aspiration for broader deployment across infrastructure, development, and security. 80% are prioritising cloud resource allocation and optimisation, followed by 76% focusing on network optimisation and performance monitoring, along with significant interest in software development and testing, and cyber threat detection.

One Infrastructure Leader shared that the organisation uses AI to dynamically scale infrastructure while automating maintenance to prevent outages. This approach has led to unprecedented efficiency and freed up their teams for more strategic work. The leader emphasised that AI is helping to tackle complex infrastructure challenges and is key to achieving operational excellence.

A Cyber Leader discussed the role of AI in enhancing their defence capabilities. While not a “silver bullet,” it is a powerful tool in the fight against cyber threats. AI significantly enhances threat intelligence and fraud analysis, complementing, rather than replacing, security team efforts. This integration has helped streamline security operations and improve the ability to respond to emerging risks.

AI is also making waves in software development. A Data Science Leader explained how AI quality control tools have reduced bug counts by 30%, enabling faster release cycles and a 10% improvement in internal customer satisfaction.

Collaborative AI Implementation: A Cross-Functional Approach

The successful implementation of AI requires a collaborative, cross-functional approach. The responsibility for identifying viable use cases, developing and maintaining systems, and ensuring robust data governance is distributed among various technology leadership roles. CIOs, in collaboration with business stakeholders, define strategic use cases, considering infrastructure requirements. Data Science Leaders bridge the gap between AI’s technical capabilities and practical business applications. CISOs safeguard data, while CIOs manage the systems that store and organise it.

Navigating Challenges, Prioritising Strategic AI Initiatives

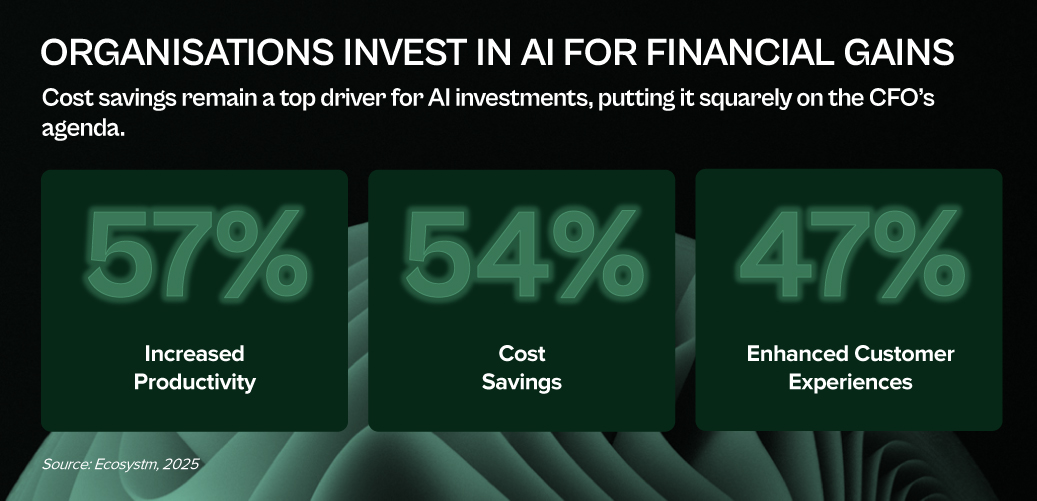

Despite the acknowledged potential of AI, technology leaders must address several critical challenges, including use case prioritisation, skill gaps, and the development of comprehensive AI strategies. Nevertheless, the strategic importance of AI will continue to drive its prioritisation in 2025. Key anticipated outcomes include increased technology team productivity (56%) and technology cost optimisation (53%).

AI is no longer a supplementary tool but a core strategic asset. By strategically integrating AI, technology teams are transitioning from operational support to strategic innovation, building the intelligent systems that will define the future of business.

Operations leaders are on the front lines of the AI revolution. They see the transformative potential of AI and are actively driving its adoption to streamline processes, boost efficiency, and unlock new levels of performance. The value is clear: AI is no longer a futuristic concept, but a present-day necessity.

Over the past two years, Ecosystm’s research – including surveys and deep dives with business and tech leaders – has confirmed this: AI is the dominant theme.

Here are some insights for Operations Leaders from our research.

From Streamlined Workflows to Smarter Decisions

AI is already making a tangible difference in operations. A significant 60% of operations leaders are currently leveraging AI for intelligent document processing, freeing up valuable time and resources. But this is just the beginning. The vision extends far beyond, with plans to expand AI’s reach into crucial areas like workflow analysis, fraud detection, and streamlining risk and compliance processes. Imagine AI optimising transportation routes in real-time, predicting equipment maintenance needs before they arise, or automating complex scheduling tasks. This is the operational reality AI is creating.

Real-World Impact, Real-World Examples

The impact of AI is not just theoretical. Operations leaders are witnessing firsthand how AI is driving tangible improvements. “With AI-powered vision and sensors, we’ve boosted efficiency, accuracy, and safety in our manufacturing processes,” shares one leader. Others highlight the security benefits: “From fraud detection to claims processing, AI is safeguarding our transactions and improving trust in our services.” Even complex logistical challenges are being conquered: “Our AI-driven logistics solution has cut costs, saved time, and turned complex operations into seamless processes.” These real-world examples showcase the power of AI to deliver concrete results across diverse operational functions.

Operations Takes a Seat at the AI Strategy Table (But Faces Challenges)

With 54% of organisations prioritising cost savings from AI, operations leaders are rightfully taking a seat at the AI strategy table, shaping use cases and driving adoption. A remarkable 56% of operations leaders are actively involved in defining high-value AI applications. However, a disconnect exists. Despite their influence on AI strategy, only a small fraction (7%) of operations leaders have direct data governance responsibilities. This lack of control over the very fuel that powers AI – data – creates a significant hurdle.

Further challenges include data access across siloed systems, limiting the ability to gain a holistic view, difficulty in identifying and prioritising the most impactful AI use cases, and persistent skills shortages. These barriers, while significant, are not deterring operations leaders.

The Future is AI-Driven

Despite these challenges, operations leaders are doubling down on AI. A striking 7 out of 10 plan to prioritise AI investments in 2025, driven by the pursuit of greater cost savings. And the biggest data effort on the horizon? Identifying and prioritising better use cases for AI. This focus on practical applications demonstrates a clear understanding: the future of operations is inextricably linked to the power of AI. By addressing the challenges they face and focusing on strategic implementation, operations leaders are poised to unlock the full potential of AI and transform their organisations.

AI has broken free from the IT department. It’s no longer a futuristic concept but a present-day reality transforming every facet of business. Departments across the enterprise are now empowered to harness AI directly, fuelling innovation and efficiency without waiting for IT’s stamp of approval. The result? A more agile, data-driven organisation where AI unlocks value and drives competitive advantage.

Ecosystm’s research over the past two years, including surveys and in-depth conversations with business and technology leaders, confirms this trend: AI is the dominant theme. And while the potential is clear, the journey is just beginning.

Here are key AI insights for HR Leaders from our research.

HR: Leading the Charge (or Should Be)

Our research reveals a fascinating dynamic in HR. While 54% of HR leaders currently use AI for recruitment (scanning resumes, etc.), their vision extends far beyond. A striking majority plan to expand AI’s reach into crucial areas: 74% for workforce planning, 68% for talent development and training, and 62% for streamlining employee onboarding.

The impact is tangible, with organisations already seeing significant benefits. GenAI has streamlined presentation creation for bank employees, allowing them to focus on content rather than formatting and improving efficiency. Integrating GenAI into knowledge bases has simplified access to internal information, making it quicker and easier for employees to find answers. AI-driven recruitment screening is accelerating hiring in the insurance sector by analysing resumes and applications to identify top candidates efficiently. Meanwhile, AI-powered workforce management systems are transforming field worker management by optimising job assignments, enabling real-time tracking, and ensuring quick responses to changes.

The Roadblocks and the Opportunity

Despite this promising outlook, HR leaders face significant hurdles. Limited exploration of use cases, the absence of a unified organisational AI strategy, and ethical concerns are among the key barriers to wider AI deployments.

Perhaps most concerning is the limited role HR plays in shaping AI strategy. While 57% of tech and business leaders cite increased productivity as the main driver for AI investments, HR’s influence is surprisingly weak. Only 20% of HR leaders define AI use cases, manage implementation, or are involved in governance and ownership. A mere 8% primarily manage AI solutions.

This disconnect represents a massive opportunity.

2025 and Beyond: A Call to Action for HR

Despite these challenges, our research indicates HR leaders are prioritising AI for 2025. Increased productivity is the top expected outcome, while three in ten will focus on identifying better HR use cases as part of a broader data-centric approach.

The message is clear: HR needs to step up and claim its seat at the AI table. By proactively defining use cases, championing ethical considerations, and collaborating closely with tech teams, HR can transform itself into a strategic driver of AI adoption, unlocking the full potential of this transformative technology for the entire organisation. The future of HR is intelligent, and it’s time for HR leaders to embrace it.

Over the past year, Ecosystm has conducted extensive research, including surveys and in-depth conversations with industry leaders, to uncover the most pressing topics and trends. And unsurprisingly, AI emerged as the dominant theme.

Here are some insights from our research.

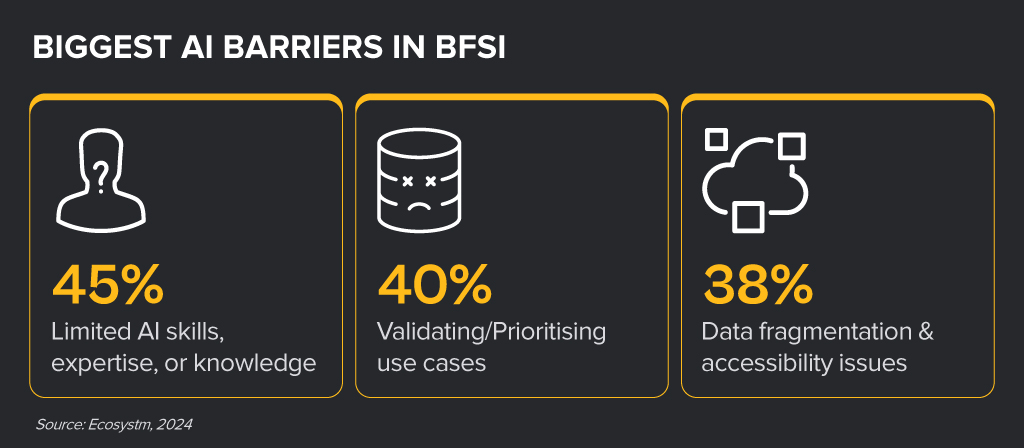

From personalised recommendations to streamlined operations, AI is transforming the products, services and processes in the BFSI industries. While leaders realise that AI holds significant potential, turning that potential into reality is often tough. Many BFSI organisations struggle to move beyond AI pilots because of some key barriers.

Despite the challenges, BFSI organisations are witnessing early AI success in these three areas:

- Customer Service & Engagement

- Risk Management & Fraud Detection

- Process Automation & Efficiency

Customer Service & Engagement Use Cases

- Virtual Assistants and Chatbots. Delivering real-time product information and customer support

- Customer Experience Analysis. Analysing data to uncover trends and improve user experiences

- Personalised Recommendations. Providing tailored financial products based on user behaviour and preferences

“While we remain cautious about customer-facing applications, many of our AI use cases provide valuable customer insights to our employees. Human-in-the-loop is still a critical consideration.” – INSURANCE CX LEADER

Risk Management & Fraud Detection Use Cases

- Enhanced Credit Scoring. Improved assessment of creditworthiness and risks

- Advanced Fraud Detection. Easier detection and prevention of fraudulent activities

- Comprehensive Risk Strategy. Assessment of risk factors to develop effective strategies

“We deployed enterprise-grade AI models that are making a significant impact in specialised areas like credit decisioning and risk modelling.” – BANKING DATA LEADER

Process Automation & Efficiency Use Cases

- Backend Process Streamlining. Automating workflows and processes to boost efficiency

- Loan & Claims Processing. Speeding up application and approval processes

- Invoice Processing. Automating invoice management to minimise errors

“Our focus is on creating a mindset where employees see AI as a tool that can augment their capabilities rather than replace them.” – BANKING COO

Large organisations in the banking and financial services industry have come a long way over the past two decades in cutting costs, restructuring IT systems and redefining customer relationship management. As if that were not enough, they now face the challenge of adapting to ongoing global technological shifts, and the pressure to “do something with AI” without being AI-ready in terms of strategy, skills and culture.

Most organisations in the industry have started approaching AI implementation in a conventional way, based on how they have historically managed IT initiatives. Their first attempts at experimenting with AI have led to rapid conclusions forming seven common myths. However, as experience with AI grows, these myths are gradually being debunked. Let us put these myths to a reality check.

1. We can rely solely on external tech companies

Even in a highly regulated industry like banking and financial services, internal processes and data management practices can vary significantly from one institution to another. Experience shows that while external providers – many of whom lack direct industry experience – can offer solutions tailored to the more obvious use cases and provide customisation, they fall short when it comes to identifying less apparent opportunities and driving fundamental changes in workflows. No one understands an institution’s data better than its own employees. Therefore, a key success factor in AI implementation is active internal ownership, involving employees directly rather than delegating the task entirely to external parties. While technology providers are essential partners, organisations must also cultivate their own internal understanding of AI to ensure successful implementation.

2. AI is here to be applied to single use cases

In the early stages of experimenting with AI, many financial institutions treated it as a side project, focusing on developing minimum viable products and solving isolated problems to explore what worked and what didn’t. Given their inherently risk-averse nature, organisations often approached AI cautiously, addressing one use case at a time to avoid disrupting their broader IT landscape or core business. However, with AI’s potential for deep transformation, the financial services industry has an opportunity not only to address inefficiencies caused by manual, time-consuming tasks but also to question how data is created, captured, and used from the outset. This requires an ecosystem of visionary minds in the industry who join forces and see beyond deal generation.

3. We can staff AI projects with our highly motivated junior employees and let our senior staff focus on what they do best – managing the business

Financial institutions that still view AI as a side hustle, secondary to their day-to-day operations, often assign junior employees to handle AI implementation. However, this can be a mistake. AI projects involve numerous small yet critical decisions, and team members need the authority and experience to make informed judgments that align with the organisation’s goals. Also, resistance to change often comes from those who were not involved in shaping or developing the initiative. Experience shows that project teams with a balanced mix of seniority and diversity in perspectives tend to deliver the best results, ensuring both strategic insight and operational engagement.

4. AI projects do not pay off

Compared to conventional IT projects, the business cases for AI implementation – especially when limited to solving a few specific use cases – often do not pay off over a period of two to three years. Traditional IT projects can usually be executed with minimal involvement of subject matter experts, and their costs are easier to estimate based on reference projects. In contrast, AI projects are highly experimental: they require multiple iterations and significant involvement from experts, and they often lack comparable reference projects. When AI solutions address only small parts of a process, the benefits may not be immediately apparent. However, if AI is viewed as part of a long-term transformational journey, gradually integrating into all areas of the organisation and unlocking new business opportunities over the next five to ten years, the true value of AI becomes clear. A conventional business case model cannot fully capture this long-term payoff.

5. We are on track with AI if we have several initiatives ongoing

Many financial institutions have begun their AI journey by launching multiple, often unrelated, use case-based projects. The large number of initiatives can give top management a false sense of progress, as if they are fully engaged in AI. However, investors and project teams often ask key questions: Where are these initiatives leading? How do they contribute? What is the AI vision and strategy, and how does it align with the business strategy? If these answers remain unclear, it’s difficult to claim that the organisation is truly on track with AI. To ensure that AI initiatives are impactful and aligned with business objectives, organisations must have a clear AI vision and strategy, and must not rely on the number of initiatives to measure progress.

6. AI implementation projects always exceed their deadlines

AI solutions in the banking and financial services industry are rarely off-the-shelf products. In cases of customisation or in-house development, particularly when multiple model-building iterations and user tests are required, project delays of three to nine months can occur. This is largely because organisations want to avoid rolling out solutions that do not perform reliably. The goal is to ensure that users have a positive experience with AI and embrace the change. Over time, as an organisation becomes more familiar with AI implementation, the process will become faster.

7. We upskill our people by giving them access to AI training

Learning by doing has always been and will remain the most effective way to learn, especially with technology. Research has shown that 90% of knowledge acquired in training is forgotten after a week if it is not applied. For organisations, the best way to digitally upskill employees is to involve them in AI implementation projects, even if it’s just a few hours per week. To evaluate their AI readiness or engagement, organisations could develop new KPIs, such as the average number of hours an employee actively engages in AI implementation or the percentage of employees serving as subject matter experts in AI projects.
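The two example KPIs mentioned above could be computed along these lines. This is a minimal sketch: the record structure, field names, and sample data are assumptions made for illustration, and a real calculation would draw on an organisation’s own HR and project-tracking data.

```python
from dataclasses import dataclass


@dataclass
class EmployeeRecord:
    """Hypothetical per-employee record for one reporting period."""
    name: str
    ai_project_hours: float  # hours actively spent on AI implementation work
    is_sme: bool             # serves as subject matter expert on an AI project


def average_ai_hours(records: list[EmployeeRecord]) -> float:
    """Average hours per employee spent on AI implementation."""
    if not records:
        return 0.0
    return sum(r.ai_project_hours for r in records) / len(records)


def sme_percentage(records: list[EmployeeRecord]) -> float:
    """Percentage of employees serving as SMEs in AI projects."""
    if not records:
        return 0.0
    return 100.0 * sum(1 for r in records if r.is_sme) / len(records)


# Illustrative sample data only.
staff = [
    EmployeeRecord("A", ai_project_hours=4, is_sme=True),
    EmployeeRecord("B", ai_project_hours=0, is_sme=False),
    EmployeeRecord("C", ai_project_hours=2, is_sme=False),
    EmployeeRecord("D", ai_project_hours=6, is_sme=False),
]
avg_hours = average_ai_hours(staff)
sme_share = sme_percentage(staff)
print(f"Average AI hours: {avg_hours:.1f}; SME share: {sme_share:.0f}%")
```

Tracking both figures together helps distinguish broad but shallow engagement from deep involvement by a small group of experts.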

Which of these myths have you believed, and where do you already see changes?