The White House has mandated that federal agencies conduct risk assessments on AI tools and appoint officers, including Chief Artificial Intelligence Officers (CAIOs), for oversight. This directive, led by the Office of Management and Budget (OMB), aims to modernise government AI adoption and promote responsible use. Agencies must integrate AI oversight into their core functions, ensuring safety, security, and ethical use. CAIOs will be tasked with assessing AI’s impact on civil rights and market competition. Agencies have until December 1, 2024, to address non-compliant AI uses, which underlines the expectation of swift implementation.

How will this impact global AI adoption? Ecosystm analysts share their views.

The Larger Impact: Setting a Global Benchmark

This sets a potential global benchmark for AI governance, with the U.S. leading the way in responsible AI use, inspiring other nations to follow suit. The emphasis on transparency and accountability could boost public trust in AI applications worldwide.

The appointment of CAIOs across U.S. federal agencies marks a significant shift towards ethical AI development and application. Through mandated risk management practices, such as independent evaluations and real-world testing, the government recognises AI’s profound impact on rights, safety, and societal norms.

This isn’t merely a regulatory action; it’s a foundational shift towards embedding ethical and responsible AI at the heart of government operations. The balance struck between fostering innovation and ensuring public safety and rights protection is particularly noteworthy.

This initiative reflects a deep understanding of AI’s dual-edged nature – the potential to significantly benefit society, countered by its risks.

The Larger Impact: Blueprint for Risk Management

In what is likely a world first, AI is bringing together technology, legal, and policy leaders in a concerted effort to put guardrails around a new technology before a major disaster materialises. Over just the past few months, these efforts have ranged from technology firms providing a form of legal assurance for use of their products (for example, Microsoft’s Customer Copyright Commitment), to parliaments ratifying AI regulatory laws (such as the EU AI Act), to the current directive installing AI accountability in US federal agencies.

It is universally accepted that AI needs risk management to be responsible and acceptable – installing an accountable C-suite role is another major step in AI risk mitigation.

This is an interesting move for three reasons:

- The balance of innovation versus governance and risk management.

- Accountability mandates for each agency’s use of AI in a public and transparent manner.

- Transparency mandates regarding AI use cases and technologies, including those that may impact safety or rights.

Impact on the Private Sector: Greater Accountability

AI governance is one of the rare occasions where government action moves faster than the private sector. While the immediate pressure is now on US federal agencies (and there are 438 of them) to identify and appoint CAIOs, the announcement sends a clear signal to the private sector.

Following hot on the heels of recent AI legislation steps, it puts AI governance straight into the boardroom. The air is getting very thin for enterprises still in denial that AI governance has advanced to strategic importance. And unlike the CFC ban in the Eighties (the Montreal Protocol likely set the record for concerted global action), this time the technology providers are fully on board.

There’s no excuse for delaying the acceleration of AI governance and establishing accountability for AI within organisations.

Impact on Tech Providers: More Engagement Opportunities

Technology vendors are poised to benefit from the medium to long-term acceleration of AI investment, especially those based in the U.S., given government agencies’ preferences for local sourcing.

In the short term, our advice to technology vendors and service partners is to actively engage with CAIOs in client agencies to identify existing AI usage in their tools and platforms, as well as algorithms implemented by consultants and service partners.

Once AI guardrails are established within agencies, tech providers and service partners can expedite investments by determining which of their platforms, tools, or capabilities comply with specific guardrails and which do not.

Impact on SE Asia: Promoting a Digital Innovation Hub

By 2030, Southeast Asia is poised to emerge as the world’s fourth-largest economy – much of that growth will be propelled by the adoption of AI and other emerging technologies.

The projected economic growth presents both challenges and opportunities, emphasising the urgency for regional nations to enhance their AI governance frameworks and stay competitive with international standards. This initiative highlights the critical role of AI integration for private sector businesses in Southeast Asia, urging organisations to proactively address AI’s regulatory and ethical complexities. Furthermore, it has the potential to stimulate cross-border collaborations in AI governance and innovation, bridging the U.S., Southeast Asian nations, and the private sector.

It underscores the global interconnectedness of AI policy and its impact on regional economies and business practices.

By leading with a strategic approach to AI, the U.S. sets an example for Southeast Asia and the global business community to reevaluate their AI strategies, fostering a more unified and responsible global AI ecosystem.

The Risks

U.S. government agencies face the challenge of sourcing experts in technology, legal frameworks, risk management, privacy regulations, civil rights, and security, while also identifying ongoing AI initiatives. Establishing a unified definition of AI and cataloguing processes involving ML, algorithms, or GenAI is essential, given AI’s integral role in organisational processes over the past two decades.

However, there’s a risk that focusing on AI governance may hinder adoption.

The role should prioritise establishing AI guardrails to expedite compliant initiatives while flagging those needing oversight. While these guardrails will facilitate “safe AI” investments, the documentation process could potentially delay progress.

The initiative also echoes a 20th-century mindset for a 21st-century dilemma. Hiring leaders and forming teams feel like a traditional approach. Today, organisations can increase productivity by considering AI and automation as initial solutions. Investing more time upfront to discover initiatives, set guardrails, and implement AI decision-making processes could significantly improve CAIO effectiveness from the outset.

2024 has started cautiously for organisations, with many choosing to continue with tech projects that have already been initiated, while waiting for clearer market conditions before starting newer transformation projects. This means that tech providers must continue to refine their market messaging and enhance their service/product offerings to strengthen their market presence in the latter part of the year. Ecosystm analysts present five key considerations for tech providers as they navigate evolving market and customer trends this year.

Navigating Market Dynamics

Continuing Economic Uncertainties. Organisations will focus on ongoing projects and consider expanding initiatives in the latter part of the year. This means that tech providers should maintain visibility and trust with existing clients. They also need to help their customers meet multiple KPIs.

Popularity of Generative AI. For organisations, this will be the time to go beyond the novelty factor and assess practical business outcomes, allied costs, and change management. Tech providers need to include ROI discussions for short-term and mid-term perspectives as organisations move beyond pilots.

Infrastructure Market Disruption. Tech leaders will keep an eye out for advancements and disruptions in the market (likely to originate from the semiconductor sector). The disruptions might require tech vendors to re-assess the infrastructure partner ecosystem.

Need for New Tech Skills. Tech leaders will evaluate Generative AI’s impact on AIOps and IT architecture, and invest in upskilling for talent retention. Tech providers must prioritise creating user-friendly experiences to make technology accessible to business users. Training and partner enablement will also need a higher focus.

Increased Focus on Governance. Tech leaders will consult tech vendors on how to implement safeguards for data usage, sharing, and cybersecurity. This opens up opportunities in offering governance-related services.

5 Key Considerations for Tech Vendors

#1 Get Ready for the Year of the AI Startup

While many AI companies have been around for years, this will be the year that many of them make a significant play into enterprises in Asia Pacific. This comes at a time when many organisations are attempting to reduce tech debt and simplify their tech architecture.

For these AI startups to succeed, they will need to create watertight business cases, and do a lot of the hard work in pre-integrating their solutions with the larger platforms to reduce the time to value and simplify the systems integration work.

To respond to these emerging threats, existing tech providers will need to not only accelerate their own use of AI in their platforms, but also ramp up the education and promotion of these capabilities.

#2 Lead With Data, Not AI Capabilities

Organisations recognise the need for AI to enhance their workforce, improve customer experience, and automate processes. However, the initial challenge lies in improving data quality, as trust in early AI models hinges on high-quality training data for long-term success.

Tech vendors that can help with data source discovery, metadata analysis, and seamless data pipeline creation will emerge as trusted AI partners. Transformation tools that automate deduplication and quality assurance tasks empower data scientists to focus on high-value work. Automation models like Segment Anything enhance unstructured data labelling, particularly for images. Finally, synthetic data will gain importance as quality sources become scarce.

Tech vendors will be tempted to capitalise on the Generative AI hype but, for the sake of positive early experiences, they should begin with data quality.

#3 Prepare Thoroughly for AI-driven Business Demand

Besides pureplay AI opportunities, AI will drive a renewed and increased interest in data and data management. Tech and service providers can capitalise on this by understanding the larger picture around their clients’ data maturity and governance. Initial conversations around AI can be door openers to bigger, transformational engagements.

Tech vendors should avoid the pitfall of downplaying AI risks. Instead, they should make all efforts to own and drive the conversation with their clients. They need to be forthcoming about their in-house responsible AI guidelines and understand what is happening in AI legislation worldwide (hint: a lot!).

Tech providers must establish strong client partnerships for AI initiatives to succeed. They must address risk and benefit equally to reap the benefits of larger AI-driven transformation engagements.

#4 Converge Network & Security Capabilities

Networking and security vendors will need to develop converged offerings as these two technologies increasingly overlap in the hybrid working era. Organisations are now entering a new phase of maturity as they evolve their remote working policies and invest in tools to regain control. They will require simplified management, increased visibility, and a consistent user experience, wherever employees are located.

There has already been widespread adoption of SD-WAN, and organisations are now starting to explore next-generation SSE technologies. Procuring these capabilities from a single provider will help to remove complexity from networks as the number of endpoints continues to grow.

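Tech providers should take a land-and-expand approach, getting a foothold with SASE modules that offer rapid ROI. They should focus on secure web gateway (SWG) and zero trust network access (ZTNA) deals with an eye to expanding into cloud access security broker (CASB) and firewall as a service (FWaaS) offerings as customers gain experience.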

#5 Double Down on Your Partner Ecosystem

The IT services market, particularly in Asia Pacific, is poised for significant growth. Factors including the imperative to cut IT operational costs, the growing complexity of cloud migrations and transformations, change management for Generative AI capabilities, and rising security and data governance needs will drive increased spending on IT services.

Tech services providers – consultants, SIs, managed services providers, and VARs – will help drive organisations’ tech spend and strategy. This is a good time to review partners, evaluating whether they can take the business forward, or whether there is a need to expand or change the partner mix.

Partner reviews should start with an evaluation of processes and incentives to ensure they foster desired behaviour from customers and partners. Tech vendors should develop a 21st century partner program to improve chances of success.

2023 has been an eventful year. In May, the WHO announced that the pandemic was no longer a global public health emergency. However, other influencers in 2023 will continue to impact the market, well into 2024 and beyond.

Global Conflicts. The Russian invasion of Ukraine persisted; the Israeli-Palestinian conflict escalated into war; African nations continued to see armed conflicts and political crises; there has been significant population displacement.

Banking Crisis. American regional banks collapsed, with the failures of Silicon Valley Bank and First Republic Bank ranking as the third- and second-largest in US history respectively; Credit Suisse was acquired by UBS in Switzerland.

Climate Emergency. The UN’s synthesis report found that there’s still a chance to limit global temperature increases to 1.5°C; Loss and Damage conversations continued without a significant impact.

Power of AI. The interest in generative AI models heated up; tech vendors incorporated foundational models in their enterprise offerings – Microsoft Copilot was launched; awareness of AI risks strengthened calls for Ethical/Responsible AI.

Ecosystm analysts Achim Granzen, Darian Bird, Peter Carr, Sash Mukherjee and Tim Sheedy present the top 5 tech market forces that will impact organisations in 2024.

#1 State-sponsored Attacks Will Alter the Nature Of Security Threats

It is becoming clearer that the post-Cold-War era is over, and we are transitioning to a multi-polar world. In this new age, malevolent governments will become increasingly emboldened to carry out cyber and physical attacks without the concern of sanction.

Unlike most malicious actors driven by profit today, state adversaries will be motivated to maximise disruption.

Rather than encrypting valuable data with ransomware, wiper malware will be deployed. State-sponsored attacks against critical infrastructure, such as transportation, energy, and undersea cables, will be designed to inflict irreversible damage. The recent 23andMe breach is an example of how ethnically directed attacks could be designed to sow fear and distrust. Additionally, even the threat of spyware and phishing will cause some activists, journalists, and politicians to self-censor.

#2 AI Legislation Breaches Will Occur, But Will Go Unpunished

With US President Biden’s recently published “Executive Order on Safe, Secure and Trustworthy AI” and the European Union’s “AI Act” set for adoption by the European Parliament in mid-2024, codified and enforceable AI legislation is on the verge of becoming reality. However, oversight structures with powers to enforce the rules are currently not in place for either initiative and will take time to build out.

In 2024, the first instances of AI legislation violations will surface – potentially revealed by whistleblowers or significant public AI failures – but no legal action will be taken yet.

#3 AI Will Increase Net-New Carbon Emissions

In an age focused on reducing carbon and greenhouse gas emissions, AI is contributing to the opposite. Organisations often fail to track these emissions under the broader “Scope 3” category. Researchers at the University of Massachusetts, Amherst, found that training a single AI model can emit over 283 tonnes of carbon dioxide, equal to the emissions from 62.6 gasoline-powered vehicles in a year.
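As a rough sanity check on the comparison above, the two quoted figures can be related with simple arithmetic. The sketch below assumes the emissions figure is in tonnes of CO2 and that the vehicle equivalence is a straight division; the constant and function names are illustrative and do not come from the cited study.

```python
# Figures as quoted in the text (assumption: the emissions figure is in tonnes of CO2).
TRAINING_EMISSIONS_TONNES = 283.0
EQUIVALENT_VEHICLE_YEARS = 62.6

# Implied annual emissions of one gasoline-powered vehicle.
per_vehicle_tonnes = TRAINING_EMISSIONS_TONNES / EQUIVALENT_VEHICLE_YEARS
print(f"Implied emissions per vehicle per year: {per_vehicle_tonnes:.2f} tonnes")


def vehicle_years(emissions_tonnes, per_vehicle=per_vehicle_tonnes):
    """Convert an emissions figure in tonnes into equivalent vehicle-years."""
    return emissions_tonnes / per_vehicle
```

The same helper could be used to express an organisation's own estimated AI training or inference emissions in vehicle-years, provided the underlying tonnage estimate is available.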

Organisations rely on cloud providers for carbon emission reduction (Amazon targets net-zero by 2040, and Microsoft and Google aim for 2030, with the trajectory influencing global climate change); yet transparency on AI greenhouse gas emissions is limited. Diverse routes to net-zero will determine the level of greenhouse gas emissions.

Some argue that AI can help in better mapping a path to net-zero, but there is concern about whether the damage caused in the process will outweigh the benefits.

#4 ESG Will Transform into GSE to Become the Future of GRC

Previously viewed as a standalone concept, ESG will be increasingly recognised as integral to Governance, Risk, and Compliance (GRC) practices. The ‘E’ in ESG, representing environmental concerns, is becoming synonymous with compliance due to growing environmental regulations. The ‘S’, or social aspect, is merging with risk management, addressing contemporary issues such as ethical supply chains, workplace equity, and modern slavery, which traditional GRC models often overlook. Governance continues to be a crucial component.

The key to organisational adoption and transformation will be understanding that ESG is not an isolated function but is intricately linked with existing GRC capabilities.

This will present opportunities for GRC and Risk Management providers to adapt their current solutions, already deployed within organisations, to enhance ESG effectiveness. This strategy promises mutual benefits, improving compliance and risk management while simultaneously advancing ESG initiatives.

#5 Productivity Will Dominate Workforce Conversations

The skills discussions have shifted significantly over 2023. At the start of the year, HR leaders were still dealing with the ‘productivity conundrum’ – balancing employee flexibility and productivity in a hybrid work setting. There were also concerns about skills shortage, particularly in IT, as organisations prioritised tech-driven transformation and innovation.

Now, the focus is on assessing the pros and cons (mainly ROI) of providing employees with advanced productivity tools. For example, early studies on Microsoft Copilot showed that 70% of users experienced increased productivity. Discussions, including Narayana Murthy’s remarks on 70-hour work weeks, have re-ignited conversations about employee well-being and the impact of technology in enabling employees to achieve more in less time.

Against the backdrop of skills shortages and the need for better employee experience to retain talent, organisations are increasingly adopting/upgrading their productivity tools – starting with their Sales & Marketing functions.

It’s been barely one year since we entered the Generative AI Age. On November 30, 2022, OpenAI launched ChatGPT, with no fanfare or promotion. Since then, Generative AI has become arguably the most talked-about tech topic, both in terms of opportunities it may bring and risks that it may carry.

The landslide success of ChatGPT and other Generative AI applications with consumers and businesses has put a renewed and strengthened focus on the potential risks associated with the technology – and how best to regulate and manage these. Government bodies and agencies have created voluntary guidelines for the use of AI for a number of years now (Singapore’s “Model Artificial Intelligence Governance Framework”, for example, was launched in 2019).

There is no active legislation on the development and use of AI yet. Crucially, however, a number of such initiatives are currently on their way through legislative processes globally.

EU’s Landmark AI Act: A Step Towards Global AI Regulation

The European Union’s “Artificial Intelligence Act” is a leading example. The European Commission (EC) started examining AI legislation in 2020 with a focus on

- Protecting consumers

- Safeguarding fundamental rights, and

- Avoiding unlawful discrimination or bias

The EC published an initial legislative proposal in 2021, and the European Parliament adopted a revised version as their official position on AI in June 2023, moving the legislation process to its final phase.

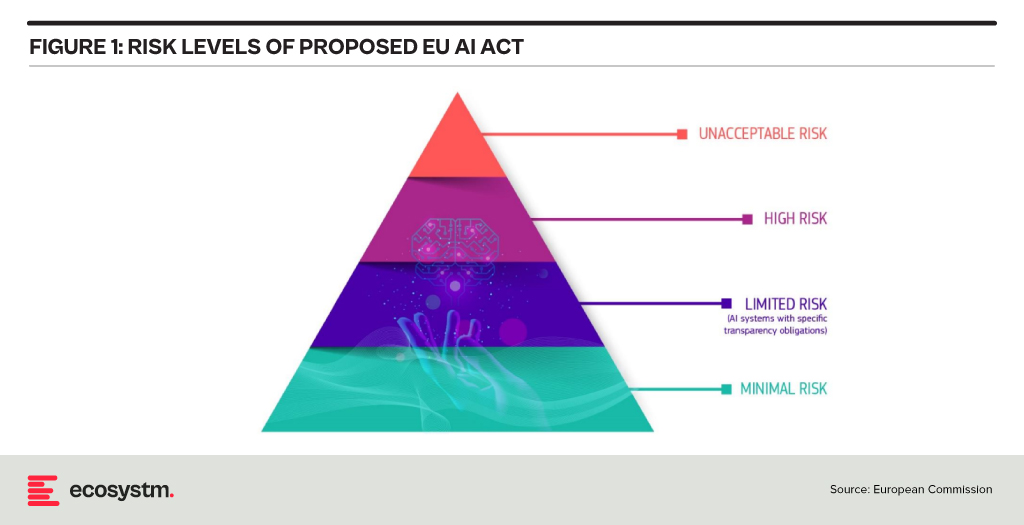

This proposed EU AI Act takes a risk management approach to regulating AI. Organisations looking to employ AI must take note: an internal risk management approach to deploying AI would essentially be mandated by the Act. It is likely that other legislative initiatives will follow a similar approach, making the AI Act a potential role model for global legislation (following the trail blazed by the General Data Protection Regulation). The “G7 Hiroshima AI Process”, established at the G7 summit in Japan in May 2023, is a key example of international discussion and collaboration on the topic (with a focus on Generative AI).

Risk Classification and Regulations in the EU AI Act

At the heart of the AI Act is a system to assess the risk level of AI technology, classify the technology (or its use case), and prescribe appropriate regulations to each risk class.

For each of its four risk levels (unacceptable, high, limited, and minimal risk), the AI Act proposes a set of rules and regulations. Evidently, the regulatory focus is on High-Risk AI systems.
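For organisations starting an internal inventory, a risk-tiered classification of this kind might be sketched as follows. This is a minimal illustration, not the Act's legal test: the treatment summaries are heavily simplified, and the `UseCase` type, the `triage` helper, and the example classifications are assumptions made for the sketch.

```python
from dataclasses import dataclass
from enum import Enum


class RiskLevel(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Simplified, illustrative summaries of the regulatory treatment per tier.
TREATMENT = {
    RiskLevel.UNACCEPTABLE: "prohibited",
    RiskLevel.HIGH: "strict obligations (risk management, documentation, oversight)",
    RiskLevel.LIMITED: "transparency obligations",
    RiskLevel.MINIMAL: "no mandatory obligations",
}


@dataclass
class UseCase:
    name: str
    risk: RiskLevel  # assigned by the organisation's own assessment (hypothetical)


def triage(use_cases):
    """Group use case names by risk level, highest risk tier first."""
    grouped = {level: [] for level in RiskLevel}
    for uc in use_cases:
        grouped[uc.risk].append(uc.name)
    return grouped


# Hypothetical inventory; the tier assigned to each entry is an assumption.
inventory = [
    UseCase("CV screening for recruitment", RiskLevel.HIGH),
    UseCase("Customer service chatbot", RiskLevel.LIMITED),
    UseCase("Email spam filter", RiskLevel.MINIMAL),
]

for level, names in triage(inventory).items():
    print(f"{level.value:>12}: {TREATMENT[level]} -> {names}")
```

Grouping by tier first keeps attention on the high-risk entries, which is where the Act concentrates its requirements.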

Contrasting Approaches: EU AI Act vs. UK’s Pro-Innovation Regulatory Approach

The AI Act has received its share of criticism, and somewhat different approaches are being considered, notably in the UK. One set of criticism revolves around the lack of clarity and vagueness of concepts (particularly around person-related data and systems). Another set of criticism revolves around the strong focus on the protection of rights and individuals and highlights the potential negative economic impact for EU organisations looking to leverage AI, and for EU tech companies developing AI systems.

A white paper published by the UK government in March 2023, perhaps tellingly named “A pro-innovation approach to AI regulation”, emphasises a “pragmatic, proportionate regulatory approach … to provide a clear, pro-innovation regulatory environment”. The paper talks about an approach aiming to balance the protection of individuals with economic advancement for the UK on its way to becoming an “AI superpower”.

Further aspects of the EU AI Act are currently being critically discussed. For example, the current text exempts all open-source AI components not part of a medium or higher risk system from regulation but lacks definition and considerations for proliferation.

Adopting AI Risk Management in Organisations: The Singapore Approach

Regardless of how exactly AI regulations will turn out around the world, organisations must start today to adopt AI risk management practices. There is an added complexity: while the EU AI Act does clearly identify high-risk AI systems and example use cases, the realisation of regulatory practices must be tackled with an industry-focused approach.

The approach taken by the Monetary Authority of Singapore (MAS) is a primary example of an industry-focused approach to AI risk management. The Veritas Consortium, led by MAS, is a public-private-tech partnership consortium aiming to guide the financial services sector on the responsible use of AI. As there is no AI legislation in Singapore to date, the consortium currently builds on Singapore’s aforementioned “Model Artificial Intelligence Governance Framework”. Additional initiatives are already underway to focus specifically on Generative AI for financial services, and to build a globally aligned framework.

To Comply with Upcoming AI Regulations, Risk Management is the Path Forward

As AI regulation initiatives move from voluntary recommendation to legislation globally, a risk management approach is at the core of all of them. Adding risk management capabilities for AI is the path forward for organisations looking to deploy AI-enhanced solutions and applications. As that task can be daunting, an industry consortium approach can help navigate challenges and align on implementation and realisation strategies for AI risk management across the industry. Until AI legislation is in place, such industry consortia can chart the way for their industry – organisations should seek to participate now to gain a head start with AI.