Asia is undergoing a digital renaissance. Central to this transformation is the rise of digital natives: companies born in the digital era, built on cloud infrastructure, powered by data, and guided by customer-centric agility. Unlike traditional businesses retrofitting technology onto old models, Asia’s digital natives have grown up with mobile-first architecture, software-as-a-service models, and a mindset of continuous iteration. They’re not merely disrupting — they are reshaping economies, industries, and even governance.

But we are seeing a new wave of change. The rise of Agentic AI – autonomous, multi-agent systems that handle workflows, decision-making, and collaboration with minimal human input – is set to redefine industries yet again. For digital natives, embracing Agentic AI is no longer optional. Those who adopt it will unlock unprecedented automation, speed, and scale. Those who don’t risk being leapfrogged by competitors operating faster, smarter, and more efficiently.

The path forward demands evolution and collaboration. Digital natives must upgrade their capabilities, while large tech vendors must shift from selling solutions to co-creating them with these agile players. Together, they can accelerate time-to-market and build future-ready ecosystems.

Reimagining Scale and Customer Experience

Asia’s digital natives have already proven that scale and localisation are not mutually exclusive. Grab, for instance, evolved from a ride-hailing app into Southeast Asia’s super-app by integrating services that reflect local habits, from hawker food delivery in Singapore to motorcycle taxis in Indonesia.

Rather than building physical infrastructure, these companies leverage platforms and cloud-native tools to reach millions at low marginal cost. AI has been their growth engine, powering hyper-personalisation and real-time responsiveness. Shopee, for example, dynamically tailors product recommendations, pricing, and even language options to create user experiences that feel “just for me.”

But with Agentic AI, the bar is rising again. The next leap isn’t just personalisation; it’s orchestration. Autonomous systems will manage entire customer journeys, dynamically adjusting pricing, inventory, and support across markets in real time. Digital natives that embrace this will set new standards for customer responsiveness and operational scale.

To navigate this leap, co-creating AI solutions with tech partners will be crucial. Joint innovation will enable digital natives to move faster, build proprietary capabilities, and deliver richer customer experiences at scale.

Reshaping Work, Operations, and Organisational Models

Digital natives have long redefined how work gets done, breaking down silos and blending technology with business agility. But Agentic AI accelerates this transformation. Where AI once automated repetitive tasks, it now autonomously manages workflows across sales, legal, HR, and operations.

Tokopedia, for example, uses AI to triage customer queries, detect fraud, and optimise marketplace operations, freeing employees to focus on strategic work. This shift is reshaping productivity itself: traditional KPIs like team size or hours worked are giving way to outcome-driven metrics like resolution speed and value delivered.

With leaner but more impactful teams, digital natives are well-positioned to thrive. But success hinges on evolving workforce models. Upskilling employees to collaborate with AI is no longer optional. Data and AI literacy must be embedded across roles, transforming even non-technical teams into AI-augmented contributors.

This is where partnerships with big tech providers can unlock value. By co-developing workforce models, training frameworks, and governance structures, digital natives and vendors can accelerate AI adoption while keeping humans at the centre.

Unlocking New Ecosystems Through Data and Collaboration

Asia’s digital natives understand that data is more than an asset: it’s a strategic lever for building defensible moats and unlocking new ecosystems. Razorpay draws on data from the billions in payments it processes to assess SME creditworthiness, while LINE integrates messaging, payments, and content to deliver deeply personalised services.

What’s emerging is a shift from vendor-client dynamics to co-innovation partnerships. Flipkart’s collaboration with tech providers to deploy GenAI across customer support, logistics, and e-commerce personalisation is a prime example. By co-developing proprietary AI solutions – from multi-modal search to real-time inventory forecasting – Flipkart is turning its data ecosystem into a competitive advantage.

Agentic AI will only deepen this trend. As autonomous systems handle tasks once outsourced, firms are repatriating operations, creating resilient, data-governed ecosystems closer to consumers. This shift challenges traditional outsourcing models and aligns with Asia’s growing emphasis on data sovereignty and sovereign AI capabilities.

How Agentic AI Will Challenge Digital Natives

Even for Asia’s most agile players, Agentic AI presents new hurdles:

- Loss of Advantage. Without Agentic AI, digital natives risk falling behind as competitors unlock unprecedented automation and optimisation. What was once their competitive edge – speed and agility – could erode rapidly.

- Adaptation Costs. Transitioning to Agentic AI demands serious investment – in infrastructure, talent, and change management. Scaling autonomous systems is complex and resource-intensive.

- Talent Shift. Agentic AI will redefine traditional roles, enhancing employee contributions but also requiring massive upskilling and workflow redesigns. HR, sales, and operations teams must evolve or risk obsolescence.

Navigating these challenges will require digital natives to evolve not just technologically, but organisationally and culturally – and to seek partnerships that accelerate this transformation.

Digital Natives: From Disruptors to Co-Creators of Asia’s Future

Asia’s digital natives are no longer just disruptors; they are architects of the region’s digital economy. But as Agentic AI, data sovereignty, and ecosystem shifts reshape the landscape, they must evolve.

The future belongs to those who co-create. By partnering with large tech vendors, digital natives can accelerate innovation, scale faster, and solve the region’s biggest challenges, from inclusive finance to smart cities and sustainable mobility.

Barely weeks into 2025, the Consumer Electronics Show (CES) showcased a wave of AI-powered innovations – from Nvidia’s latest RTX 50-series graphics chips with AI-powered rendering to Halliday’s augmented reality smart glasses. AI has firmly moved from “fringe” technology to the foundation of industry transformation. According to MIT, 95% of businesses are already using AI in some capacity, and more than half are aiming for full-scale integration by 2026.

But as AI adoption increases, the real challenge isn’t just about developing smarter models – it’s about whether the underlying infrastructure can keep up.

The AI-Driven Cloud: Strategic Growth

Cloud providers are at the heart of the AI revolution, but in 2025, it is not just about raw computing power anymore. It’s about smarter, more strategic expansion.

Microsoft is expanding its AI infrastructure footprint beyond traditional tech hubs, investing USD 300M in South Africa to build AI-ready data centres in an emerging market. Similarly, AWS is doubling down on another emerging market with an investment of USD 8B to develop next-generation cloud infrastructure in Maharashtra, India.

This focus on AI is not limited to the top hyperscalers; Oracle, for instance, is seeing rapid cloud growth, with 15% revenue growth expected in 2026 and 20% in 2027. This growth is driven by deep AI integration and investments in semiconductor technology. Oracle is also a key player in OpenAI and SoftBank’s Stargate AI initiative, showcasing its commitment to AI innovation.

Emerging players and disruptors are also making their mark. For instance, CoreWeave, a former crypto mining company, has pivoted to AI cloud services. It recently secured a USD 12B contract with OpenAI to provide computing power for training and running AI models over the next five years.

The signs are clear – the demand for AI is reshaping the cloud industry faster than anyone expected.

Strategic Investments In Data Centres Powering Growth

Enterprises are increasingly investing in AI-optimised data centres, driven by the need to reduce reliance on traditional data centres, lower latency, achieve cost savings, and gain better control over data.

Reliance Industries is set to build the world’s largest AI data centre in Jamnagar, India, with a 3-gigawatt capacity. This ambitious project aims to accelerate AI adoption by reducing inferencing costs and enabling large-scale AI workloads through its ‘Jio Brain’ platform. Similarly, in the US, a group of banks has committed USD 2B to fund a 100-acre AI data centre in Utah, underscoring the financial sector’s confidence in AI’s future and the increasing demand for high-performance computing infrastructure.

These large-scale investments are part of a broader trend – AI is becoming a key driver of economic and industrial transformation. As AI adoption accelerates, the need for advanced data centres capable of handling vast computational workloads is growing. The enterprise sector’s support for AI infrastructure highlights AI’s pivotal role in shaping digital economies and driving long-term growth.

AI Hardware Reimagined: Beyond the GPU

While cloud providers are racing to scale up, semiconductor companies are rethinking AI hardware from the ground up – and they are adapting fast.

Nvidia is no longer just focused on cloud GPUs – it is now working directly with enterprises to deploy H200-powered private AI clusters. AMD’s MI300X chips are being integrated into financial services for high-frequency trading and fraud detection, offering a more energy-efficient alternative to traditional AI hardware.

Another major trend is chiplet architectures, where AI models run across multiple smaller chips instead of a single, power-hungry processor. Meta’s latest AI accelerator and Google’s custom TPU designs are early examples of this modular approach, which makes AI computing more scalable and cost-effective.

The AI hardware race is no longer just about bigger chips – it’s about smarter, more efficient designs that optimise performance while keeping energy costs in check.

Collaborative AI: Sharing The Infrastructure Burden

As AI infrastructure investments increase, so do costs. Training and deploying LLMs requires billions in high-performance chips, cloud storage, and data centres. To manage these costs, companies are increasingly teaming up to share infrastructure and expertise.

SoftBank and OpenAI formed a joint venture in Japan to accelerate AI adoption across enterprises. Meanwhile, Telstra and Accenture are partnering on a global scale to pool their AI infrastructure resources, ensuring businesses have access to scalable AI solutions.

In financial services, Palantir and TWG Global have joined forces to deploy AI models for risk assessment, fraud detection, and customer automation – leveraging shared infrastructure to reduce costs and increase efficiency.

And with tech giants spending over USD 315 billion on AI infrastructure this year alone – plus OpenAI’s USD 500 billion commitment – the need for collaboration will only grow.

These joint ventures are more than just cost-sharing arrangements; they are strategic plays to accelerate AI adoption while managing the massive infrastructure bill.

The AI Infrastructure Power Shift

The AI infrastructure race in 2025 isn’t just about bigger investments or faster chips – it’s about reshaping the tech landscape. Leaders aren’t just building AI infrastructure; they’re determining who controls AI’s future. Cloud providers are shaping where and how AI is deployed, while semiconductor companies focus on energy efficiency and sustainability. Joint ventures highlight that AI is too big for any single player.

But rapid growth comes with challenges: Will smaller enterprises be locked out? Can regulations keep pace? As investments concentrate among a few, how will competition and innovation evolve?

One thing is clear: Those who control AI infrastructure today will shape tomorrow’s AI-driven economy.

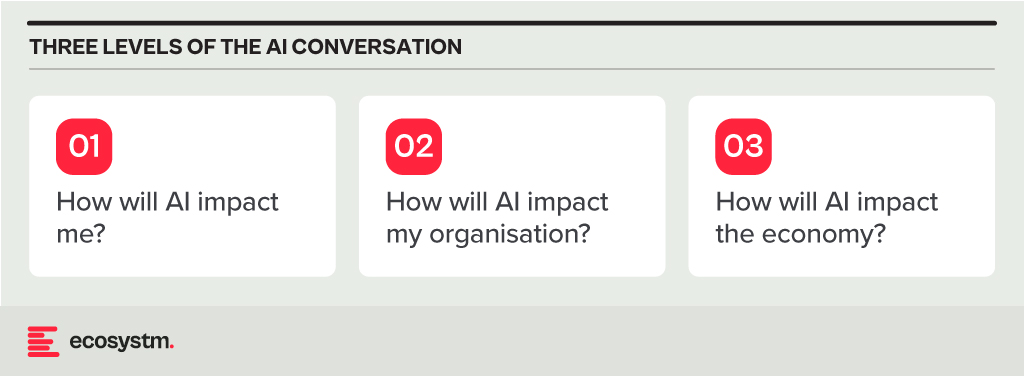

In my earlier post this week, I referred to the need for a grown-up conversation on AI. Here, I will focus on what conversations we need to have and what the solutions to AI disruption might be.

The Impact of AI on Individuals

AI is likely to impact people a lot! You might lose your job to AI. Even if it is not that extreme, it’s likely AI will do a lot of your job. And it might not be the “boring bits” – and sometimes the boring bits make a job manageable! IT helpdesk professionals, for instance, are already reporting that AIOps means they only deal with the difficult challenges. While that might be fun to start with, some personality types find this draining, knowing that every problem that ends up in the queue might take hours or days to resolve.

Your job will change. You will need new skills. Many organisations don’t invest in their employees, so you’ll need to upskill yourself in your own time and at your own cost. Look for employers who put new skill acquisition at the core of their employee offering. They are more likely to succeed in the medium to long term, and they will also be better employers with a happier workforce.

The Impact of AI on Organisations

Again – the impact on organisations will be huge. It will change the shape and size of organisations, and we have already seen this in many industries. The legal sector is a major example, where AI can do much of the job of a paralegal. The same is true of the IT helpdesk example shared earlier: organisations with a mature tech environment will employ higher-skilled professionals in most roles. These sectors need to think about where their next generation of senior employees will come from if junior roles go to AI. Software developers and coders are seeing greater demand for their skills now, even as AI tools increasingly augment their work. However, these skills are at an inflection point, as solutions like TuringBots have already started performing developer roles and are likely to take over the jobs of many developers, and even designers, in the near future.

Some industries will find that AI helps junior roles act more like senior employees, while others will use AI to perform the junior roles. AI will also create new roles (such as “prompt engineers”), but even those jobs will be done by AI in the future (and we are starting to see that).

HR teams, senior leadership, and investors need to work together to understand what the future might look like for their organisations. They need to start planning today for that future. Hint: invest in skills development and acquisition – that’s what will help you to succeed in the future.

The Impact of AI on the Economy

Assuming the individual and organisational impacts play out as described, the economic impacts of widespread AI adoption will be significant, comparable to the “Great Depression”. If organisations lay off 30% of their employees, that means 30% of the economy is impacted, potentially leading to the drying up of some government revenue and an increase in government spending on welfare etc. – basically leading to major societal disruption.

The “AI won’t displace workers” narrative strikes me as the technological equivalent of climate change denial. Just like ignoring environmental warnings, dismissing the potential for AI to significantly impact the workforce is a recipe for disaster. Let’s not fall into the same trap and be an “AI denier”.

What is the Solution?

The solutions revolve around two ideas, and these need to be adopted at an industry level and driven by governments, unions, and businesses:

- Pay a living salary (for all citizens). Some countries already do this, with the Nordic nations leading the charge. And it is no surprise that some of these countries have had the most consistent long-term economic growth. The challenge today is that many governments cannot afford this – and it will become even less affordable as unemployment grows. The solution? Changing tax structures, taxing organisational earnings in-country (to stop companies recognising local earnings in low-tax locations), and taxing wealth (not incomes). Paying better salaries to essential workers who will not be replaced by AI (nurses, police, teachers etc.) will also help keep economies afloat. Easier said than done, of course!

- Move to a shorter work week (but pay full salaries). It is in the economic interest of every organisation that people stay gainfully employed. We have already discussed the ripple effect of job cuts. But if employees are given more flexibility and work 3-day weeks, this not only spreads the work across more workers but also gives those workers more time to spend money, supporting continued economic growth. Can every company do this? Probably not. But many can, and they might have to. The concept of a 5-day work week isn’t that old (less than 100 years, in fact: a 40-hour work week was only legislated in the US in the 1930s, and many companies had working days as short as 6 hours even in the 1950s). Just because we have worked this way for 80 years doesn’t mean that we will always have to. There is already a move towards 4-day work weeks. Tech.co surveyed over 1,000 US business leaders and found that 29% of companies with 4-day workweeks use AI extensively. In contrast, only 8% of organisations with a 5-day workweek use AI to the same degree.

AI Changes Everything

We are only at the beginning of the AI era. We have had a glimpse into the future, and it is both frightening and exciting. The opportunities for organisations to benefit from AI are already significant and will grow further as the technology improves and businesses learn to adopt AI in areas where it can make an impact. But there will be consequences to this adoption. We already know what many of those consequences will be, so let’s start having those grown-up conversations today.

The technology market in Thailand continues to evolve at an unprecedented pace, creating both exciting opportunities and significant challenges for tech leaders in the country. Real-world AI applications and cloud expansion define the future of IT strategies in 2024, as organisations push digital transformation forward. Understanding these trends is crucial for navigating today’s market complexities and achieving exponential growth. Here are the opportunities in the Thailand technology landscape and insights on how to address them effectively.

Tech Modernisation: Breaking Free from Vendor Lock-in

Data centre consolidation and infrastructure modernisation remain top priorities for organisations in Thailand. These processes catalyse ‘de-requisitioning’: removing outdated or unnecessary technology from an organisation’s infrastructure. But vendor lock-in poses challenges: it stifles organisational flexibility, hinders innovation, and exposes organisations to business disruption risks.

44% of organisations in Thailand are focused on consolidating data centres and modernising tech stacks to mitigate vendor lock-ins and enhance operational efficiency.

Modernising infrastructure reduces reliance on single vendors and improves scalability and resilience. Despite the widespread adoption of hybrid and multi-cloud environments, effectively managing these systems remains challenging and requires additional strategic investments.

Over-reliance on a single provider can expose organisations to new risks. This is why CIOs in Thailand are taking decisive steps to combat technology vendor lock-in. They are centralising and modernising their data centres and enhancing cross-platform tools to reduce vendor dependency.

This approach is key to their long-term growth and innovation, allowing them to remain at the forefront of the digital transformation landscape, ready to leverage emerging technologies and adapt to expanding business challenges.

The Hybrid Cloud Labyrinth: Managing Complexity for Success

Nearly 60% of organisations in Thailand have embraced hybrid and multi-cloud environments, but the challenges of managing the complexity are often underestimated.

Hybrid strategies offer numerous benefits, such as increased flexibility, optimised performance, and enhanced disaster recovery capabilities. However, managing different cloud providers, each with its own interface and operational management tools, can be challenging.

The challenges of managing a hybrid IT environment are indeed multifaceted. Integration requires harmonising various technology services to work together seamlessly, which can be complex due to differing architectures and protocols. Security is another primary concern, as managing security across on-premises and multiple cloud providers necessitates consistent policies and vigilant monitoring to prevent breaches and ensure compliance. Additionally, efficiently utilising resources across hybrid clouds involves sophisticated monitoring and automation tools to optimise performance and cost-effectiveness. These challenges are real and pressing, and they demand attention and action.

Alarmingly, only 1% of organisations in Thailand plan to increase their investments in hybrid cloud management in 2024.

Organisations can ensure seamless integration, consistent security readiness, and efficient resource utilisation across diverse cloud platforms by investing in robust tools and practices for effective hybrid cloud management. This mitigates operational risks and security vulnerabilities and leads to cost savings due to well-managed cloud environments.

It’s crucial for CIOs in Thailand to urgently prioritise investing in new comprehensive management solutions and developing the necessary skills within their IT teams. This involves training staff on the latest hybrid cloud management technologies and best practices and adopting advanced tools that provide visibility and control over multi-cloud operations. Cracking the hybrid/multi-cloud code empowers CIOs to not only navigate these environments, but also unlock the potential of advanced technologies like AI, ultimately driving superior IT services and expanded business growth. The urgency of this task cannot be overstated, and the sooner you act, the better prepared your organisation will be for the future.

The Future of Work: AI Adoption for Enhanced Productivity

AI is a powerful tool for improving employee productivity and transforming internal operations.

However, only 12% of Thailand’s organisations invest in AI to enhance the employee experience.

This represents a missed opportunity for organisations to utilise AI’s potential to streamline processes, automate repetitive tasks, and provide personalised support to employees.

AI can significantly enhance operational efficiency by automating routine tasks, enabling staff to focus on strategic initiatives. For instance, AI-driven analytics platforms can process vast amounts of data in real time, providing actionable insights that help businesses make informed decisions quickly. AI frees employees to focus on higher-level tasks like developing innovative solutions and strategies. This empowers them to take on more strategic roles, fostering personal growth and career advancement.

The early adopters of AI in Thailand are already reaping the benefits, gaining a significant competitive edge by enhancing employee productivity and satisfaction.

In Thailand, AI adoption is gaining momentum within tech teams – 44% are exploring its potential for various use cases.

However, AI’s potential extends far beyond tech teams. Generative AI, for example, can create content such as text, images, and code from input data, and these capabilities can support content creation, process automation, and product design across industries. The success of these early adopters should inspire other organisations in Thailand to consider AI adoption as a means to stay ahead in the market.

The enthusiasm for AI has yet to extend beyond tech teams, with only 19% of business units considering its adoption.

This difference highlights an opportunity for CIOs in the country to play a crucial role in advocating for broader AI adoption across the organisation. By demonstrating the tangible benefits, such as increased efficiency, reduced costs, and enhanced innovation, CIOs can drive more widespread acceptance and use. Encouraging cross-departmental collaboration and training on AI applications can further support its integration across business operations. CIO leadership is crucial for successful AI adoption.

The Importance of a Collaborative Ecosystem

Together, we can navigate the intricacies of advanced technologies and foster innovation in organisations across Thailand. These market trends should guide you in establishing a resilient and adaptable IT infrastructure that facilitates long-term growth and innovation. Emphasising modernisation and the strategic use of AI will enhance operational efficiency and position your organisation to harness emerging technologies effectively, all while being part of a supportive and collaborative community.

Stay tuned for more Ecosystm insights and guidance on navigating the Thailand technology landscape, ensuring your organisation remains at the forefront of digital transformation.

The White House has directed federal agencies to conduct risk assessments on AI tools and appoint officers, including Chief Artificial Intelligence Officers (CAIOs), for oversight. This directive, led by the Office of Management and Budget (OMB), aims to modernise government AI adoption and promote responsible use. Agencies must integrate AI oversight into their core functions, ensuring safety, security, and ethical use. CAIOs will be tasked with assessing AI’s impact on civil rights and market competition. Agencies have until December 1, 2024, to address non-compliant AI uses, emphasising swift implementation.

How will this impact global AI adoption? Ecosystm analysts share their views.


The Larger Impact: Setting a Global Benchmark

This sets a potential global benchmark for AI governance, with the U.S. leading the way in responsible AI use, inspiring other nations to follow suit. The emphasis on transparency and accountability could boost public trust in AI applications worldwide.

The appointment of CAIOs across U.S. federal agencies marks a significant shift towards ethical AI development and application. Through mandated risk management practices, such as independent evaluations and real-world testing, the government recognises AI’s profound impact on rights, safety, and societal norms.

This isn’t merely a regulatory action; it’s a foundational shift towards embedding ethical and responsible AI at the heart of government operations. The balance struck between fostering innovation and ensuring public safety and rights protection is particularly noteworthy.

This initiative reflects a deep understanding of AI’s dual-edged nature – the potential to significantly benefit society, countered by its risks.

The Larger Impact: Blueprint for Risk Management

In what is likely a world first, AI brings together technology, legal, and policy leaders in a concerted effort to put guardrails around a new technology before a major disaster materialises. These efforts range from technology firms providing a form of legal assurance for the use of their products (for example, Microsoft’s Customer Copyright Commitment) to parliaments ratifying AI laws (such as the EU AI Act) to the current directive installing AI accountability in US federal agencies, all within just the past few months.

It is universally accepted that AI needs risk management to be responsible and acceptable – installing an accountable C-suite role is another major step of AI risk mitigation.

This is an interesting move for three reasons:

- The balance of innovation versus governance and risk management.

- Accountability mandates for each agency’s use of AI in a public and transparent manner.

- Transparency mandates regarding AI use cases and technologies, including those that may impact safety or rights.

Impact on the Private Sector: Greater Accountability

AI governance is one of the rare areas where government action is moving faster than the private sector. While the immediate pressure is now on US federal agencies (and there are 438 of them) to identify and appoint CAIOs, the announcement sends a clear signal to the private sector.

Following hot on the heels of recent AI legislation, it puts AI governance straight into the boardroom. The air is getting very thin for enterprises still in denial that AI governance has become a matter of strategic importance. And unlike with the CFC ban in the Eighties (the Montreal Protocol likely set the record for concerted global action), this time the technology providers are fully on board.

There’s no excuse for delaying the acceleration of AI governance and establishing accountability for AI within organisations.

Impact on Tech Providers: More Engagement Opportunities

Technology vendors are poised to benefit from the medium- to long-term acceleration of AI investment, especially those based in the U.S., given government agencies’ preference for local sourcing.

In the short term, our advice to technology vendors and service partners is to actively engage with CAIOs in client agencies to identify existing AI usage in their tools and platforms, as well as algorithms implemented by consultants and service partners.

Once AI guardrails are established within agencies, tech providers and service partners can expedite investments by determining which of their platforms, tools, or capabilities comply with specific guardrails and which do not.

Impact on SE Asia: Promoting a Digital Innovation Hub

By 2030, Southeast Asia is poised to emerge as the world’s fourth-largest economy – much of that growth will be propelled by the adoption of AI and other emerging technologies.

The projected economic growth presents both challenges and opportunities, emphasising the urgency for regional nations to enhance their AI governance frameworks and stay competitive with international standards. This initiative highlights the critical role of AI integration for private sector businesses in Southeast Asia, urging organisations to proactively address AI’s regulatory and ethical complexities. Furthermore, it has the potential to stimulate cross-border collaborations in AI governance and innovation, bridging the U.S., Southeast Asian nations, and the private sector.

It underscores the global interconnectedness of AI policy and its impact on regional economies and business practices.

By leading with a strategic approach to AI, the U.S. sets an example for Southeast Asia and the global business community to reevaluate their AI strategies, fostering a more unified and responsible global AI ecosystem.

The Risks

U.S. government agencies face the challenge of sourcing experts in technology, legal frameworks, risk management, privacy regulations, civil rights, and security, while also identifying ongoing AI initiatives. Establishing a unified definition of AI and cataloguing processes involving ML, algorithms, or GenAI is essential, given AI’s integral role in organisational processes over the past two decades.

However, there’s a risk that focusing on AI governance may hinder adoption.

The CAIO role should prioritise establishing AI guardrails to expedite compliant initiatives while flagging those that need oversight. While these guardrails will facilitate “safe AI” investments, the documentation process could delay progress.

The initiative also reflects a 20th-century mindset applied to a 21st-century dilemma. Hiring leaders and forming teams is a traditional response. Today, organisations can boost productivity by treating AI and automation as the first solutions to consider. Investing more time upfront to discover existing initiatives, set guardrails, and implement AI decision-making processes could significantly improve CAIO effectiveness from the outset.

"AI Guardrails" are often used not only to keep AI programs on track but also to accelerate AI investments. Projects and programs that fall within the guardrails should be easy to approve, govern, and manage, whereas those outside the guardrails require further review by a governance team or approval body. The concept of guardrails is familiar to many tech businesses, and guardrails are often applied in areas such as cybersecurity, digital initiatives, data analytics, governance, and management.
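In practice, the approve-or-escalate logic described above can be treated as a set of named checks. The sketch below assumes that each guardrail can be expressed as a yes/no test on a project record. The `AIProject` fields, guardrail names, and `triage` function are hypothetical illustrations. They are not an Ecosystm or agency framework.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple

# Hypothetical project record; the fields are illustrative, not a standard schema.
@dataclass
class AIProject:
    name: str
    uses_personal_data: bool
    privacy_review_done: bool
    bias_analysis_done: bool
    oversight_owner: Optional[str] = None  # person accountable for human oversight

# A guardrail is a (name, check) pair; check returns True when the project stays inside it.
Guardrail = Tuple[str, Callable[[AIProject], bool]]

GUARDRAILS: List[Guardrail] = [
    ("privacy_compliance", lambda p: not p.uses_personal_data or p.privacy_review_done),
    ("bias_analysis", lambda p: p.bias_analysis_done),
    ("oversight_accountability", lambda p: p.oversight_owner is not None),
]

def triage(project: AIProject, guardrails: List[Guardrail] = GUARDRAILS) -> Tuple[str, List[str]]:
    """Fast-track projects inside every guardrail; route the rest to governance review."""
    failed = [name for name, check in guardrails if not check(project)]
    return ("fast_track", []) if not failed else ("governance_review", failed)

chatbot = AIProject("support-chatbot", uses_personal_data=True,
                    privacy_review_done=True, bias_analysis_done=True,
                    oversight_owner="CX lead")
scoring = AIProject("credit-scoring", uses_personal_data=True,
                    privacy_review_done=False, bias_analysis_done=True)

print(triage(chatbot))  # ('fast_track', [])
print(triage(scoring))  # ('governance_review', ['privacy_compliance', 'oversight_accountability'])
```

Storing guardrails as data rather than as hard-coded rules makes it easy to add checks as the list grows. The names of the failed checks also show a governance team where to focus its review.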

While guidance on implementing guardrails is common, organisations often leave the task of defining their specifics, including their components and functionalities, to their AI and data teams. To assist with this, Ecosystm surveyed some of the leading AI users among our customers for their insights on which guardrails add value.

Data Security, Governance, and Bias

- Data Assurance. Has the organisation implemented robust data collection and processing procedures to ensure data accuracy, completeness, and relevance for the purpose of the AI model? This includes addressing issues like missing values, inconsistencies, and outliers.

- Bias Analysis. Does the organisation analyse training data for potential biases – demographic, cultural and so on – that could lead to unfair or discriminatory outputs?

- Bias Mitigation. Is the organisation implementing techniques like debiasing algorithms and diverse data augmentation to mitigate bias in model training?

- Data Security. Does the organisation use strong data security measures to protect sensitive information used in training and running AI models?

- Privacy Compliance. Is the AI initiative compliant with relevant data privacy regulations (country and industry-specific as well as international standards) when collecting, storing, and utilising data?
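The sketch below shows one way to begin the bias analysis described above. It assumes that each training record can be reduced to a group attribute and a binary outcome label. The function names and toy data are hypothetical. The check uses the disparate impact ratio with a 0.8 ("four-fifths") threshold, which is a common heuristic. It is not a complete or sufficient test of fairness.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple

# Illustrative only: each training row is reduced to (group, label), where
# label is 1 for a favourable outcome (e.g. "approved") and 0 otherwise.
Row = Tuple[str, int]
Stats = Dict[str, Tuple[float, float]]

def group_stats(rows: Iterable[Row]) -> Stats:
    """Return {group: (share of all rows, favourable-outcome rate)}."""
    counts: Counter = Counter()
    favourable: Counter = Counter()
    for group, label in rows:
        counts[group] += 1
        favourable[group] += label
    total = sum(counts.values())
    return {g: (counts[g] / total, favourable[g] / counts[g]) for g in counts}

def disparate_impact_ratio(stats: Stats) -> float:
    """Lowest group outcome rate divided by the highest (1.0 means parity)."""
    rates = [rate for _, rate in stats.values()]
    highest = max(rates)
    return 1.0 if highest == 0 else min(rates) / highest

def flags_bias(stats: Stats, threshold: float = 0.8) -> bool:
    """Flag for review when the ratio falls below the threshold (0.8 = four-fifths heuristic)."""
    return disparate_impact_ratio(stats) < threshold

# Toy data: group A has a 60% favourable rate, group B 30%.
rows = [("A", 1)] * 60 + [("A", 0)] * 40 + [("B", 1)] * 30 + [("B", 0)] * 70
stats = group_stats(rows)
print(stats)                          # {'A': (0.5, 0.6), 'B': (0.5, 0.3)}
print(disparate_impact_ratio(stats))  # 0.5
print(flags_bias(stats))              # True
```

A flagged result is a prompt for human review and mitigation, not proof of discrimination. The share column can also reveal groups that are under-represented in the training data.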

Model Development and Explainability

- Explainable AI. Does the organisation use explainable AI (XAI) techniques to understand and explain how its AI models reach their decisions, fostering trust and transparency?

- Fair Algorithms. Are algorithms and models designed with fairness in mind, considering factors like equal opportunity and non-discrimination?

- Rigorous Testing. Does the organisation conduct thorough testing and validation of AI models before deployment, ensuring they perform as intended, are robust to unexpected inputs, and avoid generating harmful outputs?

AI Deployment and Monitoring

- Oversight Accountability. Has the organisation established clear roles and responsibilities for human oversight throughout the AI lifecycle, ensuring human control over critical decisions and mitigation of potential harm?

- Continuous Monitoring. Are there mechanisms to continuously monitor AI systems for performance, bias drift, and unintended consequences, addressing any issues promptly?

- Robust Safety. Can the organisation ensure AI systems are robust and safe, able to handle errors or unexpected situations without causing harm? This includes thorough testing and validation of AI models under diverse conditions before deployment.

- Transparency Disclosure. Is the organisation transparent with stakeholders about its AI use, including the system's limitations, potential risks, and how it reaches its decisions?

Other AI Considerations

- Ethical Guidelines. Has the organisation developed and adhered to ethical principles for AI development and use, considering areas like privacy, fairness, accountability, and transparency?

- Legal Compliance. Has the organisation created mechanisms to stay updated on and compliant with relevant legal and regulatory frameworks governing AI development and deployment?

- Public Engagement. What mechanisms does the organisation have in place to encourage open discussion and engagement with the public about its use of AI, addressing concerns and building trust?

- Social Responsibility. Has the organisation considered the environmental and social impact of AI systems, including energy consumption, ecological footprint, and potential societal consequences?

Implementing these guardrails requires a comprehensive approach that includes policy formulation, technical measures, and ongoing oversight. Setting up this capability may take a little longer, but in the medium to long term it will allow organisations to accelerate AI implementations and build a culture of responsible AI use and deployment.