In my earlier post this week, I referred to the need for a grown-up conversation on AI. Here, I will focus on what conversations we need to have and what the solutions to AI disruption might be.

The Impact of AI on Individuals

AI is likely to change most people’s working lives. You might lose your job to AI. Even if it is not that extreme, it’s likely AI will do a lot of your job. And it might not be the “boring bits” – and sometimes the boring bits make a job manageable! IT helpdesk professionals, for instance, are already reporting that AIOps means they only deal with the difficult challenges. While that might be fun to start with, some personality types find this draining, knowing that every problem that ends up in the queue might take hours or days to resolve.

Your job will change. You will need new skills. Many organisations don’t invest in their employees, so you may need to upskill yourself in your own time and at your own cost. Look for employers who put new skill acquisition at the core of their employee offering. They are more likely to succeed in the medium-to-long term and will also be the better employers with a happier workforce.

The Impact of AI on Organisations

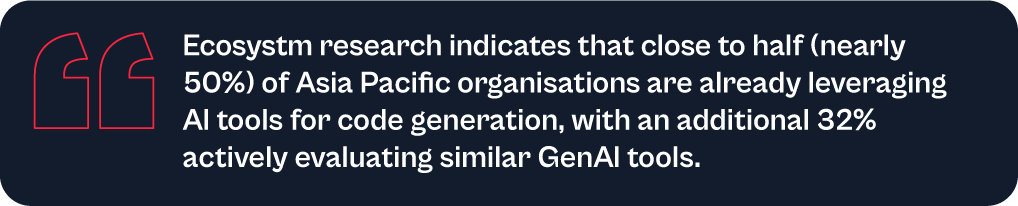

Again – the impact on organisations will be huge. It will change the shape and size of organisations. We have already seen the impact in many industries. The legal sector is a major example, where AI can do much of the job of a paralegal. The same applies to the IT helpdesk example shared earlier: organisations with a mature tech environment will employ higher-skilled professionals in most roles. These sectors need to think about where their next generation of senior employees will come from if junior roles go to AI. Software developers and coders are seeing greater demand for their skills now, even as AI tools increasingly augment their work. However, these skills are at an inflection point, as solutions like TuringBots have already started performing developer roles and are likely to take over the job of many developers and even designers in the near future.

Some industries will find that AI helps junior roles act more like senior employees, while others will use AI to perform the junior roles. AI will also create new roles (such as “prompt engineers”), but even those jobs will be done by AI in the future (and we are starting to see that).

HR teams, senior leadership, and investors need to work together to understand what the future might look like for their organisations. They need to start planning today for that future. Hint: invest in skills development and acquisition – that’s what will help you to succeed in the future.

The Impact of AI on the Economy

Assuming the individual and organisational impacts play out as described, the economic impacts of widespread AI adoption will be significant, on a scale similar to the “Great Depression”. If organisations lay off 30% of their employees, that means 30% of the workforce loses its income and spending power, potentially leading to the drying up of some government revenue and an increase in government spend on welfare etc. – basically leading to major societal disruption.

The “AI won’t displace workers” narrative strikes me as the technological equivalent of climate change denial. Just like ignoring environmental warnings, dismissing the potential for AI to significantly impact the workforce is a recipe for disaster. Let’s not fall into the same trap and become “AI deniers”.

What is the Solution?

The solutions revolve around two ideas, and these need to be adopted at an industry level and driven by governments, unions, and businesses:

- Pay a living salary (for all citizens). Some countries already do this, with the Nordic nations leading the charge. And it is no surprise that some of these countries have had the most consistent long-term economic growth. The challenge today is that many governments cannot afford this – and it will become even less affordable as unemployment grows. The solution? Changing tax structures, taxing organisational earnings in-country (to stop them recognising local earnings in low-tax locations), and taxing wealth (not incomes). Paying better salaries to essential workers who will not be replaced by AI (nurses, police, teachers etc.) will also help keep economies afloat. Easier said than done, of course!

- Move to a shorter work week (but pay full salaries). It is in the economic interest of every organisation that people stay gainfully employed. We have already discussed the ripple effect of job cuts. But if employees are given more flexibility and work 3-day weeks, this not only spreads the work across more workers, but also means that these workers have more time to spend money – ensuring continuing economic growth. Can every company do this? Probably not. But many can, and they might have to. The concept of a 5-day work week isn’t that old (less than 100 years in fact – a 40-hour work week was only legislated in the US in the 1930s, and many companies had as little as 6-hour working days even in the 1950s). Just because we have worked this way for 80 years doesn’t mean that we will always have to. There is already a move towards 4-day work weeks. Tech.co surveyed over 1,000 US business leaders and found that 29% of companies with 4-day workweeks use AI extensively. In contrast, only 8% of organisations with a 5-day workweek use AI to the same degree.

AI Changes Everything

We are only at the beginning of the AI era. We have had a glimpse into the future, and it is both frightening and exciting. The opportunities for organisations to benefit from AI are already significant and will become even more so as the technology improves and businesses learn to better adopt AI in areas where it can make an impact. But there will be consequences to this adoption. We already know what many of those consequences will be, so let’s start having those grown-up conversations today.