Southeast Asia’s banking sector is poised for significant digital transformation. With projected Net Interest Income reaching USD 148 billion by 2024, the market is ripe for continued growth. While traditional banks still hold a dominant position, digital players are making steady inroads. To thrive in this evolving landscape, financial institutions must adapt to rising customer expectations, stringent regulations, and the imperative for resilience. This will require seamless collaboration between technology and business teams.

To uncover how banks in Southeast Asia are navigating this complex landscape and what it takes to succeed, Ecosystm engaged in in-depth conversations with senior banking executives and technology leaders as part of our research initiatives. Here are the highlights of the discussions with leaders across the region.

#1 Achieving Hyper-Personalisation Through AI

As banks strive to deliver highly personalised financial services, AI-driven models are becoming increasingly essential. These models analyse customer behaviour to anticipate needs, predict future behaviour, and offer relevant services at the right time. AI-powered tools like chatbots and virtual assistants further enhance real-time customer support.

Hyper-personalisation, while promising, comes with its challenges – particularly around data privacy and security. To deliver deeply tailored services, banks must collect extensive customer information, which raises the question: how can they ensure this sensitive data remains protected?

AI projects require a delicate balance between innovation and regulatory compliance. Regulations often serve as the right set of guardrails within which banks can innovate. However, banks – especially those with cross-border operations – must establish internal guidelines that consider the regulatory landscape of multiple jurisdictions.

#2 Beyond AI: Other Emerging Technologies

AI isn’t the only emerging technology reshaping Southeast Asian banking. Banks are increasingly adopting technologies like Robotic Process Automation (RPA) and blockchain to boost efficiency and engagement. RPA is automating repetitive tasks, such as data entry and compliance checks, freeing up staff for higher-value work. CIMB in Malaysia reports seeing a 35-50% productivity increase thanks to RPA. Blockchain is being explored for secure, transparent transactions, especially cross-border payments. The Asian Development Bank successfully trialled blockchain for faster, safer bond settlements. While AR and VR are still emerging in banking, they offer potential for enhanced customer engagement. Banks are experimenting with immersive experiences like virtual branch visits and interactive financial education tools.

The convergence of these emerging technologies will drive innovation and meet the rising demand for seamless, secure, and personalised banking services in the digital age. This is particularly true for banks that have the foresight to future-proof their tech foundation as part of their ongoing modernisation efforts. Emerging technologies offer exciting opportunities to enhance customer engagement, but they shouldn’t be used merely as marketing gimmicks. The focus must be on delivering tangible benefits that improve customer outcomes.

#3 Greater Banking-Fintech Collaboration

The digital payments landscape in Southeast Asia is experiencing rapid growth, with a projected 10% increase between 2024 and 2028. Digital wallets and contactless payments are becoming the norm, and platforms like GrabPay, GoPay, and ShopeePay are dominating the market. These platforms not only offer convenience but also enhance financial inclusion by reaching underbanked populations in remote areas.

The rise of digital payments has significantly impacted traditional banks. To remain relevant in this increasingly cashless society, banks are collaborating with fintech companies to integrate digital payment solutions into their services. For instance, Indonesia’s Bank Mandiri collaborated with digital credit services provider Kredivo to provide customers with access to affordable and convenient credit options.

Partnerships between traditional banks and fintechs are essential for staying competitive in the digital age, especially in areas like digital payments, data analytics, and customer experience.

While these collaborations offer opportunities, they also pose challenges. Banks must invest in advanced fraud detection, AI monitoring, and robust authentication to secure digital payments. Once banks adopt a mindset of collaboration with innovators, they can leverage numerous innovations in the cybersecurity space to address these challenges.

#4 Agile Infrastructure for an Agile Business

While the banking industry is considered a pioneer in implementing digital technologies, its approach to cloud has been more cautious. Interest remained high, but balancing security and regulatory concerns with cloud agility slowed the pace. Hybrid multi-cloud environments have accelerated banking cloud adoption.

Leveraging public and private clouds optimises IT costs, offering flexibility and scalability for changing business needs. Hybrid cloud allows resource adjustments for peak demand or cost reductions off-peak. Access to cloud-native services accelerates innovation, enabling rapid application development and improved competitiveness. As the industry adopts GenAI, it requires infrastructure capable of handling vast data, massive computing power, advanced security, and rapid scalability – all strengths of hybrid cloud.

Replicating critical applications and data across multiple locations ensures disaster recovery and business continuity. A multi-cloud strategy also helps avoid vendor lock-in, diversifies cloud providers, and reduces exposure to outages.

Hybrid cloud adoption offers benefits but also presents challenges for banks. Managing the environment is complex, needing coordination across platforms and skilled personnel. Ensuring data security and compliance across on-prem and public cloud infrastructure is demanding, requiring robust measures. Network latency and performance issues can arise, making careful design and optimisation crucial. Integrating on-prem systems with public cloud services is time-consuming and needs investment in tools and expertise.

#5 Cyber Measures to Promote Customer & Stakeholder Trust

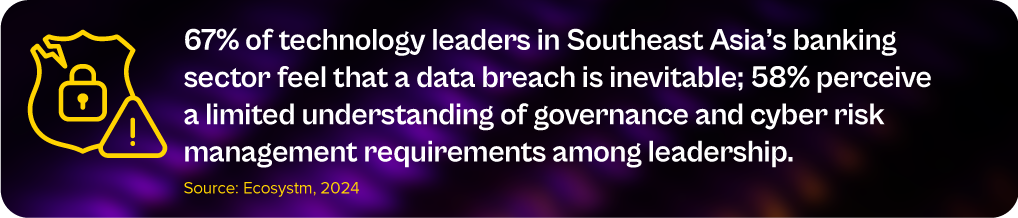

The banking sector is undergoing rapid AI-driven digital transformation, focusing on areas like digital customer experiences, fraud detection, and risk assessment. However, this shift also increases cybersecurity risks, with the majority of banking technology leaders anticipating data breaches and outages as inevitable.

Key challenges include expanding technology use, such as cloud adoption and AI integration, and employee-related vulnerabilities like phishing. Banks in Southeast Asia are investing heavily in modernising infrastructure, software, and cybersecurity.

Banks must update cybersecurity strategies to detect threats early, minimise damage, and prevent lateral movement within networks.

Employee training, clear security policies, and a culture of security consciousness are critical in preventing breaches.

Regulatory compliance remains a significant concern, but banks are encouraged to move beyond compliance checklists and adopt risk-based, intelligence-led strategies. AI will play a key role in automating compliance and enhancing Security Operations Centres (SOCs), allowing for faster threat detection and response. Ultimately, the BFSI sector must prioritise cybersecurity continuously based on risk, rather than solely on regulatory demands.

Breaking Down Barriers: The Role of Collaboration in Banking Transformation

Successful banking transformation hinges on seamless collaboration between technology and business teams. By aligning strategies, fostering open communication, and encouraging cross-functional cooperation, banks can effectively leverage emerging technologies to drive innovation, enhance customer experience, and improve efficiency.

A prime example of the power of collaboration is the success of AI initiatives in addressing specific business challenges.

This user-centric approach ensures that technology addresses real business needs.

By fostering a culture of collaboration, banks can promote continuous learning, idea sharing, and innovation, ultimately driving successful transformation and long-term growth in the competitive digital landscape.